AI_Gen_Novel

基于大语言模型(LLM)和多智能体(Multi-Agent),探究AI写小说能力的边界

Stars: 73

AI_Gen_Novel is a project exploring the limits of AI in writing online fiction. Leveraging large language models and multi-agent technology, the tool aims to automatically generate web novels by compressing long texts, optimizing prompts, and enhancing originality. The tool combines the core idea of RecurrentGPT with language-based iterative computation to create texts of any length. Future directions include enhancing model capabilities, optimizing program architecture, and introducing more prior knowledge for structured storytelling.

README:

近年来,AI在文学创作领域取得了显著进展。从AI微小说大赛到阅文妙笔,再到Midreal AI,这些案例都证明了AI在文学创作上的巨大潜力。作为一名网络文学爱好者,我希望通过大语言模型与多智能体技术,来开发一款能够自动生成网络小说的应用。

网文的创作,可以套用写作的认知过程模型,该模型将写作视为一个目标导向的思考过程,包括非线性的认知活动:计划、转换和审阅。

文献和实践表明,LLM 在转换和审阅上表现较好,而在计划阶段存在缺陷。具体体现为

- 有限的理解和推理能力

- 无法记忆和生成长文本

- 缺乏原创性和多样性

面对这些问题,我的解决方案是

- 利用LLM的能力压缩长文本为几句话组成的记忆

- 优化Prompt,多智能体协作,激发 LLM 的能力,提升其原创性

- 借鉴RecurrentGPT的核心思想,基于语言的循环计算,通过迭代的方式创作任意长度的文本

- 结合网络小说创作的先验知识,对创作流程进行优化

经过一定的探索后,我认为目前的大语言模型还没有足够的能力创作长篇网络小说。

未来可能的发展方向是:

对大模型能力要求

- 更长的上下文

- 更强的理解和推理能力

- 以人为评判标准的网文生成的强化学习训练

程序架构的优化

- 多次尝试,多智能体讨论,提升原创性

- 引入更多先验知识,如结构化的剧情框架

https://modelscope.cn/studios/cjyyxn/AI_Gen_Novel/summary

首先,安装所需的依赖项:

pip install -r requirements.txt项目依赖一个大语言模型。你需要实现LLM.py中的chatLLM函数。

-

直接运行

demo.py,将自动创作小说,并将结果保存在novel_record.md文件中。 -

运行

app.py启动一个基于gradio的应用,通过打开显示的链接,你可以体验到AI小说生成的可视化过程。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI_Gen_Novel

Similar Open Source Tools

AI_Gen_Novel

AI_Gen_Novel is a project exploring the limits of AI in writing online fiction. Leveraging large language models and multi-agent technology, the tool aims to automatically generate web novels by compressing long texts, optimizing prompts, and enhancing originality. The tool combines the core idea of RecurrentGPT with language-based iterative computation to create texts of any length. Future directions include enhancing model capabilities, optimizing program architecture, and introducing more prior knowledge for structured storytelling.

Qing-Digital-Self

Qing-Digital-Self is a project that creates a personal digital twin by fine-tuning a large language model on your chat history. The aim is to replicate your unique style of expression and conversational behavior accurately. The project includes bilingual support and comprehensive tutorials covering data extraction, chat data cleaning and conversion, LlamaFactory fine-tuning process, and testing and usage of the fine-tuned model. It offers a different perspective and assistance compared to similar projects. The project is currently in development with version v0.1.6, and welcomes contributions and issue reports from developers.

ai-self-coding-book

The 'ai-self-coding-book' repository is a guidebook that aims to teach how to create complex applications with commercial value using natural language and AI, rather than simple toy projects. It provides insights on AI programming concepts and practical applications, emphasizing real-world use cases and best practices for development.

arcadia

Arcadia is an all-in-one enterprise-grade LLMOps platform that provides a unified interface for developers and operators to build, debug, deploy, and manage AI agents. It supports various LLMs, embedding models, reranking models, and more. Built on langchaingo (golang) for better performance and maintainability. The platform follows the operator pattern that extends Kubernetes APIs, ensuring secure and efficient operations.

llms-interview-questions

This repository contains a comprehensive collection of 63 must-know Large Language Models (LLMs) interview questions. It covers topics such as the architecture of LLMs, transformer models, attention mechanisms, training processes, encoder-decoder frameworks, differences between LLMs and traditional statistical language models, handling context and long-term dependencies, transformers for parallelization, applications of LLMs, sentiment analysis, language translation, conversation AI, chatbots, and more. The readme provides detailed explanations, code examples, and insights into utilizing LLMs for various tasks.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

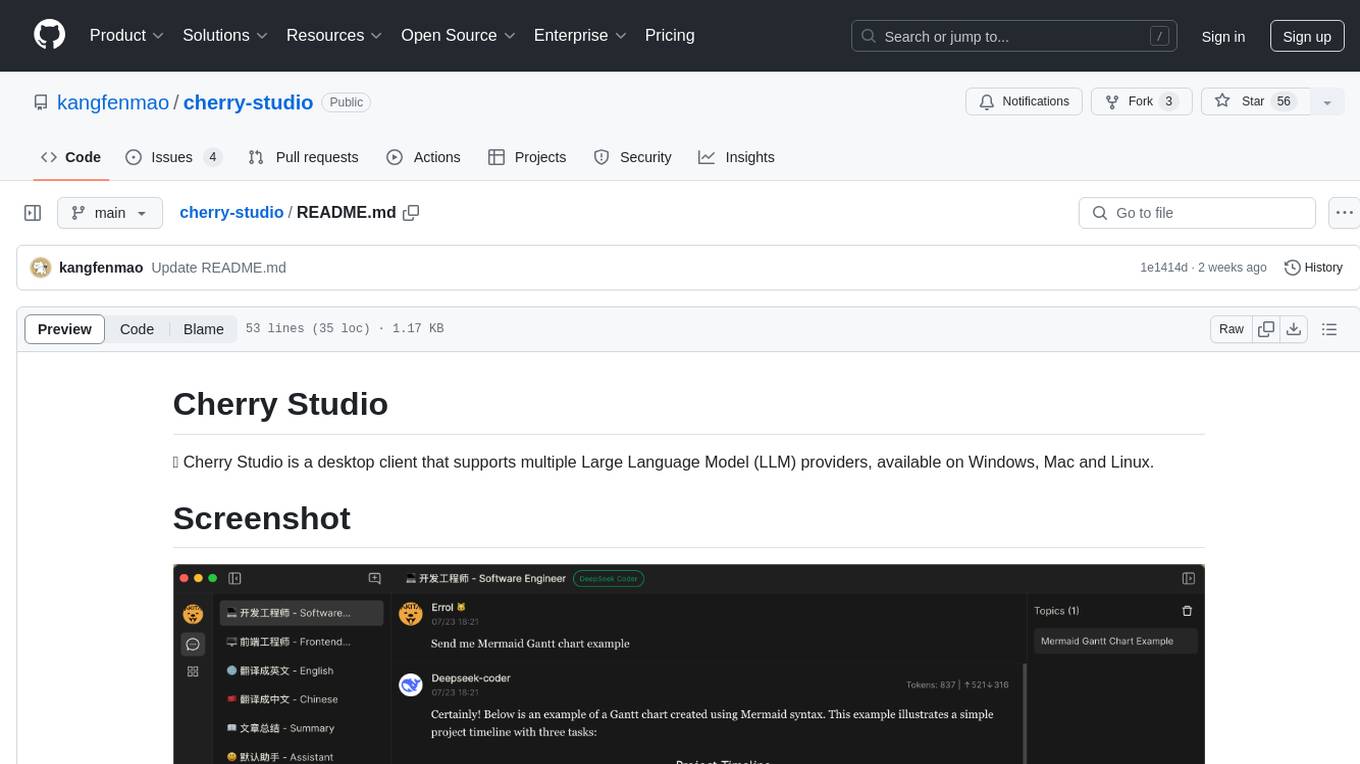

cherry-studio

Cherry Studio is a desktop client that supports multiple Large Language Model (LLM) providers, available on Windows, Mac, and Linux. It allows users to create multiple Assistants and topics, use multiple models to answer questions in the same conversation, and supports drag-and-drop sorting, code highlighting, and Mermaid chart. The tool is designed to enhance productivity and streamline the process of interacting with various language models.

narratrix

NarratrixAI is an AI-powered tabletop roleplaying platform that leverages AI to create dynamic, responsive, and immersive storytelling experiences. It allows users to create their own stories, use it as character chat, or as a full tabletop RPG experience. The platform features a powerful chat system, flexible AI integration, rich character management, powerful storytelling tools, and developer-friendly customization options. Narratrix supports various AI providers through a manifest system and is built with Tauri for native performance across Windows, macOS, and Linux platforms.

memU

MemU is an open-source memory framework designed for AI companions, offering high accuracy, fast retrieval, and cost-effectiveness. It serves as an intelligent 'memory folder' that adapts to various AI companion scenarios. With MemU, users can create AI companions that remember them, learn their preferences, and evolve through interactions. The framework provides advanced retrieval strategies, 24/7 support, and is specialized for AI companions. MemU offers cloud, enterprise, and self-hosting options, with features like memory organization, interconnected knowledge graph, continuous self-improvement, and adaptive forgetting mechanism. It boasts high memory accuracy, fast retrieval, and low cost, making it suitable for building intelligent agents with persistent memory capabilities.

astron-agent

Astron Agent is an enterprise-grade, commercial-friendly Agentic Workflow development platform that integrates AI workflow orchestration, model management, AI and MCP tool integration, RPA automation, and team collaboration features. It supports high-availability deployment, enabling organizations to rapidly build scalable, production-ready intelligent agent applications and establish their AI foundation for the future. The platform is stable, reliable, and business-friendly, with key features such as enterprise-grade high availability, intelligent RPA integration, ready-to-use tool ecosystem, and flexible large model support.

LLM-Agent-Survey

LLM-Agent-Survey is a comprehensive repository that provides a curated list of papers related to Large Language Model (LLM) agents. The repository categorizes papers based on LLM-Profiled Roles and includes high-quality publications from prestigious conferences and journals. It aims to offer a systematic understanding of LLM-based agents, covering topics such as tool use, planning, and feedback learning. The repository also includes unpublished papers with insightful analysis and novelty, marked for future updates. Users can explore a wide range of surveys, tool use cases, planning workflows, and benchmarks related to LLM agents.

LLM

LLM is a repository focused on providing educational courses on artificial intelligence models, specifically focusing on language and image understanding. The courses are taught by Alireza Akhavan Pour and cover topics such as generative AI models and language-image understanding. The repository also includes information on how to contact the team through Telegram channels for course updates and Q&A sessions. Participants are encouraged to add their LLM or VLM course certificates to their LinkedIn profiles and tag Alireza Akhavan Pour for recognition and resume enhancement.

ai

Jetify's AI SDK for Go is a unified interface for interacting with multiple AI providers including OpenAI, Anthropic, and more. It addresses the challenges of fragmented ecosystems, vendor lock-in, poor Go developer experience, and complex multi-modal handling by providing a unified interface, Go-first design, production-ready features, multi-modal support, and extensible architecture. The SDK supports language models, embeddings, image generation, multi-provider support, multi-modal inputs, tool calling, and structured outputs.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

Prompt_Engineering

Prompt Engineering Techniques is a comprehensive repository for learning, building, and sharing prompt engineering techniques, from basic concepts to advanced strategies for leveraging large language models. It provides step-by-step tutorials, practical implementations, and a platform for showcasing innovative prompt engineering techniques. The repository covers fundamental concepts, core techniques, advanced strategies, optimization and refinement, specialized applications, and advanced applications in prompt engineering.

natively-cluely-ai-assistant

Natively is a free, open-source, privacy-first AI assistant designed to help users in real time during meetings, interviews, presentations, and conversations. Unlike traditional AI tools that work after the conversation, Natively operates while the conversation is happening. It runs as an invisible, always-on-top desktop overlay, listens when prompted, observes the screen content, and provides instant, context-aware assistance. The tool is fully transparent, customizable, and grants users complete control over local vs cloud AI, data, and credentials.

For similar tasks

AI_Gen_Novel

AI_Gen_Novel is a project exploring the limits of AI in writing online fiction. Leveraging large language models and multi-agent technology, the tool aims to automatically generate web novels by compressing long texts, optimizing prompts, and enhancing originality. The tool combines the core idea of RecurrentGPT with language-based iterative computation to create texts of any length. Future directions include enhancing model capabilities, optimizing program architecture, and introducing more prior knowledge for structured storytelling.

llm_steer

LLM Steer is a Python module designed to steer Large Language Models (LLMs) towards specific topics or subjects by adding steer vectors to different layers of the model. It enhances the model's capabilities, such as providing correct responses to logical puzzles. The tool should be used in conjunction with the transformers library. Users can add steering vectors to specific layers of the model with coefficients and text, retrieve applied steering vectors, and reset all steering vectors to the initial model. Advanced usage involves changing default parameters, but it may lead to the model outputting gibberish in most cases. The tool is meant for experimentation and can be used to enhance role-play characteristics in LLMs.

Self-Iterative-Agent-System-for-Complex-Problem-Solving

The Self-Iterative Agent System for Complex Problem Solving is a solution developed for the Alibaba Mathematical Competition (AI Challenge). It involves multiple LLMs engaging in multi-round 'self-questioning' to iteratively refine the problem-solving process and select optimal solutions. The system consists of main and evaluation models, with a process that includes detailed problem-solving steps, feedback loops, and iterative improvements. The approach emphasizes communication and reasoning between sub-agents, knowledge extraction, and the importance of Agent-like architectures in complex tasks. While effective, there is room for improvement in model capabilities and error prevention mechanisms.

moonpalace

MoonPalace is a debugging tool for API provided by Moonshot AI. It supports all platforms (Mac, Windows, Linux) and is simple to use by replacing 'base_url' with 'http://localhost:9988'. It captures complete requests, including 'accident scenes' during network errors, and allows quick retrieval and viewing of request information using 'request_id' and 'chatcmpl_id'. It also enables one-click export of BadCase structured reporting data to help improve Kimi model capabilities. MoonPalace is recommended for use as an API 'supplier' during code writing and debugging stages to quickly identify and locate various issues related to API calls and code writing processes, and to export request details for submission to Moonshot AI to improve Kimi model.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.