wanwu

China Unicom's Yuanjing Wanwu Agent Platform is an enterprise-grade, multi-tenant AI agent development platform. It helps users build applications such as intelligent agents, workflows, and RAG, and also supports model management. The platform is released under a developer-friendly license, and we welcome all developers to build on it.

Stars: 1398

Wanwu AI Agent Platform is an enterprise-grade, one-stop, commercially friendly AI agent development platform designed for business scenarios. It gives enterprises a safe, efficient, and compliant AI solution. The platform integrates large language models and business process automation into an AI engineering platform that covers full model life-cycle management, MCP, web search, rapid AI agent development, enterprise knowledge base construction, and complex workflow orchestration. Its modular architecture supports flexible functional expansion and secondary development, lowering the barrier to adopting AI while protecting the security and privacy of enterprise data. It helps enterprises of all sizes accelerate digital transformation, reduce costs, improve efficiency, and innovate.

README:

Wanwu AI Agent Platform is an enterprise-grade, one-stop, commercially friendly AI agent development platform designed for business scenarios. It is committed to providing enterprises with a safe, efficient, and compliant AI solution. Guided by the core philosophy of "technology openness and ecological co-construction", we integrate large language models and business process automation into an AI engineering platform whose functions cover full model life-cycle management, MCP, web search, rapid AI agent development, enterprise knowledge base construction, and complex workflow orchestration. The platform adopts a modular architecture, supports flexible functional expansion and secondary development, and lowers the barrier to adopting AI while protecting the security and privacy of enterprise data. Whether a small or medium-sized enterprise needs to build intelligent applications quickly or a large enterprise needs to transform complex business scenarios, the Wanwu AI Agent Platform provides the technical support to accelerate digital transformation, reduce costs, improve efficiency, and drive business innovation.

🔥 Adopts the permissive Apache 2.0 License, so developers are free to extend the platform and carry out secondary development

✔ Enterprise-level engineering: Provides a complete toolchain from model management to application deployment, solving the "last mile" problem of putting LLM technology into production

✔ Open-source ecosystem: Adopts the permissive Apache 2.0 License, so developers are free to extend and build on the platform

✔ Full-stack technology support: A professional team provides architecture consulting, performance optimization, and full-cycle support for ecosystem partners

✔ Multi-tenant architecture: Provides a multi-tenant account system that meets users' core needs in cost control, data security isolation, elastic business expansion, industry customization, rapid launch, and ecosystem collaboration

✔ XinChuang adaptation: Already adapted to the domestic XinChuang databases TiDB and OceanBase

1. Model Management (Model Hub)

▸ Supports unified access and lifecycle management for hundreds of proprietary/open-source large models (including GPT, Claude, Llama, etc.)

▸ Deeply adapts to OpenAI API standards and Unicom Yuanjing ecological models, realizing seamless switching of heterogeneous models

▸ Provides multi-inference backend support (vLLM, TGI, etc.) and self-hosted solutions to meet the computing power needs of enterprises of different scales

▸ Standardized interfaces: Enable AI models to seamlessly connect to various external tools (such as GitHub, Slack, databases, etc.) without the need to develop adapters for each data source separately

▸ Rich built-in, curated recommendations: Integrates 100+ industry MCP interfaces that users can call quickly and easily

▸ Real-time information acquisition: Provides web search that retrieves the latest information from the Internet in real time. In question-and-answer scenarios, when a user's question requires the latest news, data, or other information, the platform searches and returns accurate results promptly, improving the timeliness and accuracy of answers

▸ Multi-source data integration: Integrates various Internet data sources, including news websites, academic databases, and industry reports. By integrating and analyzing multi-source data, it provides users with more comprehensive and in-depth information. For example, in market research scenarios, relevant data can be obtained from multiple data sources at the same time for comprehensive analysis and evaluation

▸ Intelligent search strategy: Adopts intelligent search algorithms that automatically optimize the search strategy based on the user's question to improve search efficiency and accuracy. Supports keyword search, semantic search, and other search methods to meet the needs of different users. Search results are also sorted and filtered so that the most relevant and valuable information is displayed first

▸ Quickly build complex AI business processes on a low-code drag-and-drop canvas

▸ Built-in nodes for conditional branching, API, large model, knowledge base, code, MCP, and more; supports end-to-end process debugging and performance analysis

▸ Provides end-to-end knowledge management: knowledge base creation → document parsing → vectorization → retrieval → reranking; supports multiple document formats such as pdf/docx/txt/xlsx/csv/pptx, and also supports capturing and importing web resources

▸ Integrates multi-modal retrieval, cascading segmentation, and adaptive segmentation to significantly improve Q&A accuracy

▸ Builds agents on the function calling (Function Calling) paradigm, with support for tool extension, private knowledge base association, and multi-turn dialogue

▸ Supports online debugging

▸ Provides RESTful API, supports deep integration with existing enterprise systems (OA/CRM/ERP, etc.)

▸ Provides fine-grained permission control to ensure stable operation in production environments

| Function | Wanwu | Dify.AI | Fastgpt | Ragflow | Coze open source version |
|---|---|---|---|---|---|
| Model import | ✅ | ✅ | ❌(Built-in models) | ✅ | ❌(Built-in models) |
| RAG engine | ✅ | ✅ | ✅ | ✅ | ✅ |
| MCP | ✅ | ✅ | ✅ | ✅(Need to install tools to use) | ❌ |
| Direct OCR import | ✅ | ❌ | ❌ | ❌ | ❌ |
| Search enhancement | ✅ | ✅(Need to install tools to use) | ✅ | ✅(Need to install tools to use) | ✅ |
| Agent | ✅ | ✅ | ✅ | ✅ | ✅ |
| Workflow | ✅ | ✅ | ✅ | ✅ | ✅ |
| Local deployment | ✅ | ✅ | ✅ | ✅ | ✅ |
| License friendly | ✅ | ❌(Commercially restricted) | ❌(Commercially restricted) | Not fully open source | ✅ |
| Multi-tenant | ✅ | ❌(Commercially restricted) | ❌(Commercially restricted) | ✅ | ✅(Users are not interconnected) |

As of August 1, 2025.

- Intelligent Customer Service: Delivers high-accuracy business consultation and ticket processing based on RAG + Agent

- Knowledge Management: Builds a dedicated enterprise knowledge base with semantic search and intelligent summary generation

- Process Automation: Provides AI-assisted decision-making for business processes such as contract review and reimbursement approval through the workflow engine

The platform has been successfully applied in multiple industries such as finance, industry, and government, helping enterprises turn the theoretical value of LLM technology into actual business benefits. We sincerely invite developers to join the open source community and jointly promote the democratization of AI technology.

- The workflow module of the Wanwu AI Agent Platform uses the following projects; see their repositories for details.

- v0.1.8 and earlier: wanwu-agentscope project

- v0.2.0 and later: wanwu-workflow project

- Docker Installation (Recommended)

Before the first run

1.1 Copy the environment variable file

    cp .env.bak .env

1.2 Modify the WANWU_ARCH and WANWU_EXTERNAL_IP variables in the .env file according to the system

    # amd64 / arm64
    WANWU_ARCH=amd64
    # external ip port (Note: if the browser accesses Wanwu deployed on a non-localhost server, you need to change localhost to the external IP, for example, 192.168.xx.xx)
    WANWU_EXTERNAL_IP=localhost

1.3 Create a Docker running network

    docker network create wanwu-net

Start the service (the image will be automatically pulled from Docker Hub during the first run)

    # For amd64 system:
    docker compose --env-file .env --env-file .env.image.amd64 up -d
    # For arm64 system:
    docker compose --env-file .env --env-file .env.image.arm64 up -d

Log in to the system: http://localhost:8081

Default user: admin

Default password: Wanwu123456

Stop the service

    # For amd64 system:
    docker compose --env-file .env --env-file .env.image.amd64 down
    # For arm64 system:
    docker compose --env-file .env --env-file .env.image.arm64 down
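
The start and stop commands above differ only in the image env file and the final action. As a minimal sketch (not part of the Wanwu repository), the following Python helper assembles the same command line from the host architecture. The function names `detect_arch` and `compose_command` and the alias table are assumptions made for illustration; only the `docker compose` arguments come from the steps above.

```python
import platform
from typing import List, Optional

# Assumed mapping from platform.machine() values to the two
# architectures named in the .env file (amd64 / arm64).
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def detect_arch(machine: Optional[str] = None) -> str:
    """Return 'amd64' or 'arm64' for the given (or current) machine name."""
    name = (machine or platform.machine()).lower()
    if name not in _ARCH_ALIASES:
        raise ValueError(f"unsupported architecture: {name!r}")
    return _ARCH_ALIASES[name]


def compose_command(action: str, arch: str) -> List[str]:
    """Build the docker compose argument list for 'up' or 'down'."""
    if action == "up":
        tail = ["up", "-d"]
    elif action == "down":
        tail = ["down"]
    else:
        raise ValueError("action must be 'up' or 'down'")
    return [
        "docker", "compose",
        "--env-file", ".env",
        "--env-file", f".env.image.{arch}",
        *tail,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(" ".join(compose_command("up", "amd64")))
```

Passing the returned list to `subprocess.run` from the repository directory would execute the command; the sketch only prints it so that it can be checked first.
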

- Source Code Start (Development)

- Based on the above Docker installation steps, start the system service completely

- Take the backend bff-service service as an example

2.1 Stop bff-service

    make -f Makefile.develop stop-bff

2.2 Compile the bff-service executable file

    # For amd64 system:
    make build-bff-amd64
    # For arm64 system:
    make build-bff-arm64

2.3 Start bff-service

    make -f Makefile.develop run-bff

- Based on the above Docker installation steps, completely stop the system service

- Update to the latest version of the code

2.1 In the wanwu repository directory, update the code

    # Switch to the main branch
    git checkout main
    # Pull the latest code
    git pull

2.2 Recopy the environment variable file (if there are changes to the environment variables, please modify them again)

    # Backup the current .env file
    cp .env .env.old
    # Copy the .env file
    cp .env.bak .env

- Based on the above Docker installation steps, completely start the system service
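
After recopying the environment file, any values customized in the old file have to be applied again. The following is a minimal sketch, assuming simple `KEY=VALUE` lines, of how the backup `.env.old` could be compared with the fresh `.env` to list those values. The helpers `parse_env` and `changed_settings` and the sample IP address are hypothetical and are not part of the project.

```python
from typing import Dict, Optional, Tuple


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def changed_settings(old_text: str, new_text: str) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each key of the old file whose value differs in (or is missing
    from) the new file to a pair (old value, new value or None)."""
    old, new = parse_env(old_text), parse_env(new_text)
    return {
        key: (old_value, new.get(key))
        for key, old_value in old.items()
        if new.get(key) != old_value
    }


if __name__ == "__main__":
    # Sample contents; in practice these would be read from .env.old and .env.
    old_env = "WANWU_ARCH=arm64\nWANWU_EXTERNAL_IP=192.168.0.10\n"
    new_env = "# amd64 / arm64\nWANWU_ARCH=amd64\nWANWU_EXTERNAL_IP=localhost\n"
    for key, (before, after) in changed_settings(old_env, new_env).items():
        print(f"{key}: was {before}, now {after}")
```
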

To help you get started quickly, we strongly recommend first checking the Documentation Operation Manual. It provides interactive, structured operation guides where you can directly view operating instructions, interface documents, and more, which lowers the barrier to learning and using the platform. The detailed function list is as follows:

| Feature | Detailed Description |
|---|---|
| Model Management | Supports users to import LLM, Embedding, and Rerank models from various model providers, including Unicom Yuanjing, OpenAI-API-compatible, Ollama, Tongyi Qianwen, and Volcano Engine. Model Import Methods - Detailed Version |
| Knowledge Base | In terms of document parsing capabilities: supports uploading of 12 file types and URL parsing; document parsing methods include OCR and high-precision model parsing (titles/tables/formulas); document segmentation settings support both general segmentation and parent-child segmentation. In terms of optimization capabilities: supports metadata management and metadata filtering queries, supports adding, deleting, and modifying segmented content, supports setting keyword tags for segments to improve recall performance, supports segment enable/disable operations, and supports hit testing. In terms of retrieval capabilities: supports multiple retrieval modes including vector search, full-text search, and hybrid search. In terms of Q&A capabilities: supports automatic citation of sources and generating answers with both text and images. |
| Resource Library | Supports importing your own MCP services or custom tools for use in workflows and agents. |
| Safety Guardrails | Users can create sensitive word lists to control the safety of the model's output. |
| Text Q&A | A dedicated knowledge advisor based on a private knowledge base. It supports features like knowledge base management, Q&A, knowledge summarization, personalized parameter configuration, safety guardrails, and retrieval configuration to improve the efficiency of knowledge management and learning. Supports publishing text Q&A applications publicly or privately, and can be published as an API. |
| Workflow | Extends the capabilities of agents. Composed of nodes, it provides a visual workflow editor. Users can orchestrate multiple different workflow nodes to implement complex and stable business processes. Supports publishing workflow applications publicly or privately, can be published as an API, and supports import/export. |
| Agent | Create agents based on user scenarios and business requirements. Supports model selection, prompt setting, web search, knowledge base selection, MCP, workflows, and custom tools. Supports publishing agent applications publicly or privately, and can be published as an API and a Web URL. |
| App Marketplace | Allows users to experience published applications, including Text Q&A, Workflows, and Agents. |
| MCP Hub | Features 100+ pre-selected industry-specific MCP servers, ready for immediate use. |
| Settings | The platform supports multi-tenancy, allowing users to manage organizations, roles, users, and perform basic platform configuration. |
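
The parent-child segmentation listed for the Knowledge Base can be pictured with a toy example. The sketch below is an illustration of the general idea only, not the platform's implementation: it cuts text into fixed-size parent chunks, cuts each parent into smaller child chunks, matches a query against the children, and returns the enclosing parents. The fixed-size splitting, the substring matching, and all names (`ChildChunk`, `split_fixed`, `parent_child_segments`, `retrieve_parents`) are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ChildChunk:
    parent_id: int  # index of the parent chunk this child was cut from
    text: str


def split_fixed(text: str, size: int) -> List[str]:
    """Cut text into consecutive pieces of at most `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def parent_child_segments(
    text: str, parent_size: int, child_size: int
) -> Tuple[List[str], List[ChildChunk]]:
    """Split text into parents, then split every parent into children."""
    parents = split_fixed(text, parent_size)
    children = [
        ChildChunk(parent_id, piece)
        for parent_id, parent in enumerate(parents)
        for piece in split_fixed(parent, child_size)
    ]
    return parents, children


def retrieve_parents(query: str, parents: List[str], children: List[ChildChunk]) -> List[str]:
    """Match the query against small child chunks, but return the larger
    parent chunks so the answer has more surrounding context."""
    hit_ids: List[int] = []
    for child in children:
        if query in child.text and child.parent_id not in hit_ids:
            hit_ids.append(child.parent_id)
    return [parents[parent_id] for parent_id in hit_ids]


if __name__ == "__main__":
    parents, children = parent_child_segments("abcdefghij", parent_size=6, child_size=3)
    print(retrieve_parents("ghi", parents, children))
```

A real system would typically split on sentence or heading boundaries and match children by vector similarity rather than substring search; the parent lookup step stays the same.
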

- [ ] Multi-modal model access

- [ ] Support custom MCP Server, which means that workflows, agents, or APIs that conform to the OpenAPI specification can be added to the MCP Server for release

- [ ] Knowledge base sharing

- [ ] Agent and model evaluation

- [ ] Agent monitoring statistics

- [ ] Model experience

- [ ] Prompt engineering

- [Q] Error when starting Elastic (elastic-wanwu) on Linux system: Memory limited without swap.
  [A] Stop the service, run sudo sysctl -w vm.max_map_count=262144, and then restart the service.

- [Q] After the system services start normally, the mysql-wanwu-setup and elastic-wanwu-setup containers exit with status code Exited (0).
  [A] This is normal. These two containers are used to complete some initialization tasks and will automatically exit after execution.

- [Q] Regarding model import
  [A] Taking the import of Unicom Yuanjing LLM as an example (the process is similar for importing OpenAI-API-compatible models, Embedding, or Rerank types):

1. The Open API interface for Unicom Yuanjing MaaS Cloud LLM is, for example: https://maas.ai-yuanjing.com/openapi/compatible-mode/v1/chat/completions

2. The API Key applied for by the user on Unicom Yuanjing MaaS Cloud looks like: sk-abc********************xyz

3. Confirm that the API and Key can correctly request the LLM. Taking a request to yuanjing-70b-chat as an example:

       curl --location 'https://maas.ai-yuanjing.com/openapi/compatible-mode/v1/chat/completions' \
       --header 'Content-Type: application/json' \
       --header 'Accept: application/json' \
       --header 'Authorization: Bearer sk-abc********************xyz' \
       --data '{
           "model": "yuanjing-70b-chat",
           "messages": [{
               "role": "user",
               "content": "你好"
           }]
       }'

4. Import the model:

   4.1 [Model Name] must be the model that can be correctly requested in the curl command above; for example, yuanjing-70b-chat.

   4.2 [API Key] must be the key that can be correctly requested in the curl command above; for example, sk-abc********************xyz (note: do not include the 'Bearer' prefix).

   4.3 [Inference URL] must be the URL that can be correctly requested in the curl command above; for example, https://maas.ai-yuanjing.com/openapi/compatible-mode/v1 (note: do not include the /chat/completions suffix).

5. Importing an Embedding model is the same as importing an LLM as described above. Note that the inference URL should not include the /embeddings suffix.

6. Importing a Rerank model is the same as importing an LLM as described above. Note that the inference URL should not include the /rerank suffix.
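
The import rules above (no Bearer prefix in the API Key, no /chat/completions, /embeddings, or /rerank suffix in the inference URL) can be checked before filling in the form. The following is a minimal sketch using only the Python standard library. The helper names `to_inference_url`, `to_api_key`, and `build_chat_request` are hypothetical, and the key `sk-example` is a placeholder; the request mirrors the curl command above and is built but not sent.

```python
import json
import urllib.request

# Endpoint suffixes that must not appear in the [Inference URL] field.
_ENDPOINT_SUFFIXES = ("/chat/completions", "/embeddings", "/rerank")


def to_inference_url(endpoint: str) -> str:
    """Strip a trailing endpoint suffix so only the base URL remains."""
    url = endpoint.rstrip("/")
    for suffix in _ENDPOINT_SUFFIXES:
        if url.endswith(suffix):
            return url[: -len(suffix)]
    return url


def to_api_key(value: str) -> str:
    """Strip a leading 'Bearer ' so only the raw key remains."""
    value = value.strip()
    if value.lower().startswith("bearer "):
        return value[len("bearer "):].strip()
    return value


def build_chat_request(inference_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl command."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{inference_url}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    base = to_inference_url(
        "https://maas.ai-yuanjing.com/openapi/compatible-mode/v1/chat/completions"
    )
    key = to_api_key("Bearer sk-example")  # placeholder key, not a real credential
    request = build_chat_request(base, key, "yuanjing-70b-chat", "你好")
    print(base, request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` and a real key would perform the same check as step 3.
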

The Yuanjing Wanwu AI Agent Platform is released under the Apache License 2.0.

| QQ Group1(Full):490071123 | QQ Group2:1026898615 |
|---|---|

Alternative AI tools for wanwu

Similar Open Source Tools

wanwu

Wanwu AI Agent Platform is an enterprise-grade one-stop commercially friendly AI agent development platform designed for business scenarios. It provides enterprises with a safe, efficient, and compliant one-stop AI solution. The platform integrates cutting-edge technologies such as large language models and business process automation to build an AI engineering platform covering model full life-cycle management, MCP, web search, AI agent rapid development, enterprise knowledge base construction, and complex workflow orchestration. It supports modular architecture design, flexible functional expansion, and secondary development, reducing the application threshold of AI technology while ensuring security and privacy protection of enterprise data. It accelerates digital transformation, cost reduction, efficiency improvement, and business innovation for enterprises of all sizes.

WeKnora

WeKnora is a document understanding and semantic retrieval framework based on large language models (LLM), designed specifically for scenarios with complex structures and heterogeneous content. The framework adopts a modular architecture, integrating multimodal preprocessing, semantic vector indexing, intelligent recall, and large model generation reasoning to build an efficient and controllable document question-answering process. The core retrieval process is based on the RAG (Retrieval-Augmented Generation) mechanism, combining context-relevant segments with language models to achieve higher-quality semantic answers. It supports various document formats, intelligent inference, flexible extension, efficient retrieval, ease of use, and security and control. Suitable for enterprise knowledge management, scientific literature analysis, product technical support, legal compliance review, and medical knowledge assistance.

agent-zero

Agent Zero is a personal, organic agentic framework designed to be dynamic, transparent, customizable, and interactive. It uses the computer as a tool to accomplish tasks, with features like general-purpose assistant, computer as a tool, multi-agent cooperation, customizable and extensible framework, and communication skills. The tool is fully Dockerized, with Speech-to-Text and TTS capabilities, and offers real-world use cases like financial analysis, Excel automation, API integration, server monitoring, and project isolation. Agent Zero can be dangerous if not used properly and is prompt-based, guided by the prompts folder. The tool is extensively documented and has a changelog highlighting various updates and improvements.

beeai-platform

BeeAI is an open-source platform that simplifies the discovery, running, and sharing of AI agents across different frameworks. It addresses challenges such as framework fragmentation, deployment complexity, and discovery issues by providing a standardized platform for individuals and teams to access agents easily. With features like a centralized agent catalog, framework-agnostic interfaces, containerized agents, and consistent user experiences, BeeAI aims to streamline the process of working with AI agents for both developers and teams.

saga-reader

Saga Reader is an AI-driven think tank-style reader that automatically retrieves information from the internet based on user-specified topics and preferences. It uses cloud or local large models to summarize and provide guidance, and it includes an AI-driven interactive companion reading function, allowing you to discuss and exchange ideas with AI about the content you've read. Saga Reader is completely free and open-source, meaning all data is securely stored on your own computer and is not controlled by third-party service providers. Additionally, you can manage your subscription keywords based on your interests and preferences without being disturbed by advertisements and commercialized content.

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

LiteRT

LiteRT is Google's open-source high-performance runtime for on-device AI, previously known as TensorFlow Lite. The repository is currently not intended for open-source development, but aims to evolve to allow direct building and contributions. LiteRT supports Python versions 3.9, 3.10, 3.11 on Linux and MacOS. It ensures compatibility with existing .tflite file extension and format, offering conversion tools and continued active development under the name LiteRT.

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

synmetrix

Synmetrix is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube.js to consolidate metrics from various sources and distribute them downstream via a SQL API. Use cases include data democratization, business intelligence and reporting, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

cherry-studio

Cherry Studio is a desktop client that supports multiple LLM providers on Windows, Mac, and Linux. It offers diverse LLM provider support, AI assistants & conversations, document & data processing, practical tools integration, and enhanced user experience. The tool includes features like support for major LLM cloud services, AI web service integration, local model support, pre-configured AI assistants, document processing for text, images, and more, global search functionality, topic management system, AI-powered translation, and cross-platform support with ready-to-use features and themes for a better user experience.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

aibrix

AIBrix is an open-source initiative providing essential building blocks for scalable GenAI inference infrastructure. It delivers a cloud-native solution optimized for deploying, managing, and scaling large language model (LLM) inference, tailored to enterprise needs. Key features include High-Density LoRA Management, LLM Gateway and Routing, LLM App-Tailored Autoscaler, Unified AI Runtime, Distributed Inference, Distributed KV Cache, Cost-efficient Heterogeneous Serving, and GPU Hardware Failure Detection.

For similar tasks

wanwu

Wanwu AI Agent Platform is an enterprise-grade one-stop commercially friendly AI agent development platform designed for business scenarios. It provides enterprises with a safe, efficient, and compliant one-stop AI solution. The platform integrates cutting-edge technologies such as large language models and business process automation to build an AI engineering platform covering model full life-cycle management, MCP, web search, AI agent rapid development, enterprise knowledge base construction, and complex workflow orchestration. It supports modular architecture design, flexible functional expansion, and secondary development, reducing the application threshold of AI technology while ensuring security and privacy protection of enterprise data. It accelerates digital transformation, cost reduction, efficiency improvement, and business innovation for enterprises of all sizes.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

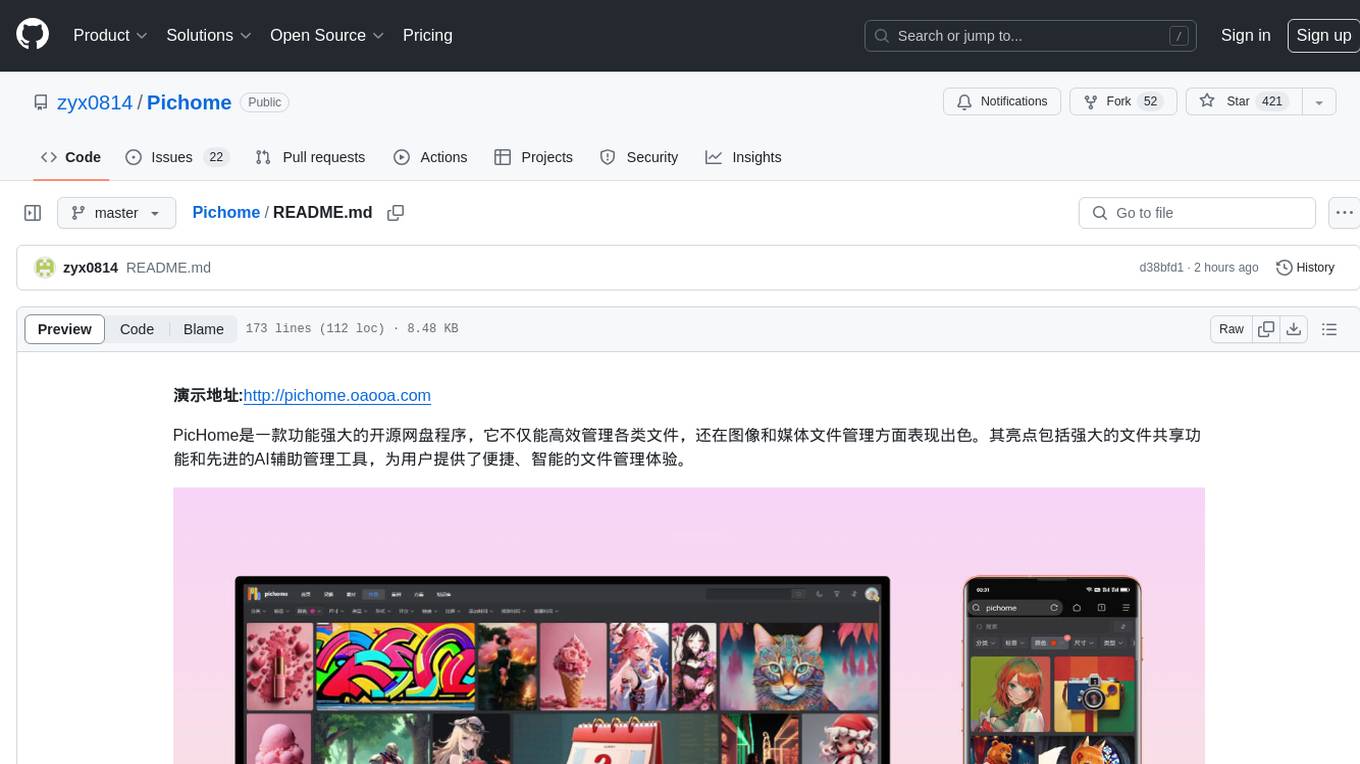

Pichome

PicHome is a powerful open-source cloud storage program that efficiently manages various types of files and excels in image and media file management. Its highlights include robust file sharing features and advanced AI-assisted management tools, providing users with a convenient and intelligent file management experience. The program offers diverse list modes, customizable file information display, enhanced quick file preview, advanced tagging, custom cover and preview images, multiple preview images, and multi-library management. Additionally, PicHome features strong file sharing capabilities, allowing users to share entire libraries, create personalized showcase web pages, and build complete data sharing websites. The AI-assisted management aspect includes AI file renaming, tagging, description writing, batch annotation, and file Q&A services, all aimed at improving file management efficiency. PicHome supports a wide range of file formats and can be applied in various scenarios such as e-commerce, gaming, design, development, enterprises, schools, labs, media, and entertainment institutions.

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

LLMSpeculativeSampling

This repository implements speculative sampling for large language model (LLM) decoding using two models: a target model and an approximation model. The approximation model generates token guesses, which the target model then checks and corrects, resulting in improved efficiency. It includes implementations of Google's and DeepMind's versions of speculative sampling, supporting models like llama-7B and llama-1B. The tool is designed for fast inference from transformers via speculative decoding.
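The guess-and-correct idea can be illustrated with a minimal sketch of a single speculative-sampling step. This is not the repository's code: the function names, the toy three-token vocabulary, and the probability lists are all hypothetical, and real implementations draft several tokens at a time from actual models. The sketch assumes the commonly described rule: accept the approximation model's guess with probability min(1, p/q), otherwise resample from the normalized residual max(0, p - q).

```python
import random


def speculative_step(p, q, rng):
    """Run one toy speculative-sampling step.

    p: target-model probabilities over a toy vocabulary (hypothetical values).
    q: approximation-model probabilities over the same vocabulary.
    Returns (token, accepted).
    """
    tokens = range(len(q))
    # The approximation model proposes a token.
    guess = rng.choices(tokens, weights=q, k=1)[0]
    # The target model accepts the guess with probability min(1, p/q).
    if rng.random() < min(1.0, p[guess] / q[guess]):
        return guess, True
    # On rejection, draw a corrected token from the residual max(0, p - q).
    residual = [max(0.0, pi - qi) for pi, qi in zip(p, q)]
    corrected = rng.choices(tokens, weights=residual, k=1)[0]
    return corrected, False


def empirical_distribution(p, q, n=20000, seed=0):
    """Estimate the output distribution of speculative_step by sampling."""
    rng = random.Random(seed)
    counts = [0] * len(p)
    for _ in range(n):
        token, _accepted = speculative_step(p, q, rng)
        counts[token] += 1
    return [c / n for c in counts]


if __name__ == "__main__":
    target = [0.6, 0.3, 0.1]  # hypothetical target-model probabilities
    draft = [0.2, 0.5, 0.3]   # hypothetical approximation-model probabilities
    print(empirical_distribution(target, draft))
```

Under these assumptions the sampled frequencies should approach the target probabilities even though every first guess comes from the approximation model, which is why the technique can speed up decoding without changing the output distribution.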

AI-Studio

MindWork AI Studio is a desktop application that provides a unified chat interface for Large Language Models (LLMs). It is free to use for personal and commercial purposes, lets users choose their LLM providers independently, provides unrestricted usage through the providers' APIs, and is cost-effective with pay-as-you-go pricing. The app prioritizes privacy, flexibility, minimal storage and memory usage, and low impact on system resources. Users can support the project through monthly contributions or one-time donations, and companies can sponsor the project for public relations and marketing benefits. Planned features include support for more LLM providers, system prompt integration, text replacement for privacy, and advanced interactions tailored for various use cases.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries, and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.