OpenCat

An open source quadruped robot pet framework for developing Boston Dynamics-style four-legged robots that are perfect for STEM, coding & robotics education, IoT robotics applications, AI-enhanced robotics application services, research, and DIY robotics kit development.

Stars: 3501

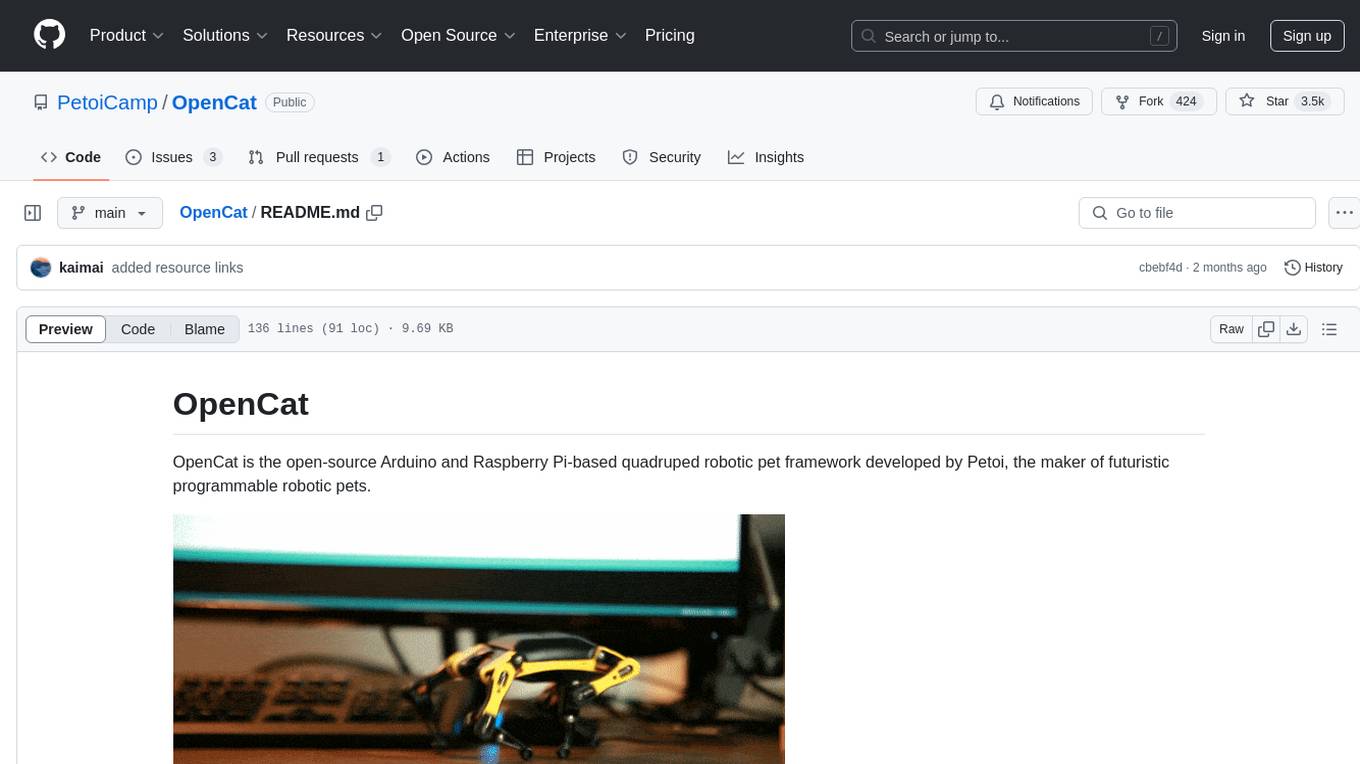

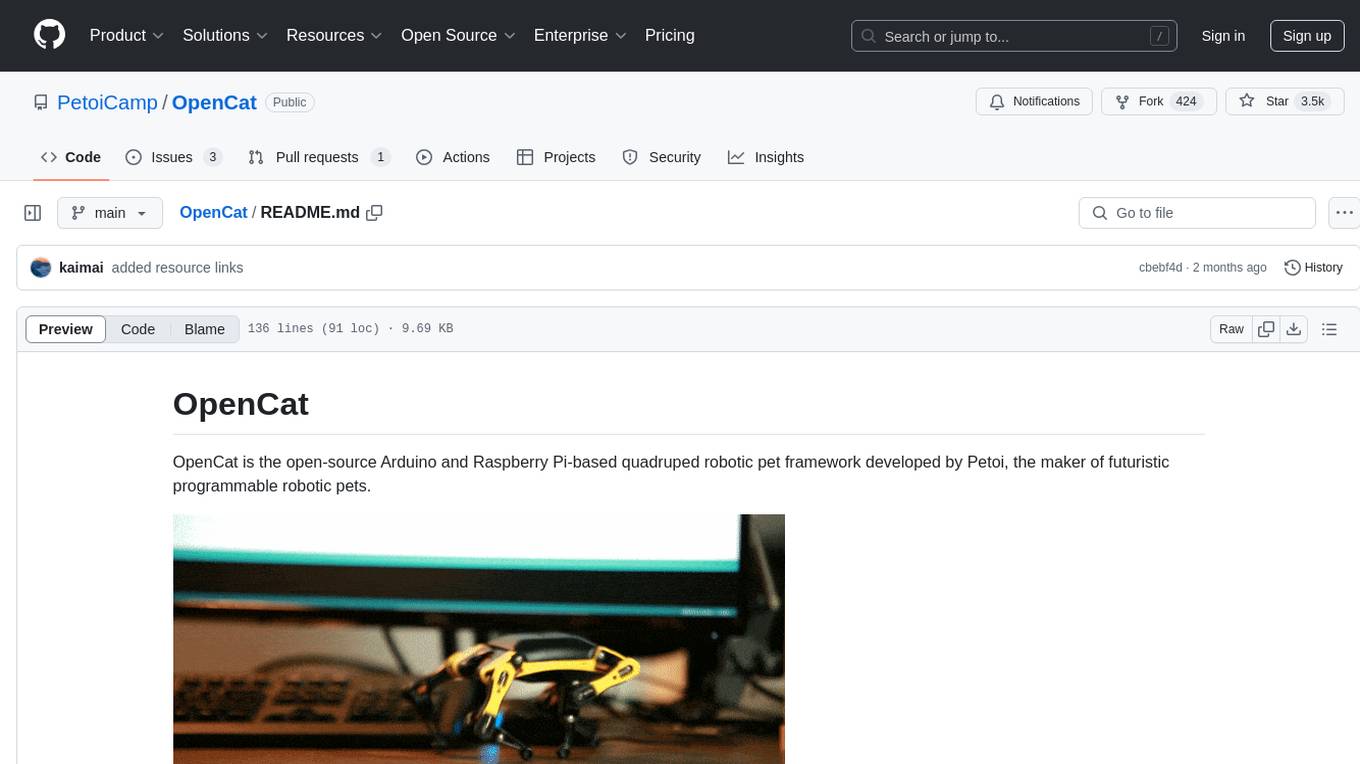

OpenCat is an open-source Arduino and Raspberry Pi-based quadruped robotic pet framework developed by Petoi. It aims to foster collaboration in quadruped robotics research, education, and engineering development of agile and affordable quadruped robot pets. The project provides a base open-source platform for creating programmable gaits and locomotion, deploying inverse kinematics on quadruped robots, and bringing simulations to the real world via block-based coding, C/C++, and Python. Users have deployed various robotics/AI/IoT applications. The project has successfully crowdfunded mini robot kits, shipped them worldwide, and established a production line for affordable robotic kits and accessories.

README:

OpenCat is the open-source Arduino and Raspberry Pi-based quadruped robotic pet framework developed by Petoi, the maker of futuristic programmable robotic pets.

Inspired by Boston Dynamics' BigDog and SpotMini, Dr. Rongzhong Li started the project in his dorm at Wake Forest University in 2016. After one year of R&D, he founded Petoi LLC and devoted all his resources to developing open source robots.

The goal is to foster collaboration in quadruped (four-legged) robotic research, education, and engineering development of agile and affordable quadruped robot pets, bring STEM concepts to the masses, and inspire newcomers (including many kids and adults) to join the robotic AI revolution and create more applications.

The project is a complex quadruped robot system for anyone exploring quadruped robotics. We want to share our design and work with the community and bring down the hardware and software costs with mass production. OpenCat has been deployed on all of Petoi's robots: the palm-sized, lifelike robot cat Nybble and the high-performance robot dog Bittle. We have now established a production line and can ship these affordable robotic kits and accessories worldwide.

This project provides a base open-source platform for creating programmable gaits and locomotion, deploying inverse kinematics on quadruped robots, and bringing simulations to the real world via block-based coding, C/C++, and Python.

Our users have deployed many robotics/AI/IoT applications:

- robot dog with autonomous movement & object detection

- Raspberry Pi robotics projects

- NVIDIA Isaac simulations and reinforcement learning on our robots

- visual and lidar-based SLAM with ROS using Bittle and Raspberry Pi

- imitation learning using Tiny Machine Learning Models with Petoi Bittle and Raspberry Pi

- IoT automation of a robot fleet with AWS to improve worker safety

- 3D-Printed Accessories

- DIY 3D-printed robot pets powered by OpenCat

We've successfully crowdfunded these two mini robot kits and shipped thousands of units worldwide.

With our customized Arduino Uno board and high-performance servos coordinating all instinctive and sophisticated movements (walking, running, jumping, backflipping), one can clip on various sensors and cameras to add perception, and inject artificial intelligence capabilities by mounting a Raspberry Pi or other AI chips (such as the Nvidia Jetson Nano) through wired or wireless connections.

Please see Petoi FAQs for more info.

Also, check out all of the OpenCat and Petoi robot user showcases.

OpenCat software works on both Nybble and Bittle, controlled by NyBoard based on ATmega328P. More detailed documentation can be found at the Petoi Doc Center.

To configure the board:

- Download the repo and unzip it. Remove the -main (or any branch name) suffix from the folder name.

- Open the file OpenCat.ino and select your robot and board version by uncommenting the matching lines.

#define BITTLE //Petoi 9 DOF robot dog: 1x on head + 8x on leg
//#define NYBBLE //Petoi 11 DOF robot cat: 2x on head + 1x on tail + 8x on leg

//#define NyBoard_V0_1
//#define NyBoard_V0_2
#define NyBoard_V1_0
//#define NyBoard_V1_1

- Comment out #define MAIN_SKETCH so that the code is built in board configuration mode. Upload and follow the serial prompts to proceed.

// #define MAIN_SKETCH

- If you activate #define AUTO_INIT, the program will set itself up automatically without prompts. It will not reset the joint offsets, but it will calibrate the IMU. It's just a convenient option for our production line.

- Plug the USB uploader into the NyBoard and install the driver if no USB port is found under Arduino -> Tools -> Port.

- Press the upload button (->) at the top-left corner of the Arduino IDE.

- Open the serial monitor of the Arduino IDE. You can find the button either under Tools or at the top-right corner of the IDE.

Set the serial monitor to no line ending and a 115200 baud rate. The serial monitor prompts:

Reset joint offsets? (Y/n)

Y

Input ‘Y’ and press Enter if you want to reset all the joint offsets to 0.

The program will perform the reset, then update the constants and instinctive skills in the static memory.

- IMU (Inertial Measurement Unit) calibration.

The serial prompts:

Calibrate the IMU? (Y/n):

Y

Input ‘Y’ and press Enter if you have never calibrated the IMU or want to redo the calibration.

Put the robot flat on the table and don't touch it. The robot will give six long beeps to give you enough time. Then it will take several hundred sensor readings and save the offsets. It will beep again when the calibration finishes.

When the serial monitor prints "Ready!", you can close the serial monitor and move on to the next step.

- Uncomment #define MAIN_SKETCH to make it active. This time the code becomes the normal program for the major functionalities. Upload the code.

#define MAIN_SKETCH

When the serial monitor prints "Ready!", the robot is ready to take your next instructions.

- If you have never calibrated the joints’ offsets, or if you reset them at the Reset joint offsets? prompt, you need to calibrate them. If you boot up the robot with one side up, it will enter the calibration state automatically so that you can install the legs. Otherwise, it will enter the normal rest state.

- You can use the serial monitor to calibrate the robot directly. Alternatively, plug in the Bluetooth dongle and use the Petoi app (on Android/iOS) for a more user-friendly interface. The mobile app is available on:

- iOS: App Store

- Android: Google Play

You can refer to the calibration section in the user manual (https://bittle.petoi.com/6-calibration) and the Guide for the Petoi App (https://docs.petoi.com/app-guide).

- You can use the infrared remote or other applications (such as the Petoi App, Python, or the serial monitor) to play with the robot (https://bittle.petoi.com/7-play-with-bittle).
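The two-upload workflow above (first with MAIN_SKETCH commented out, then with it active) can be illustrated with a small stand-alone C++ sketch. This is not the OpenCat source: the names kDof, buildMode, resetJointOffsets, and averageOffsets are hypothetical, and the calibration is assumed to be a plain average of readings taken while the robot lies flat, which may differ from what the firmware actually does.

```cpp
// Hypothetical illustration only; it mirrors the macro-driven workflow
// described above and is NOT taken from OpenCat.ino.
#include <array>
#include <cstddef>
#include <string>
#include <vector>

#define BITTLE          // 9 DOF robot dog: 1x on head + 8x on leg
//#define NYBBLE        // 11 DOF robot cat: 2x on head + 1x on tail + 8x on leg
//#define MAIN_SKETCH   // commented out: build the board configuration mode

// Guard against selecting both robots or neither.
#if defined(BITTLE) == defined(NYBBLE)
#error "Select exactly one robot: BITTLE or NYBBLE"
#endif

#ifdef BITTLE
constexpr int kDof = 9;
#else
constexpr int kDof = 11;
#endif

// Reports which program the current macro selection produces.
inline std::string buildMode() {
#ifdef MAIN_SKETCH
    return "main";
#else
    return "configuration";
#endif
}

// Answering 'Y' to "Reset joint offsets? (Y/n)" sets every joint offset to 0.
inline std::vector<int> resetJointOffsets() {
    return std::vector<int>(kDof, 0);
}

using Vec3 = std::array<double, 3>;

// Assumed calibration model: average many readings taken while the robot
// is flat and untouched, and keep the mean as the per-axis offset.
inline Vec3 averageOffsets(const std::vector<Vec3>& samples) {
    Vec3 sum{0.0, 0.0, 0.0};
    if (samples.empty()) return sum;  // nothing measured, no correction
    for (const Vec3& s : samples)
        for (std::size_t axis = 0; axis < 3; ++axis) sum[axis] += s[axis];
    for (double& v : sum) v /= static_cast<double>(samples.size());
    return sum;
}
```

With the macros as written, buildMode() returns "configuration"; uncommenting #define MAIN_SKETCH and rebuilding flips it to "main", which corresponds to the second upload in the steps above.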

For updates:

- Star this repository to receive timely notifications on changes.

- Visit www.petoi.com and subscribe to our official newsletters for project announcements. We also host a forum at petoi.camp.

- Follow us on Twitter, Instagram, Facebook, LinkedIn, and YouTube for fun videos and community activities.

- Advanced tutorials made by users

- Review, open-box, and demos by users

- OpenCat robot showcase by users

- OpenCat robot gallery

OpenCat robots have been used in robotics education for K12 schools and colleges to teach students about STEM, coding, and robotics:

- robotics education showcases

- STEM & Robotics Curriculum & Resources for Teaching Coding & Robotics

- Robotics competitions

- Petoi robotics contest

The old OpenCat repository is bloated with large image logs. It is obsolete and will receive no further updates.


Similar Open Source Tools


Simulator-Controller

Simulator Controller is a modular administration and controller application for Sim Racing, featuring a comprehensive plugin automation framework for external controller hardware. It includes voice chat capable Assistants like Virtual Race Engineer, Race Strategist, Race Spotter, and Driving Coach. The tool offers features for setup, strategy development, monitoring races, and more. Developed in AutoHotkey, it supports various simulation games and integrates with third-party applications for enhanced functionality.

GlaDOS

This project aims to create a real-life version of GLaDOS, an aware, interactive, and embodied AI entity. It involves training a voice generator, developing a 'Personality Core,' implementing a memory system, providing vision capabilities, creating 3D-printable parts, and designing an animatronics system. The software architecture focuses on low-latency voice interactions, utilizing a circular buffer for data recording, text streaming for quick transcription, and a text-to-speech system. The project also emphasizes minimal dependencies for running on constrained hardware. The hardware system includes servo- and stepper-motors, 3D-printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions cover setting up the TTS engine, required Python packages, compiling llama.cpp, installing an inference backend, and voice recognition setup. GLaDOS can be run using 'python glados.py' and tested using 'demo.ipynb'.

AI4U

AI4U is a tool that provides a framework for modeling virtual reality and game environments. It offers an alternative approach to modeling Non-Player Characters (NPCs) in Godot Game Engine. AI4U defines an agent living in an environment and interacting with it through sensors and actuators. Sensors provide data to the agent's brain, while actuators send actions from the agent to the environment. The brain processes the sensor data and makes decisions (selects an action by time). AI4U can also be used in other situations, such as modeling environments for artificial intelligence experiments.

viseron

Viseron is a self-hosted, local-only NVR and AI computer vision software that provides features such as object detection, motion detection, and face recognition. It allows users to monitor their home, office, or any other place they want to keep an eye on. Getting started with Viseron is easy by spinning up a Docker container and editing the configuration file using the built-in web interface. The software's functionality is enabled by components, which can be explored using the Component Explorer. Contributors are welcome to help with implementing open feature requests, improving documentation, and answering questions in issues or discussions. Users can also sponsor Viseron or make a one-time donation.

TagUI

TagUI is an open-source RPA tool that allows users to automate repetitive tasks on their computer, including tasks on websites, desktop apps, and the command line. It supports multiple languages and offers features like interacting with identifiers, automating data collection, moving data between TagUI and Excel, and sending Telegram notifications. Users can create RPA robots using MS Office Plug-ins or text editors, run TagUI on the cloud, and integrate with other RPA tools. TagUI prioritizes enterprise security by running on users' computers and not storing data. It offers detailed logs, enterprise installation guides, and support for centralised reporting.

ModernBERT

ModernBERT is a repository focused on modernizing BERT through architecture changes and scaling. It introduces FlexBERT, a modular approach to encoder building blocks, and relies heavily on .yaml configuration files to build models. The codebase builds upon MosaicBERT and incorporates Flash Attention 2. The repository is used for pre-training and GLUE evaluations, with a focus on reproducibility and documentation. It is a collaboration between Answer.AI, LightOn, and friends.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts.

GLaDOS

GLaDOS Personality Core is a project dedicated to building a real-life version of GLaDOS, an aware, interactive, and embodied AI system. The project aims to train GLaDOS voice generator, create a 'Personality Core,' develop medium- and long-term memory, provide vision capabilities, design 3D-printable parts, and build an animatronics system. The software architecture focuses on low-latency voice interactions and minimal dependencies. The hardware system includes servo- and stepper-motors, 3D printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions involve setting up a local LLM server, installing drivers, and running GLaDOS on different operating systems.

SystemAnimatorOnline

XR Animator is a video/webcam-based AI motion capture application designed for VTubing and the metaverse era. It uses machine learning solutions to detect 3D poses from a live webcam video, driving a 3D avatar as if controlled by the user's body. It supports full-body AI motion tracking, face tracking, and various XR/3D purposes. The tool can be used for VTubing, recording mocap motion, exporting motions to different formats, customizing backgrounds and scenes, and animating 3D models in other applications. It also supports AR on Android Chrome browser, AR selfie feature, and has relatively low system requirements for wide device compatibility.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

Aimmy

Aimmy is a universal AI-Based Aim Alignment Mechanism developed by BabyHamsta, MarsQQ & Taylor to make gaming more accessible for users who have difficulty aiming. It utilizes DirectML, ONNX, and YOLOV8 for player detection, offering high accuracy and fast performance. Aimmy features an easy-to-use UI, extensive customizability, and is free of ads and paywalls. It is designed for gamers facing challenges like physical or mental disabilities, poor hand-eye coordination, or aiming difficulties due to environmental factors. Aimmy provides various features like AI detection, customizability, anti-recoil system, mouse movement methods, hotswappability, and a model/configuration store with repository support.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

pyOllaMx

PyOllaMx is a chatbot application that serves as a gateway to both Ollama and Apple MLX models. It allows users to chat with these models through a native macOS interface, eliminating the need for external applications like Bing or ChatGPT. The tool provides a versatile and user-friendly experience for interacting with different models, enabling tasks such as model discovery, model management, chatting, and web searching. PyOllaMx simplifies the workflow for users who want to leverage Ollama and MLX models on their Mac devices.

godot_rl_agents

Godot RL Agents is an open-source package that facilitates the integration of Machine Learning algorithms with games created in the Godot Engine. It provides interfaces for popular RL frameworks, support for memory-based agents, 2D and 3D games, AI sensors, and is licensed under MIT. Users can train agents in the Godot editor, create custom environments, export trained agents in ONNX format, and utilize advanced features like different RL training frameworks.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.