blinkshot

A realtime AI image generator

Stars: 776

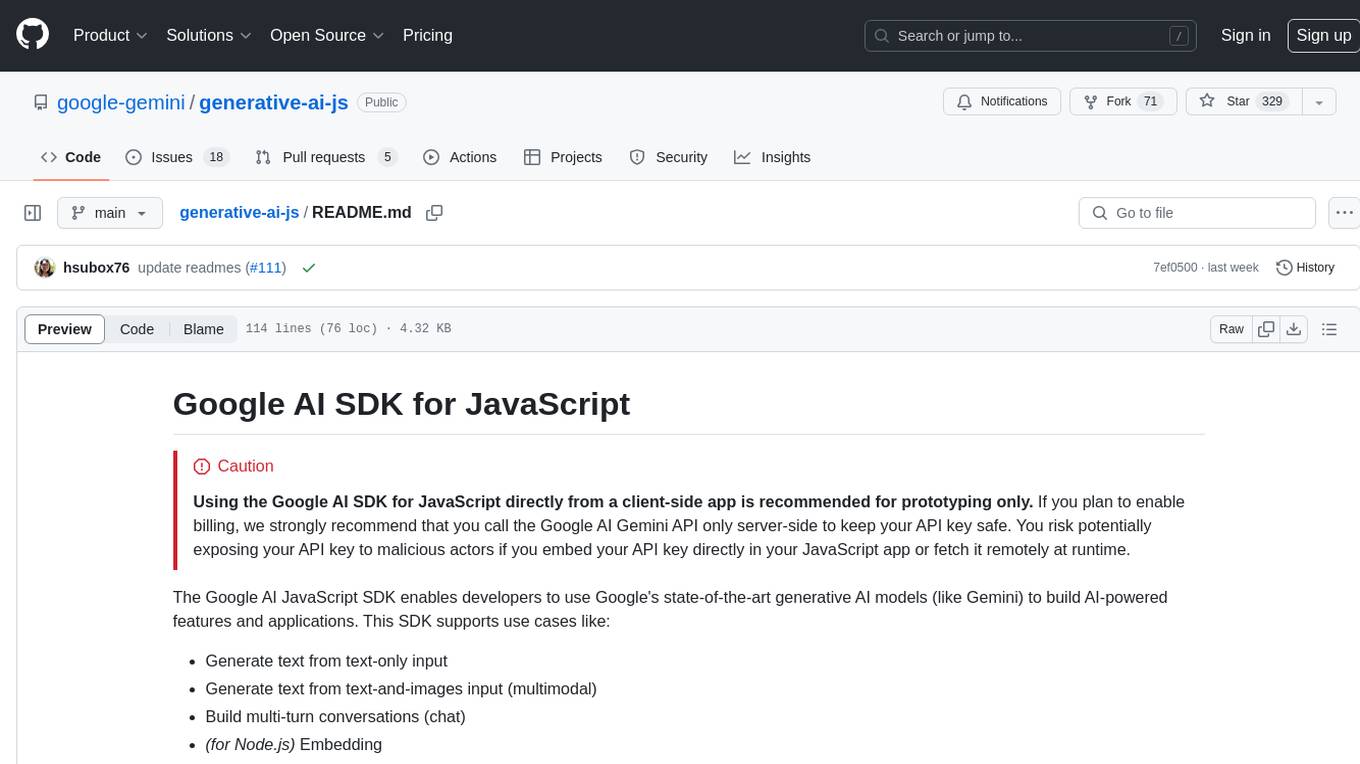

BlinkShot is an open source real-time AI image generator powered by Flux through Together.ai. It utilizes Flux Schnell from BFL for the image model, Together AI for inference, Next.js app router with Tailwind for the frontend, Helicone for observability, and Plausible for website analytics. Users can clone the repository, add their Together AI API key, and run the app locally to generate AI images. Future tasks include adding a call-to-action to fork the code on GitHub, implementing a download button on hover, allowing users to adjust resolutions and steps, adding an app description to the footer, and introducing themes.

README:

An open source real-time AI image generator. Powered by Flux through Together.ai.

- Flux Schnell from BFL for the image model

- Together AI for inference

- Next.js app router with Tailwind

- Helicone for observability

- Plausible for website analytics

- Clone the repo:

git clone https://github.com/Nutlope/blinkshot - Create a

.env.localfile and add your Together AI API key:TOGETHER_API_KEY= - Run

npm installandnpm run devto install dependencies and run locally

- [ ] Show a download button so people can get their images

- [ ] Add auth and rate limit by email instead of IP

- [ ] Show people how many credits they have left

- [ ] Build an image gallery of cool generations w/ their prompts

- [ ] Add replay functionality so people can replay consistent generations

- [ ] Add a setting to select between steps (2-5)

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for blinkshot

Similar Open Source Tools

blinkshot

BlinkShot is an open source real-time AI image generator powered by Flux through Together.ai. It utilizes Flux Schnell from BFL for the image model, Together AI for inference, Next.js app router with Tailwind for the frontend, Helicone for observability, and Plausible for website analytics. Users can clone the repository, add their Together AI API key, and run the app locally to generate AI images. Future tasks include adding a call-to-action to fork the code on GitHub, implementing a download button on hover, allowing users to adjust resolutions and steps, adding an app description to the footer, and introducing themes.

loras-dev

Loras is an open source real-time AI image generator powered by Flux through Together.ai. It utilizes Flux Dev from BFL for the image model, Together AI for inference, Next.js app router with Tailwind, Helicone for observability, and Plausible for website analytics. Users can clone the repository, add their Together AI API key to a .env.local file, install dependencies, and run the tool locally to generate AI images in real-time.

llamatutor

Llama Tutor is an open source AI personal tutor powered by Llama 3 70B & Together.ai. It utilizes a tech stack including Llama 3.1 70B, Together AI, Next.js app router with Tailwind, Serper for the search API, Helicone for observability, and Plausible for website analytics. Users can clone the repo, set up accounts for necessary APIs, configure environment variables, and run the app locally. Future tasks include adding share & copy buttons, follow-up questions, splitting pages, organizing icons, enhancing the landing page, implementing a mobile menu, exploring generative UI, and improving dropdowns.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

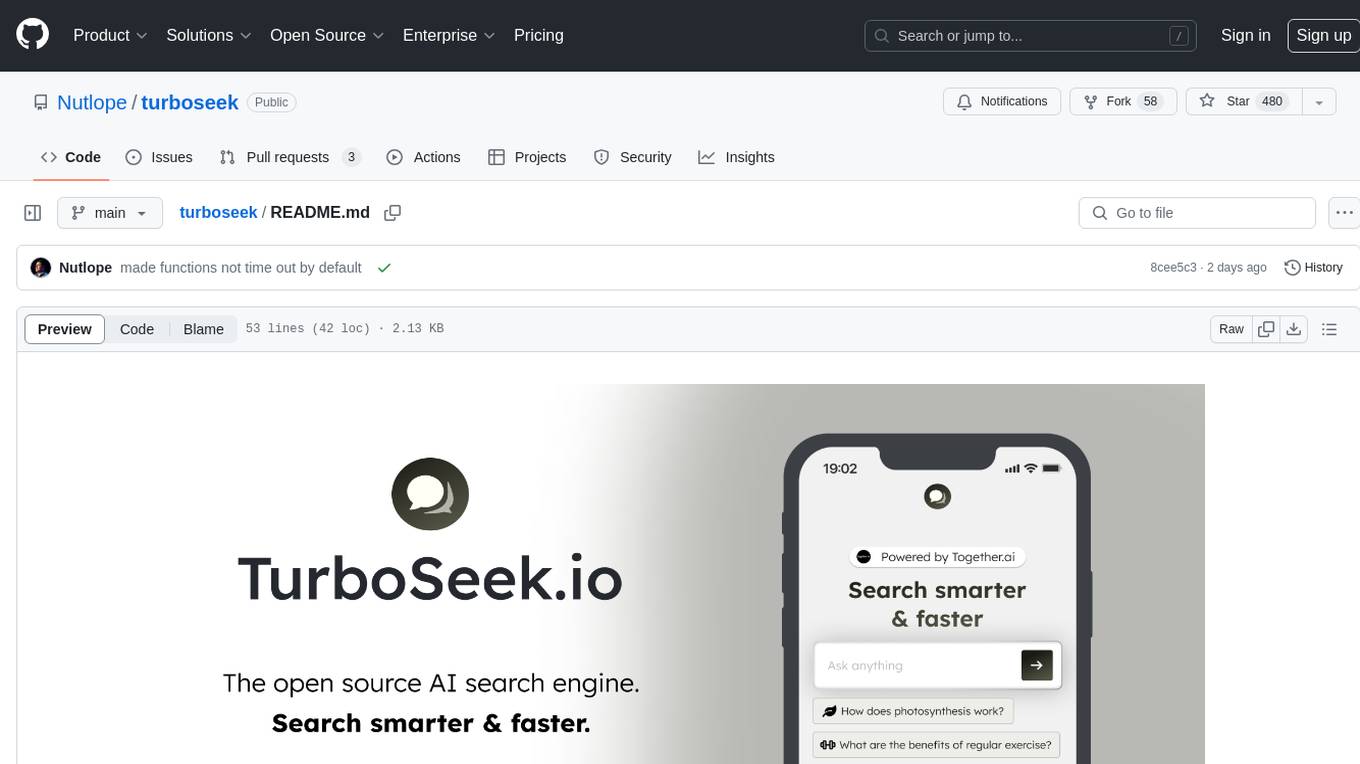

turboseek

TurboSeek is an open source AI search engine powered by Together.ai. It utilizes Next.js with Tailwind for the app router, Together AI for LLM inference, Mixtral 8x7B & Llama-3 for the LLMs, Bing for the search API, Helicone for observability, and Plausible for website analytics. The tool takes a user's question, queries the Bing search API for top results, scrapes text from the links, sends the question and context to Mixtral-8x7B, and generates follow-up questions using Llama-3-8B. Future tasks include optimizing source parsing, ignoring video links, adding regeneration option, ensuring proper citations, enabling sharing, implementing scrolling during answers, fixing hard refresh, adding caching with upstash redis, incorporating advanced RAG techniques, and adding authentication with Clerk and postgres/prisma.

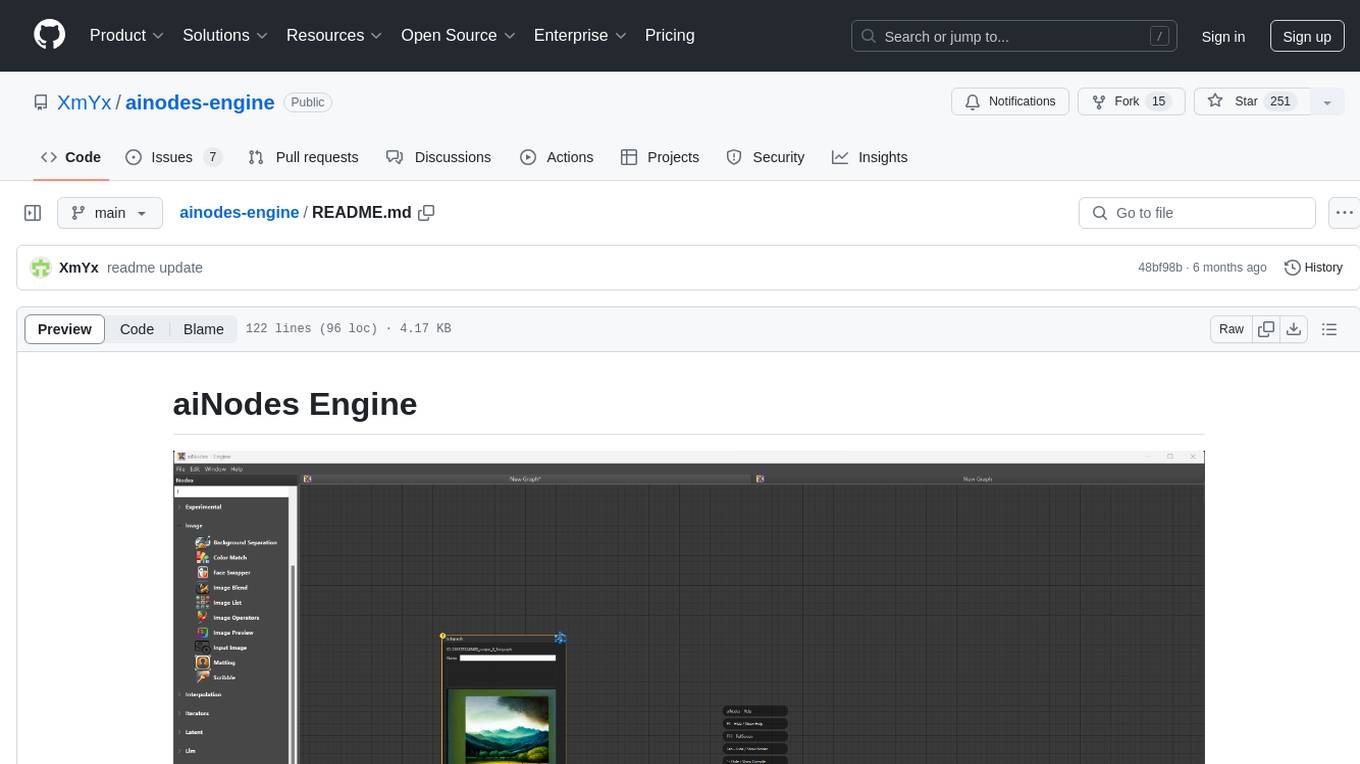

ainodes-engine

aiNodes Engine is a Python-based AI image/motion picture generator node engine with a live execution chain, python code editor node, and plug-in support. It offers full modularity, colored background drop, and easy node creation with IDE annotations. The project is officially supported by Deforum and incorporates various open-source projects like ComfyUI. It is designed to be flexible, with an Unreal-like execution chain, supporting features such as Deforum, Stable Diffusion, Upscalers, Kandinsky, ControlNet, and more. The engine allows for background separation, human matting/masking, compositing, drag and drop, subgraphs, and graph saving/loading from image metadata. It aims to provide a unique, controllable manner of working with a strict user-declared execution chain.

whisplay-ai-chatbot

Whisplay-AI-Chatbot is a pocket-sized AI chatbot device built using a Raspberry Pi Zero 2w. It features a PiSugar Whisplay HAT with an LCD screen, on-board speaker, and microphone. Users can interact with the chatbot by pressing a button, speaking, and receiving responses, similar to a futuristic walkie-talkie. The tool supports various functionalities such as adjusting volume autonomously, resetting conversation history, local ASR and TTS capabilities, image generation, and integration with APIs like Google Gemini and Grok. It also offers support for LLM8850 AI Accelerator for offline capabilities like ASR, TTS, and LLM API. The chatbot saves conversation history and generated images in a data folder, and users can customize the tool with different enclosure cases available for Pi02 and Pi5 models.

chatdev

ChatDev IDE is a tool for building your AI agent, Whether it's NPCs in games or powerful agent tools, you can design what you want for this platform. It accelerates prompt engineering through **JavaScript Support** that allows implementing complex prompting techniques.

generative-ai-swift

The Google AI SDK for Swift enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. This SDK supports use cases like: - Generate text from text-only input - Generate text from text-and-images input (multimodal) - Build multi-turn conversations (chat)

OrionChat

Orion is a web-based chat interface that simplifies interactions with multiple AI model providers. It provides a unified platform for chatting and exploring various large language models (LLMs) such as Ollama, OpenAI (GPT model), Cohere (Command-r models), Google (Gemini models), Anthropic (Claude models), Groq Inc., Cerebras, and SambaNova. Users can easily navigate and assess different AI models through an intuitive, user-friendly interface. Orion offers features like browser-based access, code execution with Google Gemini, text-to-speech (TTS), speech-to-text (STT), seamless integration with multiple AI models, customizable system prompts, language translation tasks, document uploads for analysis, and more. API keys are stored locally, and requests are sent directly to official providers' APIs without external proxies.

minimal-llm-ui

This minimalistic UI serves as a simple interface for Ollama models, enabling real-time interaction with Local Language Models (LLMs). Users can chat with models, switch between different LLMs, save conversations, and create parameter-driven prompt templates. The tool is built using React, Next.js, and Tailwind CSS, with seamless integration with LangchainJs and Ollama for efficient model switching and context storage.

sdk

Vikit.ai SDK is a software development kit that enables easy development of video generators using generative AI and other AI models. It serves as a langchain to orchestrate AI models and video editing tools. The SDK allows users to create videos from text prompts with background music and voice-over narration. It also supports generating composite videos from multiple text prompts. The tool requires Python 3.8+, specific dependencies, and tools like FFMPEG and ImageMagick for certain functionalities. Users can contribute to the project by following the contribution guidelines and standards provided.

vertex-ai-creative-studio

GenMedia Creative Studio is an application showcasing the capabilities of Google Cloud Vertex AI generative AI creative APIs. It includes features like Gemini for prompt rewriting and multimodal evaluation of generated images. The app is built with Mesop, a Python-based UI framework, enabling rapid development of web and internal apps. The Experimental folder contains stand-alone applications and upcoming features demonstrating cutting-edge generative AI capabilities, such as image generation, prompting techniques, and audio/video tools.

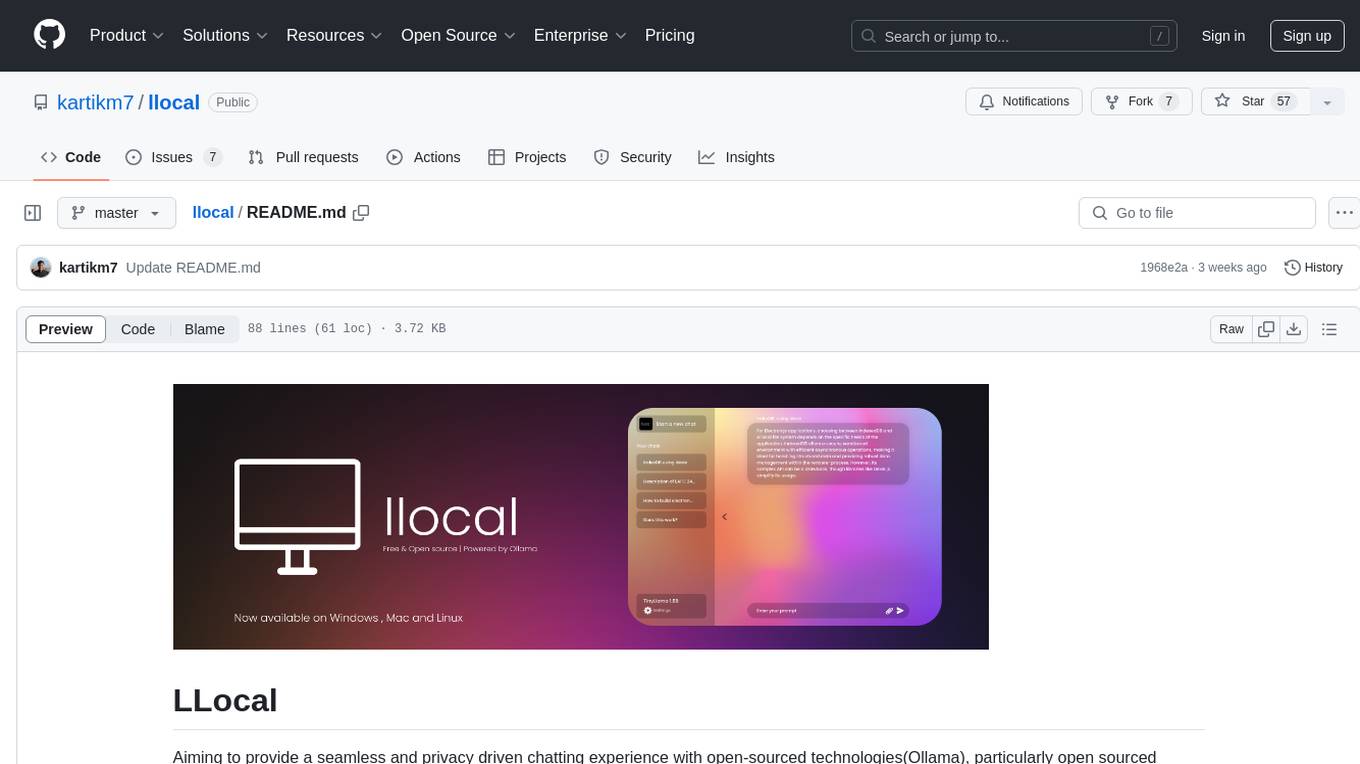

llocal

LLocal is an Electron application focused on providing a seamless and privacy-driven chatting experience using open-sourced technologies, particularly open-sourced LLM's. It allows users to store chats locally, switch between models, pull new models, upload images, perform web searches, and render responses as markdown. The tool also offers multiple themes, seamless integration with Ollama, and upcoming features like chat with images, web search improvements, retrieval augmented generation, multiple PDF chat, text to speech models, community wallpapers, lofi music, speech to text, and more. LLocal's builds are currently unsigned, requiring manual builds or using the universal build for stability.

generative-ai-js

Generative AI JS is a JavaScript library that provides tools for creating generative art and music using artificial intelligence techniques. It allows users to generate unique and creative content by leveraging machine learning models. The library includes functions for generating images, music, and text based on user input and preferences. With Generative AI JS, users can explore the intersection of art and technology, experiment with different creative processes, and create dynamic and interactive content for various applications.

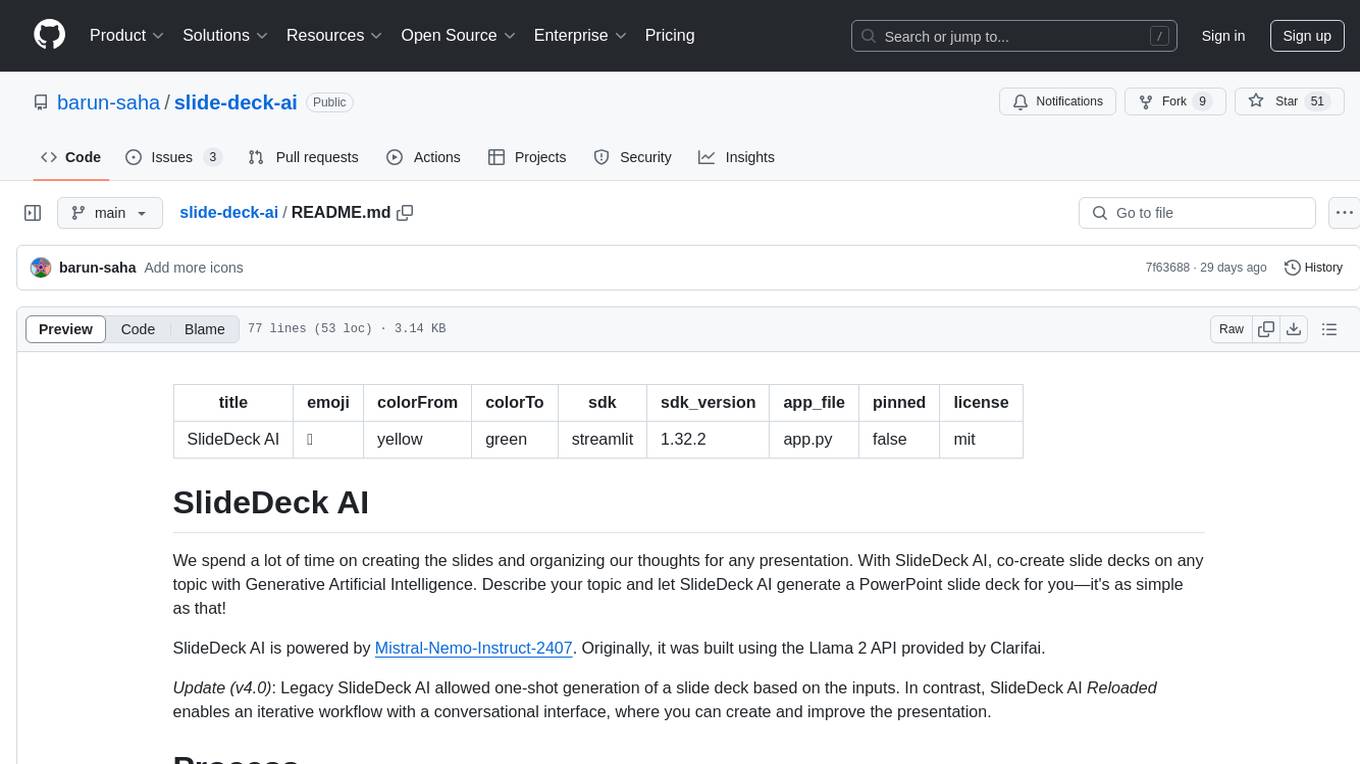

slide-deck-ai

SlideDeck AI is a tool that leverages Generative Artificial Intelligence to co-create slide decks on any topic. Users can describe their topic and let SlideDeck AI generate a PowerPoint slide deck, streamlining the presentation creation process. The tool offers an iterative workflow with a conversational interface for creating and improving presentations. It uses Mistral Nemo Instruct to generate initial slide content, searches and downloads images based on keywords, and allows users to refine content through additional instructions. SlideDeck AI provides pre-defined presentation templates and a history of instructions for users to enhance their presentations.

For similar tasks

blinkshot

BlinkShot is an open source real-time AI image generator powered by Flux through Together.ai. It utilizes Flux Schnell from BFL for the image model, Together AI for inference, Next.js app router with Tailwind for the frontend, Helicone for observability, and Plausible for website analytics. Users can clone the repository, add their Together AI API key, and run the app locally to generate AI images. Future tasks include adding a call-to-action to fork the code on GitHub, implementing a download button on hover, allowing users to adjust resolutions and steps, adding an app description to the footer, and introducing themes.

qrbtf

QRBTF is the world's first and best AI & parametric QR code generator developed by Latent Cat. It features original AI models trained on a large number of images for fast and high-quality inference. The parametric part is open source, offering various styles without requiring a backend. Users can create beautiful QR codes by entering a URL or text, selecting a style, adjusting parameters, and downloading in SVG or JPG format. The website supports English and Chinese, with contributions for i18n in other languages welcome. QRBTF also provides a React component for integration into projects.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.