gurubase

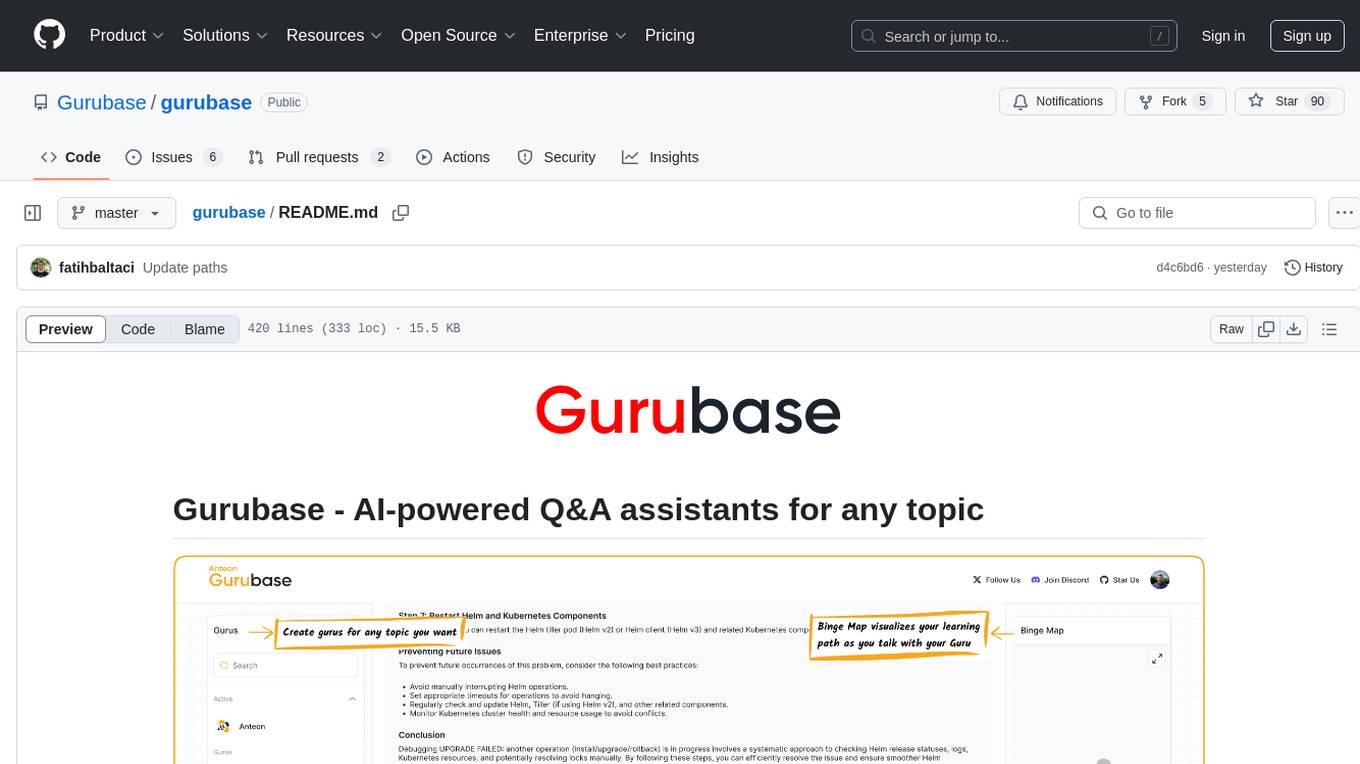

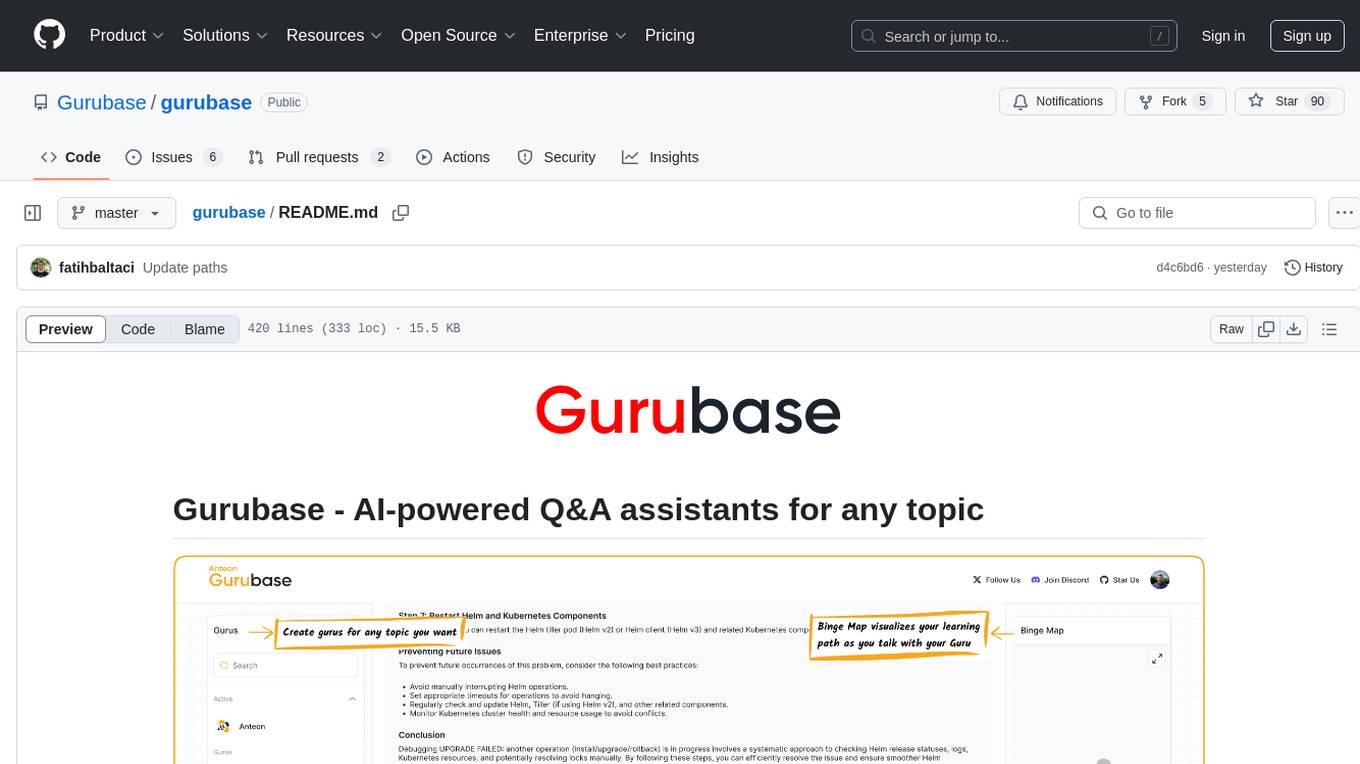

Gurubase lets you add an "Ask AI" button to your technical docs, turning your content into a searchable Q&A assistant. It uses web pages, PDFs, YouTube videos, and GitHub repos as sources to generate instant, accurate answers with references. Deploy it via Slack, Discord, or a web widget.

Stars: 576

Gurubase is an open-source RAG system that enables users to create AI-powered Q&A assistants ('Gurus') for various topics by integrating web pages, PDFs, YouTube videos, and GitHub repositories. It offers advanced LLM-based question answering, accurate context-aware responses through the RAG system, multiple data sources integration, easy website embedding, creation of custom AI assistants, real-time updates, personalized learning paths, and self-hosting options. Users can request Guru creation, manage existing Gurus, update datasources, and benefit from the system's features for enhancing user engagement and knowledge sharing.

README:

- What is Gurubase

- Features

- Quick Install

- How to Create a Guru

- How to Claim a Guru

- Showcase Your Guru

- How to Update Datasources

- Contributing

- License

- Help

- Used By

- Frequently Asked Questions

Gurubase is an open-source RAG system that lets you create AI-powered Q&A assistants ("Gurus") for any topic or need. Create a new Guru by adding:

- 📄 Webpages

- 📑 PDFs

- 🎥 YouTube videos

- 💻 GitHub repositories

Start asking questions directly on Gurubase, or embed it on your website to let your users ask questions about your product. It's already being used by hundreds of open-source repositories. You can also install the entire system on your own server; see INSTALL.md for instructions on how to self-host Gurubase.

- 🤖 AI-Powered Q&A: Advanced LLM-based question answering, including an instant evaluation mechanism to minimize hallucination

- 🔄 RAG System: Retrieval Augmented Generation for accurate, context-aware responses

- 📚 Multiple Data Sources: Add web pages, PDFs, videos, and GitHub repositories as data sources for your Guru.

- 🔌 Easy Integration:

  - Website Widget for embedding on your site

  - Slack Bot for asking questions in Slack

  - Discord Bot for asking questions in Discord

- 🎯 Custom Gurus: Create specialized AI assistants for specific topics

- 🔄 Real-time Updates: Keep the data sources up to date by reindexing them with one click

- ⛬ Binge: Visualize your learning path while talking with a Guru. You can navigate through it and create a personalized path

- 🛠 Self-hosted Option: Full control over your deployment. Install the entire system on your servers

If you prefer not to use Gurubase.io, you can install the entire system on your own servers.

curl -fsSL https://raw.githubusercontent.com/Gurubase/gurubase/refs/heads/master/gurubase.sh -o gurubase.sh

bash gurubase.sh

See INSTALL.md for detailed installation instructions, including upgrading and uninstalling.

Currently, only the Gurubase team can create a Guru on Gurubase.io. Please open an issue on this repository with the title "Guru Creation Request" and include the GitHub repository link in the issue content. We prioritize Guru creation requests from the maintainers of the tools. Please mention whether you are the maintainer of the tool. If you are not the maintainer, it would be helpful to obtain the maintainer's permission before opening a creation request for the tool.

Although you can't create a Guru on Gurubase.io yourself, you can manage an existing one once you claim it. For example, you can add, remove, or reindex its datasources. To claim a Guru, you must have a Gurubase account and be one of the tool's maintainers. Please open an issue with the title "Guru Claim Request". Include the link to the Guru (e.g., https://gurubase.io/g/anteon), your Gurubase username, and a link proving you are one of the maintainers of the tool, such as a PR merged by you.

Add an "Ask AI" widget to your website by importing a small JS script. For an example, check the Anteon docs.

Like hundreds of GitHub repositories, add a badge to your README to guide your users to learn about your tool on Gurubase.

[Gurubase badge example](https://gurubase.io/g/opencost)

Gurubase also offers a Slack bot that allows you to ask questions in your Slack channels. Learn more about it here.

Gurubase also offers a Discord bot that allows you to ask questions in your Discord channels. Learn more about it here.

Datasources can include your tool's documentation webpages, YouTube videos, or PDF files. You can add new ones, remove existing ones, or reindex them. Reindexing ensures your Guru is updated based on changes to the indexed datasources. For example, if you update your tool's documentation, you can reindex those pages so your Guru generates answers based on the latest data.

Once you claim your Guru, you will see your Gurus in the "My Gurus" section.

Click the Guru you want to update. On the edit page, click "Reindex" for the datasource you want to reindex.

You can also see the "Last Index Date" on the URL pages.

Note: GitHub repositories are reindexed automatically twice a day.
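To make the reindexing idea concrete, here is a minimal sketch of how a reindex step could skip unchanged datasources and track a "Last Index Date". It is an illustration only: the `Datasource` class, `fingerprint`, and `reindex` are hypothetical names, and hash-based change detection is an assumption, not a description of how Gurubase implements reindexing.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Datasource:
    """Hypothetical record for one indexed URL (not Gurubase's real model)."""
    url: str
    content_hash: str = ""
    last_index_date: Optional[datetime] = None


def fingerprint(content: str) -> str:
    """Return a stable SHA-256 fingerprint of the fetched content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def reindex(source: Datasource, fetched_content: str) -> bool:
    """Reindex `source` only if its content changed; return True if it did."""
    new_hash = fingerprint(fetched_content)
    if new_hash == source.content_hash:
        return False  # nothing changed, keep the existing index entry
    # A real system would re-chunk and re-embed the content at this point.
    source.content_hash = new_hash
    source.last_index_date = datetime.now(timezone.utc)
    return True
```

Calling `reindex` twice with the same content does work only the first time; the second call returns `False` and leaves `last_index_date` untouched.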

We welcome contributions to Gurubase! Please see our CONTRIBUTING.md file for guidelines on how to contribute, including code standards, testing requirements, and the pull request process.

Licensed under the Apache 2.0 License.

All the content generated by gurubase.io aligns with the license of the datasources used to generate answers. More details can be found on the Terms of Usage page, Section 2.

We prefer Discord for written communication. Join our channel! To stay updated on new features, you can follow us on X, Mastodon, and Bluesky.

For official documentation, visit Gurubase Documentation.

Gurubase currently hosts hundreds of Gurus, and it grows every day. Here are some repositories that showcase their Gurus in their READMEs or documentation.

Gurubase is an open-source RAG system that creates AI-powered Q&A assistants ("Gurus"). It processes various data sources like web pages, videos, PDFs, and GitHub code repositories to provide context-aware answers.

Gurubase uses a modern RAG architecture:

- Indexing: Processes and chunks data sources

- Embedding: Converts text into vector representations

- Storage: Stores vectors in Milvus for efficient similarity search

- Retrieval: Finds relevant context when questions are asked

- Generation: Uses LLMs to generate accurate answers based on retrieved context

- Evaluation: Evaluates the contexts to prevent hallucinations

Check the ARCHITECTURE.md file for more details.
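The stages above can be sketched end to end in a few lines of Python. This is a toy illustration under loud assumptions: the embedding is a bag-of-words counter rather than a learned model, `ToyVectorStore` is an in-memory stand-in for Milvus, the "generation" step just concatenates context instead of calling an LLM, and the `min_score` threshold is an arbitrary choice. None of these names come from the Gurubase codebase.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 12) -> list[str]:
    """Indexing: split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Embedding: toy bag-of-words vector (real systems use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Storage: in-memory stand-in for a vector database such as Milvus."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.rows.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[tuple[float, str]]:
        """Retrieval: return the k chunks most similar to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), text) for vec, text in self.rows]
        return sorted(scored, key=lambda row: row[0], reverse=True)[:k]


def answer(store: ToyVectorStore, question: str, min_score: float = 0.2) -> str:
    hits = store.search(question)
    # Evaluation: discard weakly related contexts instead of guessing.
    context = [text for score, text in hits if score >= min_score]
    if not context:
        return "I don't know."
    # Generation: placeholder for an LLM call that would use this context.
    return "Based on the sources: " + " ".join(context)
```

A short usage example: index a document with `for c in chunk(doc, size=8): store.add(c)`, then call `answer(store, "Does Gurubase use Milvus for vectors?")`. Questions with no overlapping context fall through to "I don't know.", which is the point of the evaluation step.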

Gurubase supports multiple data source types:

- 📄 Web Pages

- 📑 PDF Documents

- 🎥 YouTube Videos

- 💻 GitHub repositories for codebase indexing

- More formats coming soon! Open an issue if you want a new data source type.

Gurubase follows a microservices architecture, deployed with Docker Compose.

- Frontend: Next.js 14 with TailwindCSS

- Backend: Django REST framework

- Vector Store: Milvus

- Message Queue: RabbitMQ

- Cache: Redis

- Database: PostgreSQL

See ARCHITECTURE.md for details.

Minimum requirements:

- CPU: 4 cores

- RAM: 8GB

- Storage: 10GB SSD

- OS: Linux or macOS (Windows via WSL2)

See INSTALL.md for detailed requirements.
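Before self-hosting, you may want a quick preflight check against these minimums. The sketch below is not part of Gurubase; `check_requirements` and `detect_cpu_and_disk` are hypothetical helpers. RAM is passed in by hand because the Python standard library has no portable call for it, and treating "10GB SSD" as 10 GB of free disk space is an assumption.

```python
import os
import shutil

# Minimums taken from the list above.
MIN_CPU_CORES = 4
MIN_RAM_GB = 8
MIN_FREE_DISK_GB = 10


def check_requirements(cpu_cores: int, ram_gb: float, free_disk_gb: float) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU: {cpu_cores} cores, need {MIN_CPU_CORES}")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.1f} GB, need {MIN_RAM_GB} GB")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"Disk: {free_disk_gb:.1f} GB free, need {MIN_FREE_DISK_GB} GB")
    return problems


def detect_cpu_and_disk(path: str = ".") -> tuple[int, float]:
    """Detect logical CPU count and free disk space (GiB) at `path`."""
    cores = os.cpu_count() or 0
    free_gb = shutil.disk_usage(path).free / 1024**3
    return cores, free_gb
```

For example, `cores, free = detect_cpu_and_disk()` followed by `check_requirements(cores, 8, free)` reports any shortfall for a machine you know has 8 GB of RAM.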

- You can use it on Gurubase.io (or on Gurubase Self-hosted if you've installed it on your servers).

- You can embed an Ask AI widget into your website.

- You can add a Gurubase badge to your GitHub repository README.

- You can add a Slack bot to your Slack workspace to ask questions in your channels.

- You can add a Discord bot to your Discord server to ask questions in your channels.

- You can use it over API to integrate with your own applications.

Binge lets you:

- Create personalized learning paths on any Guru.

- Ask follow-up questions to dive deeper into the content.

- Visualize your learning path on the Binge Map and navigate it easily and efficiently.

- Save your progress to pick up where you left off.

- Manual reindexing is available anytime. See the How to Update Datasources section to learn more.

- GitHub repositories are reindexed automatically twice a day.

- Periodic reindexing for all data sources will be available soon.

Yes, Gurubase offers a public API to interact with your Gurus.
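As a rough idea of what calling such an API from your own application could look like, the sketch below builds (but does not send) an HTTP request with Python's standard library. Everything specific in it is an assumption for illustration: the base URL `https://gurubase.example/api/`, the `/{guru_slug}/answer/` path, the `x-api-key` header, and the `question` field are hypothetical placeholders. Check the official Gurubase documentation for the real endpoints and authentication scheme.

```python
import json
import urllib.request


def build_question_request(
    base_url: str, guru_slug: str, api_key: str, question: str
) -> urllib.request.Request:
    """Build a POST request asking `question` of the Guru `guru_slug`.

    The URL layout, header name, and payload shape are hypothetical.
    """
    url = f"{base_url.rstrip('/')}/{guru_slug}/answer/"  # assumed path
    body = json.dumps({"question": question}).encode("utf-8")  # assumed payload
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "x-api-key": api_key,  # assumed header name
        },
    )
```

Once the real endpoint is known, the request can be sent with `urllib.request.urlopen(req)`. Keeping request construction separate from sending makes it easy to unit-test without network access.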

- Code is licensed under Apache 2.0

- All data is stored locally in self-hosted deployments including the API keys

- No data is sent to external servers except LLM API calls

- Optional telemetry can be disabled

Gurubase.io is a hosted version of Gurubase. It's a great way to get started with Gurubase without the hassle of self-hosting.


Alternative AI tools for gurubase

Similar Open Source Tools


Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

SurfSense

SurfSense is a tool designed to help users save and organize content from the internet into a personal Knowledge Graph. It allows users to capture web browsing sessions and webpage content using a Chrome extension, enabling easy retrieval and recall of saved information. SurfSense offers features like powerful search capabilities, natural language interaction with saved content, self-hosting options, and integration with GraphRAG for meaningful content relations. The tool eliminates the need for web scraping by directly reading data from the DOM, making it a convenient solution for managing online information.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

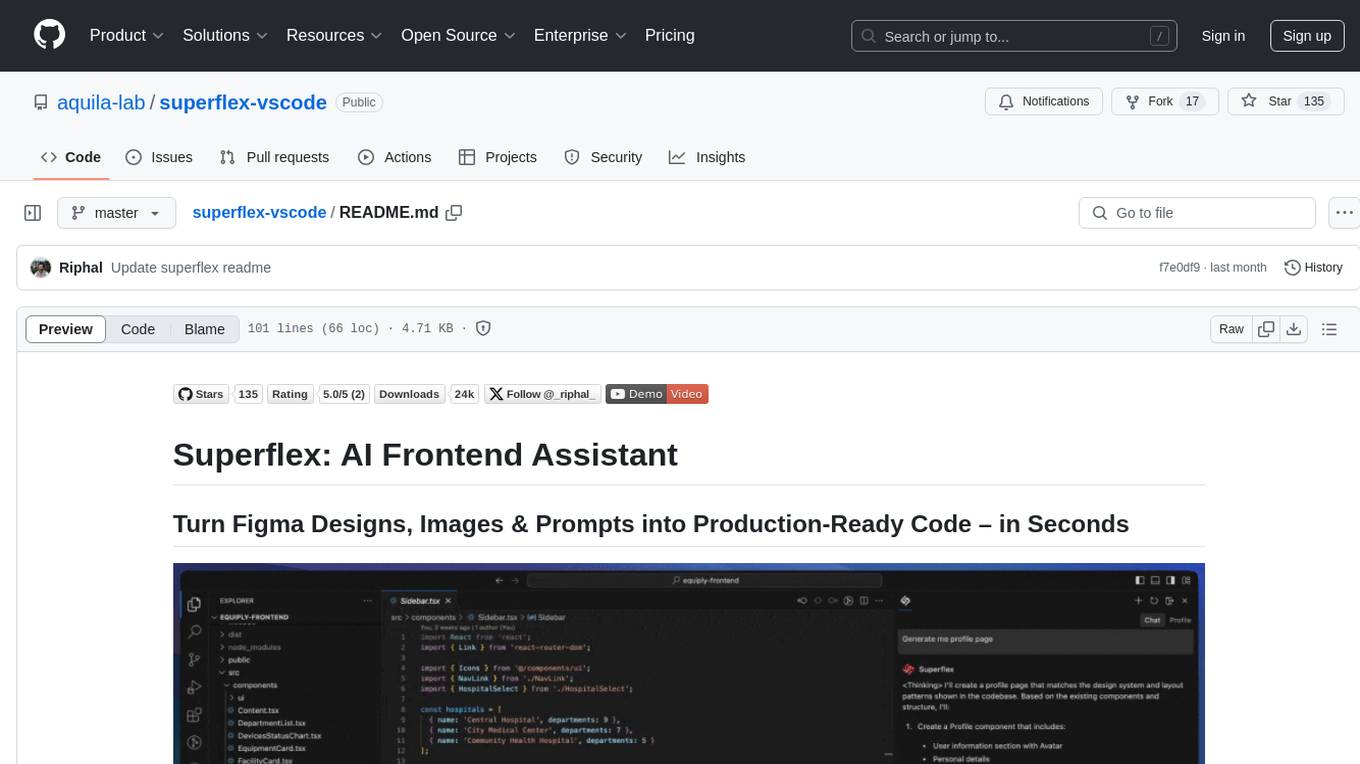

superflex-vscode

Superflex is an AI frontend assistant that streamlines frontend development by converting Figma designs, images, and prompts into production-ready code in seconds. It ensures design standards and coding style are maintained, offering features like generating entire page layouts from Figma, a new chat UI, enhanced usability with shortcuts and profiles, and the ability to add code snippets or files to the chat context seamlessly. Superflex saves time by automating repetitive coding tasks, promotes code consistency, and is beginner-friendly for designers or developers new to front-end work.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

ai-driven-dev-community

AI Driven Dev Community is a repository aimed at helping developers become more efficient by utilizing AI tools in their daily coding tasks. It provides a collection of tools, prompts, snippets, and agents for developers to integrate AI into their workflow. The repository is regularly updated with new resources and focuses on best practices for using AI in development work. Users can find tools like Espanso, ChatGPT, GitHub Copilot, and VSCode recommended for enhancing their coding experience. Additionally, the repository offers guidance on customizing AI for developers, installing AI toolbox for software engineers, and contributing to the community through easy steps.

kestra

Kestra is an open-source event-driven orchestration platform that simplifies building scheduled and event-driven workflows. It offers Infrastructure as Code best practices for data, process, and microservice orchestration, allowing users to create reliable workflows using YAML configuration. Key features include everything as code with Git integration, event-driven and scheduled workflows, rich plugin ecosystem for data extraction and script running, intuitive UI with syntax highlighting, scalability for millions of workflows, version control friendly, and various features for structure and resilience. Kestra ensures declarative orchestration logic management even when workflows are modified via UI, API calls, or other methods.

whispering-ui

Whispering Tiger UI is a Native-UI tool designed to control the Whispering Tiger application, a free and Open-Source tool that can listen/watch to audio streams or in-game images on your machine and provide transcription or translation to a web browser using Websockets or over OSC. It features a Native-UI for Windows, easy access to all Whispering Tiger features including transcription, translation, text-to-speech, and in-game image recognition. The tool supports loopback audio device, configuration saving/loading, plugin support for additional features, and auto-update functionality. Users can create profiles, configure audio devices, select A.I. devices for speech-to-text, and install/manage plugins for extended functionality.

obsidian-smart-composer

Smart Composer is an Obsidian plugin that enhances note-taking and content creation by integrating AI capabilities. It allows users to efficiently write by referencing their vault content, providing contextual chat with precise context selection, multimedia context support for website links and images, document edit suggestions, and vault search for relevant notes. The plugin also offers features like custom model selection, local model support, custom system prompts, and prompt templates. Users can set up the plugin by installing it through the Obsidian community plugins, enabling it, and configuring API keys for supported providers like OpenAI, Anthropic, and Gemini. Smart Composer aims to streamline the writing process by leveraging AI technology within the Obsidian platform.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload LoRAs, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

upscayl

Upscayl is a free and open-source AI image upscaler that uses advanced AI algorithms to enlarge and enhance low-resolution images without losing quality. It is a cross-platform application built with the Linux-first philosophy, available on all major desktop operating systems. Upscayl utilizes Real-ESRGAN and Vulkan architecture for image enhancement, and its backend is fully open-source under the AGPLv3 license. It is important to note that a Vulkan compatible GPU is required for Upscayl to function effectively.

uxie

Uxie is a PDF reader app designed to revolutionize the learning experience. It offers features such as annotation, note-taking, collaboration tools, integration with LLM for enhanced learning, and flashcard generation with LLM feedback. It is built using Next.js, tRPC, Zod, TypeScript, Tailwind CSS, React Query, React Hook Form, Supabase, Prisma, and various other tools. Users can take notes, summarize PDFs, chat and collaborate with others, create custom blocks in the editor, and use AI-powered text autocompletion. The tool allows users to craft simple flashcards, test knowledge, answer questions, and receive instant feedback through AI evaluation.

openllmetry

OpenLLMetry is a set of extensions built on top of OpenTelemetry that gives you complete observability over your LLM application. Because it uses OpenTelemetry under the hood, it can be connected to your existing observability solutions - Datadog, Honeycomb, and others. It's built and maintained by Traceloop under the Apache 2.0 license. The repo contains standard OpenTelemetry instrumentations for LLM providers and Vector DBs, as well as a Traceloop SDK that makes it easy to get started with OpenLLMetry, while still outputting standard OpenTelemetry data that can be connected to your observability stack. If you already have OpenTelemetry instrumented, you can just add any of our instrumentations directly.


For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt and provides a complete LLM stack: [fastgpt] + [one-api] + [Xinference]. For bilibili live streams, it can reply to bullet comments and greet viewers who enter the room. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. It can paint with stable-diffusion-webui and output the result to the OBS live room, with NSFW image detection through public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy or VPN), and image search can also use Baidu (no proxy required). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic swaying during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting where the AI judges the content automatically.