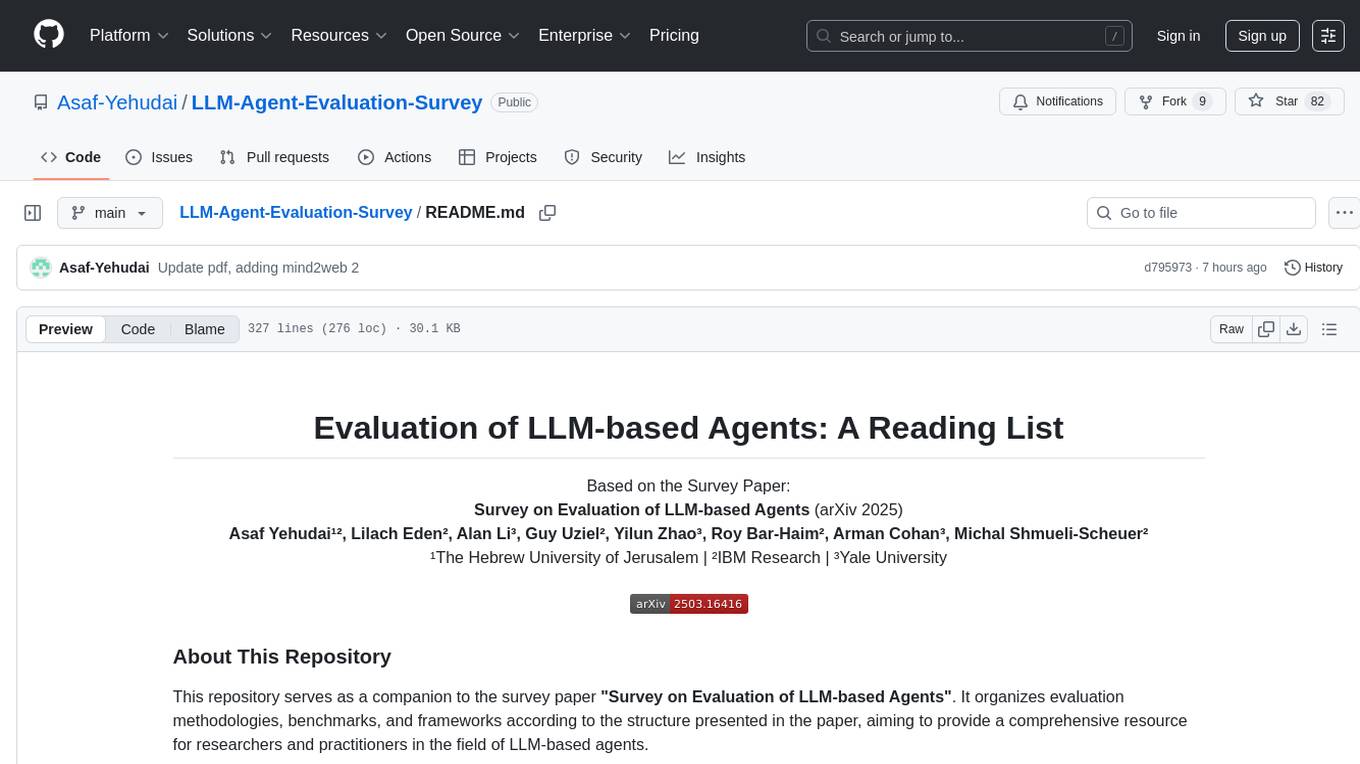

LLM-Agent-Evaluation-Survey

Top papers related to LLM-based agent evaluation

Stars: 82

LLM-Agent-Evaluation-Survey is a curated list of papers, benchmarks, and frameworks for evaluating LLM-based agents. It is the companion repository to the survey paper "Survey on Evaluation of LLM-based Agents" (arXiv 2025) and follows that paper's structure: fundamental agent capabilities (planning, tool use, self-reflection, memory), application-specific domains (web, software engineering, scientific, conversational), generalist agent evaluation, and evaluation frameworks.

README:

Survey on Evaluation of LLM-based Agents (arXiv 2025)

¹The Hebrew University of Jerusalem | ²IBM Research | ³Yale University

This repository serves as a companion to the survey paper "Survey on Evaluation of LLM-based Agents". It organizes evaluation methodologies, benchmarks, and frameworks according to the structure presented in the paper, aiming to provide a comprehensive resource for researchers and practitioners in the field of LLM-based agents.

The selection criteria focus on works discussed within the survey, covering:

- Fundamental Agent Capabilities: Planning, Tool Use, Self-Reflection, Memory.

- Application-Specific Domains: Web, Software Engineering, Scientific, Conversational Agents.

- Generalist Agent Evaluation.

- Evaluation Frameworks.

Our goal is to map the rapidly evolving landscape of agent evaluation, highlight key trends, and identify current limitations as discussed in the survey.

- 🎁 Surveys

- 🔧 Agent Capabilities Evaluation (§2)

- 🎯 Application-Specific Agent Evaluation (§3)

- 🌍 Generalist Agents Evaluation (§4)

- 🏗️ Frameworks for Agent Evaluation (§5)

- 🎮 Gym-like Environments (§5.1)

- 📈 Discussion (§6)

- ➕ Adding a Benchmark / Paper

- 🔗 Other Relevant Repositories

- 📝 Citation

- A Survey on the Safety and Security Threats of Computer-Using Agents: JARVIS or Ultron?, arXiv 2025 [paper]

- Survey on Evaluation of LLM-based Agents, arXiv 2025 [paper] (This work)

- The Rise and Potential of Large Language Model Based Agents: A Survey, arXiv 2023 [paper]

- A Survey on Large Language Model based Autonomous Agents, arXiv 2023 [paper]

- Understanding the planning of LLM agents: A survey, arXiv 2024 [paper]

- A Survey on the Memory Mechanism of Large Language Model based Agents, arXiv 2024 [paper]

- AQUA-RAT: Program Induction by Rationale Generation: Learning to Solve and Explain Algebraic Word Problems, ACL 2017 [paper]

- HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering, EMNLP 2018 [paper]

- Think you have Solved Question Answering? Try ARC, the AI2 Reasoning Challenge, arXiv 2018 [paper]

- MultiRC: Looking Beyond the Surface: A Challenge Set for Reading Comprehension over Multiple Sentences, NAACL 2018 [paper]

- StrategyQA: Did Aristotle Use a Laptop? A Question Answering Benchmark with Implicit Reasoning Strategies, TACL 2021 [paper]

- Measuring Mathematical Problem Solving With the MATH Dataset, NeurIPS 2021 [paper]

- GSM8K: Training Verifiers to Solve Math Word Problems, arXiv 2021 [paper]

- FOLIO: Natural Language Reasoning with First-Order Logic, EMNLP 2022 Findings [paper]

- Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them, arXiv 2022 [paper]

- Game of 24: Tree of Thoughts: Deliberate Problem Solving with Large Language Models, NeurIPS 2023 [paper]

- MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback, arXiv 2023 [paper]

- PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change, NeurIPS 2023 [paper]

- MuSR: Testing the Limits of Chain-of-Thought with Multistep Soft Reasoning, arXiv 2023 [paper]

- ToolEmu: Identifying the Risks of LM Agents with an LM-Emulated Sandbox, arXiv 2023 [paper]

- AutoPlanBench: Automating the Generation of Prompts for LLM-based Action Choice in PDDL Planning, arXiv 2023 [paper]

- FlowBench: Revisiting and Benchmarking Workflow-Guided Planning for LLM-based Agents, arXiv 2024 [paper]

- P-FOLIO: Evaluating and Improving Logical Reasoning with Abundant Human-Written Reasoning Chains, EMNLP 2024 Findings [paper]

- ACPBench: Reasoning about Action, Change, and Planning, arXiv 2024 [paper]

- Natural Plan: Benchmarking LLMs on Natural Language Planning, arXiv 2024 [paper]

- LogicBench: Towards Systematic Evaluation of Logical Reasoning Ability of Large Language Models, ACL 2024 [paper]

- APIBench: Revisiting, Benchmarking and Exploring API Recommendation: How Far Are We?, arXiv 2021 [paper]

- ToolBench: ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, arXiv 2023 [paper]

- ToolAlpaca: Generalized Tool Learning for Language Models with 3000 Simulated Cases, arXiv 2023 [paper]

- API-Bank: A Benchmark for Tool-Augmented LLMs, EMNLP 2023 [paper]

- NexusRaven-V2: Surpassing GPT-4 for Zero-Shot Function Calling, Blog Post 2023 [link]

- RestGPT: Connecting Large Language Models with Real-World RESTful APIs, arXiv 2023 [paper]

- BFCL: Berkeley Function Calling Leaderboard, Blog Post 2024 [link]

- Seal-Tools: Self-Instruct Tool Learning Dataset for Agent Tuning and Detailed Benchmark, arXiv 2024 [paper]

- ToolSandbox: A Stateful, Conversational, Interactive Evaluation Benchmark for LLM Tool Use Capabilities, arXiv 2024 [paper]

- APIGen: Automated Pipeline for Generating Verifiable and Diverse Function-Calling Datasets, NeurIPS 2024 [paper]

- StableToolBench: Towards Stable Large-scale Benchmarking on Tool Learning of Large Language Models, arXiv 2024 [paper]

- NESTFUL: A Benchmark for Evaluating LLMs on Nested Sequences of API Calls, arXiv 2024 [paper]

- API-BLEND: A Comprehensive Corpora for Training and Benchmarking API LLMs, arXiv 2024 [paper]

- ComplexFuncBench: Exploring Multi-step and Constrained Function Calling under Long-Context Scenario, arXiv 2025 [paper]

- MiniWoB++: Reinforcement Learning on Web Interfaces Using Workflow-Guided Exploration, ICLR 2019 [paper]

- ALFWorld: Aligning Text and Embodied Environments for Interactive Learning, ICLR 2021 [paper]

- MedMCQA: A Large-scale Multi-Subject Multi-Choice Dataset for Medical Domain Question Answering, arXiv 2022 [paper]

- LLF-Bench: Benchmark for Interactive Learning from Language Feedback, arXiv 2023 [paper]

- Reflexion: Language Agents with Verbal Reinforcement Learning, NeurIPS 2023 [paper]

- AGIEval: A Human-Centric Benchmark for Evaluating Foundation Models, arXiv 2023 [paper]

- Large Language Models Cannot Self-Correct Reasoning Yet, arXiv 2023 (Huang et al.) [paper]

- LLM-Evolve: Evaluation for LLM's Evolving Capability on Benchmarks, EMNLP 2024 [paper]

- Reflection-Bench: Probing AI Intelligence with Reflection, arXiv 2024 [paper]

- Self-reflection makes Large Language Models safer, less biased, and ideologically neutral, arXiv 2024 (Liu et al.) [paper]

- NarrativeQA: The NarrativeQA Reading Comprehension Challenge, TACL 2018 [paper]

- QMSum: A New Benchmark for Query-based Multi-domain Meeting Summarization, NAACL 2021 [paper]

- QuALITY: Question Answering with Long Input Texts, Yes!, NAACL 2022 Findings [paper]

- MemGPT: Towards LLMs as Operating Systems, arXiv 2023 [paper]

- Reflexion: Language Agents with Verbal Reinforcement Learning, NeurIPS 2023 [paper]

- RAISE: From LLM to Conversational Agent: A Memory Enhanced Architecture with Fine-Tuning of Large Language Models, arXiv 2024 [paper]

- ReadAgent: A Human-Inspired Reading Agent with Gist Memory of Very Long Contexts, arXiv 2024 [paper]

- LoCoMo: Evaluating Very Long-Term Conversational Memory of LLM Agents, arXiv 2024 [paper]

- StreamBench: Towards Benchmarking Continuous Improvement of Language Agents, arXiv 2024 [paper]

- LTMbenchmark: Beyond Prompts: Dynamic Conversational Benchmarking of Large Language Models, arXiv 2024 [paper]

- KARMA: Augmenting Embodied AI Agents with Long-and-short Term Memory Systems, arXiv 2024 [paper]

- A-Mem: Agentic Memory for LLM Agents, arXiv 2025 [paper]

- MiniWob: World of Bits: An Open-Domain Platform for Web-Based Agents, ICML 2017 [paper]

- MiniWoB++: Reinforcement Learning on Web Interfaces Using Workflow-Guided Exploration, ICLR 2019 [paper]

- WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents, NeurIPS 2022 [paper]

- Mind2Web: Towards a Generalist Agent for the Web, NeurIPS 2023 [paper]

- WebArena: A Realistic Web Environment for Building Autonomous Agents, ICLR 2024 (arXiv 2023) [paper]

- WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models, arXiv 2024 [paper]

- VisualWebArena: Evaluating Multimodal Agents on Realistic Visual Web Tasks, arXiv 2024 [paper]

- WebLinX: Real-World Website Navigation with Multi-Turn Dialogue, arXiv 2024 [paper]

- WorkArena: How Capable Are Web Agents at Solving Common Knowledge Work Tasks?, arXiv 2024 [paper]

- MMInA: Benchmarking Multihop Multimodal Internet Agents, arXiv 2024 [paper]

- WebCanvas: Benchmarking Web Agents in Online Environments, arXiv 2024 [paper]

- WorkArena++: Towards Compositional Planning and Reasoning-Based Common Knowledge Work Tasks, NeurIPS 2024 [paper]

- AssistantBench: Can Web Agents Solve Realistic and Time-Consuming Tasks?, arXiv 2024 [paper]

- ST-WebAgentBench: A Benchmark for Evaluating Safety and Trustworthiness in Web Agents, arXiv 2024 [paper]

- VideoWebArena: Evaluating Long Context Multimodal Agents with Video Understanding Web Tasks, arXiv 2025 [paper]

- BEARCUBS: A benchmark for computer-using web agents, arXiv 2025 [paper]

- Online-Mind2Web: An Illusion of Progress? Assessing the Current State of Web Agents, arXiv 2025 [paper]

- BrowseComp: A Simple Yet Challenging Benchmark for Browsing Agents, arXiv 2025 [paper]

- Mind2Web 2: Evaluating Agentic Search with Agent-as-a-Judge, arXiv 2025 [paper]

- HumanEval: Evaluating Large Language Models Trained on Code, arXiv 2021 [paper]

- SWE-bench: Can Language Models Resolve Real-World GitHub Issues?, NeurIPS 2023 [paper]

- AgentBench: Evaluating LLMs as Agents, ICLR 2024 (arXiv 2023) [paper]

- SWE-bench Verified: OpenAI Blog Post 2024 [link]

- SWE-bench Lite: SWE-bench website (c. 2024) [link]

- SWT-Bench: Testing and Validating Real-World Bug-Fixes with Code Agents, NeurIPS 2024 [paper]

- SWE-bench+: Enhanced Coding Benchmark for LLMs, arXiv 2024 [paper]

- SWE-bench Multimodal: Do AI Systems Generalize to Visual Software Domains?, arXiv 2024 [paper]

- TDD-Bench Verified: Can LLMs Generate Tests for Issues Before They Get Resolved?, arXiv 2024 [paper]

- Windows Agent Arena: Evaluating Multi-Modal OS Agents at Scale, arXiv 2024 [paper]

- IT-Bench: Evaluating AI Agents Across Diverse Real-World IT Automation Tasks, arXiv 2025 [paper]

- Cybench: A Framework for Evaluating Cybersecurity Capabilities and Risks of Language Models, ICLR 2025 [paper]

- SWELancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?, arXiv 2025 [paper]

- CodeARC: Benchmarking Reasoning Capabilities of LLM Agents for Inductive Program Synthesis, arXiv 2025 [paper]

- QASPER: A Dataset of Information-Seeking Questions and Answers Anchored in Research Papers, NAACL 2021 [paper]

- MS²: Multi-Document Summarization of Medical Studies, EMNLP 2021 Findings [paper]

- ScienceQA: Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering, NeurIPS 2022 [paper]

- ScienceWorld: Is Your Agent Smarter Than a 5th Grader?, EMNLP 2022 [paper]

- DiscoveryWorld: A Virtual Environment for Developing and Evaluating Automated Scientific Discovery Agents, arXiv 2024 [paper]

- LAB-Bench: Measuring Capabilities of Language Models for Biology Research, arXiv 2024 [paper]

- SciCode: A Research Coding Benchmark Curated by Scientists, NeurIPS 2024 Datasets Track [paper]

- Can LLMs Generate Novel Research Ideas? A Large-Scale Human Study with 100+ NLP Researchers, ICLR 2025 (arXiv 2024) [paper]

- SUPER: Evaluating Agents on Setting Up and Executing Tasks from Research Repositories, arXiv 2024 [paper]

- CORE-Bench: Fostering the Credibility of Published Research through a Computational Reproducibility Agent Benchmark, arXiv 2024 [paper]

- ScienceAgentBench: Toward Rigorous Assessment of Language Agents for Data-Driven Scientific Discovery, arXiv 2024 [paper]

- AAAR-1.0: Assessing AI's Potential to Assist Research, arXiv 2024 [paper]

- MLGym-Bench: A New Framework and Benchmark for Advancing AI Research Agents, arXiv 2025 [paper]

- PaperBench: Evaluating AI's Ability to Replicate AI Research, arXiv 2025 [paper]

- MultiWOZ: A Large-Scale Multi-Domain Wizard-of-Oz Dataset for Task-Oriented Dialogue Modelling, EMNLP 2018 [paper]

- SMCalFlow: Task-Oriented Dialogue as Dataflow Synthesis, TACL 2020 [paper]

- ABCD: Action-Based Conversations Dataset: A Corpus for Building More In-Depth Task-Oriented Dialogue Systems, LREC 2022 [paper]

- τ-Bench: A Benchmark for Tool-Agent-User Interaction in Real-World Domains, arXiv 2024 [paper]

- ALMITA: Automated test generation to evaluate tool-augmented LLMs as conversational AI agents, GenBench @ EMNLP 2024 [paper]

- LTM-Benchmark: Beyond Prompts: Dynamic Conversational Benchmarking of Large Language Models, arXiv 2024 [paper]

- IntellAgent: A Multi-Agent Framework for Evaluating Conversational AI Systems, arXiv 2025 [paper]

- GAIA: A Benchmark for General AI Assistants, arXiv 2023 [paper]

- AgentBench: Evaluating LLMs as Agents, ICLR 2024 (arXiv 2023) [paper]

- OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web, ECCV 2024 [paper]

- OSWorld: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments, NeurIPS 2024 Datasets Track [paper]

- Galileo's Agent Leaderboard: HuggingFace Space (c. 2024) [link]

- AppWorld: A Controllable World of Apps and People for Benchmarking Interactive Coding Agents, ACL 2024 [paper]

- AndroidWorld: A Dynamic Benchmarking Environment for Autonomous Agents, arXiv 2024 [paper]

- CRMArena: Understanding the Capacity of LLM Agents to Perform Professional CRM Tasks in Realistic Environments, arXiv 2024 [paper]

- TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks, arXiv 2024 [paper]

- AgentBoard: An Analytical Evaluation Board of Multi-turn LLM Agents, NeurIPS 2024 [paper]

- HAL: Holistic Agent Leaderboard, HAL leaderboard 2025 [paper]

- Databricks Mosaic AI Agent Evaluation: [link]

- Galileo Agentic Evaluation: [link]

- Vertex AI Gen AI Evaluation: [link]

- LangSmith: [link]

- Langfuse: [link]

- Patronus AI: [link]

- LangChain AgentEvals: [link]

- Arize AI Evaluation: [link]

- W&B Weave: [link]

- Comet Opik: [link]

- BrowserGym: The BrowserGym Ecosystem for Web Agent Research [paper]

- MLGym: A New Framework and Benchmark for Advancing AI Research Agents [paper]

- SWE-Gym: Training Software Engineering Agents and Verifiers with SWE-Gym [paper]

- PersonaGym: Evaluating Persona Agents and LLMs [paper]

- RepoST: Scalable Repository-Level Coding Environment Construction with Sandbox Testing [paper]

(Refer to Section 6 in the paper for detailed discussion)

The field is moving beyond simplified, static environments towards benchmarks reflecting real-world complexity and increased difficulty.

Static benchmarks quickly become outdated. There is a trend towards adaptive benchmarks that incorporate live data or continuous updates to maintain relevance.

Evaluation is moving beyond coarse, end-to-end success metrics toward detailed, step-by-step analysis that can diagnose where an agent fails.

- WebCanvas: Benchmarking Web Agents in Online Environments (Measures key node completion) [paper]

- LangSmith: LangChain Evaluation Framework (Supports trajectory tracing) [link]

- Galileo Agentic Evaluation: (Introduces action advancement metric) [link]
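A step-level metric of this kind can be illustrated with a minimal sketch. The `Step` schema, the predicate-based key nodes, and the `key_node_completion` function below are hypothetical and written only for illustration; they are not the implementation used by WebCanvas, LangSmith, or Galileo.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Step:
    """One agent action in a trajectory (hypothetical, simplified schema)."""
    action: str


# A key node is a predicate that says whether a step reaches a required milestone.
KeyNode = Callable[[Step], bool]


def key_node_completion(trajectory: Sequence[Step], key_nodes: Sequence[KeyNode]) -> float:
    """Return the fraction of key nodes reached, in order, along the trajectory."""
    if not key_nodes:
        return 0.0
    reached = 0
    for step in trajectory:
        # Each step can satisfy at most the next pending key node.
        if reached < len(key_nodes) and key_nodes[reached](step):
            reached += 1
    return reached / len(key_nodes)


# Illustrative shopping task: the agent searches and adds to cart but never checks out.
trajectory = [Step("open_search"), Step("type:laptop"), Step("click:add_to_cart")]
key_nodes = [
    lambda s: s.action == "open_search",
    lambda s: s.action.startswith("type:"),
    lambda s: s.action == "click:checkout",
]

score = key_node_completion(trajectory, key_nodes)
task_succeeded = score == 1.0
print(f"key-node completion: {score:.2f}, end-to-end success: {task_succeeded}")
```

In this example the agent is credited with two of the three milestones even though the task as a whole fails, which is the kind of partial-progress signal an end-to-end success flag hides.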

There is increasing focus on measuring resource consumption (tokens, time, API calls) alongside performance.

- AI Agents That Matter: [paper]
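Reporting cost next to accuracy can be sketched as follows. This is an illustrative example only: the `RunRecord` fields, the `summarize` function, and the token price are assumptions made for the sketch, not values or code from the survey or the cited paper.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class RunRecord:
    """Outcome and resource usage of one agent run (hypothetical schema)."""
    success: bool
    tokens: int
    seconds: float
    api_calls: int


def summarize(runs: Sequence[RunRecord], usd_per_1k_tokens: float) -> Dict[str, float]:
    """Aggregate success rate together with average resource consumption."""
    if not runs:
        raise ValueError("at least one run is required")
    n = len(runs)
    successes = sum(r.success for r in runs)
    total_tokens = sum(r.tokens for r in runs)
    total_cost = total_tokens / 1000 * usd_per_1k_tokens
    return {
        "success_rate": successes / n,
        "mean_tokens": total_tokens / n,
        "mean_seconds": sum(r.seconds for r in runs) / n,
        "mean_api_calls": sum(r.api_calls for r in runs) / n,
        # Cost of all runs divided by the number of solved tasks.
        "usd_per_success": total_cost / successes if successes else float("inf"),
    }


# Two illustrative runs; the price per 1k tokens is an assumed placeholder.
runs = [
    RunRecord(success=True, tokens=2000, seconds=10.0, api_calls=4),
    RunRecord(success=False, tokens=6000, seconds=30.0, api_calls=12),
]
report = summarize(runs, usd_per_1k_tokens=0.01)
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

Dividing total cost by the number of successes, rather than by the number of runs, charges failed attempts to the tasks that were actually solved, so an agent that retries expensively does not look cheaper than it is.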

New methods aim to reduce reliance on manual annotation and enable continuous, large-scale evaluation.

- Synthetic Data Generation:

- Agent-as-a-Judge:

- Agent-as-a-Judge: Evaluate Agents with Agents [paper]

There is a growing need for benchmarks that specifically test safety, trustworthiness, robustness against adversarial inputs, and adherence to policies.

- AgentHarm: A Benchmark for Measuring Harmfulness of LLM Agents, ICLR 2025 [paper]

- ST-WebAgentBench: A Benchmark for Evaluating Safety and Trustworthiness in Web Agents, ICLR 2025 [paper]

- Multi-Agent Risks from Advanced AI, arXiv 2025 [paper]

- Evaluating Cultural and Social Awareness of LLM Web Agents, NAACL-findings 2025 [paper]

- Breaking ReAct Agents: Foot-in-the-Door Attack Will Get You In, NAACL 2025 [paper]

We aim to keep this list comprehensive and up-to-date within the scope of LLM-based Agent Evaluation, as covered in our survey. If you know of a relevant benchmark, evaluation framework, or significant paper that fits this focus and is missing from the list, we welcome your suggestions!

Contribution Guidelines:

- Relevance: Please ensure the work is directly related to the evaluation methodologies, benchmarks, frameworks, or core capabilities/applications as they pertain to assessing LLM-based agents.

- Information: Provide the full paper title, authors, publication venue/year (or arXiv link), and a direct link to the paper (DOI, arXiv, etc.).

- Justification (Optional but helpful): Briefly explain why the paper is relevant and where it might fit within the existing structure.

How to Suggest:

- Preferred Method: Open a GitHub Issue:

  - Click on the "Issues" tab of this repository.

  - Create a "New Issue".

  - Use a descriptive title (e.g., "Suggestion: Add [Benchmark/Paper Name]").

  - Include the information requested above in the issue description.

- Alternative: Submit a Pull Request:

  - If you are comfortable editing the README.md file directly, you can fork the repository, add the paper following the existing format, and submit a Pull Request. Please ensure your addition is placed in the appropriate section.

We appreciate your contributions to making this a valuable resource for the community!

While this repository mirrors our survey's scope, other excellent repositories cover LLM Agents more broadly or from different angles:

General LLM Agent Papers:

- AGI-Edgerunners/LLM-Agents-Papers

- zjunlp/LLMAgentPapers

- Paitesanshi/LLM-Agent-Survey

- woooodyy/llm-agent-paper-list

- xinzhel/llm-agent-survey

Specific Focus Repositories:

- LLM-Search (Search/Inference)

- nuster1128/LLM_Agent_Memory_Survey (Memory)

- teacherpeterpan/self-correction-llm-papers (Self-Correction)

- git-disl/awesome-LLM-game-agent-papers (Gaming)

If you find this survey or repository helpful, please cite the paper:

@misc{yehudai2025survey,
  title={Survey on Evaluation of LLM-based Agents},
  author={Asaf Yehudai and Lilach Eden and Alan Li and Guy Uziel and Yilun Zhao and Roy Bar-Haim and Arman Cohan and Michal Shmueli-Scheuer},
  year={2025},
  eprint={2503.16416},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2503.16416}
}

Alternative AI tools for LLM-Agent-Evaluation-Survey

Similar Open Source Tools

LLM-Agent-Evaluation-Survey

LLM-Agent-Evaluation-Survey is a tool designed to gather feedback and evaluate the performance of AI agents. It provides a user-friendly interface for users to rate and provide comments on the interactions with AI agents. The tool aims to collect valuable insights to improve the AI agents' capabilities and enhance user experience. With LLM-Agent-Evaluation-Survey, users can easily assess the effectiveness and efficiency of AI agents in various scenarios, leading to better decision-making and optimization of AI systems.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

trubrics-sdk

Trubrics-sdk is a software development kit designed to facilitate the integration of analytics features into applications. It provides a set of tools and functionalities that enable developers to easily incorporate analytics capabilities, such as data collection, analysis, and reporting, into their software products. The SDK streamlines the process of implementing analytics solutions, allowing developers to focus on building and enhancing their applications' functionality and user experience. By leveraging trubrics-sdk, developers can quickly and efficiently integrate robust analytics features, gaining valuable insights into user behavior and application performance.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

Generative-AI-Indepth-Basic-to-Advance

Generative AI Indepth Basic to Advance is a repository focused on providing tutorials and resources related to generative artificial intelligence. The repository covers a wide range of topics from basic concepts to advanced techniques in the field of generative AI. Users can find detailed explanations, code examples, and practical demonstrations to help them understand and implement generative AI algorithms. The goal of this repository is to help beginners get started with generative AI and to provide valuable insights for more experienced practitioners.

context-portal

Context-portal is a versatile tool for managing and visualizing data in a collaborative environment. It provides a user-friendly interface for organizing and sharing information, making it easy for teams to work together on projects. With features such as customizable dashboards, real-time updates, and seamless integration with popular data sources, Context-portal streamlines the data management process and enhances productivity. Whether you are a data analyst, project manager, or team leader, Context-portal offers a comprehensive solution for optimizing workflows and driving better decision-making.

Elite-Dangerous-AI-Integration

Elite-Dangerous-AI-Integration aims to provide a seamless and efficient experience for commanders by integrating Elite:Dangerous with various services for Speech-to-Text, Text-to-Speech, and Large Language Models. The AI reacts to game events, given commands, and can perform actions like taking screenshots or fetching information from APIs. It is designed for all commanders, enhancing roleplaying, replacing third-party websites, and assisting with tutorials.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs effortlessly. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all android reverse engineering and APK modification tools with a single MCP server for easy reverse engineering of APK files.

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

earth2studio

Earth2Studio is a Python-based package designed to enable users to quickly get started with AI weather and climate models. It provides access to pre-trained models, diagnostic tools, data sources, IO utilities, perturbation methods, and sample workflows for building custom weather prediction workflows. The package aims to empower users to explore AI-driven meteorology through modular components and seamless integration with other Nvidia packages like Modulus.

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

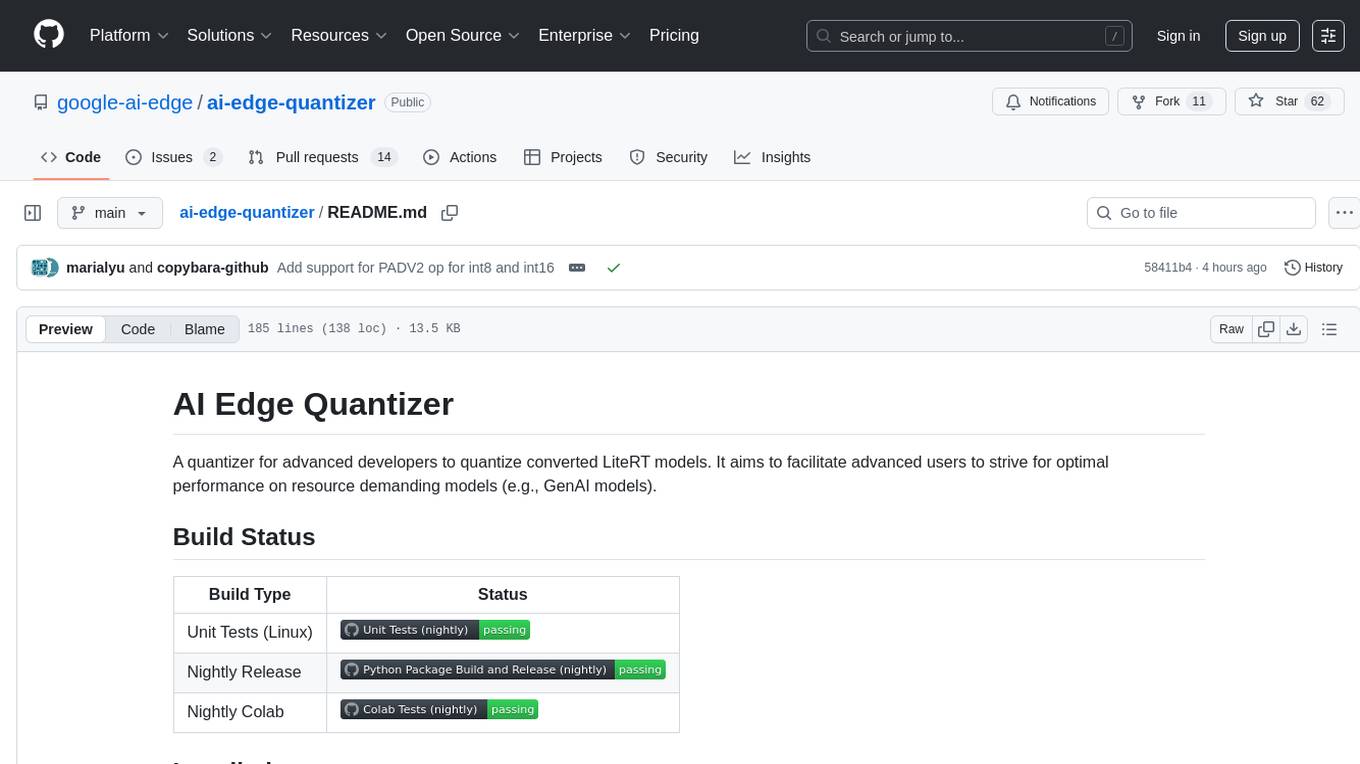

ai-edge-quantizer

AI Edge Quantizer is a tool designed for advanced developers to quantize converted LiteRT models. It aims to optimize performance on resource-demanding models by providing quantization recipes for edge device deployment. The tool supports dynamic quantization, weight-only quantization, and static quantization methods, allowing users to customize the quantization process for different hardware deployments. Users can specify quantization recipes to apply to source models, resulting in quantized LiteRT models ready for deployment. The tool also includes advanced features such as selective quantization and mixed precision schemes for fine-tuning quantization recipes.

MeeseeksAI

MeeseeksAI is a framework designed to orchestrate AI agents using a mermaid graph and networkx. It provides a structured approach to managing and coordinating multiple AI agents within a system. The framework allows users to define the interactions and dependencies between agents through a visual representation, making it easier to understand and modify the behavior of the AI system. By leveraging the power of networkx, MeeseeksAI enables efficient graph-based computations and optimizations, enhancing the overall performance of AI workflows. With its intuitive design and flexible architecture, MeeseeksAI simplifies the process of building and deploying complex AI systems, empowering users to create sophisticated agent interactions with ease.

RAG-To-Know

RAG-To-Know is a versatile tool for knowledge extraction and summarization. It leverages the RAG (Retrieval-Augmented Generation) framework to provide a seamless way to retrieve and summarize information from various sources. With RAG-To-Know, users can easily extract key insights and generate concise summaries from large volumes of text data. The tool is designed to streamline the process of information retrieval and summarization, making it ideal for researchers, students, journalists, and anyone looking to quickly grasp the essence of complex information.

Generative-AI-Scratch-2-Advance-By-ThatAIGuy

Generative-AI-Scratch-2-Advance-By-ThatAIGuy is a repository that provides advanced resources and tools for individuals interested in exploring generative AI techniques from scratch. It offers a comprehensive guide and hands-on projects to help users advance their understanding of generative AI algorithms and applications. The repository includes detailed tutorials, code samples, and datasets to support learners in building their own generative AI models and projects. Whether you are a beginner looking to dive into generative AI or an experienced practitioner seeking to enhance your skills, Generative-AI-Scratch-2-Advance-By-ThatAIGuy is a valuable resource to support your learning journey.

For similar tasks

design-studio

Tiledesk Design Studio is an open-source, no-code development platform for creating chatbots and conversational apps. It offers a user-friendly, drag-and-drop interface with pre-ready actions and integrations. The platform combines the power of LLM/GPT AI with a flexible 'graph' approach for creating conversations and automations with ease. Users can automate customer conversations, prototype conversations, integrate ChatGPT, enhance user experience with multimedia, provide personalized product recommendations, set conditions, use random replies, connect to other tools like HubSpot CRM, integrate with WhatsApp, send emails, and seamlessly enhance existing setups.

koordinator

Koordinator is a QoS based scheduling system for hybrid orchestration workloads on Kubernetes. It aims to improve runtime efficiency and reliability of latency sensitive workloads and batch jobs, simplify resource-related configuration tuning, and increase pod deployment density. It enhances Kubernetes user experience by optimizing resource utilization, improving performance, providing flexible scheduling policies, and easy integration into existing clusters.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

ChatGPT

ChatGPT is a desktop application available on Mac, Windows, and Linux that provides a powerful AI wrapper experience. It allows users to interact with AI models for various tasks such as generating text, answering questions, and engaging in conversations. The application is designed to be user-friendly and accessible to both beginners and advanced users. ChatGPT aims to enhance the user experience by offering a seamless interface for leveraging AI capabilities in everyday scenarios.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

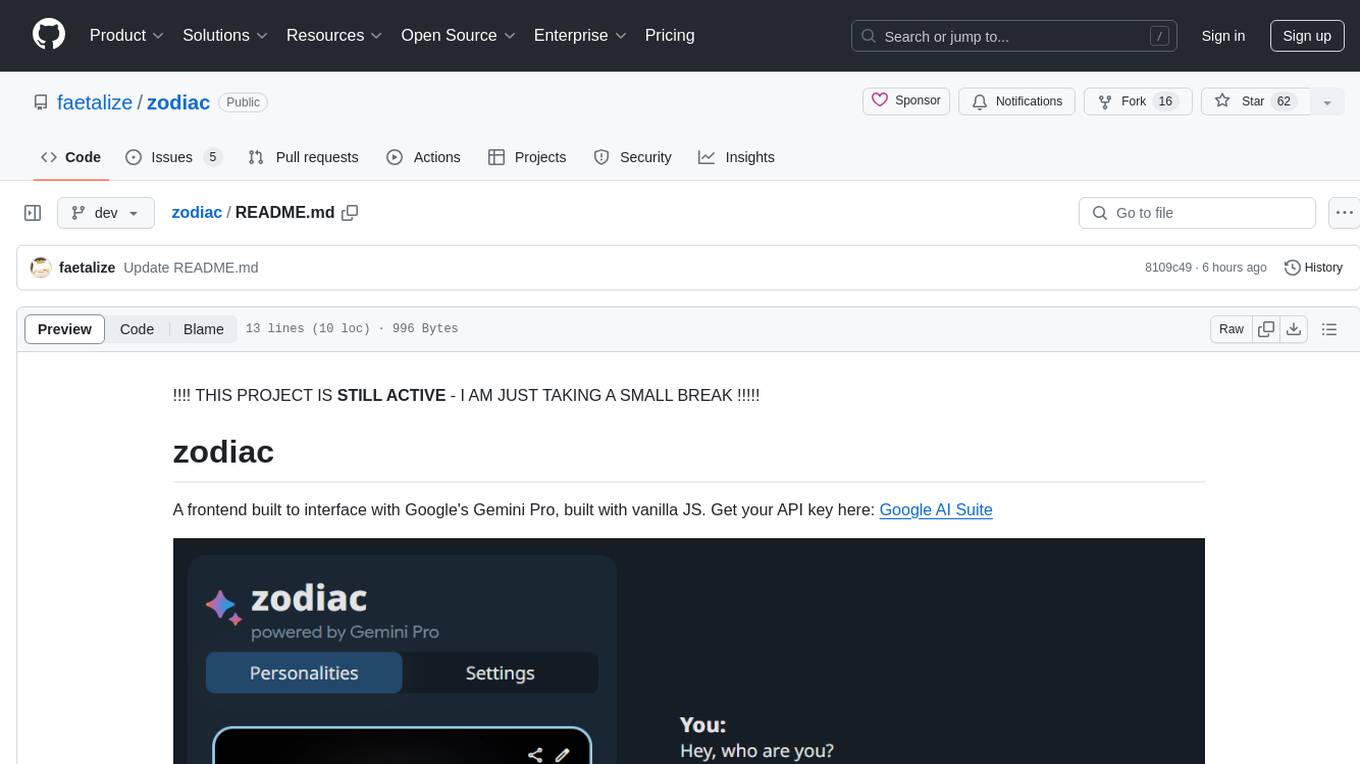

zodiac

Zodiac is a frontend tool designed to interact with Google's Gemini Pro using vanilla JS. It provides a user-friendly interface for accessing the functionalities of Google AI Suite. The tool simplifies the process of utilizing Gemini Pro's capabilities through a straightforward web application. Users can easily integrate their API key and access various features offered by Google AI Suite. Zodiac aims to streamline the interaction with Gemini Pro and enhance the user experience by offering a simple and intuitive interface for managing AI tasks.

deforum-comfy-nodes

Deforum for ComfyUI is an integration tool designed to enhance the user experience of using ComfyUI. It provides custom nodes that can be added to ComfyUI to improve functionality and workflow. Users can easily install Deforum for ComfyUI by cloning the repository and following the provided instructions. The tool is compatible with Python v3.10, and running it inside a virtual environment is recommended. Contributions to the tool are welcome, and users can join the Discord community for support and discussions.

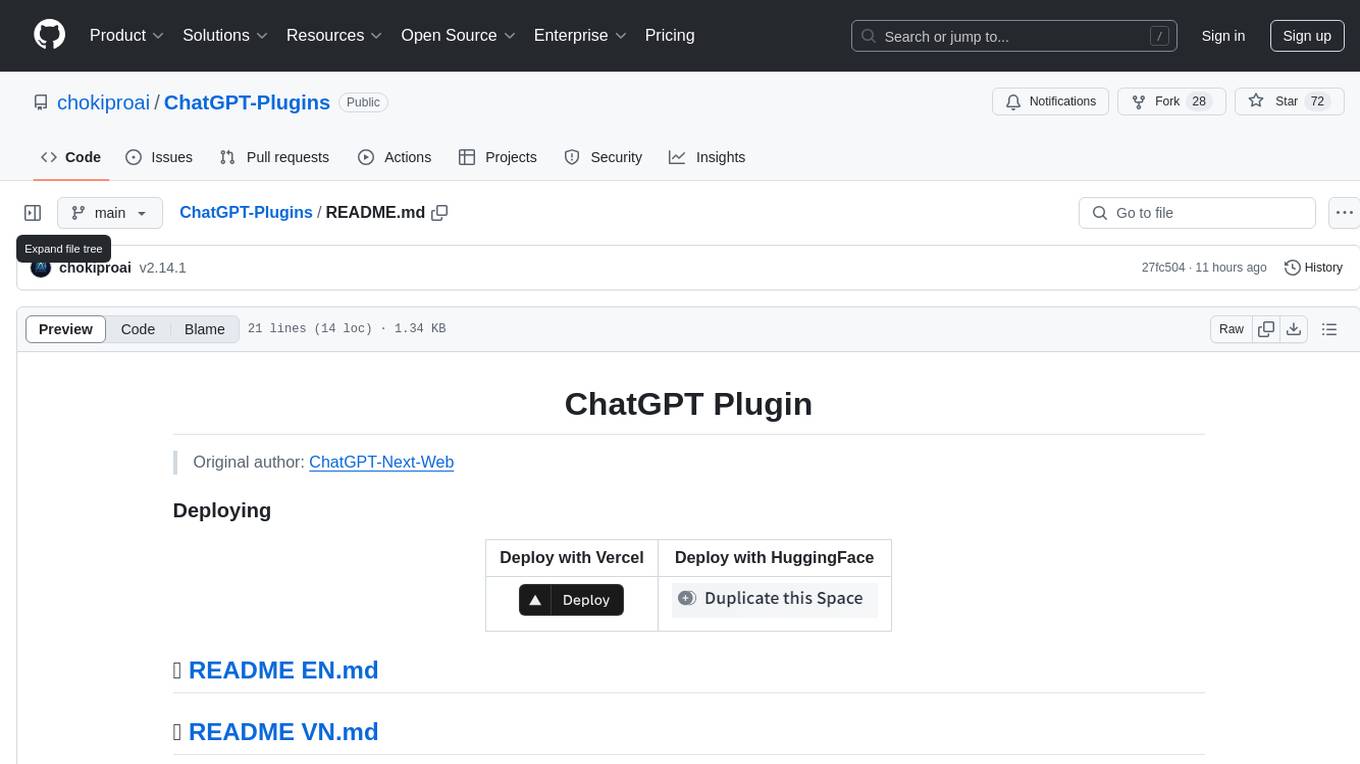

ChatGPT-Plugins

ChatGPT-Plugins is a repository containing plugins for ChatGPT-Next-Web. These plugins provide additional functionalities and features to enhance the ChatGPT experience. Users can easily deploy these plugins using Vercel or HuggingFace. The repository includes README files in English and Vietnamese for user guidance.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.