Best AI tools for: Evaluate Agent Performance

20 - AI tool Sites

Coval

Coval is an AI tool designed to help users ship reliable AI agents faster by providing simulation and evaluations for voice and chat agents. It allows users to simulate thousands of scenarios from a few test cases, create prompts for testing, and evaluate agent interactions comprehensively. Coval offers AI-powered simulations, voice AI compatibility, performance tracking, workflow metrics, and customizable evaluation metrics to optimize AI agents efficiently.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

HiringBranch

HiringBranch is an AI-powered platform that offers high-volume skills assessments to help companies hire the best candidates efficiently. The platform accurately measures soft skills and communication through open-ended conversational assessments, eliminating the need for traditional interviews. HiringBranch's AI skills assessments are tailored to various industries such as Telecommunication, Retail, Banking & Insurance, and Contact Centers, providing real-time evaluation of role-critical skills. The platform aims to streamline the hiring process, reduce mis-hires, and improve retention rates for enterprises globally.

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

PlaymakerAI

PlaymakerAI is an AI-powered platform that provides football analytics and scouting services to football clubs, agents, media companies, betting companies, researchers, and educators. The platform offers access to AI-evaluated football data from hundreds of leagues worldwide, individual player reports, analytics and scouting services, media insights, and a Playmaker Personality Profile for deeper understanding of squad dynamics. PlaymakerAI revolutionizes the way football organizations operate by offering clear, insightful information and expert assistance in football analytics and scouting.

SymptomChecker.io

SymptomChecker.io is an AI-powered medical symptom checker that allows users to describe their symptoms in their own words and receive non-reviewed AI-generated responses. It is important to note that this tool is not intended to offer medical advice, diagnosis, or treatment and should not be used as a substitute for professional medical advice. In the case of a medical emergency, please contact your physician or dial 911 immediately.

Enhans AI Model Generator

Enhans AI Model Generator is an advanced AI tool designed to help users generate AI models efficiently. It utilizes cutting-edge algorithms and machine learning techniques to streamline the model creation process. With Enhans AI Model Generator, users can easily input their data, select the desired parameters, and obtain a customized AI model tailored to their specific needs. The tool is user-friendly and does not require extensive programming knowledge, making it accessible to a wide range of users, from beginners to experts in the field of AI.

Convr

Convr is an AI-driven underwriting analysis platform that helps commercial P&C insurance organizations transform their underwriting operations. It provides a modularized AI underwriting and intelligent document automation workbench that enriches and expedites the commercial insurance new business and renewal submission flow with underwriting insights, business classification, and risk scoring. Convr's mission is to solve the last big problem of commercial insurance while improving profitability and increasing efficiency.

Convr

Convr is a modularized AI underwriting and intelligent document automation workbench that enriches and expedites the commercial insurance new business and renewal submission flow with underwriting insights, business classification, and risk scoring. As a technology partner and advisor with deep industry expertise, Convr helps insurance organizations transform their underwriting operations through its AI-driven digital underwriting analysis platform.

Q, ChatGPT for Slack

The website offers 'Q, ChatGPT for Slack', an AI tool that functions like ChatGPT within your Slack workspace. It allows on-demand URL and file reading, custom instructions for tailored use, and supports various URLs and files. With Q, users can summarize, evaluate, brainstorm ideas, self-review, engage in Q&A, and more. The tool enables team-specific rules, guidelines, and templates, making it ideal for emails, translations, content creation, copywriting, reporting, coding, and testing based on internal information.

Lucida AI

Lucida AI is an AI-driven coaching tool designed to enhance employees' English language skills through personalized insights and feedback based on real-life call interactions. The tool offers comprehensive coaching in pronunciation, fluency, grammar, vocabulary, and tracking of language proficiency. It provides advanced speech analysis using proprietary LLM and NLP technologies, ensuring accurate assessments and detailed tracking. With end-to-end encryption for data privacy, Lucida AI is a cost-effective solution for organizations seeking to improve communication skills and streamline language assessment processes.

Galileo AI

Galileo AI is a platform that offers automated evaluations for AI applications, bringing automation and insight to AI evaluations to ensure reliable and confident shipping. It helps in eliminating 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, achieving real-time protection, and providing end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

4'33"

4'33" is an AI agent designed to help students and researchers discover the people they need, such as students seeking professors in a specific field or city. The tool assists in asking better questions, connecting with individuals, and evaluating how well they align with the user's requirements and background. Powered by Perplexity, 4'33" offers a platform for connecting people and answering questions, alongside AI technology. The tool aims to facilitate easier and faster connections between users and relevant individuals, enabling knowledge sharing and collaboration.

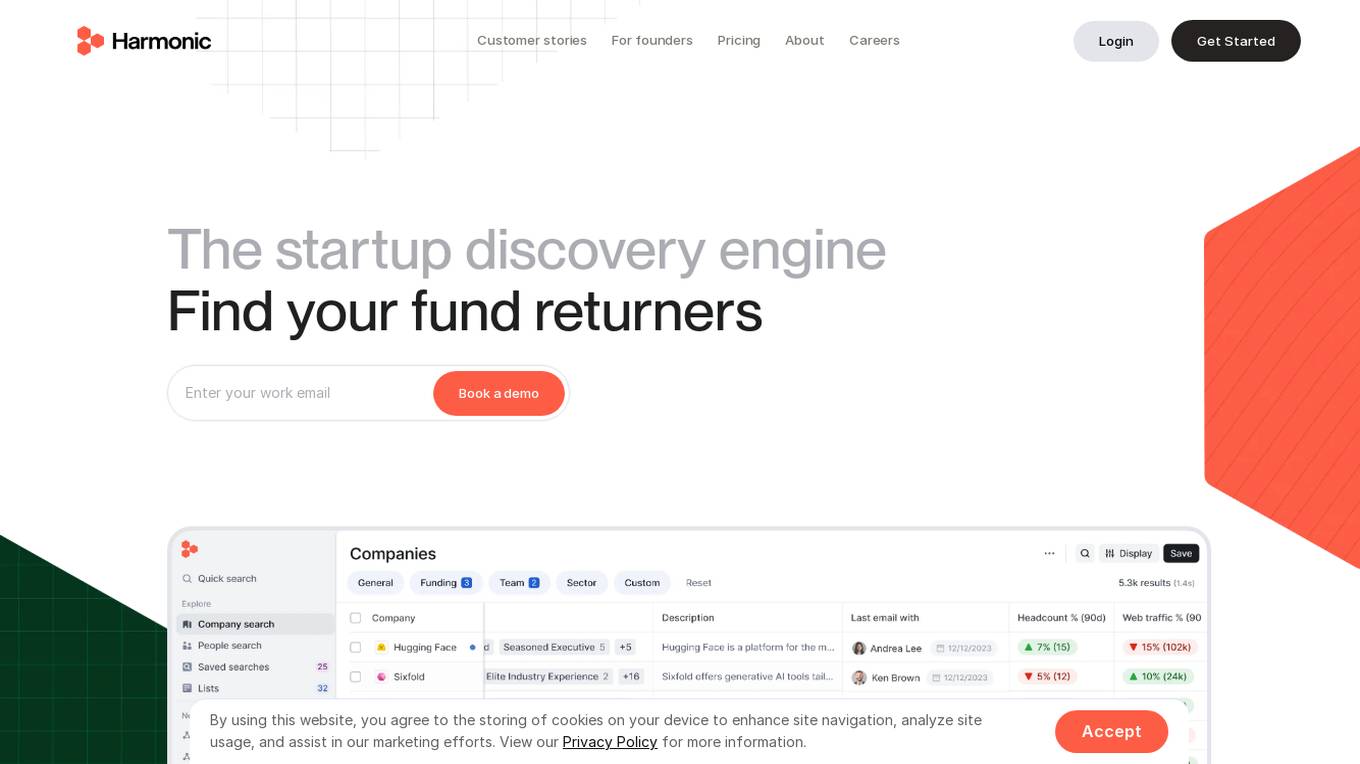

Harmonic.ai

Harmonic.ai is a startup database platform that offers a comprehensive database of companies and individuals, enabling users to identify new opportunities, scout for startups, and make informed investment decisions. The platform is powered by AI technology, providing users with sourcing superpowers and the ability to discover hidden gems in the startup ecosystem. Harmonic.ai offers features such as hyper-specific searches, industry or product descriptions to find companies, market maps, talent flows, and real-time alerts. Users can also evaluate founding team experience, analyze key competitors, and assess funding and investors. The platform is designed to help users stay up to date with the latest industry trends and make data-driven decisions.

Agenta.ai

Agenta.ai is a platform designed to provide prompt management, evaluation, and observability for LLM (Large Language Model) applications. It aims to address the challenges faced by AI development teams in managing prompts, collaborating effectively, and ensuring reliable product outcomes. By centralizing prompts, evaluations, and traces, Agenta.ai helps teams streamline their workflows and follow best practices in LLMOps. The platform offers features such as a unified playground for prompt comparison, automated evaluation processes, human evaluation integration, observability tools for debugging AI systems, and collaborative workflows for PMs, experts, and developers.

Reppls

Reppls is an AI Interview Agents tool designed for data-driven hiring processes. It helps companies interview all applicants to identify the right talents hidden behind uninformative CVs. The tool offers seamless integration with daily tools, such as Zoom and MS Teams, and provides deep technical assessments in the early stages of hiring, allowing HR specialists to focus on evaluating soft skills. Reppls aims to transform the hiring process by saving time spent on screening, interviewing, and assessing candidates.

AlphaLens Intelligence Solutions

AlphaLens Intelligence Solutions is an AI-powered platform that offers a comprehensive suite of tools for deal origination, sourcing, enrichment, and CRM integrations. It provides users with the ability to extract data from pitch decks, find and evaluate companies, and enrich company profiles with deep market insights. The platform leverages generative AI, semantic search, and active monitoring to surface hidden opportunities, automate deal screening, and sync data to the user's pipeline. With a focus on private market data, AlphaLens enables users to access a vast universe of company and product information, including funding rounds, growth metrics, and target audience insights. The platform also offers REST API access, a Chrome extension, and integration with CRM systems for seamless data management.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy to ensure everyone can benefit. It focuses on areas such as skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research, creates benchmarks and tools for safe generative AI, builds capable tools for AI model builders and developers, fosters an AI hardware accelerator ecosystem, enables open foundation models and datasets, and advocates for regulatory policies for healthy AI ecosystems.

Fairo

Fairo is a platform that facilitates Responsible AI Governance, offering tools for reducing AI hallucinations, managing AI agents and assets, evaluating AI systems, and ensuring compliance with various regulations. It provides a comprehensive solution for organizations to align their AI systems ethically and strategically, automate governance processes, and mitigate risks. Fairo aims to make responsible AI transformation accessible to organizations of all sizes, enabling them to build technology that is profitable, ethical, and transformative.

8 - Open Source AI Tools

OSWorld

OSWorld is a benchmarking tool designed to evaluate multimodal agents for open-ended tasks in real computer environments. It provides a platform for running experiments, setting up virtual machines, and interacting with the environment using Python scripts. Users can install the tool on their desktop or server, manage dependencies with Conda, and run benchmark tasks. The tool supports actions like executing commands, checking for specific results, and evaluating agent performance. OSWorld aims to facilitate research in AI by providing a standardized environment for testing and comparing different agent baselines.

LLM-Agents-Papers

A repository that lists papers related to Large Language Model (LLM) based agents. The repository covers various topics including survey, planning, feedback & reflection, memory mechanism, role playing, game playing, tool usage & human-agent interaction, benchmark & evaluation, environment & platform, agent framework, multi-agent system, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

ComfyBench

ComfyBench is a comprehensive benchmark tool designed to evaluate agents' ability to design collaborative AI systems in ComfyUI. It provides tasks for agents to learn from documents and create workflows, which are then converted into code for better understanding by LLMs. The tool measures performance based on pass rate and resolve rate, reflecting the correctness of workflow execution and task realization. ComfyAgent, a component of ComfyBench, autonomously designs new workflows by learning from existing ones, interpreting them as collaborative AI systems to complete given tasks.
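The two metrics mentioned above can be illustrated with a minimal sketch. It assumes that pass rate is the share of tasks whose generated workflow executes correctly and that resolve rate is the share whose workflow also realizes the task. The `TaskResult` record, its field names, and the `rates` helper are hypothetical and are not taken from the ComfyBench codebase.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    # Hypothetical record for one benchmark task; field names are assumptions.
    task_id: str
    executed: bool  # the generated workflow ran without error
    resolved: bool  # the output also met the task's requirements


def rates(results):
    """Return (pass_rate, resolve_rate) as fractions of all tasks."""
    total = len(results)
    if total == 0:
        return 0.0, 0.0
    passed = sum(r.executed for r in results)
    # Assumption: a task only counts as resolved if its workflow also executed.
    resolved = sum(r.executed and r.resolved for r in results)
    return passed / total, resolved / total


results = [
    TaskResult("t1", True, True),
    TaskResult("t2", True, False),
    TaskResult("t3", False, False),
    TaskResult("t4", True, True),
]
pass_rate, resolve_rate = rates(results)
print(f"pass rate: {pass_rate:.2f}, resolve rate: {resolve_rate:.2f}")
```

Under these assumptions the resolve rate can never exceed the pass rate, since a workflow that fails to execute cannot realize its task.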

MARBLE

MARBLE (Multi-Agent Coordination Backbone with LLM Engine) is a modular framework for developing, testing, and evaluating multi-agent systems leveraging Large Language Models. It provides a structured environment for agents to interact in simulated environments, utilizing cognitive abilities and communication mechanisms for collaborative or competitive tasks. The framework features modular design, multi-agent support, LLM integration, shared memory, flexible environments, metrics and evaluation, industrial coding standards, and Docker support.

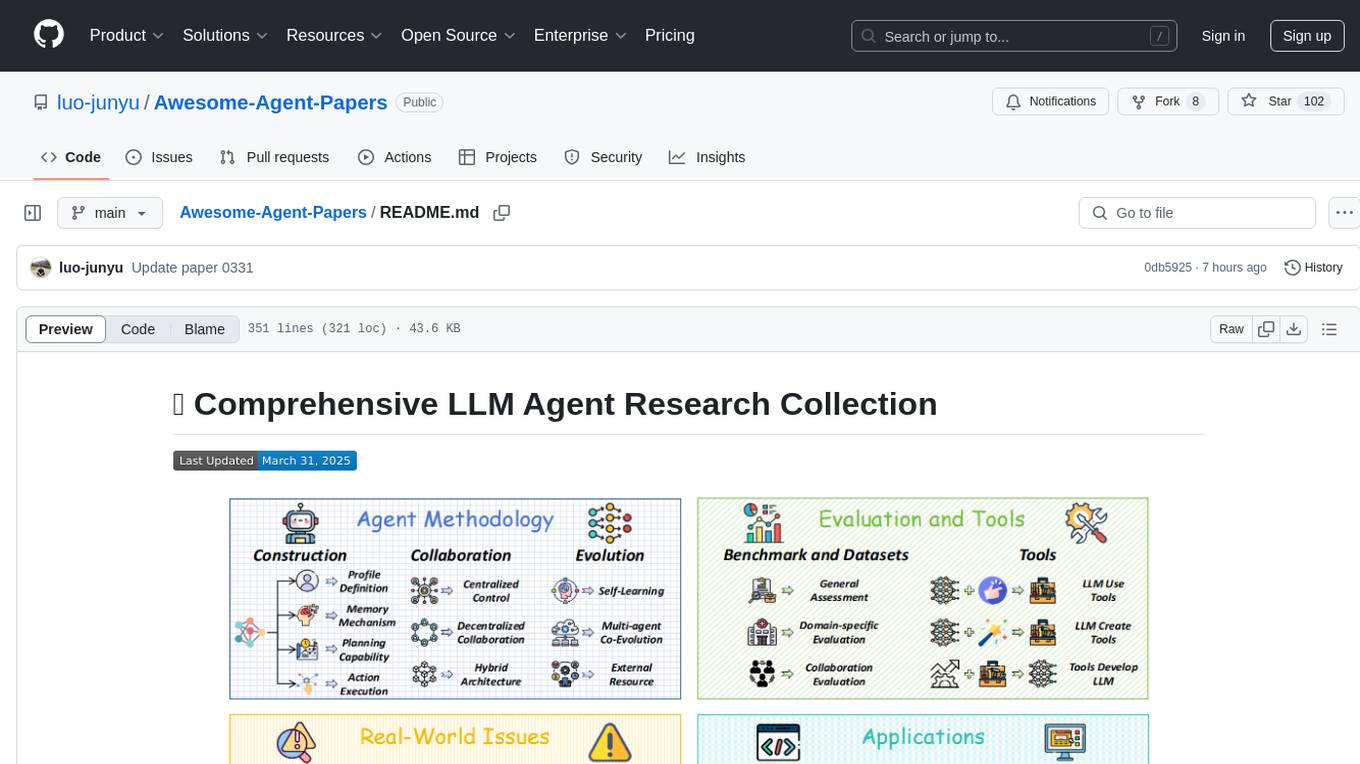

Awesome-Agent-Papers

This repository is a comprehensive collection of research papers on Large Language Model (LLM) agents, organized across key categories including agent construction, collaboration mechanisms, evolution, tools, security, benchmarks, and applications. The taxonomy provides a structured framework for understanding the field of LLM agents, bridging fragmented research threads by highlighting connections between agent design principles and emergent behaviors.

LLM-Agent-Evaluation-Survey

LLM-Agent-Evaluation-Survey is a tool designed to gather feedback and evaluate the performance of AI agents. It provides a user-friendly interface for users to rate and provide comments on the interactions with AI agents. The tool aims to collect valuable insights to improve the AI agents' capabilities and enhance user experience. With LLM-Agent-Evaluation-Survey, users can easily assess the effectiveness and efficiency of AI agents in various scenarios, leading to better decision-making and optimization of AI systems.

get-started-with-ai-agents

The 'Getting Started with Agents Using Azure AI Foundry' repository provides a solution that deploys a web-based chat application with an AI agent running in Azure Container App. The agent leverages Azure AI services for knowledge retrieval from uploaded files, enabling it to generate responses with citations. The solution includes built-in monitoring capabilities for easier troubleshooting and optimized performance. Users can deploy AI models, customize the agent, and evaluate its performance. The repository offers flexible deployment options through GitHub Codespaces, VS Code Dev Containers, or local environments.

20 - OpenAI GPTs

Wordon, World's Worst Customer | Divergent AI

I simulate tough Customer Support scenarios for Agent Training.

Supplier Evaluation Advisor

Assesses and recommends potential suppliers for organizational needs.

WM Phone Script Builder GPT

I automatically create and evaluate phone scripts, presenting a final draft.

HomeScore

Assess a potential home's quality using your own photos and property inspection reports

Conversation Analyzer

I analyze WhatsApp/Telegram and email conversations to assess the tone of their emotions and read between the lines. Upload your screenshot and I'll tell you what they are really saying! 😀

Home Inspector

Upload a picture of your home's wall, floor, window, driveway, roof, or HVAC and get an instant opinion.

US Zip Intel

Your go-to source for in-depth US zip code demographics and statistics, with easy-to-download data tables.

Rate My {{Startup}}

I will score your Mind Blowing Startup Ideas, helping you evaluate them faster.

Stick to the Point

I'll help you evaluate your writing to make sure it's engaging, informative, and flows well. Uses principles from "Made to Stick"

LabGPT

The main objective of a personalized ChatGPT for reading laboratory tests is to evaluate laboratory test results and create a spreadsheet with the evaluation results and possible solutions.