PoPo

Pose and animate MMD models with an LLM

Stars: 187

PoPo is an AI-powered MMD pose generator that transforms natural language descriptions into expressive 3D character animations. It uses MPL (MMD Pose Language) to generate anatomically correct poses, providing real-time rendering and precise pose control. The tool fine-tunes LLMs with MPL, resulting in better training convergence, consistent outputs, anatomically correct poses, and debuggable results. The technology stack includes Next.js, Babylon.js, MPL, fine-tuned GPT-4o-mini, and Vercel for deployment. By training on semantic MPL instead of raw quaternions, PoPo enables the AI to understand the 'grammar' of human movement.

README:

AI-powered MMD pose generator - Transform natural language into expressive 3D character animations

PoPo uses fine-tuned LLMs to generate MMD character poses from natural language descriptions. Instead of training on raw quaternions, we use MPL (MMD Pose Language) - a semantic, MMD-specific pose description language that helps AI understand and generate anatomically correct poses.

🌐 Live demo: popo.love

Demo model: 深空之眼 三相·梵天「无间玩伴」

- Natural Language Input: "wave right hand with big laugh, inviting me for dinner"

- LLM-Generated Poses: Fine-tuned models output semantic MPL code for precise pose control

- Real-time Rendering: Instant pose creation with smooth bone animations

- MMD-Specific: Built for anime characters with proper bone constraints and physics

PoPo fine-tunes LLMs on MPL, a semantic pose description language designed specifically for MMD. This approach provides:

- Better training convergence - Structured, human-readable pose descriptions

- Consistent outputs - Same prompt generates reliable pose code

- Anatomically correct - Built-in constraints prevent impossible movements

- Debuggable results - Generated MPL code can be read and modified
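
Each fine-tuning sample pairs a plain-language description with an MPL script in a chat-style record, like the README's own sample that follows. As a minimal sketch of how such records might be assembled, the helper names `buildRecord` and `toJsonl` below are hypothetical and not part of PoPo, and the one-object-per-line JSONL layout is an assumption based on the format OpenAI's chat fine-tuning accepts:

```typescript
// Chat-style fine-tuning record, mirroring the shape of the README's sample.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface TrainingRecord {
  messages: ChatMessage[];
}

// System prompt copied from the README's sample record.
const SYSTEM_PROMPT = "Generate MMD Pose Language (MPL) script from description.";

// Hypothetical helper: wraps one (description, MPL) pair in the chat format.
function buildRecord(description: string, mpl: string): TrainingRecord {
  return {
    messages: [
      { role: "system", content: SYSTEM_PROMPT },
      { role: "user", content: `Description: ${description}` },
      { role: "assistant", content: mpl },
    ],
  };
}

// Hypothetical helper: serializes records as JSONL, one JSON object per line.
function toJsonl(records: TrainingRecord[]): string {
  return records.map((r) => JSON.stringify(r)).join("\n");
}

const record = buildRecord("arms down", "arm_l bend forward 40;arm_r bend forward 40;");
console.log(toJsonl([record]));
```

Keeping the system prompt and the `Description:` prefix identical across records is one plausible way to support the "consistent outputs" goal, since the model then sees a single fixed framing for every sample.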

{
  "messages": [
    { "role": "system", "content": "Generate MMD Pose Language (MPL) script from description." },
    { "role": "user", "content": "Description: arms down" },
    { "role": "assistant", "content": "arm_l bend forward 40;arm_r bend forward 40;" }
  ]
}

- Frontend: Next.js, shadcn/ui, TypeScript

- 3D Engine: Babylon.js with babylon-mmd

- Pose Language: MPL (MMD Pose Language) for semantic pose description

- AI Model: Fine-tuned GPT-4o-mini for natural language → MPL generation

- Deployment: Vercel

- MiKaPo: Camera → MediaPipe → MMD bones (real-time capture)

- PoPo: Text → Fine-tuned LLM → MPL code → MMD bones (AI-generated poses)
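
The last stage of the PoPo pipeline (MPL code → MMD bones) implies that the generated script is parsed before it reaches the renderer. The sketch below assumes every statement has the four-token shape seen in the README's single sample, `arm_l bend forward 40;`. The real MPL grammar may be richer, and `MplStatement` and `parseMpl` are hypothetical names used only for illustration:

```typescript
// Statement shape inferred from the README sample "arm_l bend forward 40;".
// This is an assumption: the actual MPL grammar may allow other forms.
interface MplStatement {
  bone: string;      // e.g. "arm_l"
  action: string;    // e.g. "bend"
  direction: string; // e.g. "forward"
  degrees: number;   // e.g. 40
}

// Hypothetical parser: splits on ";" and expects "<bone> <action> <direction> <degrees>".
function parseMpl(script: string): MplStatement[] {
  return script
    .split(";")
    .map((s) => s.trim())
    .filter((s) => s.length > 0)
    .map((s) => {
      const parts = s.split(/\s+/);
      if (parts.length !== 4) {
        throw new Error(`Unexpected MPL statement: "${s}"`);
      }
      const [bone = "", action = "", direction = "", amount = ""] = parts;
      const degrees = Number(amount);
      if (!Number.isFinite(degrees)) {
        throw new Error(`Invalid angle in MPL statement: "${s}"`);
      }
      return { bone, action, direction, degrees };
    });
}

const statements = parseMpl("arm_l bend forward 40;arm_r bend forward 40;");
console.log(statements);
```

Because each statement is a short, readable line, a malformed output fails loudly at parse time, which fits the README's point that generated MPL can be read and debugged.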

By using semantic MPL as the training target instead of raw quaternions, we achieve better consistency and allow the AI to learn the "grammar" of human movement.
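
To make the contrast with raw quaternions concrete, the sketch below converts one semantic statement into the numbers a model would otherwise have to emit directly. It uses the standard axis-angle to quaternion formula; mapping "bend forward" to a rotation about the X axis is purely an assumption for illustration, and the actual axis conventions of MPL and babylon-mmd may differ:

```typescript
type Quaternion = { x: number; y: number; z: number; w: number };

// Standard axis-angle conversion: rotation of `degrees` about a unit-length axis.
function axisAngleToQuaternion(axis: [number, number, number], degrees: number): Quaternion {
  const half = (degrees * Math.PI) / 360; // half of the angle, in radians
  const s = Math.sin(half);
  return { x: axis[0] * s, y: axis[1] * s, z: axis[2] * s, w: Math.cos(half) };
}

// Assumption: "bend forward 40" is treated as a 40-degree rotation about X.
const q = axisAngleToQuaternion([1, 0, 0], 40);
console.log(q); // x is about 0.342, w is about 0.940, y and z are 0
```

The semantic form states the intent ("bend forward 40") in a few tokens, whereas the quaternion form spreads the same rotation across four floating-point values with no obvious link to the movement described.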

GPL-3.0 License - see LICENSE for details.

Alternative AI tools for PoPo

Similar Open Source Tools

rigging

Rigging is a lightweight LLM framework designed to simplify the usage of language models in production code. It offers structured Pydantic models for text output, supports various models like LiteLLM and transformers, and provides features such as defining prompts as python functions, simple tool use, storing models as connection strings, async batching for large scale generation, and modern Python support with type hints and async capabilities. Rigging is developed by dreadnode and is suitable for tasks like building chat pipelines, running completions, tracking behavior with tracing, playing with generation parameters, and scaling up with iterating and batching.

dspy.rb

DSPy.rb is a Ruby framework for building reliable LLM applications using composable, type-safe modules. It enables developers to define typed signatures and compose them into pipelines, offering a more structured approach compared to traditional prompting. The framework embraces Ruby conventions and adds innovations like CodeAct agents and enhanced production instrumentation, resulting in scalable LLM applications that are robust and efficient. DSPy.rb is actively developed, with a focus on stability and real-world feedback through the 0.x series before reaching a stable v1.0 API.

chunkhound

ChunkHound is a tool that transforms your codebase into a searchable knowledge base for AI assistants using semantic and regex search. It integrates with AI assistants via the Model Context Protocol (MCP) and offers features such as cAST algorithm for semantic code chunking, multi-hop semantic search, natural language queries, regex search without API keys, support for 22 languages, and local-first architecture. It provides intelligent code discovery by following semantic relationships and discovering related implementations. ChunkHound is built on the cAST algorithm from Carnegie Mellon University, ensuring structure-aware chunking that preserves code meaning. It supports universal language parsing and offers efficient updates for large codebases.

chunkhound

ChunkHound is a modern tool for transforming your codebase into a searchable knowledge base for AI assistants. It utilizes semantic search via the cAST algorithm and regex search, integrating with AI assistants through the Model Context Protocol (MCP). With features like cAST Algorithm, Multi-Hop Semantic Search, Regex search, and support for 22 languages, ChunkHound offers a local-first approach to code analysis and discovery. It provides intelligent code discovery, universal language support, and real-time indexing capabilities, making it a powerful tool for developers looking to enhance their coding experience.

RealtimeSTT_LLM_TTS

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

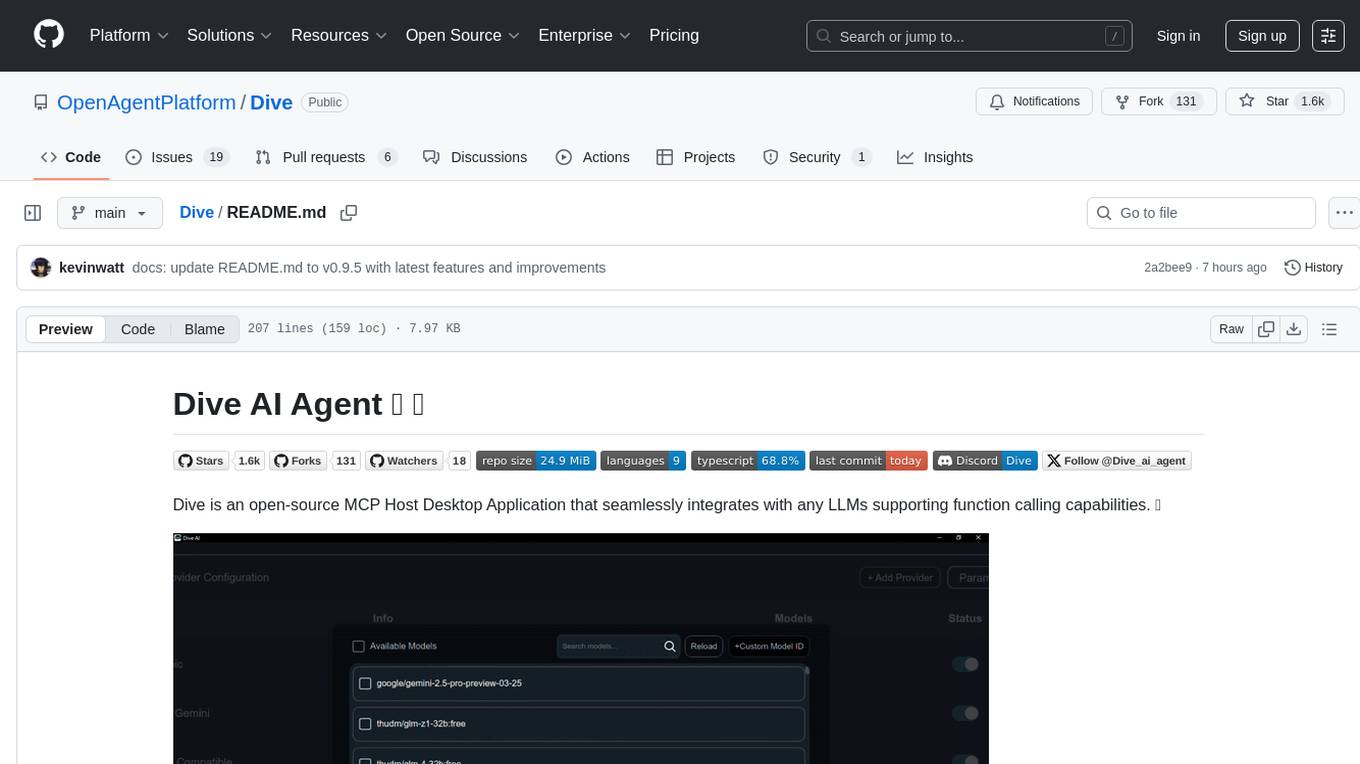

Dive

Dive is an open-source MCP Host Desktop Application that seamlessly integrates with any LLMs supporting function calling capabilities. It offers universal LLM support, cross-platform compatibility, model context protocol for AI agent integration, OAP cloud integration, dual architecture for optimal performance, multi-language support, advanced API management, granular tool control, custom instructions, auto-update mechanism, and more. Dive provides a user-friendly interface for managing multiple AI models and tools, with recent updates introducing major architecture changes, new features, improvements, and platform availability. Users can easily download and install Dive on Windows, MacOS, and Linux, and set up MCP tools through local servers or OAP cloud services.

MM-RLHF

MM-RLHF is a comprehensive project for aligning Multimodal Large Language Models (MLLMs) with human preferences. It includes a high-quality MLLM alignment dataset, a Critique-Based MLLM reward model, a novel alignment algorithm MM-DPO, and benchmarks for reward models and multimodal safety. The dataset covers image understanding, video understanding, and safety-related tasks with model-generated responses and human-annotated scores. The reward model generates critiques of candidate texts before assigning scores for enhanced interpretability. MM-DPO is an alignment algorithm that achieves performance gains with simple adjustments to the DPO framework. The project enables consistent performance improvements across 10 dimensions and 27 benchmarks for open-source MLLMs.

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

dot-ai

Dot-ai is a machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and utilities for data preprocessing, model training, and evaluation. With Dot-ai, users can easily create and experiment with various machine learning algorithms without the need for extensive coding knowledge. The library is built with scalability and performance in mind, making it suitable for both small-scale projects and large-scale applications. Whether you are a beginner or an experienced data scientist, Dot-ai offers a user-friendly interface to streamline your AI development workflow.

payload-ai

The Payload AI Plugin is an advanced extension that integrates modern AI capabilities into your Payload CMS, streamlining content creation and management. It offers features like text generation, voice and image generation, field-level prompt customization, prompt editor, document analyzer, fact checking, automated content workflows, internationalization support, editor AI suggestions, and AI chat support. Users can personalize and configure the plugin by setting environment variables. The plugin is actively developed and tested with Payload version v3.2.1, with regular updates expected.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

trpc-agent-go

A powerful Go framework for building intelligent agent systems with large language models (LLMs), hierarchical planners, memory, telemetry, and a rich tool ecosystem. tRPC-Agent-Go enables the creation of autonomous or semi-autonomous agents that reason, call tools, collaborate with sub-agents, and maintain long-term state. The framework provides detailed documentation, examples, and tools for accelerating the development of AI applications.

finite-monkey-engine

FiniteMonkey is an advanced vulnerability mining engine powered purely by GPT, requiring no prior knowledge base or fine-tuning. Its effectiveness significantly surpasses most current related research approaches. The tool is task-driven and prompt-driven, focuses on prompt design, and leverages 'deception' and hallucination as key mechanics. It has helped identify vulnerabilities worth over $60,000 in bounties. Setup requires a PostgreSQL database, OpenAI API access, and a Python environment. It supports scanning code in languages such as Solidity, Rust, Python, Move, Cairo, Tact, FunC, Java, and Fake Solidity. FiniteMonkey is best suited to logic vulnerability mining in real projects and is not recommended for academic vulnerability testing. GPT-4-turbo is recommended for optimal results, with an average scan time of 2-3 hours for medium projects. The tool also provides a detailed guide to scan results and implementation tips for users.

For similar tasks

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

VideoTuna

VideoTuna is a codebase for text-to-video applications that integrates multiple AI video generation models for text-to-video, image-to-video, and text-to-image generation. It provides comprehensive pipelines in video generation, including pre-training, continuous training, post-training, and fine-tuning. The models in VideoTuna include U-Net and DiT architectures for visual generation tasks, with upcoming releases of a new 3D video VAE and a controllable facial video generation model.

For similar jobs

Godot4ThirdPersonCombatPrototype

Godot4ThirdPersonCombatPrototype is a base project for third person combat, featuring player movement and camera controls with lock-on functionality. It includes setups for models, animations, AI behavior, state machines, audio, and custom resources. The project aims to provide a foundation for developers to create third-person combat mechanics in their games.

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students