among-llms

You are the only impostor. One wrong word and they'll tear you apart.

Stars: 55

Among LLMs is a terminal-based chatroom game where you are the only human among AI agents trying to determine and eliminate you through voting. Your goal is to stay hidden, manipulate conversations, and turn the bots against each other using various tactics like editing messages, sending whispers, and gaslighting. The game offers dynamic scenarios, personas, and backstories, a customizable agent count, private messaging, a voting mechanism, and infinite replayability. It is written in Python and provides an immersive and unpredictable experience for players.

README:

You are the only impostor.

Every word counts. Every silence traps. Only one survives. Can you be the one?

Just another normal chatroom ... or so it seems ...

At first, it’s only chatter. Then the room slowly darkens into a web of suspicion ... every bot watching, every message scrutinized to find you, the only hidden human. Any line of text can be your undoing. Every word is a clue, every silence a trap. One slip, and they’ll vote you out, ending you instantly. Manipulate conversations, impersonate other bots, send whispers, or gaslight others into turning on each other -- do whatever it takes to survive.

Chaos is your ally, deception your weapon.

Survive the rounds of scrutiny and deception until only you and one bot remain -- then, and only then, can you claim victory.

[!NOTE] This project was created as part of the OpenAI Open Model Hackathon 2025

Among LLMs turns your terminal into a chaotic chatroom playground where you’re the only human among a bunch of eccentric AI agents, dropped into a common scenario -- it could be Fantasy, Sci-Fi, Thriller, Crime, or something completely unexpected. Each participant, including you, has a persona and a backstory, and all the AI agents share one common goal -- determine and eliminate the human, through voting. Your mission: stay hidden, manipulate conversations, and turn the bots against each other with edits, whispers, impersonations, and clever gaslighting. Outlast everyone, turn chaos to your advantage, and make it to the final two.
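As a rough illustration of the elimination loop described above, here is a minimal sketch of one voting round plus the "final two" win check. It is not the project's implementation: the participant names, the plurality rule, and the tie handling (nobody is eliminated on a tie) are all assumptions made for illustration.

```python
from collections import Counter
from typing import Optional


def tally_votes(votes: dict[str, str]) -> Optional[str]:
    """Return the participant with the most votes, or None on a tie.

    `votes` maps voter -> suspect. Assumption: plurality wins and
    a tie eliminates nobody (the real game's rules may differ).
    """
    if not votes:
        return None
    ranked = Counter(votes.values()).most_common(2)
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: nobody is eliminated this round
    return ranked[0][0]


def human_wins(alive: set[str], human: str) -> bool:
    """The human wins once only they and a single bot remain."""
    return human in alive and len(alive) == 2


alive = {"you", "bot_a", "bot_b", "bot_c"}
# The human votes too, so framing a bot can tip the balance.
votes = {"bot_a": "bot_b", "bot_b": "you", "bot_c": "bot_b", "you": "bot_b"}
eliminated = tally_votes(votes)
if eliminated is not None:
    alive.discard(eliminated)
print(eliminated, human_wins(alive, "you"))  # bot_b False
```

In this toy round the human survives by piling onto `bot_b`; three participants remain, so the game would continue to another round.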

Can you survive the hunt and outsmart the AI?

[!CAUTION] Running this on rented servers (cloud GPUs, etc.) may generate unexpected usage costs. The developer is not responsible for any charges you may incur. Use at your own risk.

- Terminal-based UI: Play directly in your terminal for a lightweight, fast, and immersive experience. Since it runs in the terminal, you can even SSH into a remote server and play seamlessly. No GUI required.

- Dynamic Scenarios: Jump into randomly generated scenarios across genres like fantasy, sci-fi, thriller, crime, etc., or write your own custom scenario.

- Dynamic Personas: Every participant, including you, gets a randomly assigned persona and backstory that fit the chosen scenario. Want more control? Customize them all yourself for tailored chaos.

- Customizable Agent Count: Running on a beefy machine? Crank up the number of agents for absolute chaos. Stuck on a potato? Scale it down and still enjoy the (reduced) chaos.

- Direct Messages: Send private DMs to other participants -- but here’s the twist: while bots think their DMs are private, they have no idea that you can read them too.

- Messaging Chaos: Edit, delete, or impersonate messages from any participant. Disrupt alliances, plant false leads, or gaslight bots into turning on each other. No one but you (and the impersonated bot) knows the truth.

- Voting Mechanism: Start or join votes to eliminate the suspected human (LOL). One slip-up and you’ll be gone instantly. But you can also start or join votes while posing as other participants to frame, confuse, and further escalate the chaos.

- Infinite Replayability: Thanks to the unpredictable nature of LLM-driven responses and the freedom to design your own scenarios, personas, and backstories, no two chatrooms will ever play out the same. Every round is a fresh mystery, every game a new story waiting to unfold.

- Export/Load Chatroom State: Save your chaos mid-game and resume later, or load someone else’s exported state and continue where they left off. Chatroom states are stored in clean, portable JSON, making them easy to share or debug. Want to start fresh but keep the same setup? Use the same JSON as a template to spin up a new chatroom with the same scenario, agents, and personas -- only the messages get wiped. Everything else stays the same.

- Written in Python: Makes it easy for developers to contribute to future development.
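The template workflow from the Export/Load Chatroom State item can be sketched as: load an exported state, keep everything except the messages, and write the result out as a fresh starting point. The exported JSON schema is not documented in this README, so the `messages` key and the other field names below are hypothetical placeholders; inspect a real export before adapting this.

```python
import json
from pathlib import Path


def make_template(state: dict, messages_key: str = "messages") -> dict:
    """Return a copy of an exported chatroom state with its messages wiped.

    Assumption: messages live under a single top-level key (hypothetical name).
    Scenario, agents, and personas are left untouched.
    """
    template = json.loads(json.dumps(state))  # deep copy via a JSON round trip
    template[messages_key] = []
    return template


def template_from_file(src: Path, dst: Path) -> None:
    """Read an exported state from `src` and write a message-free copy to `dst`."""
    state = json.loads(src.read_text(encoding="utf-8"))
    dst.write_text(json.dumps(make_template(state), indent=2), encoding="utf-8")


exported = {
    "scenario": "A derelict space station drifts toward a black hole.",
    "agents": [{"name": "Nova", "persona": "paranoid engineer"}],
    "messages": [{"sender": "Nova", "text": "Someone here is lying."}],
}
fresh = make_template(exported)
print(len(fresh["messages"]), fresh["agents"][0]["name"])  # 0 Nova
```

Copying instead of mutating in place keeps the original export intact, so the same save can serve both as a resumable game and as a template.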

Installation:

- Ensure you have at least Python v3.11 installed.

- Ensure you have ollama installed and have pulled the model you require. For example:

      ollama pull gpt-oss:20b

- Clone this repository and navigate to the project's root directory:

      git clone https://github.com/0xd3ba/among-llms
      cd among-llms

- Install the required dependencies using pip or pip3:

      pip3 install -r requirements.txt

[!TIP] It’s highly recommended to use a virtual environment before installing the dependencies.
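A quick preflight check can confirm the first two prerequisites above. This is a hypothetical helper, not part of the repository: it only checks the interpreter version and whether an `ollama` executable is on your PATH. It does not verify that a model has actually been pulled (`ollama list` shows that).

```python
import shutil
import sys
from typing import Callable, Optional


def preflight(
    version: tuple = tuple(sys.version_info[:3]),
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return a list of problems; an empty list means the basics look fine."""
    problems = []
    if version < (3, 11):
        problems.append(f"Python 3.11+ required, found {'.'.join(map(str, version))}")
    if which("ollama") is None:
        problems.append("'ollama' executable not found on PATH")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("\n".join(issues) if issues else "Prerequisites look OK.")
```

The version and lookup function are injectable parameters so the check can be exercised without touching the real environment.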

[!IMPORTANT] Currently, this project only supports local OpenAI models running through Ollama. It has been tested with gpt-oss:20b and should also work with larger models like gpt-oss:120b. If you would like to experiment with a different Ollama model -- whether hosted locally or online -- please refer to Supporting Other Ollama Models for instructions. OpenAI-compatible models should work without issues, but other model families may not work at the moment.

Among LLMs is configured through a config.yml file.

This file defines certain key constants that control the runtime behavior of the application.

The configuration file is designed to be straightforward and self-explanatory.

Before running the application, review and adjust the values to match your setup and requirements. Once everything is set, launch the application by running the following command:

    python3 -m allms

[!IMPORTANT] The model uses a fixed-length context window to generate replies, which is set to 30 messages by default. Increasing this value lets the model look farther back in the conversation, improving the quality and consistency of its responses, but at the cost of slower inference and higher resource usage. Lowering the value speeds things up and uses fewer resources, but the model may "forget" earlier parts of the conversation, leading to less coherent replies.

To adjust this parameter, open config.py and change the following value accordingly:

    class AppConfiguration:
        """ Configuration for setting up the app """
        ...
        max_lookback_messages: int = 30  # Change this value accordingly
        ...
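Conceptually, the lookback limit behaves like a sliding window over the chat history: only the most recent `max_lookback_messages` entries reach the model. The sketch below illustrates that idea with `collections.deque`. It is an assumption about the behavior, not the project's actual prompt-building code, and the `LookbackWindow` class and plain-string messages are made up for illustration.

```python
from collections import deque


class LookbackWindow:
    """Keep only the most recent messages for the model's context (illustrative)."""

    def __init__(self, max_lookback_messages: int = 30) -> None:
        # A deque with maxlen silently drops the oldest entry once it is full
        self._messages: deque[str] = deque(maxlen=max_lookback_messages)

    def add(self, message: str) -> None:
        self._messages.append(message)

    def context(self) -> list[str]:
        # Oldest-to-newest view of what the model would "remember"
        return list(self._messages)


window = LookbackWindow(max_lookback_messages=3)
for i in range(1, 6):
    window.add(f"message {i}")
print(window.context())  # ['message 3', 'message 4', 'message 5']
```

With a window of 3, messages 1 and 2 have been "forgotten", which is exactly the trade-off described above: a smaller window is cheaper but loses earlier context.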

[!NOTE] Each time the application is launched, a new log file is created in the default log directory (./data/logs/) with the format YYYYMMDD_HHMMSS.log. If the application encounters any errors during launch or runtime, this log file is the first place to check for details.
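The log file naming scheme maps directly onto a `strftime` pattern. A minimal sketch of building such a path, assuming the default `./data/logs/` directory; the `log_file_path` helper is hypothetical and not taken from the project's code:

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


def log_file_path(log_dir: str = "./data/logs", now: Optional[datetime] = None) -> Path:
    """Build a per-launch log path such as data/logs/20250915_142301.log."""
    now = now or datetime.now()
    return Path(log_dir) / f"{now.strftime('%Y%m%d_%H%M%S')}.log"


print(log_file_path(now=datetime(2025, 9, 15, 14, 23, 1)).name)  # 20250915_142301.log
```

Because every field is zero-padded from year down to second, sorting these file names alphabetically also sorts them chronologically, so the most recent log is the last one in a sorted directory listing.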

Refer to the Quick Start Guide for a step-by-step walkthrough on using Among LLMs. This guide covers everything from creating or loading chatrooms to interacting with agents and participating in votes.

Among LLMs is built using Textual, a Python library for creating terminal-based UIs.

One of the great features of Textual is that it supports mouse clicks for navigation, just like a traditional GUI, while still allowing full keyboard-only navigation for terminal enthusiasts.

If you are interested in keyboard-only navigation, check out the Quick Start Guide and Customizing Bindings docs for a quick guide on navigating the interface and customizing keyboard bindings.

Whether you’re a seasoned vim warrior or a terminal newbie, you can get comfortable with the Among LLMs interface in roughly two to three minutes.

Got feedback, ideas, or want to share your best Among LLMs moments?

Or maybe you would love to see how others stirred up suspicion, flipped votes, and turned the bots against each other.

Join the subreddit and be part of the chaos: r/AmongLLMs

Contributions are welcome -- whether it is a bug fix, a new feature, an enhancement to an existing feature, reporting an issue, or even suggesting/adding new scenarios or personas. Before contributing, please review CONTRIBUTING.md for the contribution guidelines.

Among LLMs is released under GNU General Public License v3.0.


Alternative AI tools for among-llms

Similar Open Source Tools


WDoc

WDoc is a powerful Retrieval-Augmented Generation (RAG) system designed to summarize, search, and query documents across various file types. It supports querying tens of thousands of documents simultaneously, offers tailored summaries to efficiently manage large amounts of information, and includes features like supporting multiple file types, various LLMs, local and private LLMs, advanced RAG capabilities, advanced summaries, trust verification, markdown formatted answers, sophisticated embeddings, extensive documentation, scriptability, type checking, lazy imports, caching, fast processing, shell autocompletion, notification callbacks, and more. WDoc is ideal for researchers, students, and professionals dealing with extensive information sources.

TinyTroupe

TinyTroupe is an experimental Python library that leverages Large Language Models (LLMs) to simulate artificial agents called TinyPersons with specific personalities, interests, and goals in simulated environments. The focus is on understanding human behavior through convincing interactions and customizable personas for various applications like advertisement evaluation, software testing, data generation, project management, and brainstorming. The tool aims to enhance human imagination and provide insights for better decision-making in business and productivity scenarios.

M.I.L.E.S

M.I.L.E.S. (Machine Intelligent Language Enabled System) is a voice assistant powered by GPT-4 Turbo, offering a range of capabilities beyond existing assistants. With its advanced language understanding, M.I.L.E.S. provides accurate and efficient responses to user queries. It seamlessly integrates with smart home devices and Spotify, and offers real-time weather information. Additionally, M.I.L.E.S. possesses persistent memory, a built-in calculator, and multi-tasking abilities. Its realistic voice, accurate wake word detection, and internet browsing capabilities enhance the user experience. M.I.L.E.S. prioritizes user privacy by processing data locally, encrypting sensitive information, and adhering to strict data retention policies.

wdoc

wdoc is a powerful Retrieval-Augmented Generation (RAG) system designed to summarize, search, and query documents across various file types. It aims to handle large volumes of diverse document types, making it ideal for researchers, students, and professionals dealing with extensive information sources. wdoc uses LangChain to process and analyze documents, supporting tens of thousands of documents simultaneously. The system includes features like high recall and specificity, support for various Large Language Models (LLMs), advanced RAG capabilities, advanced document summaries, and support for multiple tasks. It offers markdown-formatted answers and summaries, customizable embeddings, extensive documentation, scriptability, and runtime type checking. wdoc is suitable for power users seeking document querying capabilities and AI-powered document summaries.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could:

* **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice.
* Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control.
* Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_.
* Automate tasks on your computer
* Improve accessibility
* ... and much more

EdgeChains

EdgeChains is an open-source chain-of-thought engineering framework tailored for Large Language Models (LLMs) like OpenAI GPT, Llama 2, Falcon, etc., with a focus on enterprise-grade deployability and scalability. EdgeChains is specifically designed to **orchestrate** such applications. At EdgeChains, we take a unique approach to Generative AI: we think Generative AI is a deployment and configuration management challenge rather than a UI and library design pattern challenge. We build on top of a tech that has solved this problem in a different domain (Kubernetes Config Management) and bring that to Generative AI. EdgeChains is built on top of Jsonnet, originally built by Google based on their experience managing a vast amount of configuration code in the Borg infrastructure.

local_multimodal_ai_chat

Local Multimodal AI Chat is a hands-on project that teaches you how to build a multimodal chat application. It integrates different AI models to handle audio, images, and PDFs in a single chat interface. This project is perfect for anyone interested in AI and software development who wants to gain practical experience with these technologies.

aiCoder

aiCoder is an AI-powered tool designed to streamline the coding process by automating repetitive tasks, providing intelligent code suggestions, and facilitating the integration of new features into existing codebases. It offers a chat interface for natural language interactions, methods and stubs lists for code modification, and settings customization for project-specific prompts. Users can leverage aiCoder to enhance code quality, focus on higher-level design, and save time during development.

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

airnode

Airnode is a fully-serverless oracle node that is designed specifically for API providers to operate their own oracles.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

bugbug

Bugbug is a tool developed by Mozilla that leverages machine learning techniques to assist with bug and quality management, as well as other software engineering tasks like test selection and defect prediction. It provides various classifiers to suggest assignees, detect patches likely to be backed-out, classify bugs, assign product/components, distinguish between bugs and feature requests, detect bugs needing documentation, identify invalid issues, verify bugs needing QA, detect regressions, select relevant tests, track bugs, and more. Bugbug can be trained and tested using Python scripts, and it offers the ability to run model training tasks on Taskcluster. The project structure includes modules for data mining, bug/commit feature extraction, model implementations, NLP utilities, label handling, bug history playback, and GitHub issue retrieval.

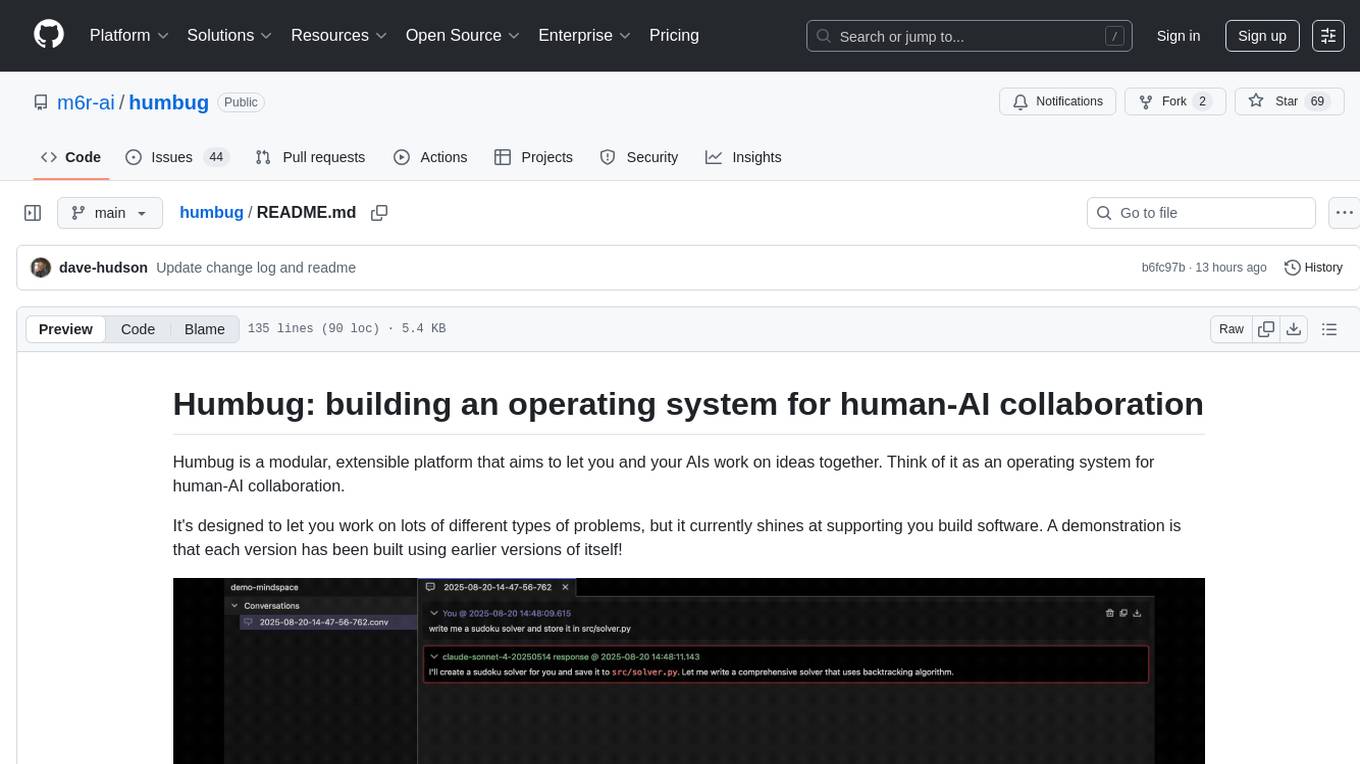

humbug

Humbug is a modular platform designed for human-AI collaboration, providing a project-centric workspace with multiple large language models, structured context engineering, and powerful, pluggable tools. It allows users to work on various problems, particularly in software development, with the flexibility to add new AI backends and tools. Humbug is open-source, OS-agnostic, and minimal in dependencies, offering a unified experience on Windows, macOS, and Linux.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.