humbug

Humbug: building an operating system for human-AI collaboration

Stars: 69

Humbug is a modular platform designed for human-AI collaboration, providing a project-centric workspace with multiple large language models, structured context engineering, and powerful, pluggable tools. It allows users to work on various problems, particularly in software development, with the flexibility to add new AI backends and tools. Humbug is open-source, OS-agnostic, and minimal in dependencies, offering a unified experience on Windows, macOS, and Linux.

README:

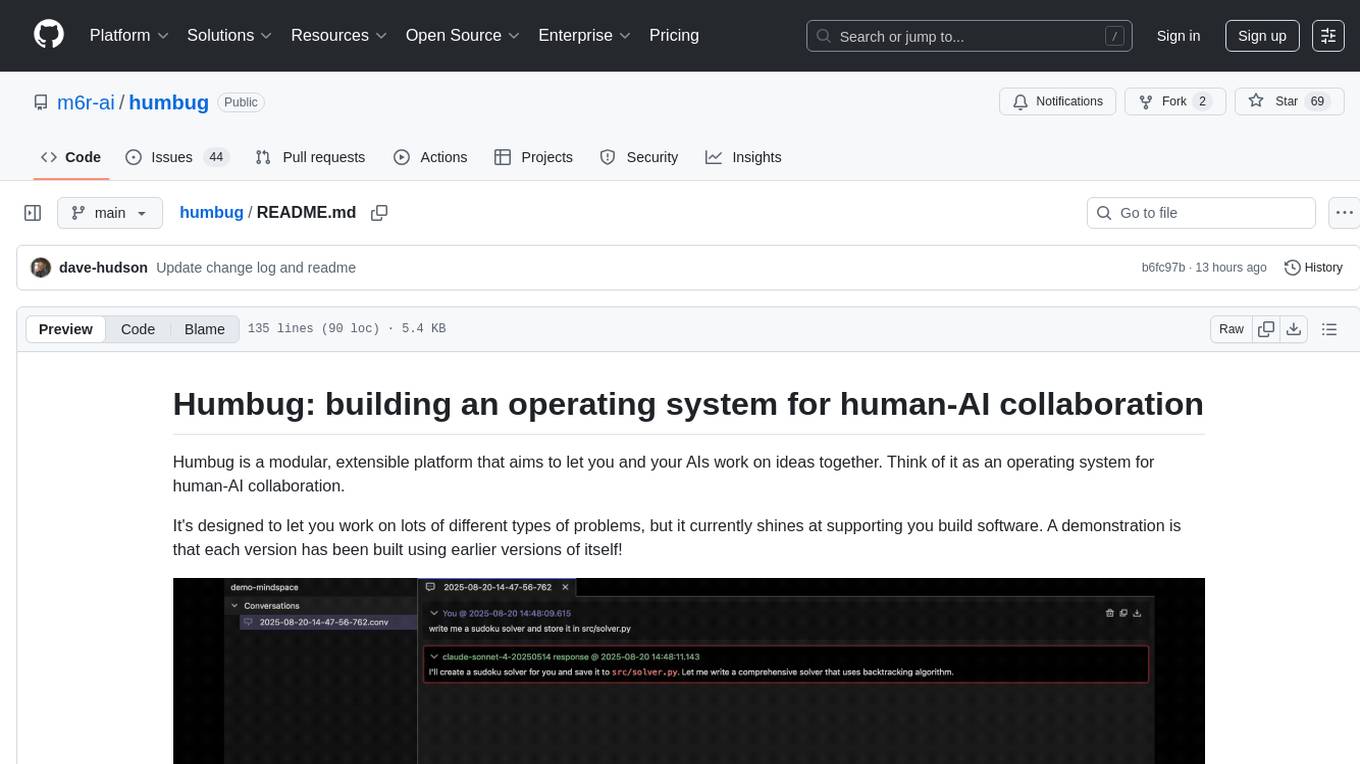

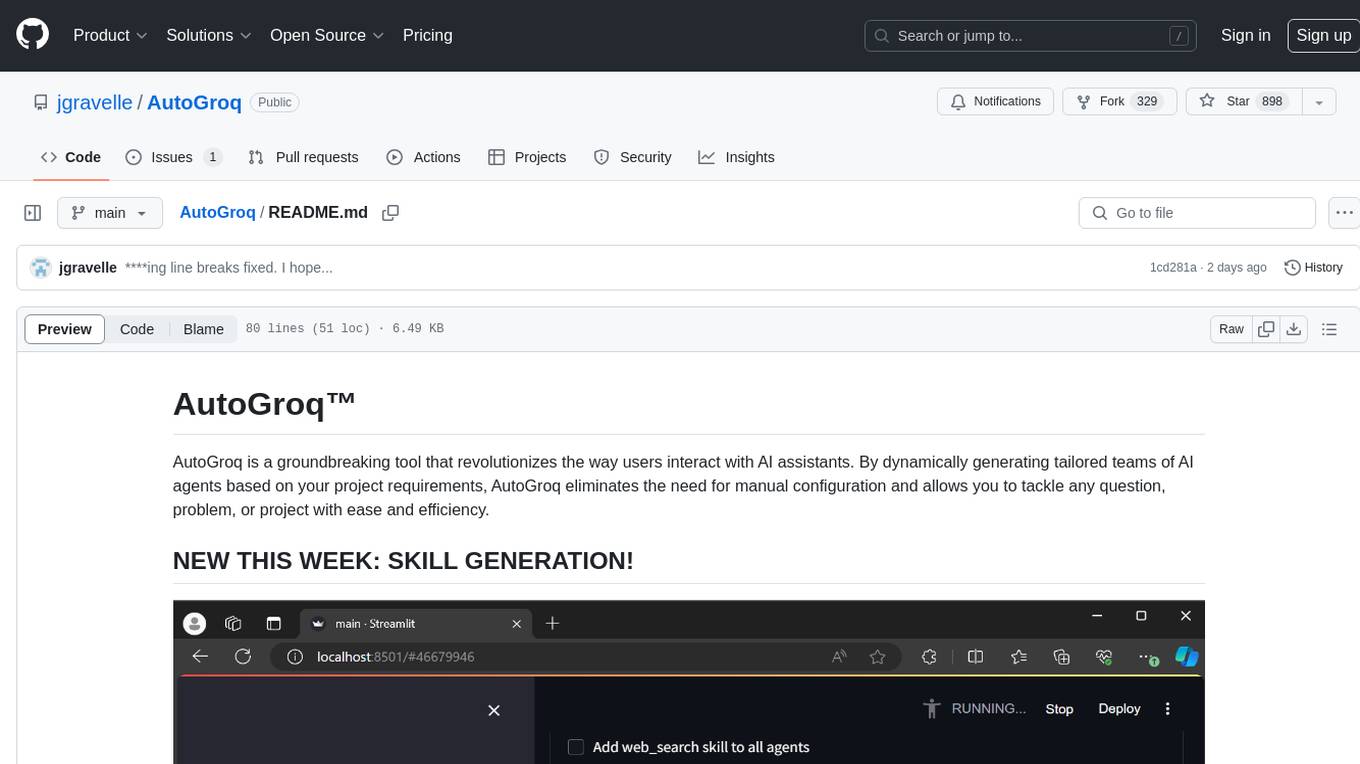

Humbug is a modular, extensible platform that aims to let you and your AIs work on ideas together. Think of it as an operating system for human-AI collaboration.

It's designed to let you work on many different types of problems, but it currently shines at helping you build software. As a demonstration, each version has been built using earlier versions of itself!

- Human–AI collaboration at the core

When you're using AI, you're no longer working alone. Humbug treats both humans and AIs as first-class actors. All tools, including the GUI, are designed to be available for both to use, so it's faster and easier to get things done.

- LLMs, lots of LLMs

Humbug lets you work with multiple large language models (LLMs) simultaneously, whether local, cloud-based, or a hybrid of both. It works with LLMs from Anthropic, DeepSeek, Google, Mistral, Ollama, OpenAI, xAI, and Z.ai, so you're not tied to any one provider. You can optimize for cost and stay future-proofed when you want to use something new. You can also switch between models seamlessly, even mid-conversation.
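To make the idea of switching models mid-conversation concrete, here is a minimal sketch. It is not Humbug's actual backend API: `Backend`, `EchoBackend`, and `Conversation` are hypothetical names invented for illustration, and `EchoBackend` stands in for a real provider client so the example runs offline. The point it shows is that the conversation owns the history, so a newly selected model sees everything said so far.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Backend(Protocol):
    """Hypothetical provider interface (an assumption, not Humbug's API)."""

    name: str

    def complete(self, history: list[dict[str, str]]) -> str: ...


@dataclass
class EchoBackend:
    """Offline stand-in for a real provider client."""

    name: str

    def complete(self, history: list[dict[str, str]]) -> str:
        # A real backend would send the whole history to its model.
        return f"[{self.name}] {history[-1]['content']}"


@dataclass
class Conversation:
    backend: Backend
    history: list[dict[str, str]] = field(default_factory=list)

    def ask(self, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        reply = self.backend.complete(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply

    def switch(self, backend: Backend) -> None:
        # The history is untouched, so the new model inherits the context.
        self.backend = backend


if __name__ == "__main__":
    conv = Conversation(EchoBackend("local-model"))
    print(conv.ask("Summarise this file"))
    conv.switch(EchoBackend("cloud-model"))
    print(conv.ask("Now suggest a refactoring"))
```

Keeping the history outside the backend is the design choice that makes a mid-conversation switch cheap: only the object that produces the next reply changes.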

- Mindspaces: project-centric workspaces

Every project lives in its own mindspace: a persistent, context-rich environment with isolated files, settings, and conversations.

- Structured context engineering with Metaphor

By going beyond ad-hoc prompts and making your intentions clear, you can get dramatically better results and lower operating costs. Metaphor, Humbug’s open context and prompting language, turns intent into repeatable, composable, and auditable workflows. It's a language for AI orchestration.

- Powerful, pluggable tools

Humbug extends your LLMs with task delegation, dynamic filesystem operations, a clock, a scientific calculator, and UI orchestration. Humbug’s tool system is flexible, secure, and designed to make it easy to add new capabilities. Task delegation allows one LLM to make use of one or more other LLMs. The UI supports simultaneous conversations, file editing with syntax highlighting, dynamic wiki pages, terminal emulators, a system shell, and a system log. UI orchestration means your AI can help you work and visualise things using any of these tools too. LLMs can check the status of terminal tabs and issue commands to them (subject to user approval).
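One way to picture a pluggable tool system with a user-approval gate is the sketch below. It is an illustration under stated assumptions, not Humbug's real tool API: `Tool`, `ToolRegistry`, `clock`, and `terminal_command` are hypothetical names, and the terminal tool only returns a description of what it would do rather than executing anything.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Tool:
    """Hypothetical tool description (an assumption, not Humbug's API)."""

    name: str
    run: Callable[[dict], str]
    needs_approval: bool = False


class ToolRegistry:
    def __init__(self, approve: Callable[[str, dict], bool]) -> None:
        self._tools: dict[str, Tool] = {}
        self._approve = approve  # callback that asks the human user

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def call(self, name: str, args: dict) -> str:
        tool = self._tools.get(name)
        if tool is None:
            return f"error: unknown tool {name!r}"
        # Sensitive tools run only if the user agrees.
        if tool.needs_approval and not self._approve(name, args):
            return "error: user declined"
        return tool.run(args)


def clock(_args: dict) -> str:
    return datetime.now(timezone.utc).isoformat()


def terminal_command(args: dict) -> str:
    # Placeholder: a real tool would forward the command to a terminal tab.
    return f"would send to terminal: {args['command']}"


if __name__ == "__main__":
    registry = ToolRegistry(approve=lambda name, args: False)
    registry.register(Tool("clock", clock))
    registry.register(Tool("terminal", terminal_command, needs_approval=True))
    print(registry.call("clock", {}))
    print(registry.call("terminal", {"command": "ls"}))
```

Adding a capability in this sketch means registering one more `Tool`; the approval callback is the single place where a human stays in the loop.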

- Open and extensible

Add new AI backends, tools, or integrations with minimal friction. Humbug is open-source and modular by design. You don't need to worry about being locked into a vendor tool and can add new features if you want them.

- Bootstrapped with LLMs

Each version of Humbug has been built using the previous version. This allowed over 80% of the code to be implemented by LLMs.

- OS-agnostic

Humbug provides OS-like concepts but doesn't try to replace your computer's operating system. It runs on top of Windows, macOS, or Linux, and provides a unified experience on all of them.

- Minimal dependencies

Humbug follows the pattern of most operating system kernels: it aims to be simple and largely self-contained. The code has only four external package dependencies beyond the Python standard library, so both you and your LLMs can understand almost every part from the one git repo.

- Not just a platform for developers

It's designed to help with any activities where you and your AIs need to work together on a problem. While it has a lot of tools for software developers, it has been designed to support a much wider set of needs. With its extensibility it's also easy to think about adding new tools for AIs, humans, or both.

- What's new: Latest updates

- Dive deeper: Getting started with Metaphor

- Download: Download Humbug

- Blog posts: Dave's blog posts about Humbug and Metaphor

- Developer notes: Dave's project notes

- Discord: Discord

- YouTube: @m6rai on YouTube

Humbug is open source and the project welcomes contributions. If you're interested in helping, then join the Discord server.

- Python 3.10 or higher

- You will need API keys for most cloud-based LLMs, but some are available for free, and Ollama will run locally without API keys.

- PySide6 (the GUI framework)

- qasync (allows the GUI framework to work nicely with async Python code)

- aiohttp (async HTTP client)

- certifi (SSL/TLS root certificates to allow TLS network connections without any other system changes)
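The requirements above can be checked before launching with a short script such as the following. This is a convenience sketch rather than part of Humbug: the function name `missing_prerequisites` is hypothetical, and it assumes each package's import name matches the name listed above.

```python
import sys
from importlib.util import find_spec

REQUIRED_PYTHON = (3, 10)
# Assumption: import names are the same as the package names listed above.
REQUIRED_MODULES = ("PySide6", "qasync", "aiohttp", "certifi")


def missing_prerequisites() -> list[str]:
    """Return a list of unmet requirements (empty when all are present)."""
    problems: list[str] = []
    if sys.version_info < REQUIRED_PYTHON:
        problems.append("Python 3.10 or higher")
    # find_spec returns None when a top-level module cannot be located.
    problems += [name for name in REQUIRED_MODULES if find_spec(name) is None]
    return problems


if __name__ == "__main__":
    missing = missing_prerequisites()
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("All prerequisites found.")
```

Run it inside the virtual environment you intend to use, since the result depends on which interpreter and site-packages are active.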

- Create and activate a virtual environment:

Linux and macOS:

python -m venv venv

source venv/bin/activate

Windows:

python -m venv venv

venv\Scripts\activate

- Install build tools:

pip install build

- Install in development mode:

pip install -e .

- Launch the application:

python -m humbug

- Initial configuration:

See Getting Started with Metaphor for a step-by-step guide to getting Humbug up and running.

Alternative AI tools for humbug

Similar Open Source Tools

mastra

Mastra is an opinionated TypeScript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers and offers tools for building workflows and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

serena

Serena is a powerful coding agent that integrates with existing LLMs to provide essential semantic code retrieval and editing tools. It is free to use and does not require API keys or subscriptions. Serena can be used for coding tasks such as analyzing, planning, and editing code directly on your codebase. It supports various programming languages and offers semantic code analysis capabilities through language servers. Serena can be integrated with different LLMs using the model context protocol (MCP) or Agno framework. The tool provides a range of functionalities for code retrieval, editing, and execution, making it a versatile coding assistant for developers.

AppFlowy

AppFlowy.IO is an open-source alternative to Notion, providing users with control over their data and customizations. It aims to offer functionality, data security, and cross-platform native experience to individuals, as well as building blocks and collaboration infra services to enterprises and hackers. The tool is built with Flutter and Rust, supporting multiple platforms and emphasizing long-term maintainability. AppFlowy prioritizes data privacy, reliable native experience, and community-driven extensibility, aiming to democratize the creation of complex workplace management tools.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

Robyn

Robyn is an experimental, semi-automated, open-source Marketing Mix Modeling (MMM) package from Meta Marketing Science. It uses various machine learning techniques to define media channel efficiency and effectiveness and to explore adstock rates and saturation curves. It is built for granular datasets with many independent variables, which makes it especially suitable for digital and direct response advertisers with rich data sources. It aims to democratize MMM, make it accessible to advertisers of all sizes, and contribute to the measurement landscape.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

aide

Aide is an Open Source AI-native code editor that combines the powerful features of VS Code with advanced AI capabilities. It provides a combined chat + edit flow, proactive agents for fixing errors, inline editing widget, intelligent code completion, and AST navigation. Aide is designed to be an intelligent coding companion, helping users write better code faster while maintaining control over the development process.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

tau

Tau is a framework for building low maintenance & highly scalable cloud computing platforms that software developers will love. It aims to solve the high cost and time required to build, deploy, and scale software by providing a developer-friendly platform that offers autonomy and flexibility. Tau simplifies the process of building and maintaining a cloud computing platform, enabling developers to achieve 'Local Coding Equals Global Production' effortlessly. With features like auto-discovery, content-addressing, and support for WebAssembly, Tau empowers users to create serverless computing environments, host frontends, manage databases, and more. The platform also supports E2E testing and can be extended using a plugin system called orbit.

digma

Digma is a Continuous Feedback platform that provides code-level insights related to performance, errors, and usage during development. It empowers developers to own their code all the way to production, improving code quality and preventing critical issues. Digma integrates with OpenTelemetry traces and metrics to generate insights in the IDE, helping developers analyze code scalability, bottlenecks, errors, and usage patterns.

awesome-vibe-coding

Awesome Vibe Coding is a curated list of references for vibe coding, which involves collaborating with AI to write code. It includes browser-based tools, IDEs, code editors, desktop apps, plugins, command line tools, and documentation for AI coding. The concept of vibe coding is about fully embracing the vibes, exponentials, and forgetting that the code even exists while building projects or web apps. The repository provides a collection of tools and resources to facilitate the process of coding with a focus on creativity and efficiency.

paddler

Paddler is an open-source LLM load balancer and serving platform designed for digital products and users who prioritize privacy, reliability, cost control, and independence from closed-source model providers. It allows running inference, deploying, and scaling LLMs on personal infrastructure, offering a seamless developer experience. Key features include inference through llama.cpp engine, LLM-specific load balancing, dynamic model swapping, request buffering, and built-in web admin panel for management and monitoring. Paddler is suitable for product teams needing LLM inference, DevOps/LLMOps teams deploying LLMs at scale, organizations handling sensitive data, and product leaders aiming for predictable LLM costs and reliable model performance.

AutoGroq

AutoGroq is a revolutionary tool that dynamically generates tailored teams of AI agents based on project requirements, eliminating manual configuration. It enables users to effortlessly tackle questions, problems, and projects by creating expert agents, workflows, and skillsets with ease and efficiency. With features like natural conversation flow, code snippet extraction, and support for multiple language models, AutoGroq offers a seamless and intuitive AI assistant experience for developers and users.

For similar tasks

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

sirji

Sirji is an agentic AI framework for software development where various AI agents collaborate via a messaging protocol to solve software problems. It uses standard or user-generated recipes to list tasks and tips for problem-solving. Agents in Sirji are modular AI components that perform specific tasks based on custom pseudo code. The framework is currently implemented as a Visual Studio Code extension, providing an interactive chat interface for problem submission and feedback. Sirji sets up local or remote development environments by installing dependencies and executing generated code.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

Awesome-CVPR2024-ECCV2024-AIGC

A collection of papers and code for CVPR 2024 and ECCV 2024 AIGC (AI-Generated Content). This repository compiles and organizes research papers and their accompanying code repositories. It serves as a resource for anyone interested in the latest advances in computer vision and generative AI, and it encourages collaboration and contributions from the community through stars, forks, and pull requests.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.