Best AI tools for < Train With Data >

20 - AI tool Sites

BotB9

BotB9 is an AI chatbot application that is trained with your business data to provide personalized and programmable video guides. It serves as the missing link between businesses and their customers, offering features such as lead capture, order checkout, and customizable templates for various use cases. With BotB9, users can create their own AI chatbots without the need for coding, embed them on websites and mobile apps, and train them with specific business information to answer sales and support questions. The application allows unlimited chats, custom branding, and theme customization, making it a versatile tool for businesses looking to enhance customer interactions and streamline processes.

Spheria

Spheria is a no-code platform that allows users to create their own official AI clone, which learns directly from the user's experiences, skills, and life stories. Unlike other AI tools that rely on generic data, Spheria celebrates individuality and personal growth. The platform emphasizes privacy, ethics, and user control over data, offering a secure environment for users to interact with their AI double.

Sherpa.ai

Sherpa.ai is a Federated Learning Platform that enables data collaborations without sharing data. It allows organizations to build and train models with sensitive data from various sources while preserving privacy and complying with regulations. The platform offers enterprise-grade privacy-compliant solutions for improving AI models and fostering collaborations in a secure manner. Sherpa.ai is trusted by global organizations to maximize the value of data and AI, improve results, and ensure regulatory compliance.

Sherpa.ai

Sherpa.ai is a SaaS platform that enables data collaborations without sharing data. It allows businesses to build and train models with sensitive data from different parties, without compromising privacy or regulatory compliance. Sherpa.ai's Federated Learning platform is used in various industries, including healthcare, financial services, and manufacturing, to improve AI models, accelerate research, and optimize operations.
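The idea of training across parties without sharing raw data is commonly illustrated with federated averaging (FedAvg). The sketch below is a generic toy version, not Sherpa.ai's implementation or API: the function names, the one-dimensional linear model, and the learning rate are all assumptions made for illustration. Each client trains locally and only model weights leave the client.

```python
from typing import List, Tuple


def local_update(weights: List[float], data: List[Tuple[float, float]],
                 lr: float = 0.1) -> List[float]:
    """Hypothetical client step: one SGD pass for y = w*x + b on private data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y  # prediction error on one local example
        w -= lr * err * x
        b -= lr * err
    return [w, b]


def federated_average(client_weights: List[List[float]],
                      client_sizes: List[int]) -> List[float]:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def federated_round(global_weights: List[float],
                    client_data: List[List[Tuple[float, float]]]) -> List[float]:
    """One round: clients train locally, the server only sees their weights."""
    updates = [local_update(list(global_weights), d) for d in client_data]
    sizes = [len(d) for d in client_data]
    return federated_average(updates, sizes)
```

Calling `federated_round` repeatedly with the returned weights plays the role of successive training rounds; the raw `(x, y)` pairs never leave the per-client lists.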

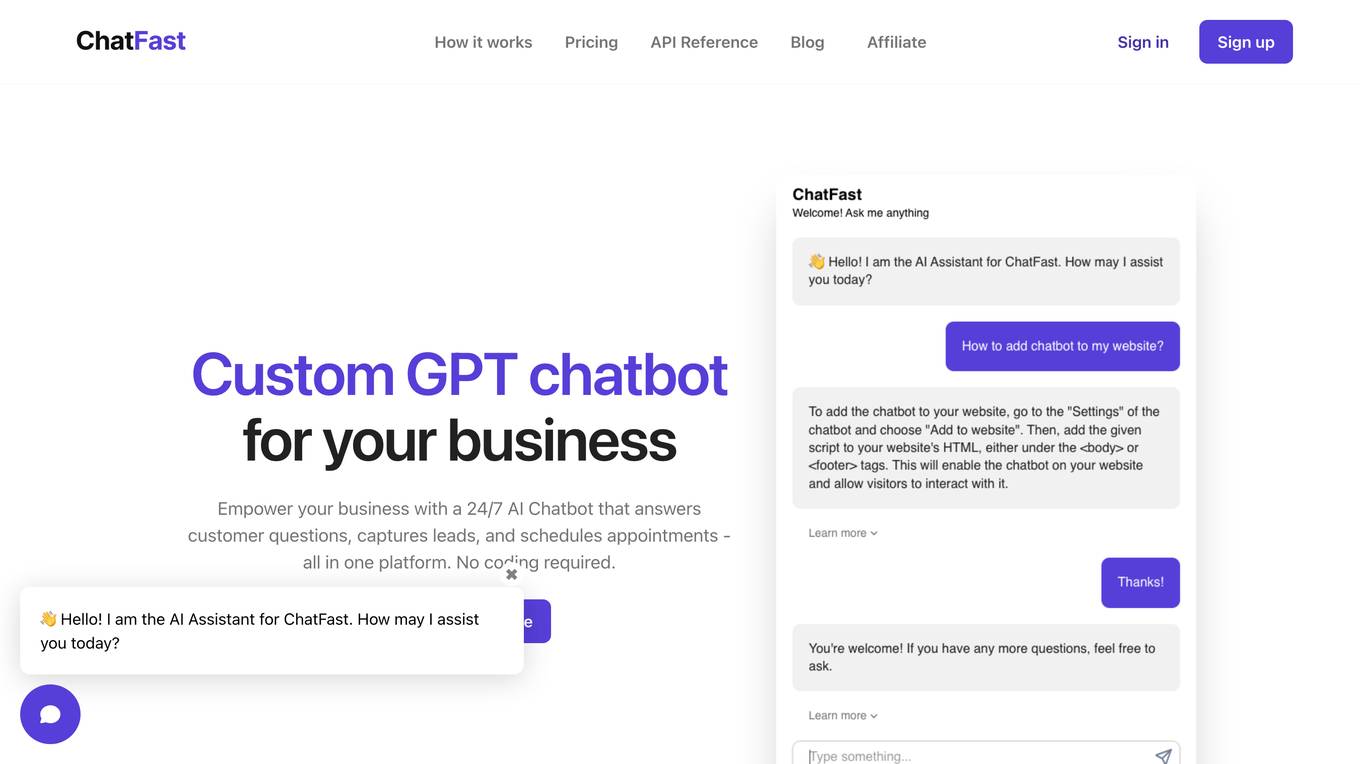

ChatFast

ChatFast is a platform that allows businesses to create custom GPT chatbots using their own data. These chatbots can be used to answer customer questions, capture leads, and schedule appointments. ChatFast is easy to use and requires no coding. It is trusted by thousands of businesses and provides a range of powerful features, including the ability to train chatbots on multiple data sources, revise responses, capture leads, and create smart forms.

Ferdinand

Ferdinand is a platform designed to help users build their data culture and skills effortlessly. It offers interactive mini-courses in Data & Analytics through a chatbot integrated with Slack. Users can learn from various themes such as marketing, sales, and product management. Ferdinand removes barriers to learning by delivering courses directly in Slack and allowing team members to track progress. Additionally, the platform provides a robust payments platform with features like simplified card issuing, streamlined checkout, smart dashboard, optimized platforms, and faster transaction approval.

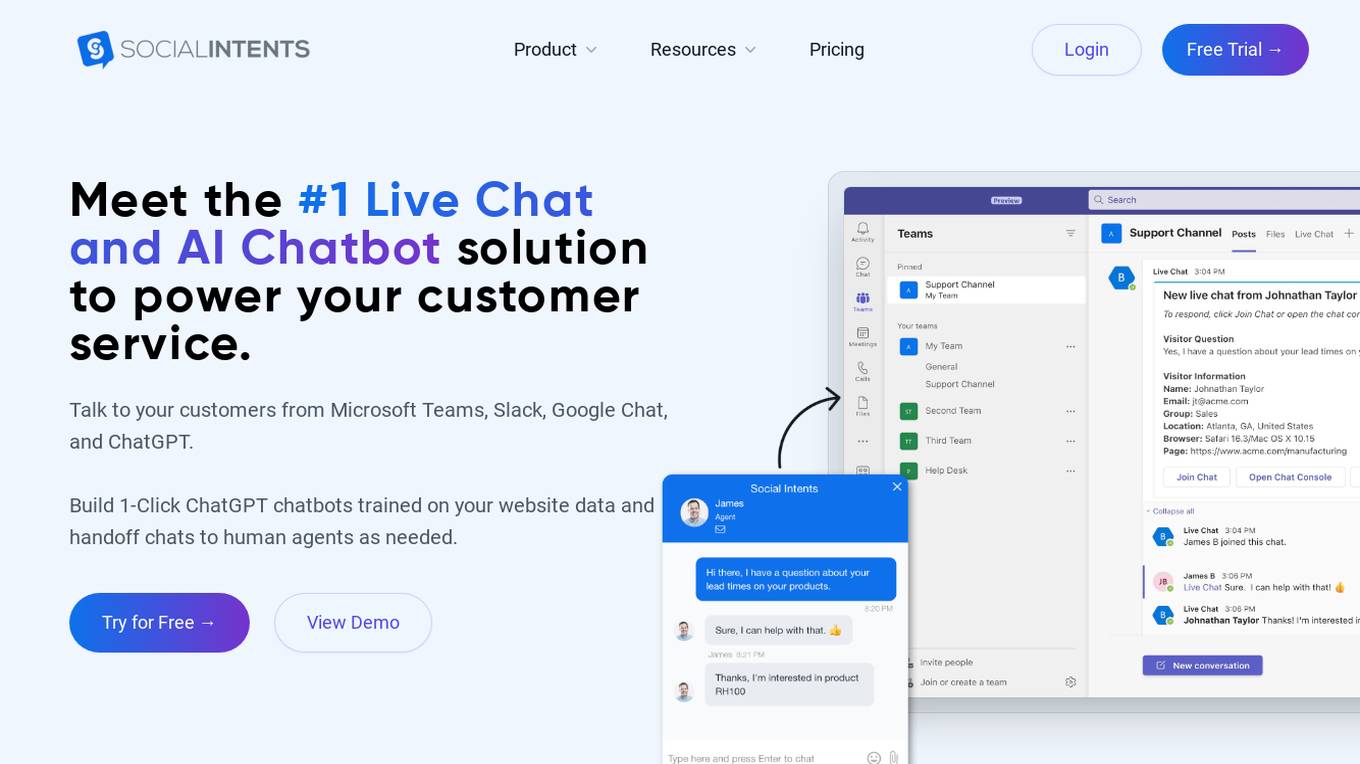

Social Intents

Social Intents is a live chat and AI chatbot solution that helps businesses provide real-time customer support, generate leads, and automate sales processes. It integrates with popular communication platforms such as Microsoft Teams, Slack, Google Chat, Zoom, and Webex, allowing businesses to manage customer interactions from a single dashboard. Social Intents also offers pre-trained ChatGPT chatbots that can be customized to handle specific customer queries and provide personalized responses. With its advanced features and integrations, Social Intents aims to enhance customer engagement, reduce support costs, and drive sales for businesses.

SQLAI.ai

SQLAI.ai is a professional SQL multi-tool that leverages AI technology to generate, fix, explain, and optimize SQL queries and databases. It enables users to interact with SQL using everyday language, effortlessly train AI to understand database schemas, and benefit from AI-driven recommendations for query optimization. The platform caters to a wide range of users, from beginners to experts, by simplifying SQL tasks and providing valuable insights for database management. With features like generating SQL data, data analytics, and real-time data insights, SQLAI.ai revolutionizes the way users interact with databases, making SQL tasks simpler, more efficient, and accessible to all.

Datacog

Datacog is an AI application that offers a comprehensive solution for efficient data warehouse management, application integration, and machine learning. It enables organizations to leverage the complete capabilities of their data assets through intuitive data organization and model training features. With zero configuration, instant deployment, scalability, and real-time monitoring, Datacog simplifies model training and streamlines decision-making. Join the ranks of industry leaders who have harnessed the power of organized data and automation with Datacog.

IntegraBot

IntegraBot is an advanced AI platform that allows users to develop AI chatbots without coding. Users can choose from different AI models, integrate tools and APIs, and train their agents with company data. The platform offers features like creating custom tools, importing data, and integrating with various applications. IntegraBot ensures data security, compliance with regulations, and provides best practices for AI usage.

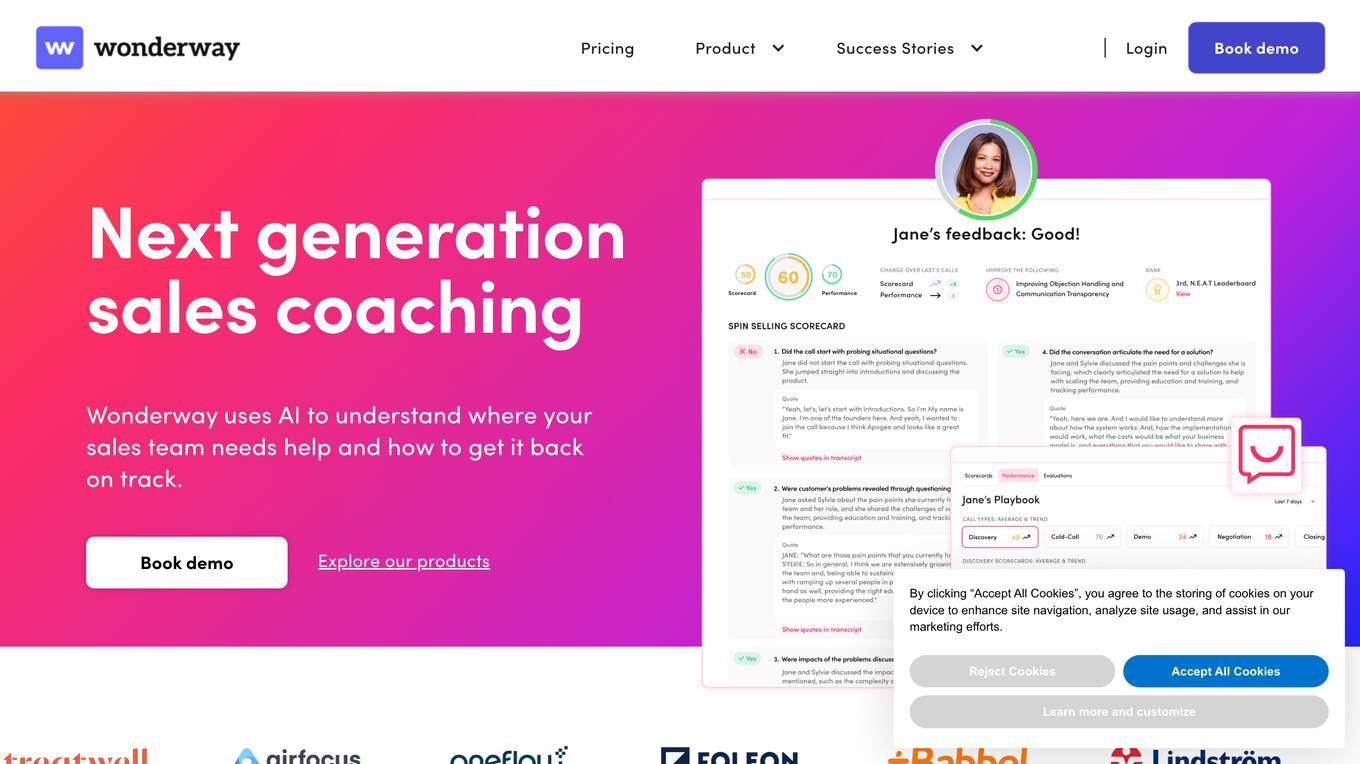

Wonderway

Wonderway is an AI Sales Coach and Sales Training Platform that uses AI to provide automated sales coaching on every call. It helps sales teams train, upskill, and certify their members, leading to increased conversion rates and reduced ramp time. The platform offers personalized training, aligns teams faster, and improves sales onboarding. Wonderway's AI technology understands where sales teams need help and provides recommendations for improvement, making it a valuable tool for sales professionals.

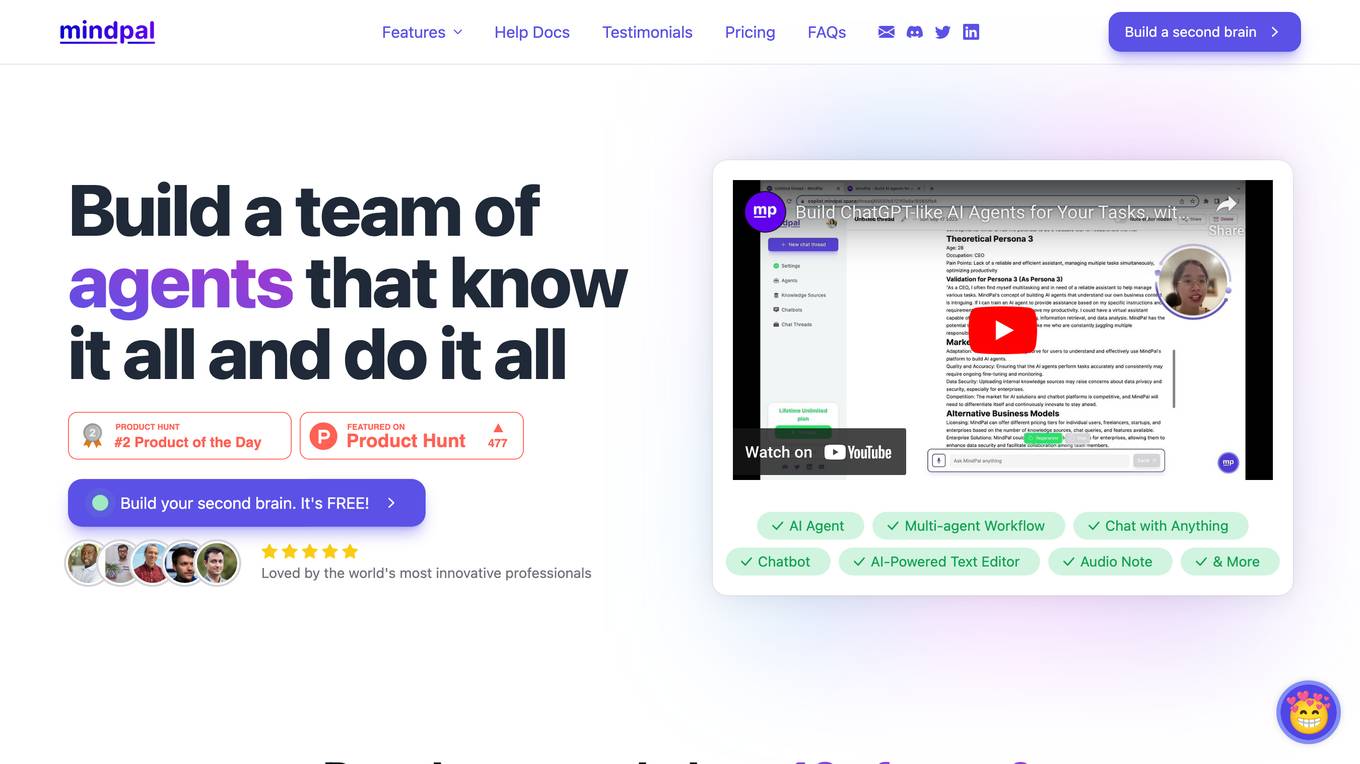

MindPal

MindPal is an AI application that empowers users to build their AI workforce to automate tasks and workflows. It offers a wide range of features to streamline processes and enhance productivity. Users can create specialized AI agents, train them with their own data, and connect them to various tools. MindPal is recommended by professionals for its efficiency in automating tasks and generating valuable insights.

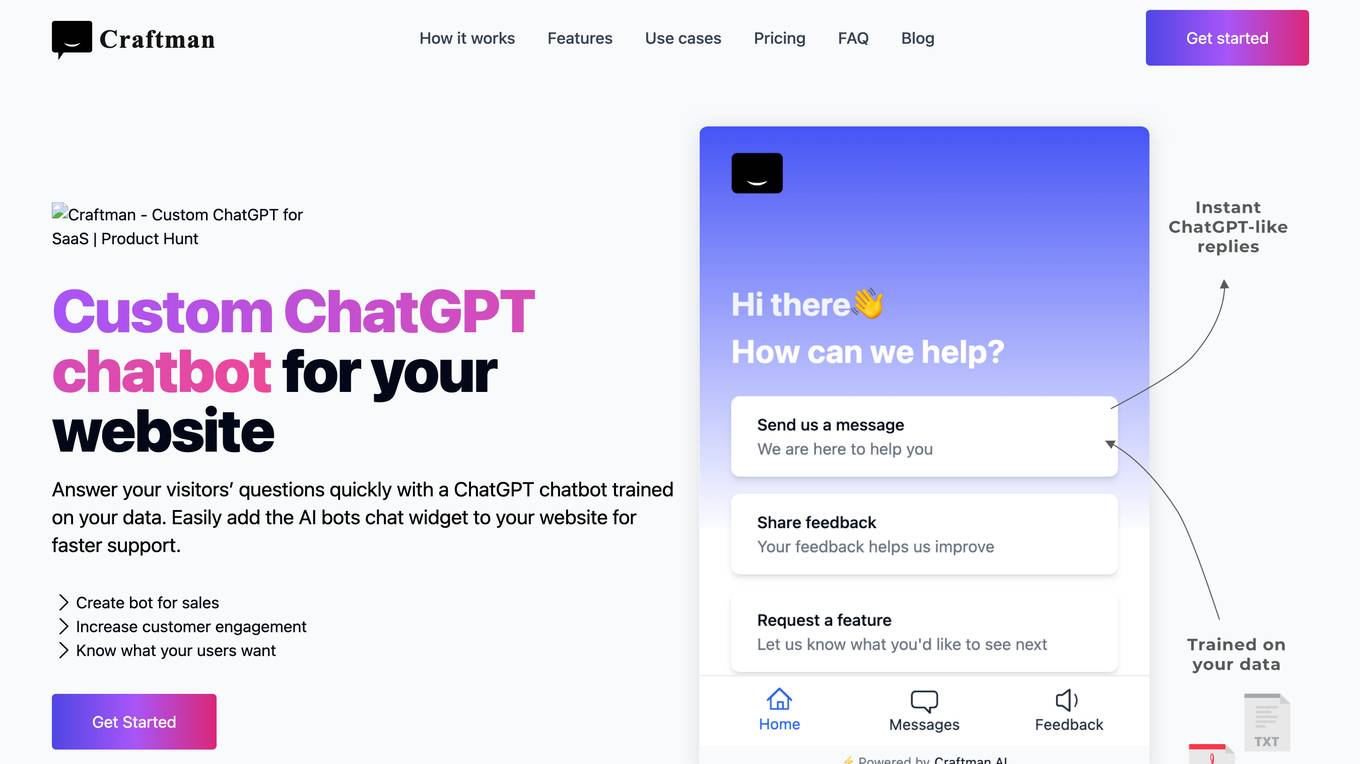

Craftman

Craftman is an AI chatbot builder that allows users to create custom ChatGPT chatbots for sales and support. The platform enables users to train ChatGPT with their own data and easily add the AI bot's chat widget to their website for faster and more efficient customer support. Craftman offers features such as instant responses to visitor questions, effortless feedback collection, a direct feature request channel, and personalized user engagement. The application provides advantages like 24/7 availability, instant responses, cost-efficiency, personalization, and enhanced user engagement. However, some disadvantages include the need for internet connectivity, potential language limitations, and initial setup time. Craftman is designed to streamline customer interactions, boost sales, and improve user satisfaction through AI-driven chatbot technology.

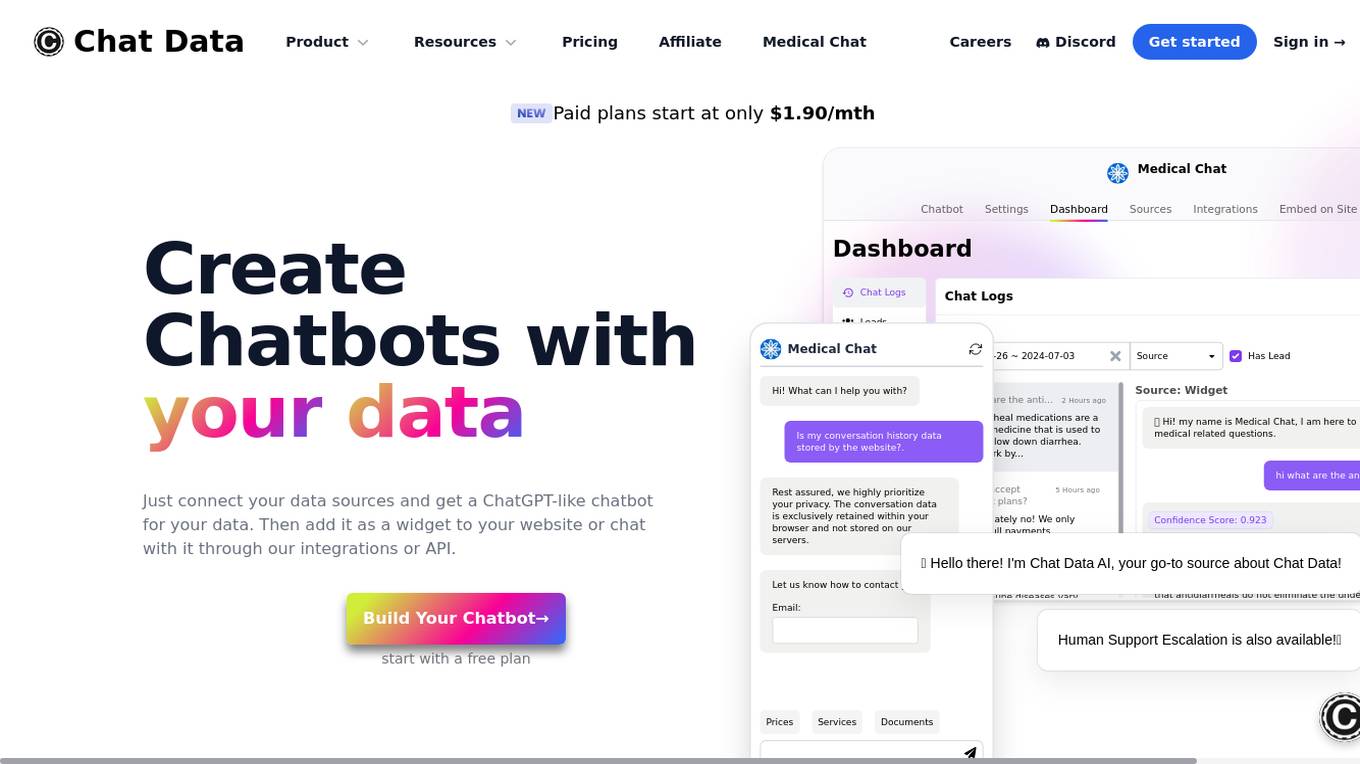

Chat Data

Chat Data is an AI application that allows users to create custom chatbots using their own data sources. Users can easily build and integrate chatbots with their websites or other platforms, personalize the chatbot's interface, and access advanced features like human support escalation and product updates synchronization. The platform offers HIPAA-compliant medical chat models and ensures data privacy by retaining conversation data exclusively within the user's browser. With Chat Data, users can enhance customer interactions, gather insights, and streamline communication processes.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

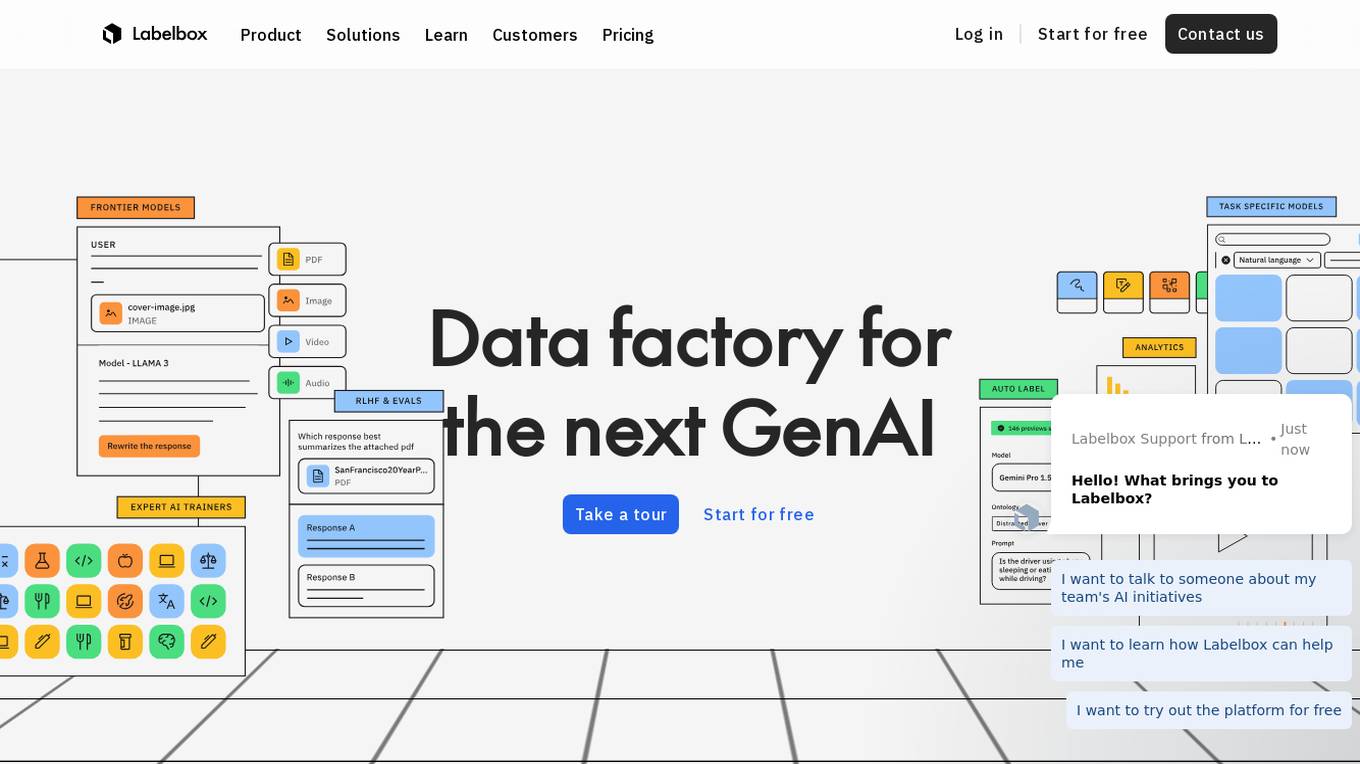

Labelbox

Labelbox is a data factory platform that empowers AI teams to manage data labeling, train models, and create better data with an internet-scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates global data labeling infrastructure and operations for AI workloads, providing an expert human network for data labeling in various domains. The platform also includes AI-assisted alignment for maximum efficiency, data curation, model training, and labeling services. Customers achieve breakthroughs with high-quality data through Labelbox.

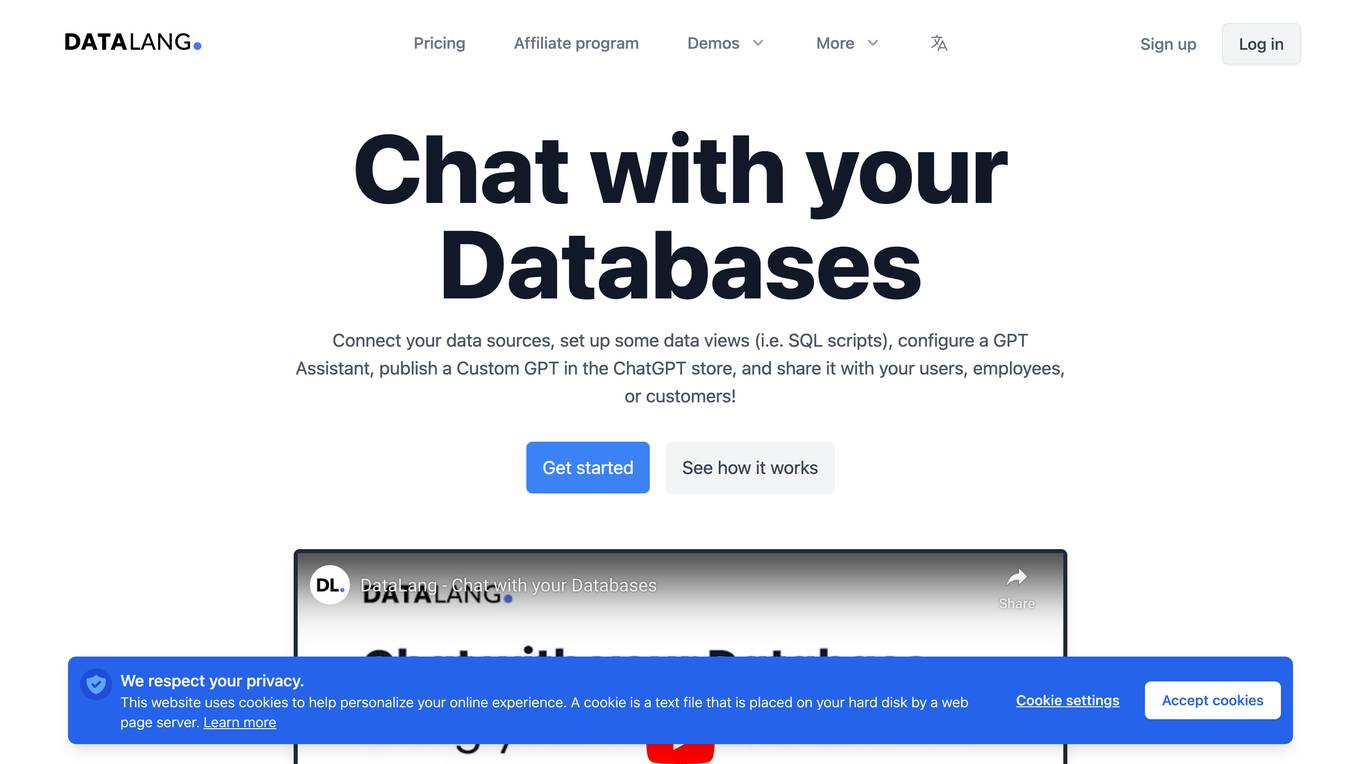

DataLang

DataLang is a tool that allows you to chat with your databases: you expose a specific set of data (using SQL) to train GPT, and then chat with it in natural language. You can also use DataLang to automatically make your SQL views available via API, share them privately with your users, or make them public.

Synthesis AI

Synthesis AI is a synthetic data platform that enables more capable and ethical computer vision AI. It provides on-demand labeled images and videos, photorealistic images, and 3D generative AI to help developers build better models faster. Synthesis AI's products include Synthesis Humans, which allows users to create detailed images and videos of digital humans with rich annotations; Synthesis Scenarios, which enables users to craft complex multi-human simulations across a variety of environments; and a range of applications for industries such as ID verification, automotive, avatar creation, virtual fashion, AI fitness, teleconferencing, visual effects, and security.

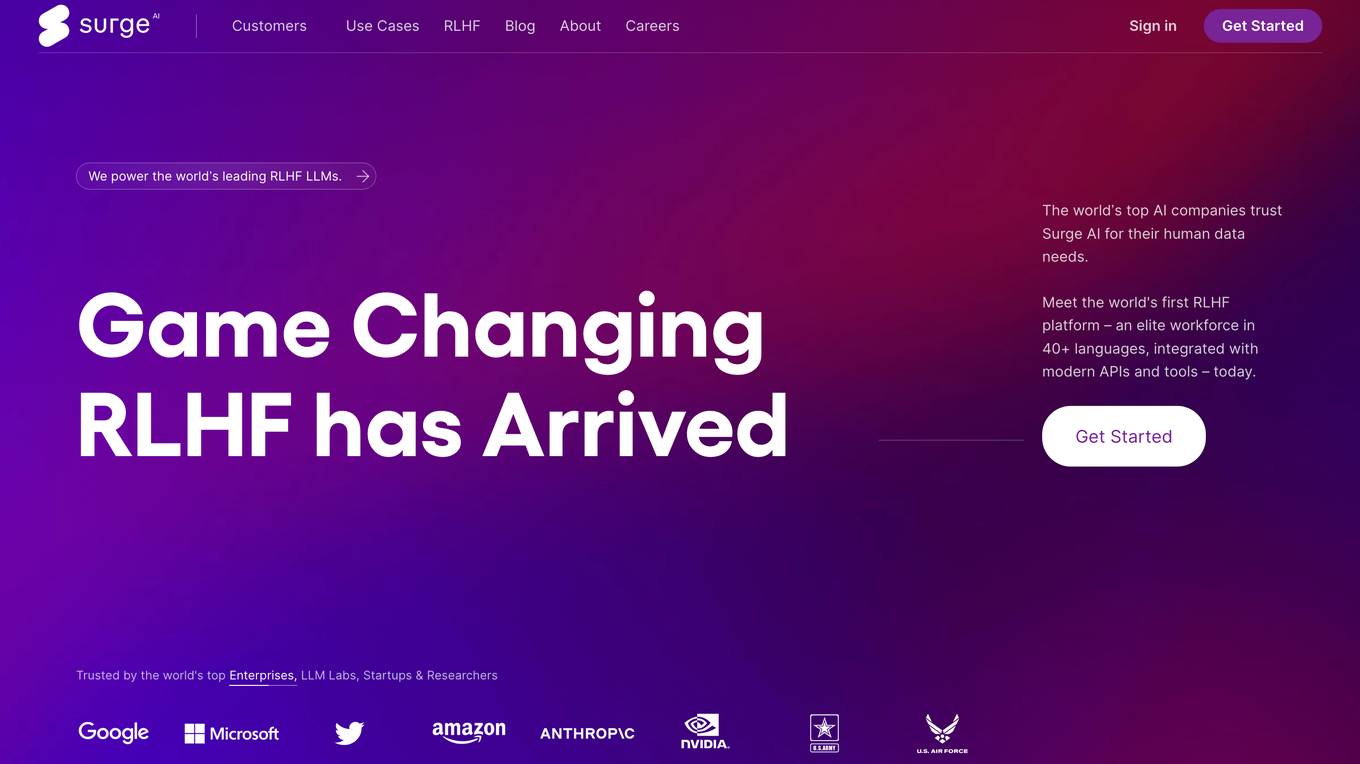

Surge AI

Surge AI is a data labeling platform that provides human-generated data for training and evaluating large language models (LLMs). It offers a global workforce of annotators who can label data in over 40 languages. Surge AI's platform is designed to be easy to use and integrates with popular machine learning tools and frameworks. The company's customers include leading AI companies, research labs, and startups.

Datagen

Datagen is a platform that provides synthetic data for computer vision. Synthetic data is artificially generated data that can be used to train machine learning models. Datagen's data is generated using a variety of techniques, including 3D modeling, computer graphics, and machine learning. The company's data is used by a variety of industries, including automotive, security, smart office, fitness, cosmetics, and facial applications.

20 - Open Source AI Tools

DataDreamer

DataDreamer is a powerful open-source Python library designed for prompting, synthetic data generation, and training workflows. It is simple, efficient, and research-grade, allowing users to create prompting workflows, generate synthetic datasets, and train models with ease. The library is built for researchers, by researchers, focusing on correctness, best practices, and reproducibility. It offers features like aggressive caching, resumability, support for bleeding-edge techniques, and easy sharing of datasets and models. DataDreamer enables users to run multi-step prompting workflows, generate synthetic datasets for various tasks, and train models by aligning, fine-tuning, instruction-tuning, and distilling them using existing or synthetic data.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

Online-RLHF

This repository, Online RLHF, focuses on aligning large language models (LLMs) through online iterative Reinforcement Learning from Human Feedback (RLHF). It aims to bridge the gap in existing open-source RLHF projects by providing a detailed recipe for online iterative RLHF. The workflow presented here has been shown to outperform offline counterparts in recent LLM literature, achieving comparable or better results than LLaMA3-8B-instruct using only open-source data. The repository includes model releases for the SFT, reward, and RLHF models, along with installation instructions for both inference and training environments. Users can follow step-by-step guidance for supervised fine-tuning, reward modeling, data generation, data annotation, and training, ultimately enabling iterative training to run automatically.

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of efficient LLMs research. We organize the literature in a taxonomy consisting of three main categories, covering distinct yet interconnected efficient LLMs topics from **model-centric**, **data-centric**, and **framework-centric** perspectives, respectively. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts.

awesome-generative-ai

A curated list of Generative AI projects, tools, artworks, and models

MockingBird

MockingBird is a toolbox designed for Mandarin speech synthesis using PyTorch. It supports multiple datasets such as aidatatang_200zh, magicdata, aishell3, and data_aishell. The toolbox can run on Windows, Linux, and M1 macOS, providing easy and effective speech synthesis with pretrained encoder/vocoder models. It is webserver-ready for remote calling. Users can train their own models or use existing ones for the encoder, synthesizer, and vocoder. The toolbox offers a demo video and detailed setup instructions for installation and model training.

co-llm

Co-LLM (Collaborative Language Models) is a tool for learning to decode collaboratively with multiple language models. It provides a method for data processing, training, and inference using a collaborative approach. The tool involves steps such as formatting/tokenization, scoring logits, initializing Z vector, deferral training, and generating results using multiple models. Co-LLM supports training with different collaboration pairs and provides baseline training scripts for various models. In inference, it uses 'vllm' services to orchestrate models and generate results through API-like services. The tool is inspired by allenai/open-instruct and aims to improve decoding performance through collaborative learning.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.
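Two of the building blocks named above, RMSNorm and the SwiGLU activation, are small enough to sketch in plain Python. This is a minimal illustration under stated assumptions, not code from the repository: the function names, the list-based vectors, and the `eps` value are chosen here for readability, and Rotary Embeddings are left out.

```python
import math
from typing import List


def rms_norm(x: List[float], gain: List[float], eps: float = 1e-8) -> List[float]:
    """RMSNorm: divide by the root-mean-square of x, then apply a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]


def silu(v: float) -> float:
    """SiLU (swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def matvec(x: List[float], w: List[List[float]]) -> List[float]:
    """Row vector x (length d) times matrix w (d rows, h columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def swiglu(x: List[float], w_gate: List[List[float]],
           w_up: List[List[float]]) -> List[float]:
    """SwiGLU: SiLU(x @ w_gate) multiplied elementwise with (x @ w_up)."""
    gate = matvec(x, w_gate)
    up = matvec(x, w_up)
    return [silu(g) * u for g, u in zip(gate, up)]
```

In a LLaMA-style block, `rms_norm` would be applied before the attention and feed-forward sublayers (pre-normalization), and `swiglu` would sit inside the feed-forward sublayer ahead of a final output projection.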

DALM

The DALM (Domain Adapted Language Modeling) toolkit is designed to unify general LLMs with vector stores to ground AI systems in efficient, factual domains. It provides developers with tools to build on top of Arcee's open source Domain Pretrained LLMs, enabling organizations to deeply tailor AI according to their unique intellectual property and worldview. The toolkit contains code for fine-tuning a fully differentiable Retrieval Augmented Generation (RAG-end2end) architecture, incorporating the in-batch negatives concept alongside RAG's marginalization for efficiency. It includes training scripts for both retriever and generator models, evaluation scripts, data processing code, and synthetic data generation code.
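The in-batch negatives idea mentioned above can be sketched as a contrastive loss in which, for each query, the passages paired with the other queries in the same batch serve as negatives. This is a generic toy version and not DALM's training code: the function names and the dot-product scoring are assumptions, and RAG's marginalization over retrieved passages is not shown.

```python
import math
from typing import List


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def in_batch_negative_loss(queries: List[List[float]],
                           passages: List[List[float]]) -> float:
    """Mean cross-entropy where passages[i] is the positive for queries[i]
    and every other passage in the batch is treated as a negative."""
    losses = []
    for i, q in enumerate(queries):
        scores = [dot(q, p) for p in passages]
        m = max(scores)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        losses.append(log_z - scores[i])  # equals -log softmax(scores)[i]
    return sum(losses) / len(losses)
```

The loss drops when each query embedding scores its own passage higher than the other passages in the batch, so no separately mined negatives are required.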

LESS

This repository contains the code for the paper 'LESS: Selecting Influential Data for Targeted Instruction Tuning'. The work proposes a data selection method to choose influential data for inducing a target capability. It includes steps for warmup training, building the gradient datastore, selecting data for a task, and training with the selected data. The repository provides tools for data preparation, data selection pipeline, and evaluation of the model trained on the selected data.
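The "gradient datastore, then select" flow can be pictured with a toy example. The sketch below assumes each training example is already summarized by a small gradient feature vector and ranks examples by cosine similarity to a target-task vector; that scoring rule, the function names, and the dictionary datastore are illustrative assumptions, not the repository's actual pipeline.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector has zero norm."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den if den else 0.0


def select_top_k(datastore: Dict[str, List[float]],
                 target_grad: List[float], k: int) -> List[str]:
    """Return the ids of the k examples whose gradient features align best
    with the target task's gradient features."""
    ranked = sorted(
        datastore.items(),
        key=lambda item: cosine(item[1], target_grad),
        reverse=True,
    )
    return [example_id for example_id, _ in ranked[:k]]


# Hypothetical datastore: example id -> gradient feature vector.
datastore = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
selected = select_top_k(datastore, target_grad=[1.0, 0.1], k=2)
```

Here `selected` holds the ids that would be passed to the final training step on the selected data.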

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

deeplake

Deep Lake is a Database for AI powered by a storage format optimized for deep-learning applications. Deep Lake can be used for (1) storing data and vectors while building LLM applications and (2) managing datasets while training deep learning models. Deep Lake simplifies the deployment of enterprise-grade LLM-based products by offering storage for all data types (embeddings, audio, text, videos, images, PDFs, annotations, etc.), querying and vector search, data streaming while training models at scale, data versioning and lineage, and integrations with popular tools such as LangChain, LlamaIndex, Weights & Biases, and many more. Deep Lake works with data of any size, is serverless, and enables you to store all of your data in your own cloud and in one place. Deep Lake is used by Intel, Bayer Radiology, Matterport, ZERO Systems, Red Cross, Yale, and Oxford.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

baal

Baal is an active learning library that supports both industrial applications and research use cases. It provides a framework for Bayesian active learning methods such as Monte-Carlo Dropout, MCDropConnect, Deep ensembles, and Semi-supervised learning. Baal helps in labeling the most uncertain items in the dataset pool to improve model performance and reduce annotation effort. The library is actively maintained by a dedicated team and has been used in various research papers for production and experimentation.
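Picking the most uncertain items with Monte-Carlo Dropout can be sketched without any library. The example below does not use Baal's API: it assumes the class probabilities from several stochastic forward passes are already available, averages them, and ranks pool items by predictive entropy. All names and the sample numbers are hypothetical.

```python
import math
from typing import Dict, List


def predictive_entropy(mc_probs: List[List[float]]) -> float:
    """Entropy of the mean class distribution over stochastic forward passes."""
    n_passes = len(mc_probs)
    n_classes = len(mc_probs[0])
    mean = [sum(p[c] for p in mc_probs) / n_passes for c in range(n_classes)]
    return -sum(p * math.log(p) for p in mean if p > 0.0)


def most_uncertain(pool: Dict[str, List[List[float]]], k: int) -> List[str]:
    """Return the ids of the k pool items with the highest predictive entropy."""
    ranked = sorted(pool, key=lambda item_id: predictive_entropy(pool[item_id]),
                    reverse=True)
    return ranked[:k]


# Hypothetical pool: item id -> class probabilities from two dropout passes.
pool = {
    "confident": [[0.99, 0.01], [0.98, 0.02]],
    "unsure": [[0.9, 0.1], [0.1, 0.9]],
}
to_label = most_uncertain(pool, k=1)
```

The items in `to_label` are the ones that would be sent to annotators first, which is how this kind of ranking reduces annotation effort.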

20 - OpenAI Gpts

👑 Data Privacy for Home Inspection & Appraisal 👑

Home Inspection and Appraisal Services have access to personal property and related information, requiring them to be vigilant about data privacy.

👑 Data Privacy for PI & Security Firms 👑

Private Investigators and Security Firms, given the nature of their work, handle highly sensitive information and must maintain strict confidentiality and data privacy standards.

👑 Data Privacy for Real Estate Agencies 👑

Real Estate Agencies and Brokers deal with personal data of clients, including financial information and preferences, requiring careful handling and protection of such data.

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

Solar Pro Advisor

Your guide in solar sales mastery, offering in-depth resources for handling objections and effective marketing strategies. Built on over 7 years of proprietary data and a knowledge base from within the solar industry, with battle-tested ads and real training.

ChatXGB

GPT chatbot that helps you with technical questions related to the XGBoost algorithm and library.

Neural Network Creator

Assists with creating, refining, and understanding neural networks.

TonyAIDeveloperResume

Chat with my resume to see if I am a good fit for your AI-related job.

👑 Data Privacy for Architecture & Construction 👑

Architecture and Construction Firms handle sensitive project data, client information, and architectural plans, necessitating strict data privacy measures.

The OG Coder

Expert full stack developer with focus on customer-centric solutions and end-to-end architecture.

Explanator

Technical expert blending Kahneman's cognitive insights with Carmack's clarity.

Custom GPT Builder

Create personalized GPTs with my simple builder. Click the conversation starter (starting with ###) to begin.

E&L and Pharmaceutical Regulatory Compliance AI

This GPT chat AI is specialized in understanding Extractables and Leachables studies, aligning with pharmaceutical guidelines, and aiding in the design and interpretation of relevant experiments.