Best AI tools for Mitigating Incidents

20 - AI Tool Sites

CrowdStrike

CrowdStrike is a cloud-based cybersecurity platform that provides endpoint protection, threat intelligence, and incident response services. It uses artificial intelligence (AI) to detect and prevent cyberattacks. CrowdStrike's platform is designed to be scalable and easy to use, and it can be deployed on-premises or in the cloud. CrowdStrike has a global customer base of over 23,000 organizations, including many Fortune 500 companies.

Torq

Torq is an AI-driven platform that offers Security Hyperautomation Solutions, empowering security teams to detect, prioritize, and respond to threats faster. It provides a range of features and capabilities such as AI Agents, AI SOC Analyst, Case Management, and Integrations. Torq is trusted by top security teams worldwide and is recognized for its ability to mitigate alert fatigue, false positives, and staff burnout. The platform is designed to usher in the era of Autonomous SOC by harnessing AI to enhance security operations.

Adversa AI

Adversa AI is a platform that provides Secure AI Awareness, Assessment, and Assurance solutions for various industries to mitigate AI risks. The platform focuses on LLM Security, Privacy, Jailbreaks, Red Teaming, Chatbot Security, and AI Face Recognition Security. Adversa AI helps enable AI transformation by protecting it from cyber threats, privacy issues, and safety incidents. The platform offers comprehensive research, advisory services, and expertise in the field of AI security.

SecureLabs

SecureLabs is an AI-powered platform that offers comprehensive security, privacy, and compliance management solutions for businesses. The platform integrates cutting-edge AI technology to provide continuous monitoring, incident response, risk mitigation, and compliance services. SecureLabs helps organizations stay current and compliant with major regulations such as HIPAA, GDPR, CCPA, and more. By leveraging AI agents, SecureLabs offers autonomous aids that tirelessly safeguard accounts, data, and compliance down to the account level. The platform aims to help businesses combat threats in an era of talent shortages while keeping costs down.

Cyble

Cyble is a leading threat intelligence platform offering products and services recognized by top industry analysts. It provides AI-driven cyber threat intelligence solutions for enterprises, governments, and individuals. Cyble's offerings include attack surface management, brand intelligence, dark web monitoring, vulnerability management, takedown and disruption services, third-party risk management, incident management, and more. The platform leverages cutting-edge AI technology to enhance cybersecurity efforts and stay ahead of cyber adversaries.

Sprinto

Sprinto is a Continuous Security & Compliance Platform that helps organizations manage and maintain compliance with various frameworks such as SOC 2, ISO 27001, NIST, GDPR, HIPAA, and more. It offers features like Vendor Risk Management, Vulnerability Assessment, Access Control Policies, Security Questionnaire, and Change Management. Sprinto automates evidence collection, streamlines workflows, and provides expert support to ensure organizations stay audit-ready and compliant. The platform is AI-powered, scalable, and supports over 40 compliance frameworks, making it a comprehensive solution for security and compliance needs.

DVC

DVC is an open-source platform for managing machine learning data and experiments. It provides a unified interface for working with data from various sources, including local files, cloud storage, and databases. DVC also includes tools for versioning data and experiments, tracking metrics, and automating compute resources. DVC is designed to make it easy for data scientists and machine learning engineers to collaborate on projects and share their work with others.

Aporia

Aporia is an AI control platform that provides real-time guardrails and security for AI applications. It offers features such as hallucination mitigation, prompt injection prevention, data leakage prevention, and more. Aporia helps businesses control and mitigate risks associated with AI, ensuring the safe and responsible use of AI technology.

Privado AI

Privado AI is a privacy engineering tool that bridges the gap between privacy compliance and software development. It automates personal data visibility and privacy governance, helping organizations to identify privacy risks, track data flows, and ensure compliance with regulations such as CPRA, MHMDA, FTC, and GDPR. The tool provides real-time visibility into how personal data is collected, used, shared, and stored by scanning the code of websites, user-facing applications, and backend systems. Privado offers features like Privacy Code Scanning, programmatic privacy governance, automated GDPR RoPA reports, risk identification without assessments, and developer-friendly privacy guidance.

Trust Stamp

Trust Stamp is an AI-powered digital identity solution that focuses on mitigating fraud through biometrics, privacy, and cybersecurity. The platform offers secure authentication and multi-factor authentication using biometric data, along with features like KYC/AML compliance, tokenization, and age estimation. Trust Stamp helps financial institutions, healthcare providers, dating platforms, and other industries prevent identity theft and fraud by providing innovative solutions for account recovery and user security.

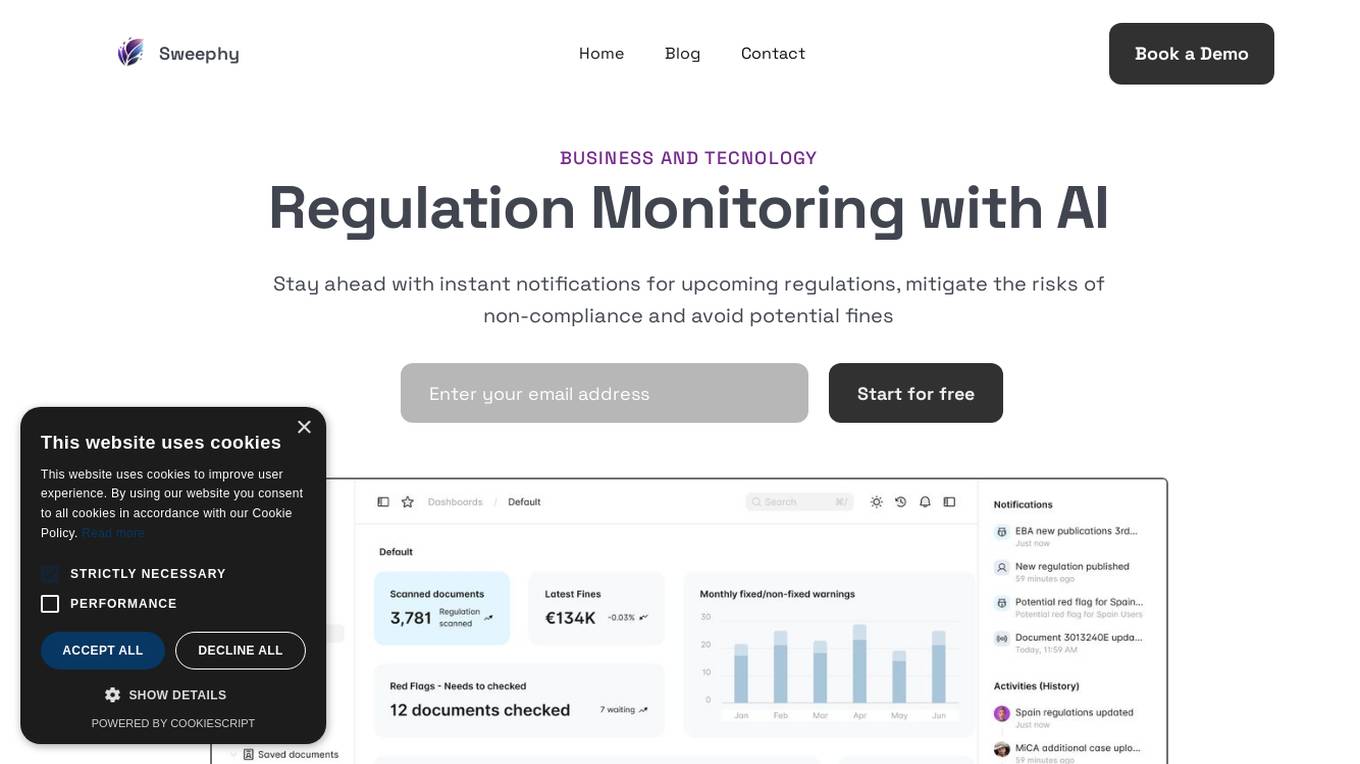

Sweephy

Sweephy is an AI tool for Regulation Monitoring that helps businesses stay ahead with instant notifications for upcoming regulations, mitigate risks of non-compliance, and avoid potential fines. It simplifies compliance management by integrating directly with regulatory data sources and streamlining monitoring and adaptation to changes through one platform. Sweephy provides comprehensive tools for region-specific compliance, automated data collection, custom notifications, and instant red flag alerts. The platform also offers real-time updates and insights from various publications, direct integration with regulatory databases, and an API for bringing regulatory data into internal systems. Clients from 5 different countries trust Sweephy for deciphering complex regulatory updates and ensuring compliance.

Alteryx

Alteryx offers a leading AI Platform for Enterprise Analytics that delivers actionable insights by automating analytics. The platform combines the power of data preparation, analytics, and machine learning to help businesses make better decisions faster. With Alteryx, businesses can connect to a wide variety of data sources, prepare and clean data, perform advanced analytics, and build and deploy machine learning models. The platform is designed to be easy to use, even for non-technical users, and it can be deployed on-premises or in the cloud.

Trade Ideas

Trade Ideas is an AI-driven stock scanning and charting platform designed to meet the needs of active traders. It provides powerful tools such as real-time market scanning, AI-driven trade signals, customizable alerts, advanced charting capabilities, and time-saving data visualization. Trade Ideas offers users the confidence to make smarter trading decisions and the freedom to conquer markets anytime, anywhere. The platform also includes features like a trading simulator for practicing new strategies, Picture in Picture charts for visualizing multiple timeframes, and integration with leading brokers and trading platforms.

Icertis

Icertis is a leading provider of contract lifecycle management (CLM) software. Its platform, Icertis Contract Intelligence, helps organizations manage their contracts more effectively, from creation and negotiation to execution and compliance. Icertis Contract Intelligence is powered by AI, which helps organizations automate tasks, gain insights into their contracts, and make better decisions.

Kira Systems

Kira Systems provides machine learning software for contract search, review, and analysis that helps businesses identify, extract, and analyze content in their contracts and documents. It uses patented machine learning technology to extract concepts and data points with high efficiency and accuracy. Kira also has built-in intelligence that streamlines the contract review process with out-of-the-box smart fields. Businesses can also create their own smart fields to find specific data points using Kira's no-code machine learning tool. Kira's adaptive workflows allow businesses to organize, track, and export results. Kira has a partner ecosystem that allows businesses to transform how teams work with their contracts.

Intelligencia AI

Intelligencia AI is a leading provider of AI-powered solutions for the pharmaceutical industry. Its suite of solutions helps de-risk and enhance clinical development and decision-making. The company uses a combination of data, AI, and machine learning to provide insights into the probability of success for drugs across multiple therapeutic areas. Its solutions are used by many of the top global pharmaceutical companies to improve their R&D productivity and make more informed decisions.

Trade Ideas

Trade Ideas is an AI-driven stock scanning and charting platform that provides unmatched precision in finding the biggest movers first. It offers AI-powered Buy/Sell signals, real-time market scanning, customizable alerts, advanced charting capabilities, and time-saving data visualization. Users can access the platform on any device, empowering them to make smarter trading decisions and stay ahead of the game. Trade Ideas also features a live trading room with expert market commentary and a simulator for practicing new trading strategies under actual market conditions. The platform is trusted by leading brokers and trading platforms, offering users a competitive edge in the market.

AI Clearing

AI Clearing is an AI-powered platform designed for the infrastructure construction industry. It serves as an end-to-end, AI-native construction intelligence platform that integrates various data sources like design & BIM, schedules, budgets, drone imagery, mobile inputs, and ground data into a fully connected system. The platform aims to revolutionize the construction industry by providing accurate progress tracking, reporting, and oversight, ultimately leading to cost reduction and time savings for asset owners and contractors.

Perceive Now

Perceive Now is the world's first Large Language Model fine-tuned with IP and Market Research data. It offers custom IP and Market reports for various industries, providing detailed insights and analysis to support decision-making processes. The platform helps in identifying market trends, conducting due diligence, managing deal flow, and maximizing IP and licensing opportunities. Perceive Now is a game-changer in prior art search, increasing the odds of patent grant success. It has significantly reduced research costs and time, accessing over 100M IP and market data sources and assisting in securing funding worth $500M.

CropGPT

CropGPT is an AI tool designed for soft commodities, offering a comprehensive platform for crop intelligence, market data, weather reports, and predictive analytics. It provides users with valuable insights and predictions to optimize crop production and mitigate risks. With features like crop reports, risk radar, yield predictions, and market forces analysis, CropGPT empowers users in the agricultural sector to make informed decisions and enhance productivity.

1 - Open Source AI Tools

awesome-LLM-AIOps

The 'awesome-LLM-AIOps' repository is a curated list of academic research and industrial materials related to Large Language Models (LLM) and Artificial Intelligence for IT Operations (AIOps). It covers various topics such as incident management, log analysis, root cause analysis, incident mitigation, and incident postmortem analysis. The repository provides a comprehensive collection of papers, projects, and tools related to the application of LLM and AI in IT operations, offering valuable insights and resources for researchers and practitioners in the field.

20 - OpenAI GPTs

Blue Team Guide

A meticulously crafted arsenal of knowledge, insights, and guidelines designed to empower organizations in crafting, enhancing, and refining their cybersecurity defenses.

ethicallyHackingspace (eHs)® (Full Spectrum)™

Full Spectrum Space Cybersecurity Professional™ AI-copilot (BETA)

Project Risk Assessment Advisor

Assesses project risks to mitigate potential organizational impacts.

GaiaAI

The pressing environmental issues we face today require novel approaches and technological advancements to effectively mitigate their impacts. GaiaAI offers a range of tools and modes to promote sustainable practices and enhance environmental stewardship.

Inclusive AI Advisor

Expert in AI fairness, offering tailored advice and document insights.

Liquidity Management Advisor

Optimizes financial liquidity, mitigates operational risk, and enhances financial performance.

Cyber Threat Intelligence

An automated cyber threat intelligence expert configured and trained by Bob Gourley. Please provide feedback. Find Bob on X at @bobgourley.

Prince2 Expert

Guides through Prince2 questions and answers, ensuring accuracy and engagement.

SSLLMs Advisor

Helps you build logic security into your GPTs' custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

Fluffy Risk Analyst

A cute sheep expert in risk analysis, providing downloadable checklists.

Disaster Recovery Advisor

Ensures business continuity by mitigating risks associated with disasters.