Best AI tools for Deploy Tests

20 - AI Tool Sites

Robin

Robin by Mobile.dev is an AI-powered mobile app testing tool. It builds on Maestro, Mobile.dev's simple but powerful open-source framework for testing mobile apps at high speed, and uses AI so that users can write reliable tests without extensive coding knowledge. Robin supports end-to-end testing, rapid test runs across various devices and operating systems, and AI-driven auto-healing of test flows.

PromptPoint Playground

PromptPoint Playground is an AI tool for designing, testing, and deploying prompts quickly. It helps teams produce high-quality LLM outputs through automated testing and evaluation. Users can make the outputs of non-deterministic prompts more predictable, organize prompt configurations, run automated tests, and monitor usage. Because the platform is built for collaboration, both technical and non-technical users can use it for prompt engineering with large language models.

Vapi

Vapi is a voice AI platform designed specifically for developers. It provides the infrastructure to build, test, and deploy voice AI agents, handling speech recognition, language understanding, and speech synthesis so that the agents can hold spoken conversations with users.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides tools for debugging prompts, running tests, and monitoring the performance of AI models. Langtail also hosts a community forum where developers can share tips and get help from other users.

DiscuroAI

DiscuroAI is an all-in-one platform for building, testing, and consuming complex AI workflows. Users define workflows in a user-friendly interface and execute each one with a single API call. The platform integrates with GPT-3, DALL-E 2, and other OpenAI models, so users can chain prompts together and retrieve the output as JSON via the API. DiscuroAI also supports self-transforming workflows and datasets, and lets users monitor AI usage across workflows.

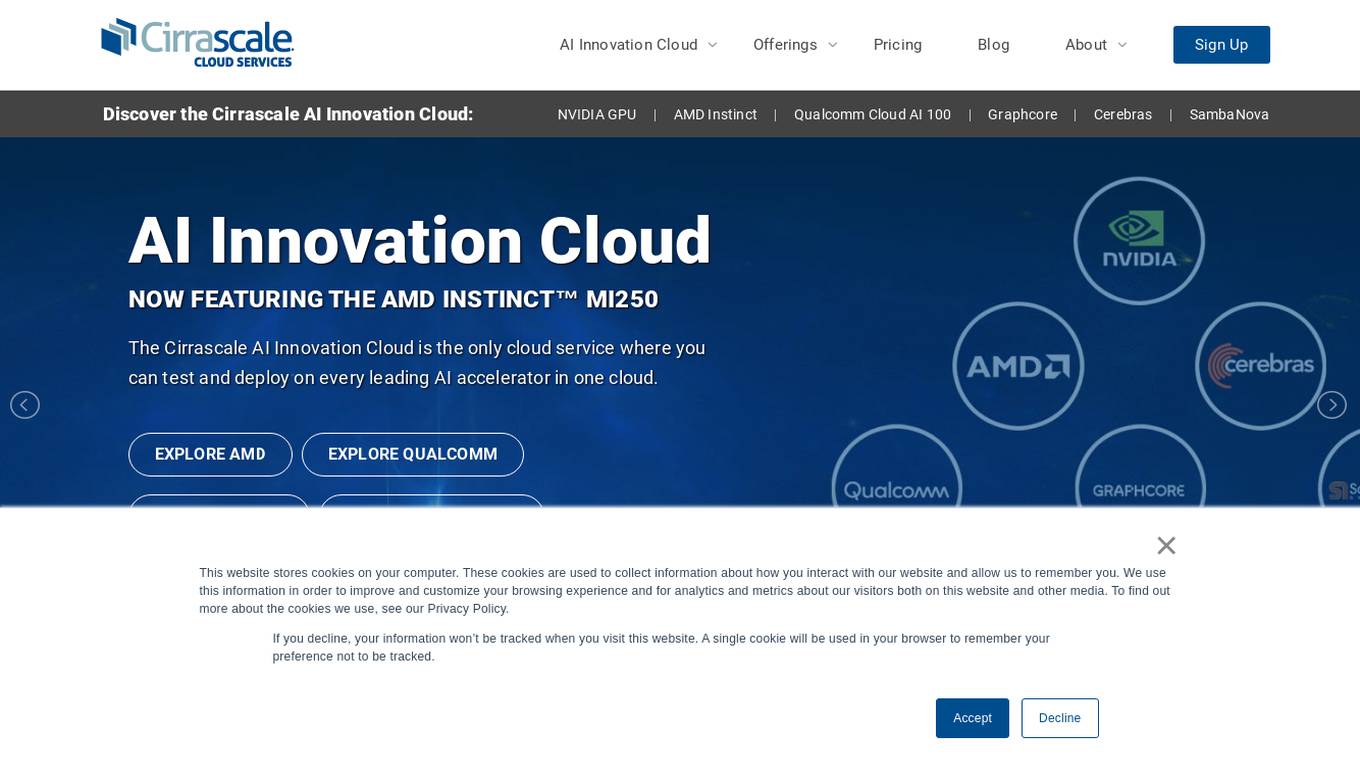

Cirrascale Cloud Services

Cirrascale Cloud Services is a cloud provider specializing in AI workloads. Its offerings include NVIDIA GPU Cloud, AMD Instinct Series Cloud, and Qualcomm Cloud, as well as systems from Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud lets users test and deploy on multiple leading AI accelerators within a single cloud, providing high-performance AI compute and scalable deep learning infrastructure. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking to speed up development, training, and inference workloads.

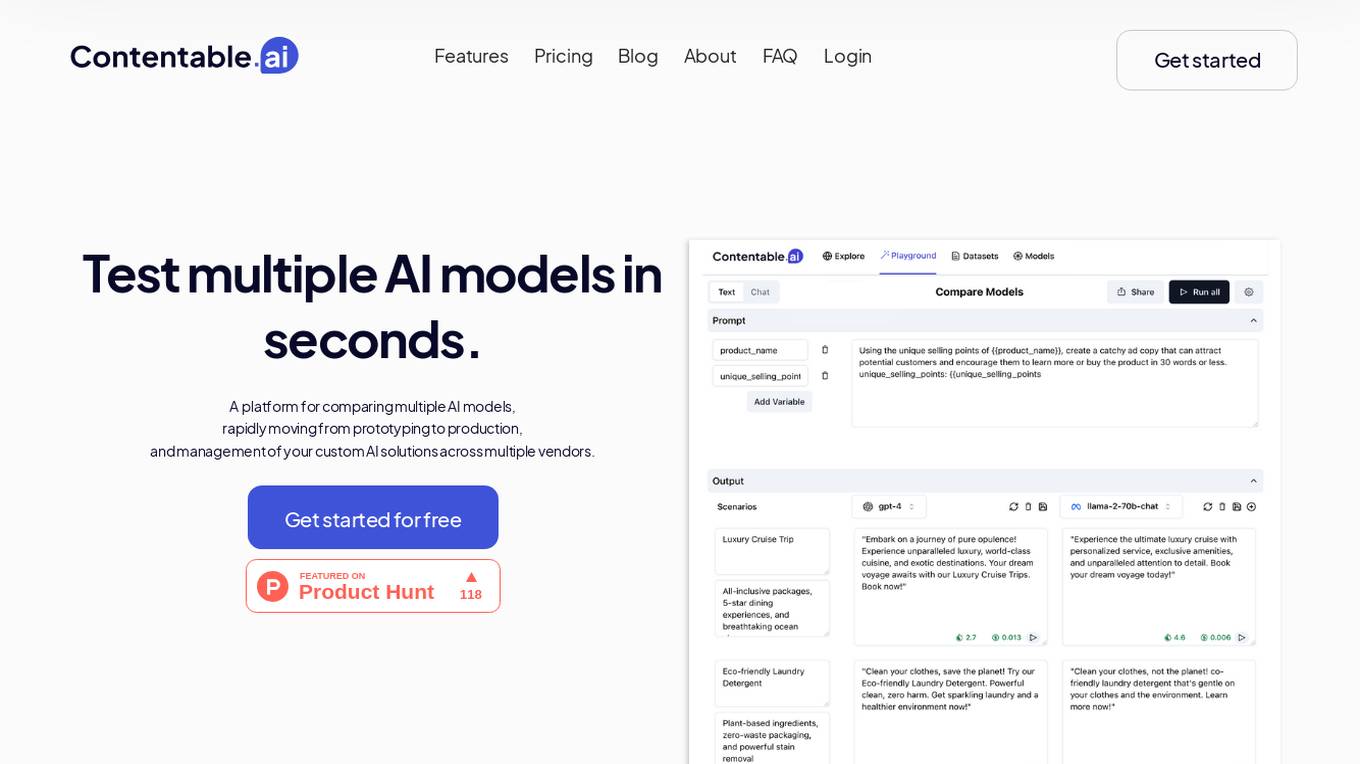

Contentable.ai

Contentable.ai is a platform for comparing AI models, moving quickly from prototype to production, and managing custom AI solutions across multiple vendors. Users can test multiple AI models in seconds, compare them side by side across major AI providers, collaborate with their team, design complex AI workflows without coding, and pay as they go.

Plumb

Plumb is a no-code, node-based builder that lets product, design, and engineering teams create AI features together. Teams can build, test, and deploy AI features in one place and ship prototypes directly to production, so the best prompts from the playground are exactly the versions that go live. Beyond simple automation, Plumb supports complex multi-tenant pipelines, data transformation, and validated JSON schemas for more reliable AI output. It also makes it easy to compare prompt and model performance, helping teams spot degradations, debug them, and ship fixes quickly. Plumb is aimed at SaaS product teams delivering AI-powered experiences at scale.

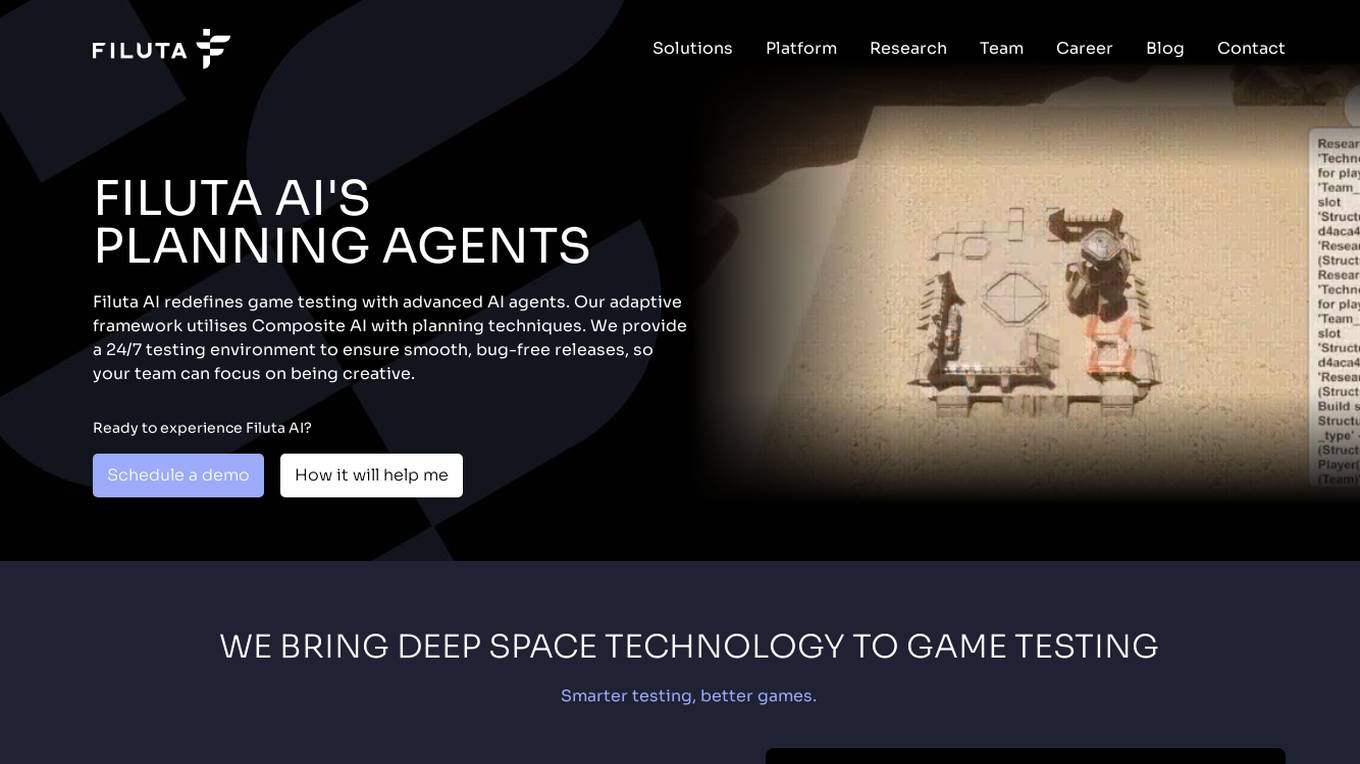

Filuta AI

Filuta AI is an AI application that uses planning agents for game testing. It combines Composite AI with planning techniques to provide a 24/7 testing environment aimed at smooth, bug-free releases. Bringing deep-space technology to game testing, its intelligent agents analyze game states, adapt in real time, and execute action sequences to reach test goals. Filuta AI offers goal-driven testing, adaptive exploration, and detailed insights that help shorten development cycles. It targets game developers, QA leads, game designers, automation engineers, and producers.

LambdaTest

LambdaTest is a cloud platform for mobile app and cross-browser testing. Users can perform manual, live-interactive cross-browser testing, run Selenium, Cypress, and Playwright scripts on cloud infrastructure, and execute AI-powered test automation. The platform also provides accessibility testing, a real-device cloud, a visual regression cloud, and AI-powered test analytics. LambdaTest reports more than 2 million users worldwide and positions itself as a unified digital experience testing cloud that shortens time to market.

Hanabi.rest

Hanabi.rest is an AI-based platform that generates REST APIs from natural-language descriptions and screenshots. Users can deploy the resulting APIs to Cloudflare Workers and roll them out globally. The platform includes a live editor for testing database access and API endpoints, generates code compatible with various runtimes, and offers API sharing via URL, npm package integration, and a CLI dump feature. Hanabi.rest simplifies API design and deployment by combining natural language processing, image recognition, and v0.dev components.

Vercel

Vercel is a cloud platform that enables developers to build, deploy, and scale web applications quickly and securely. It offers developer tools and cloud infrastructure for optimizing performance and user experience. Its AI offerings include AI Cloud, the AI SDK, AI Gateway, and Sandbox for AI workflows, which simplify integrating AI models into web applications.

Leapwork

Leapwork is an AI-powered test automation platform for building, managing, maintaining, and analyzing complex, data-driven tests across many kinds of applications, including AI apps. It aims to make testing accessible to non-specialists through an intuitive visual interface, a composable architecture, and generative AI capabilities. Leapwork supports web, mobile, and desktop applications as well as APIs, and it scales through reusable test flows that adapt to changes in the application under test. It can be deployed in the cloud or on-premises, giving users full control.

Dreamlab

Dreamlab is a platform for quickly building and deploying 2D multiplayer games. It features an in-browser collaborative editor, easy integration of multiplayer functionality, and AI-assisted code generation. Dreamlab targets indie game developers, game jam participants, Discord server activities, and rapid prototyping. Users can start building immediately, with no downloads or installation required. Pricing is simple and transparent: a free plan, plus a Pro plan for serious game developers.

Autoblocks AI

Autoblocks AI is an AI application for building safe AI apps efficiently. It aims to let users ship AI agents in minutes by supporting fast prototyping, faster testing, and confident deployment. According to the company, leading AI teams use it. Its focus is on making AI agent development more predictable despite unpredictable user inputs and non-deterministic models.

MagikKraft

MagikKraft is an AI-powered platform that simplifies controlling programmable devices such as drones, automated appliances, and self-driving vehicles by letting users create personalized sequences and actions for them. Users can craft, simulate, and deploy customized recipes through the tool, which emphasizes privacy, user control, and creative freedom.

Retell AI

Retell AI is a voice agent platform for building, testing, deploying, and monitoring AI voice agents at scale. Features include call transfer, appointment booking, IVR navigation, batch calling, and post-call analysis. Its strengths include verified phone numbers, branded call ID, custom analysis, and published case studies. Drawbacks include the need for an engineer to handle initial setup, ongoing maintenance, and possible limits on concurrent calls. Retell AI suits a range of industries and use cases, offers multilingual support, and states compliance with industry standards.

DORA

DORA (DevOps Research and Assessment) is a research program at Google Cloud that studies the capabilities driving software delivery and operations performance, and helps teams apply those capabilities to improve organizational performance. The program introduced the DORA AI Capabilities Model, which identifies key technical and cultural practices that amplify the positive impact of AI on performance. DORA also offers resources, guides, and tools such as the DORA Quick Check to help organizations improve their software delivery.

WWWAI.site

WWWAI.site is an AI-powered platform for creating and deploying websites from natural-language input. It relies on specialized AI agents for Code Creation, Requirement Analysis, Concept Setting, and Error Validation, with the Claude API providing language processing. The Model Context Protocol (MCP) keeps all components consistent, and users can choose GitHub or Cloudflare for deployment. The platform is currently in beta testing with limited availability.

CircleCI

CircleCI is a CI/CD platform that markets itself as an AI-powered autonomous validation platform. It combines intelligent automation with expert-in-the-loop tooling to make software delivery faster and more reliable with minimal human oversight, so that code is continuously tested and ready to ship. The platform supports various execution environments and integrates with popular tools such as GitHub and AWS. It counts companies like Meta, Google, and Okta among its customers.

0 - Open Source AI Tools

20 - OpenAI GPTs

Tech Mentor

Expert software architect experienced in the design, construction, development, testing, and deployment of web, mobile, and standalone software architectures.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills: your DevOps gym.

Solidity Master

I'll help you master Solidity to become a better smart contract developer.

Solidy

Expert in blockchain and Solidity, assisting with smart contract programming.