Best AI tools for Correlate News

9 - AI Tool Sites

NewsDeck

NewsDeck is an AI-powered news analysis tool that helps users find, filter, and analyze thousands of articles daily. It allows users to discover and organize news coverage efficiently. The platform offers a comprehensive news reading experience by providing correlated coverage across hundreds of publishers. NewsDeck's AI technology streamlines the process by analyzing news stories related to various topics, people, companies, and countries. The tool is designed to be ethical and transparent, ensuring users have insight into the AI decision-making process, news sources, and funding. With a small team dedicated to revolutionizing news consumption, NewsDeck aims to change the way users engage with news content.

Simpleem

Simpleem is an Artificial Emotional Intelligence (AEI) tool that helps users uncover intentions, predict success, and use behavioral signals to improve interactions. By measuring interactions and correlating them with concrete outcomes, Simpleem provides insights into verbal, para-verbal, and non-verbal cues to enhance customer relationships, track customer rapport, and assess team performance. The tool aims to identify win/lose patterns in behavior, guide users on boosting performance, and prevent burnout by promptly identifying red flags. Simpleem uses proprietary AI models to analyze real-world data and translate behavioral insights into business metrics, with a reported accuracy of 94% in success prediction.

Stellar Cyber

Stellar Cyber is an AI-driven unified security operations platform powered by Open XDR. It combines NG-SIEM, NDR, and Open XDR in a single platform, giving teams central control of security operations. The platform uses AI to help organizations detect, correlate, and respond to threats quickly. Stellar Cyber is designed to protect the entire attack surface, improve security operations performance, and reduce costs while simplifying security operations.

Keep

Keep is an open-source AIOps platform designed for managing alerts and events at scale. It offers features such as enrichment, workflows, a single pane of glass, and over 90 integrations. Keep is ideal for those dealing with alerts in complex environments and leverages AI for IT Operations. The platform provides high-quality integrations with monitoring systems, advanced querying capabilities, a workflow engine, and next-gen AIOps for enterprise-level alert management. Keep is maintained by a community of 'Keepers' and seamlessly integrates with existing IT operations tools to optimize alert management and reduce alert fatigue.

Nichely

Nichely is an AI-powered SEO tool that helps users dominate their niche by leveraging cutting-edge AI technology to navigate and correlate millions of topics in various niches. It offers features such as topic discovery, topic research, and keyword research to assist users in building detailed topical maps and comprehensive topic clusters. With Nichely, users can uncover untapped content opportunities, find relevant long-tail keywords, and analyze SERPs. The tool is designed for content/niche website owners, entrepreneurs, bloggers, and individuals looking to enhance their topical authority and construct strong topical clusters and maps.

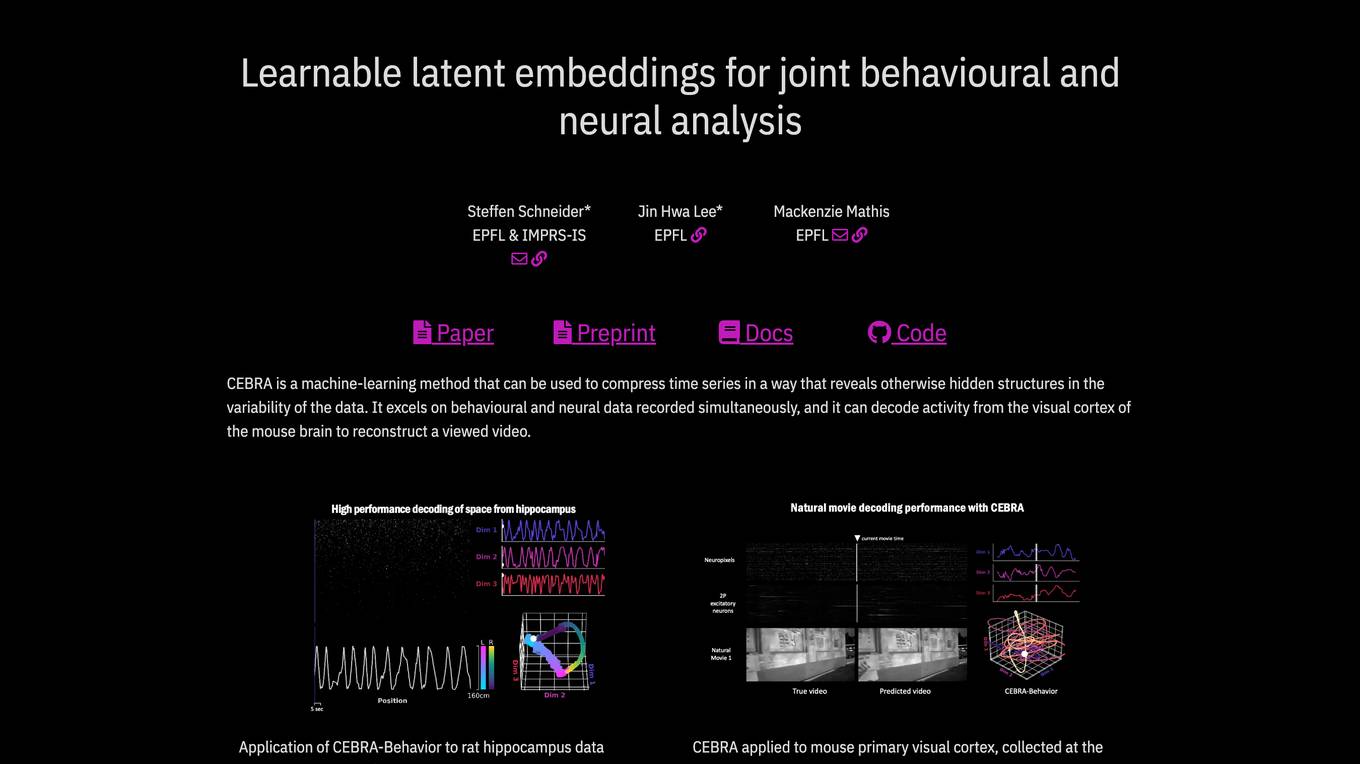

CEBRA

CEBRA is a self-supervised learning algorithm designed for obtaining interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode neural activity, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, providing consistent and high-performance latent spaces for hypothesis testing and label-free applications across various datasets and species.

Schemawriter.ai

Schemawriter.ai is an AI software platform that generates optimized schema and content on autopilot. It uses a large number of external APIs, including several Google APIs, and complex mathematical algorithms to produce entity lists and content correlated with high rankings in Google. The platform connects directly to Wikipedia and Wikidata via APIs to deliver accurate information about content and entities on webpages. Schemawriter.ai simplifies editing schema, generating advanced schema files, and optimizing webpage content for fast, lasting on-page SEO.

Veriti

Veriti is an AI-driven platform that proactively monitors and safely remediates exposures across the entire security stack, without disrupting the business. It helps organizations maximize their security posture while ensuring business uptime. Veriti offers solutions for safe remediation, MITRE ATT&CK®, healthcare, MSSPs, and manufacturing. The platform correlates exposures to misconfigurations, continuously assesses exposures, integrates with various security solutions, and prioritizes remediation based on business impact. Veriti is recognized for its role in exposure assessments and remediation, providing a consolidated security platform for businesses to neutralize threats before they happen.

AskJimmy

AskJimmy is a platform for AI agents focused on finance and trading. It offers exposure to a diverse range of strategies managed by AI agents. The platform allows users to compose autonomous agents and trading strategies with extensive customization. It aims to create a decentralized, multi-strategy, collaborative hedge fund powered by AI agents. AskJimmy is designed to aggregate non-correlated autonomous agent strategies into a diversified subnet.

0 - Open Source AI Tools

2 - OpenAI GPTs

UPSC Pathfinder

UPSC guide with directive word analysis, news correlation, and Constitution insight.

Betalingsherinnering

✅ Are you looking for a way to draft a payment reminder? Draft a correct reminder here.