Best AI tools for Build Serverless Systems

20 - AI tool Sites

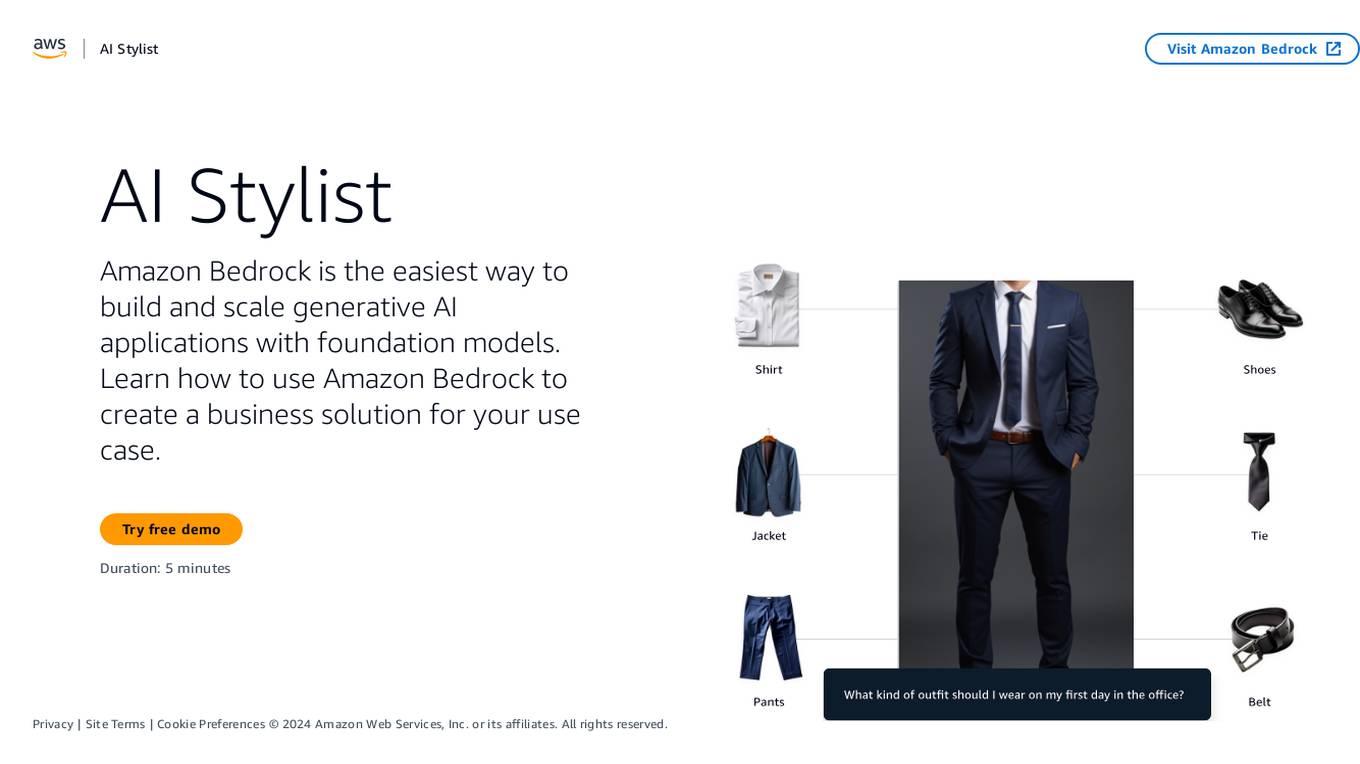

Amazon Bedrock

Amazon Bedrock is a fully managed AWS service for building generative AI applications. It provides access to foundation models from Amazon and third-party providers through a single API, and because it is serverless, developers do not manage the underlying infrastructure and can focus on writing code. Bedrock also supports customizing models with your own data and integrating them into applications alongside other AWS services.

Koxy AI

Koxy AI is an AI-powered serverless back-end platform that allows users to build globally distributed, fast, secure, and scalable back-ends with no code required. It offers features such as live logs, smart error handling, and integration with over 80,000 AI models. Koxy AI is designed to help users focus on building their service without spending time on security and latency concerns. It provides a JSON-based NoSQL database, real-time data synchronization, cloud functions, and a drag-and-drop builder for API flows.

Cerebrium

Cerebrium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly. With a focus on speed, performance, and cost optimization, Cerebrium offers a range of features and tools to simplify the development and deployment of AI projects. The platform emphasizes reliability, security, and compliance, and provides real-time logging, cost tracking, and observability tools. Cerebrium also offers a variety of GPU types and autoscaling to meet the needs of developers and businesses.

Pinecone

Pinecone is a serverless vector database for building generative AI applications. It is positioned as a way to deliver GenAI applications faster, at up to 50x lower cost. Pinecone is easy to use and integrates with major cloud providers, data sources, models, and frameworks.

Azure Static Web Apps

Azure Static Web Apps is a platform provided by Microsoft Azure for building and deploying modern web applications. It allows developers to easily host static web content and serverless APIs with seamless integration to popular frameworks like React, Angular, and Vue. With Azure Static Web Apps, developers can quickly set up continuous integration and deployment workflows, enabling them to focus on building great user experiences without worrying about infrastructure management.

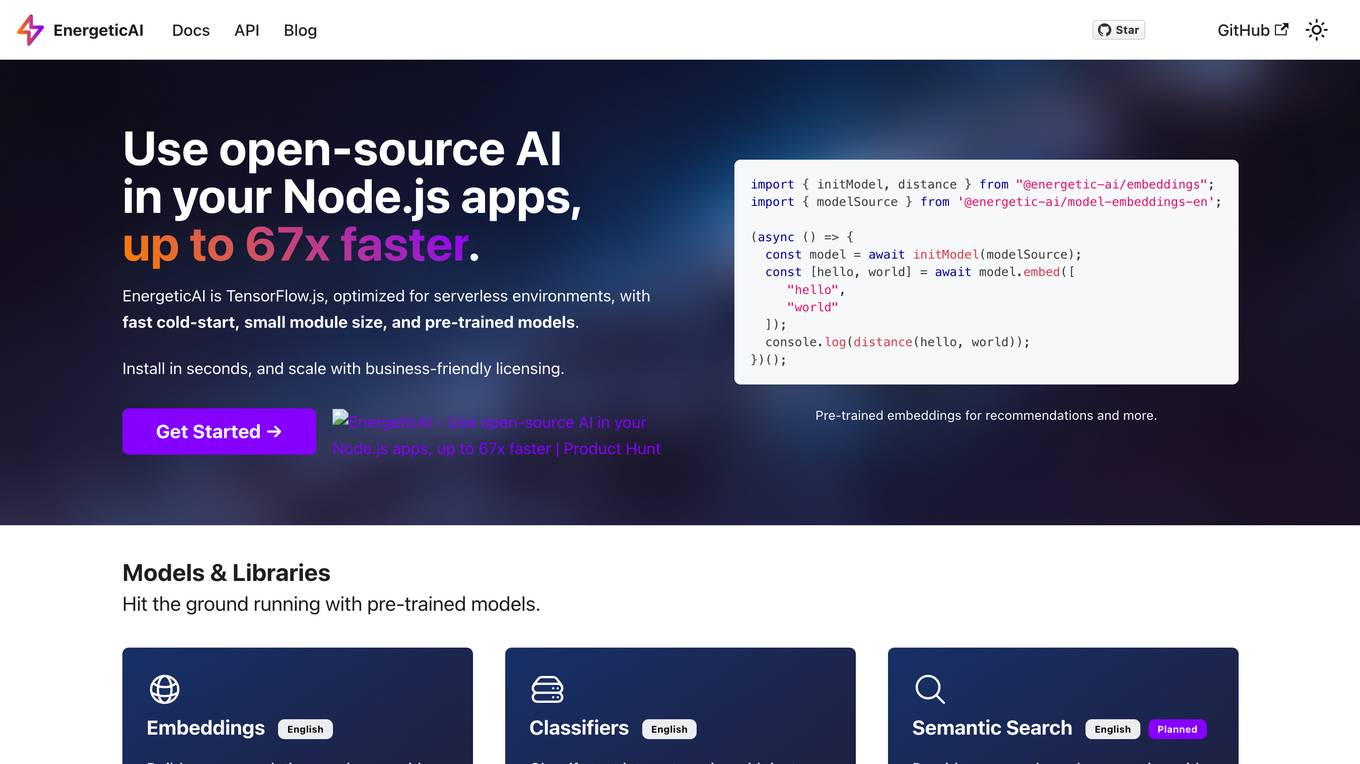

EnergeticAI

EnergeticAI is an open-source AI library that can be used in Node.js applications. It is optimized for serverless environments and provides fast cold-start, small module size, and pre-trained models. EnergeticAI can be used for a variety of tasks, including building recommendations, classifying text, and performing semantic search.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and DeepSeek offline inference, and it helps with training open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports fine-tuning models, sandboxed code execution, and building custom AI models with a distributed training launcher. It is developer-friendly and scalable, and it offers a solution library and expert guidance for AI projects.

Activeloop

Activeloop offers Deep Lake, a database for AI used across industries such as agriculture, audio processing, autonomous vehicles, robotics, biomedical and healthcare, generative AI, multimedia, and safety and security. The platform provides features such as fast AI search, faster data preparation, and a serverless database for code assistants. Activeloop aims to streamline data processing and AI development for businesses and researchers.

Novita AI

Novita AI is an AI cloud platform that offers Model APIs, Serverless, and GPU Instance solutions integrated into one cost-effective platform. It provides tools for building AI products, scaling with serverless architecture, and deploying with GPU instances. Novita AI caters to startups and businesses looking to leverage AI technologies without the need for extensive machine learning expertise. The platform also offers a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable API services.

Novita AI

Novita AI is an AI cloud platform offering Model APIs, Serverless, and GPU Instance services in a cost-effective and integrated manner to accelerate AI businesses. It provides optimized models for high-quality dialogue use cases, full spectrum AI APIs for image, video, audio, and LLM applications, serverless auto-scaling based on demand, and customizable GPU solutions for complex AI tasks. The platform also includes a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable services.

Pinecone

Pinecone is a vector database designed to help power AI applications for various companies. It offers a serverless platform that enables users to build knowledgeable AI applications quickly and cost-effectively. With Pinecone, users can perform low-latency vector searches for tasks such as search, recommendation, detection, and more. The platform is scalable, secure, and cloud-native, making it suitable for a wide range of AI projects.

Together AI

Together AI is a platform that offers a variety of generative AI services, including serverless models, fine-tuning capabilities, dedicated endpoints, and GPU clusters. Users can run or fine-tune leading open-source models with only a few lines of code. The platform provides a range of functionalities for tasks such as chat, vision, text-to-speech, code/language reranking, and more. Together AI aims to simplify the process of using AI models in applications.

Pinecone

Pinecone is a vector database designed to build knowledgeable AI applications. It offers a serverless platform with high capacity and low cost, enabling users to perform low-latency vector search for various AI tasks. Pinecone is easy to start and scale, allowing users to create an account, upload vector embeddings, and retrieve relevant data quickly. The platform combines vector search with metadata filters and keyword boosting for better application performance. Pinecone is secure, reliable, and cloud-native, making it suitable for powering mission-critical AI applications.
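To make the idea of combining vector search with metadata filters concrete, here is a minimal, self-contained sketch in plain Python. It does not use Pinecone's client or API; the `search` function, the record layout, and the `where` parameter are hypothetical names chosen for illustration, and a real vector database would use an approximate index rather than this brute-force scan.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(index, query, top_k=2, where=None):
    """Return ids of the top_k records most similar to `query`.

    `where` is a hypothetical exact-match metadata filter that is
    applied before ranking, so filtered-out records are never scored.
    """
    where = where or {}
    candidates = [
        rec for rec in index
        if all(rec["metadata"].get(k) == v for k, v in where.items())
    ]
    ranked = sorted(
        candidates,
        key=lambda rec: cosine(rec["vector"], query),
        reverse=True,
    )
    return [rec["id"] for rec in ranked[:top_k]]


# Toy "uploaded embeddings": two-dimensional vectors with metadata.
index = [
    {"id": "doc-a", "vector": [1.0, 0.0], "metadata": {"lang": "en"}},
    {"id": "doc-b", "vector": [0.9, 0.1], "metadata": {"lang": "de"}},
    {"id": "doc-c", "vector": [0.0, 1.0], "metadata": {"lang": "en"}},
]

results = search(index, [1.0, 0.0], top_k=2, where={"lang": "en"})
print(results)
```

Filtering before ranking keeps the example simple; production systems typically integrate the filter into the index lookup itself so that large collections do not need a full scan.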

Apify

Apify is a full-stack web scraping and data extraction platform that offers a wide range of tools and services for developers to build, deploy, and publish web scrapers, AI agents, and automation tools. The platform provides pre-built web scraping tools, serverless programs, integrations with various apps and services, and AI agents equipped with Actors. Apify also offers solutions for building and monetizing MCP servers, as well as professional services for custom web scraping solutions. With a marketplace of over 6,000 Actors, Apify caters to a diverse range of use cases and industries, including generative AI, lead generation, market research, and sentiment analysis.

SvectorDB

SvectorDB is a vector database built from the ground up for serverless applications. It is designed to be highly scalable, performant, and easy to use. SvectorDB can be used for a variety of applications, including recommendation engines, document search, and image search.

Xata

Xata is a serverless data platform for PostgreSQL that provides a range of features to make application development faster and easier. These features include schema migrations, file attachments, full-text search, branching, and generative AI. Xata is designed to be the ideal database for application development, with a focus on code simplicity and extensibility. It is also built on open source, so developers can collaborate with the community to drive innovative ideas.

Build Club

Build Club is a leading training campus for AI learners, experts, and builders. It offers a platform where individuals can upskill into AI careers, get certified by top AI companies, learn the latest AI tools, and earn money by solving real problems. The community at Build Club consists of AI learners, engineers, consultants, and founders who collaborate on cutting-edge AI projects. The platform provides challenges, support, and resources to help individuals build AI projects and advance their skills in the field.

Unified DevOps platform to build AI applications

This is a unified DevOps platform to build AI applications. It provides a comprehensive set of tools and services to help developers build, deploy, and manage AI applications. The platform includes a variety of features such as a code editor, a debugger, a profiler, and a deployment manager. It also provides access to a variety of AI services, such as natural language processing, machine learning, and computer vision.

Build Chatbot

Build Chatbot is a no-code chatbot builder designed to simplify the process of creating chatbots. It enables users to build their chatbot without any coding knowledge, auto-train it with personalized content, and get the chatbot ready with an engaging UI. The platform offers various features to enhance user engagement, provide personalized responses, and streamline communication with website visitors. Build Chatbot aims to save time for both businesses and customers by making information easily accessible and transforming visitors into satisfied customers.

Build Club

Build Club is an AI tool designed to help individuals learn and explore various aspects of artificial intelligence. The platform offers a wide range of courses, challenges, hackathons, and community projects to enhance users' AI skills. Users can build AI models for tasks like image and video generation, AI marketing, and creating AI agents. Build Club aims to create a collaborative learning environment for AI enthusiasts to grow their knowledge and skills in the field of artificial intelligence.

0 - Open Source AI Tools

20 - OpenAI Gpts

Serverless Architect Pro

Helping software engineers to architect domain-driven serverless systems on AWS

Build a Brand

Unique custom images based on your input. Just type ideas and the brand image is created.

Beam Eye Tracker Extension Copilot

Build extensions using the Eyeware Beam eye tracking SDK

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

League Champion Builder GPT

Build your own League of Legends-style champion with abilities, backstory, and splash art

RenovaTecno

Your tech buddy helping you refurbish or build a PC from scratch, tailored to your needs, budget, and language.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.