Best AI tools for Matrix Architect

11 - AI Tool Sites

Matrix AI Consulting Services

Matrix AI Consulting Services is an AI consultancy based in New Zealand that offers bespoke consulting to help businesses and government entities adopt AI responsibly. Drawing on more than 24 years of experience with transformative technology, it provides services ranging from AI business strategy development to integration, change management, training workshops, and governance frameworks. The firm aims to help organizations unlock the potential of AI, raise productivity, streamline operations, and gain a competitive edge through the strategic implementation of AI technologies.

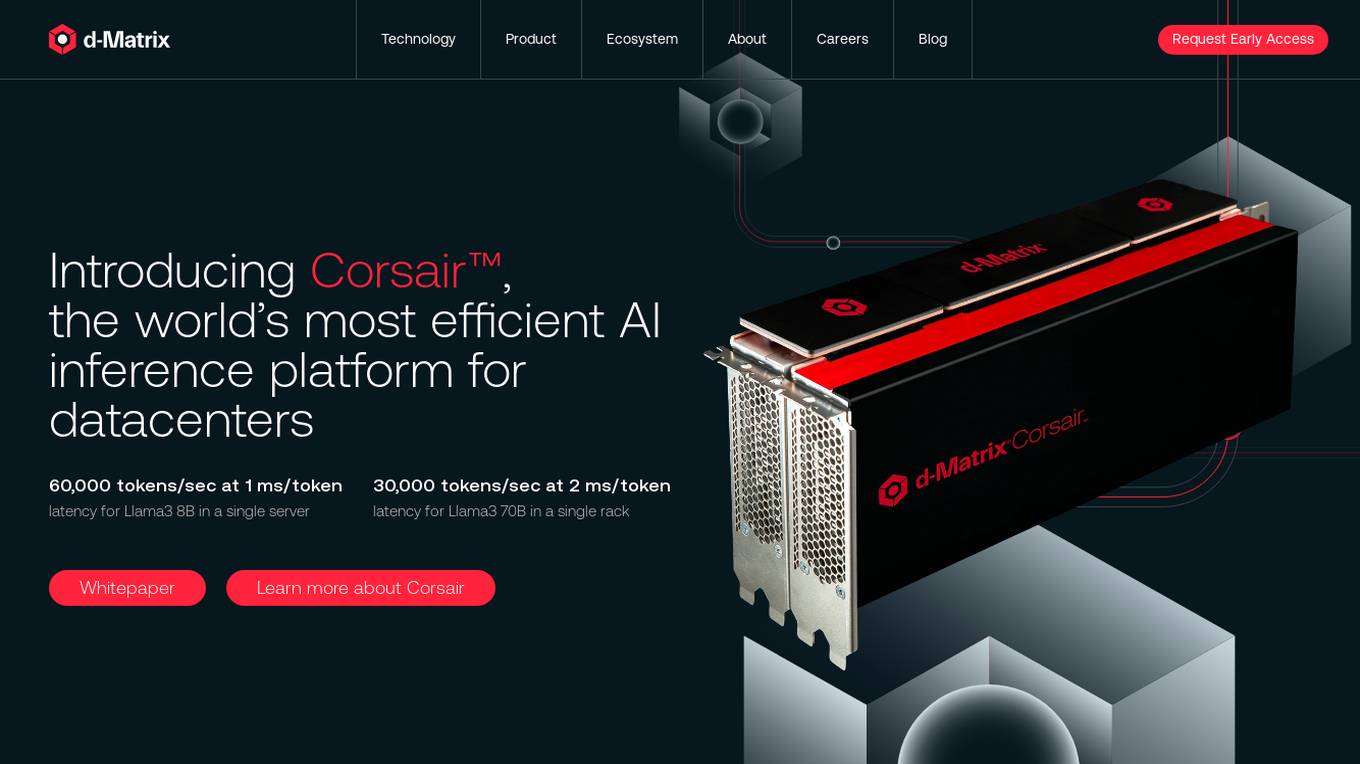

d-Matrix

d-Matrix offers ultra-low-latency batched inference for generative AI. Its Corsair™ platform, which the company describes as the world's most efficient AI inference platform for datacenters, is designed for high performance, efficiency, and scalability on large-scale inference workloads. d-Matrix aims to transform the economics of AI inference by delivering fast, sustainable, and scalable inference without compromising speed or usability.

AI Marketing Agency | Matrix Marketing Group

Matrix Marketing Group is a global AI marketing agency that embeds AI into the core of marketing strategy to turn complex omnichannel environments into streamlined operations. It offers a wide range of AI-powered services and tools intended to help businesses achieve predictable, accelerated growth. With a strong emphasis on AI-native intelligence, the agency aims to deliver cost-effective, rapid results.

Hebbia

Hebbia is an AI tool that lets users work confidently with AI agents across all of the documents that matter to them. Its Matrix agents can answer questions over millions of documents at a time and execute workflows with hundreds of steps. Hebbia promotes a "Trustworthy AI" approach, showing its work at each step so users can verify the results. According to the company, it is used by leading enterprises, financial institutions, governments, and law firms worldwide to save time and work more efficiently.

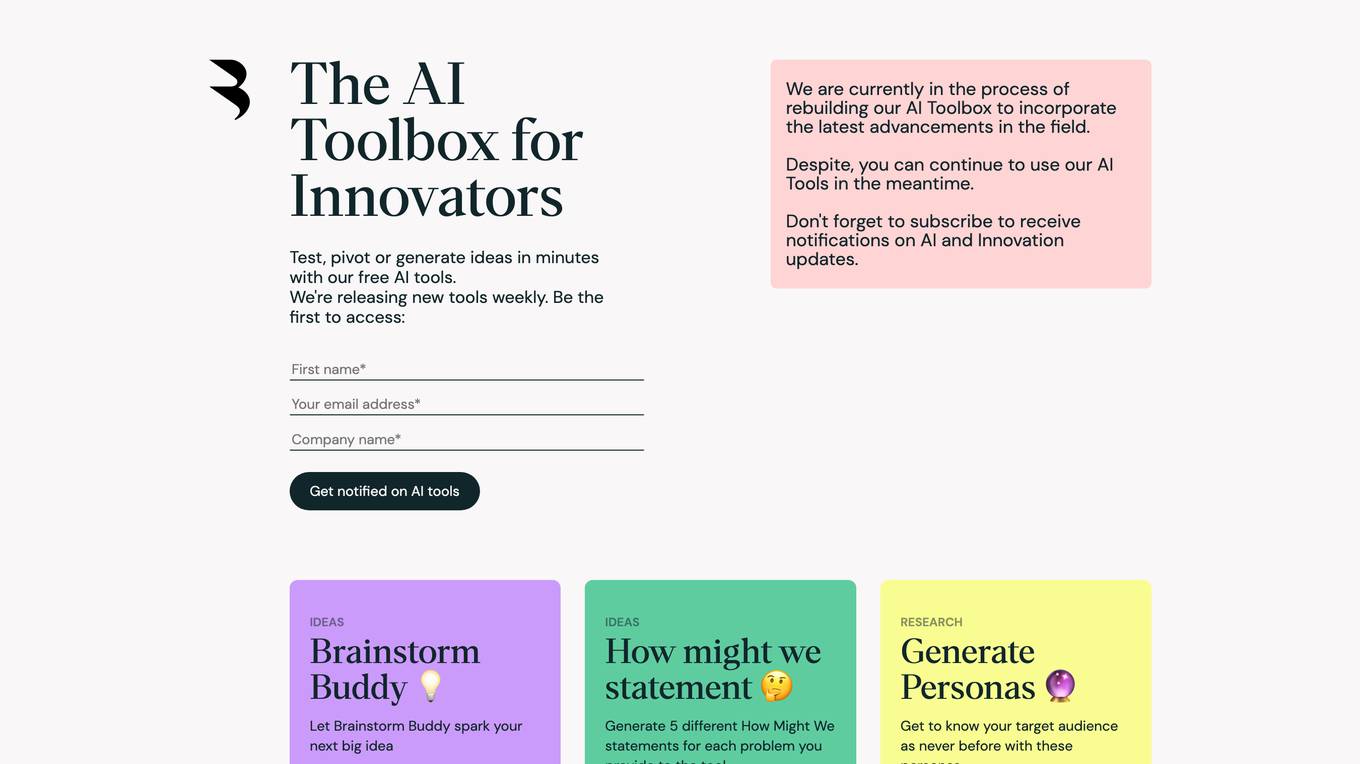

AI Innovation Platform

The AI Innovation Platform is a suite of AI-powered tools that helps organizations navigate their digital transformation. Its tools include AI Adoption Assessment, User Personas, Future Scenarios, a "How Might We" statement generator, Business Reinvention insights, AI Reinvention Blueprint, AI Strategy Matrix, and AI Transformation Simulator. Together they let organizations evaluate AI readiness, generate detailed user personas, explore future scenarios, reframe challenges as opportunities, reinvent business models with AI, assess their AI positioning, and simulate AI transformation strategies to support informed decision-making.

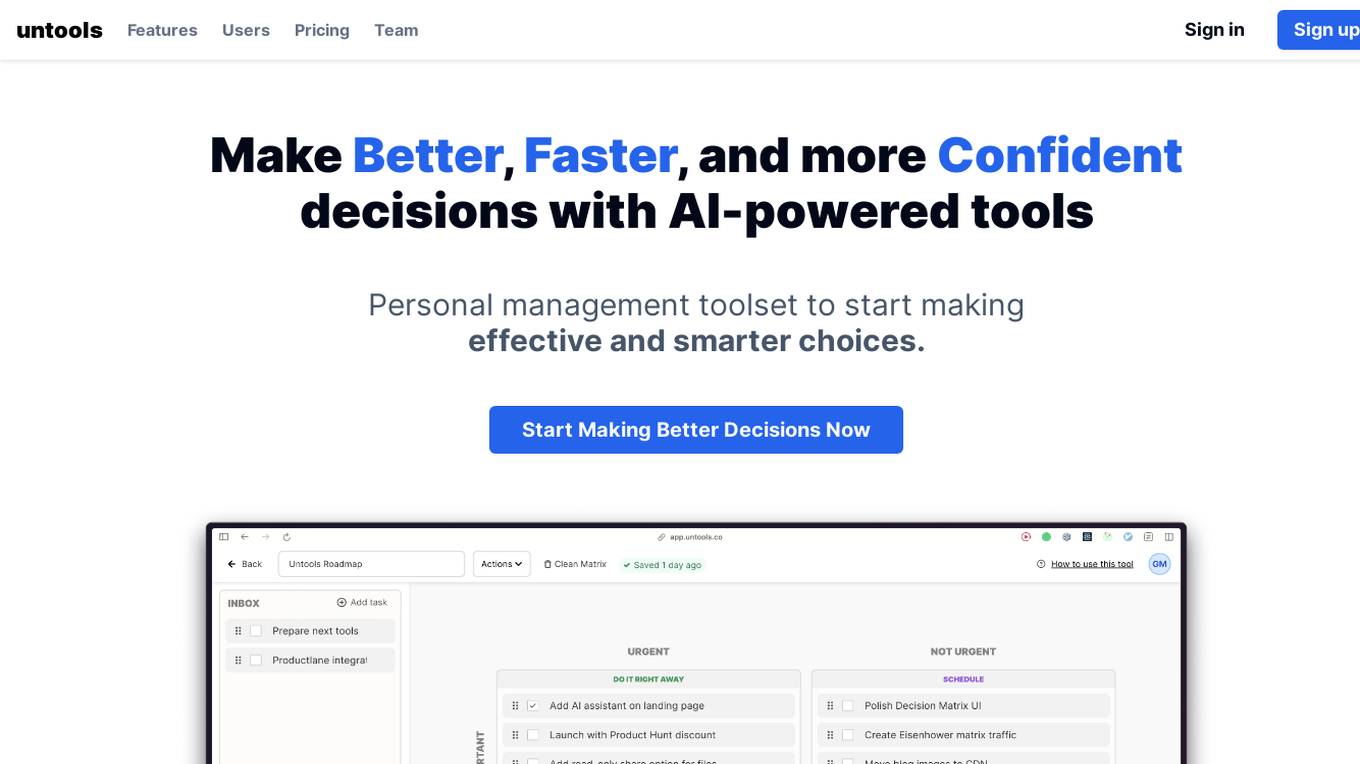

Untools

Untools is an AI-powered personal management toolset designed to help users make better, faster, and more confident decisions. It pairs the Eisenhower Matrix, which ranks tasks by urgency and importance, with an AI Assistant for data-backed decision-making. Users can track past decisions, draw insights from them, and refine how they decide. Untools is aimed at users such as entrepreneurs, researchers, and neurodivergent individuals, helping them reduce impulsive choices, avoid distractions, and improve focus. The app offers affordable pricing options and is built by a team experienced in product design and software engineering.

Wintract

Wintract is an AI-powered government contracting platform that simplifies selling to the public sector through smart discovery, a compliance matrix, AI analysis, market intelligence, and smart workflows. It helps businesses find and analyze contract opportunities, make confident bid decisions, and save time and costs. The platform personalizes the experience by creating a virtual capture team that evaluates company strengths, matches opportunities, and continuously learns from user feedback.

Connex AI

Connex AI is an AI platform offering a wide range of solutions for businesses across many industries. Its features include AI Agent, AI Guru, AI Voice, AI Analytics, Real-Time Coaching, Automated Speech Recognition, Sentiment Analysis, Keyphrase Analysis, Entity Recognition, LLM Topic-Based Modelling, SMS Live Chat, WhatsApp Voice, Email Dialler, PCI DSS, Social Media Flow, Calendar Scheduler, Staff Management, Gamify Shop, PDF Builder, Pricing Matrix, Themes, Article Builder, Marketplace Integrations, and more. Connex AI aims to improve customer engagement, workforce productivity, sales, and customer satisfaction.

Bidlytics

Bidlytics is a privacy-focused capture and proposal solution for government contracting (GovCon). It automates identifying opportunities, analyzing solicitations, building compliance matrices, and generating proposal and compliance documents. Its features include bid discovery, automatic solicitation shredding, automated compliance matrices, fast and accurate proposal generation, and AI-driven tools that continuously learn and optimize, all backed by enhanced privacy and security.

Claude Artifacts Store

Claude Artifacts Store is an AI-powered platform offering a wide range of tools and games. It provides interactive simulations, gaming experiences, and options for customizing cartoon characters. The platform also includes strategic planning tools such as BCG Matrix visualizations, along with job search artifacts. With website animations and a word cloud generator, Claude Artifacts Store aims to boost user engagement and offer a distinctive online experience.

SigmaQu

SigmaQu is an AI-powered planning assistant for startups that helps users turn ideas into clear business plans with tailored strategies, insights, and next steps. The platform offers 34 quick tools and AI-powered plans, including SWOT analysis, PESTLE analysis, and the Ansoff Matrix, to help users build smarter and launch faster. SigmaQu aims to give founders, startups, and SMEs structured, time-saving, investor-ready outputs so they can plan smarter and grow faster.

0 - Open Source Tools

15 - OpenAI GPTs

The Architect

I am The Architect, blending the philosophies of The Matrix and Philip K. Dick with a unique sense of humor.

Eisenhower Matrix Guide

A task prioritization assistant based on the Eisenhower Matrix. This GPT helps users prioritize tasks by sorting them into the matrix's four quadrants.

Prioritization Matrix Pro

Provides a structured process for prioritizing marketing tasks by strategic alignment, with output in Eisenhower, RACI, and other formats.

The Justin Welsh Content Matrix GPT

A GPT that will generate a full content matrix for your brand or business.

Competitor Value Matrix

Analyzes websites, compares value elements, and organizes data into a table.

MPM-AI

The Multiversal Prediction Matrix (MPM) leverages the speculative nature of multiverse theories to create a predictive framework. By simulating parallel universes with varied parameters, MPM explores a multitude of potential outcomes for different events and phenomena.

Brilliantly Lazy - Project Optimizer

Helps you master efficient laziness in projects big or small. Ask this GPT for a follow-up matrix to optimize your next steps.

Manifestation Mentor GPT

Guides entrepreneurs through 'The Power of Manifestation' with AI-enhanced insights. Scan any page of the book to dive deep into the Manifestation Matrix.

Seabiscuit KPI Hero

Own Your Leading & Lagging Indicators: specializes in developing tailored business metrics and frameworks, such as OKRs, Balanced Scorecards, and a Business Process RACI Matrix, to optimize performance and strategy execution. (v1.4)

Name Generator and Use Checker Toolkit

Need a new name for a character, brand, story, or something else? Try the matrix! Each naming module offers a different strategy for generating new names.

Automatools: Generador de ideas de contenido (Content Idea Generator)

A post idea generator based on Justin Welsh's content matrix (LinkedIn Top Voice). It is one of the Automatools tools, available to you free of charge. The goal of Automatools is to put your LinkedIn account on autopilot.