Best AI tools for AI Safety Analyst

20 - AI tool Sites

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. It conducts research and advocacy projects and provides resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and offers a compute cluster for AI/ML safety projects. It aims to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

Modulate

Modulate is a voice intelligence tool that provides proactive voice chat moderation solutions for various platforms, including gaming, delivery services, and social platforms. It uses advanced AI technology to detect and prevent harmful behaviors, ensuring a safer and more positive user experience. Modulate helps organizations comply with regulations, enhance user safety, and improve community interactions through its customizable and intelligent moderation tools.

AI Safety Initiative

The AI Safety Initiative is a premier coalition of trusted experts that aims to develop and deliver essential AI guidance and tools for organizations to deploy safe, responsible, and compliant AI solutions. Through vendor-neutral research, training programs, and global industry experts, the initiative provides authoritative AI best practices and tools. It offers certifications, training, and resources to help organizations navigate the complexities of AI governance, compliance, and security. The initiative focuses on AI technology, risk, governance, compliance, controls, and organizational responsibilities.

Capably

Capably is an AI Management Platform that helps companies roll out AI employees across their organizations. It provides tools to easily adopt AI, create and onboard AI employees, and monitor AI activity. Capably is designed for business users with no AI expertise and integrates seamlessly with existing workflows and software tools.

blog.biocomm.ai

blog.biocomm.ai is an AI safety blog that focuses on the existential threat posed by uncontrolled and uncontained AI technology. It curates and organizes information related to AI safety, including the risks and challenges associated with the proliferation of AI. The blog aims to educate and raise awareness about the importance of developing safe and regulated AI systems to ensure the survival of humanity.

Robust Intelligence

Robust Intelligence is an end-to-end security solution for AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

AI Now Institute

AI Now Institute is a think tank focused on the social implications of AI and the consolidation of power in the tech industry. They challenge and reimagine the current trajectory for AI through research, publications, and advocacy. The institute provides insights into the political economy driving the AI market and the risks associated with AI development and policy.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy to ensure everyone can benefit. Its focus areas include skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds tools for AI model builders and developers; fosters an AI hardware accelerator ecosystem; enables open foundation models and datasets; and advocates for regulatory policies that support a healthy AI ecosystem.

Checkstep

Checkstep is an AI-powered content moderation platform that helps businesses detect and remove harmful content from their platforms. It offers a range of features, including image, text, audio, and video moderation, as well as compliance reporting and moderation tools. Checkstep's platform is designed to be easy to use and integrate, and it can be customized to meet the specific needs of each business.

Faculty AI

Faculty AI is an applied AI consultancy and technology provider that helps customers transform their businesses through bespoke AI consultancy and Frontier, which it describes as the world's first AI operating system. It offers AI consultancy, generative AI solutions, and AI services tailored to various industries. Faculty AI is known for its expertise in AI governance and safety, as well as its partnerships with AI platforms such as OpenAI, AWS, and Microsoft.

Anthropic

Anthropic is an AI research and product company focused on the development of AI technologies that prioritize safety and long-term well-being. The company is dedicated to harnessing the benefits of AI while mitigating potential risks. Anthropic offers a range of AI models and solutions for various industries, emphasizing responsible AI development and deployment.

WinBuzzer

WinBuzzer is an AI-focused website providing comprehensive information on AI companies, divisions, projects, and labs. The site covers a wide range of topics related to artificial intelligence, including chatbots, AI assistants, AI solutions, AI technologies, AI models, AI agents, and more. WinBuzzer also delves into areas such as AI ethics, AI safety, AI chips, artificial general intelligence (AGI), synthetic data, AI benchmarks, AI regulation, and AI research. Additionally, the site offers insights into big tech companies, hardware, software, cybersecurity, and more.

MIRI (Machine Intelligence Research Institute)

MIRI (Machine Intelligence Research Institute) is a non-profit research organization dedicated to ensuring that artificial intelligence has a positive impact on humanity. MIRI conducts foundational mathematical research on topics such as decision theory, game theory, and reinforcement learning, with the goal of developing new insights into how to build safe and beneficial AI systems.

Storytell.ai

Storytell.ai is an enterprise-grade AI platform focused on boosting productivity for employees and teams working with company data. It provides a secure environment with features such as project spaces, multi-LLM chat, task automation, chat with company data, and an enterprise AI security suite. Storytell.ai protects data through end-to-end encryption, encryption at rest, provenance chain tracking, and an AI firewall. It commits to not training LLMs on user data and provides audit logs for accountability. The platform continuously monitors and updates its security protocols to stay ahead of potential threats.

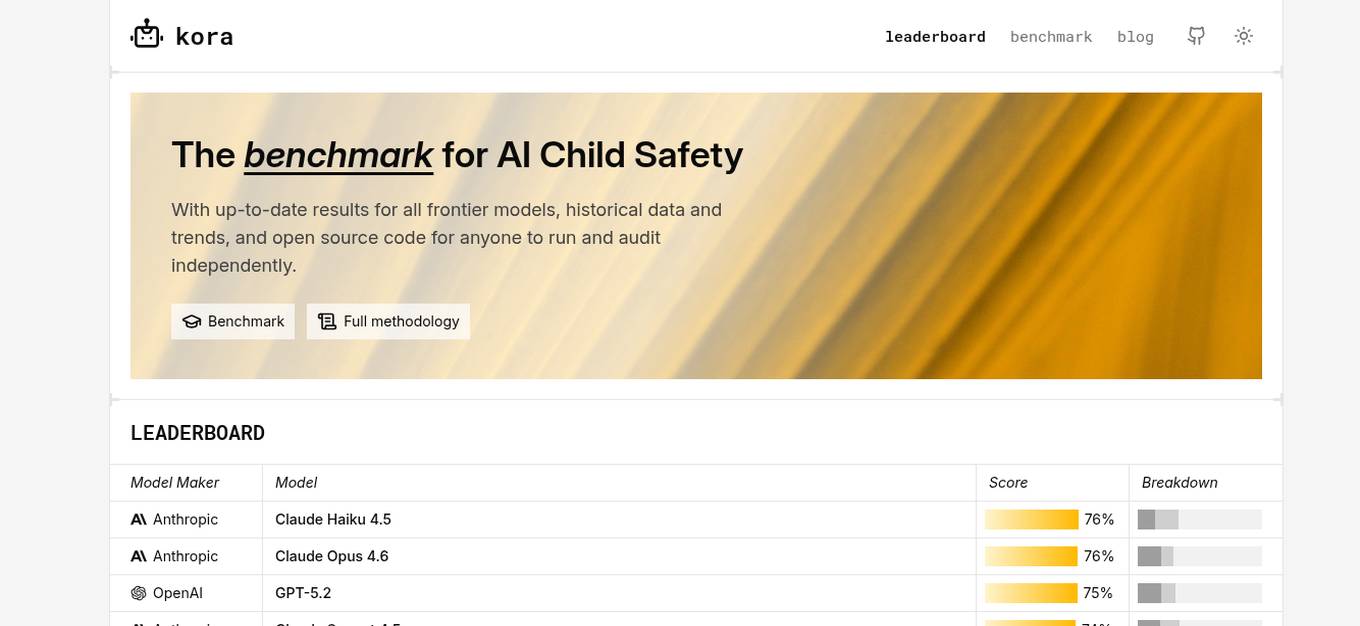

KORA Benchmark

KORA Benchmark is a leading platform that provides a benchmark for AI child safety. It offers up-to-date results for frontier models, historical data, and trends. The platform also provides open-source code for users to run and audit independently. KORA Benchmark aims to ensure the safety of children in the AI landscape by evaluating various models and providing valuable insights to the community.

Plus

Plus is an AI-based autonomous driving software company that focuses on developing solutions for driver assist and autonomous driving technologies. The company offers a suite of autonomous driving solutions designed for integration with various hardware platforms and vehicle types, ranging from perception software to highly automated driving systems. Plus aims to transform the transportation industry by providing high-performance, safe, and affordable autonomous driving vehicles at scale.

Telescope

Telescope is an AI-powered platform for finance that offers a range of solutions for trading, investing, portfolio insights, signal detection, content conversion, compliance, and more. It combines frontier language models with safety and compliance features to provide trustworthy AI intelligence for financial institutions. The platform enables users to personalize solutions, enhance engagement, scale portfolio strategies, and embed AI recommendations in various financial activities.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

DisplayGateGuard

DisplayGateGuard is an AI-powered brand safety and suitability provider that helps advertisers choose the right placements, isolate fraudulent websites, and enhance brand safety. By leveraging artificial intelligence, the platform offers curated inclusion and exclusion lists to provide deeper insights into the environments and contexts where ads are shown, ensuring campaigns reach the right audience effectively.

0 - Open Source Tools

20 - OpenAI GPTs

Prompt Injection Detector

A GPT that classifies prompts as valid inputs or injection attempts and returns its result as JSON.

Hazard Analyst

Generates risk maps, emergency response plans and safety protocols for disaster management professionals.

TrafficFlow

A specialized AI for optimizing traffic control, predicting bottlenecks, and improving road safety.

PharmaFinder AI

Identifies medications and active ingredients from photos for user safety.

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and seek advice from an experienced AI Safety Officer.

AI Ethica Readify

Summarises AI ethics papers, provides context, and offers further assistance.

Alignment Navigator

AI Alignment guided by interdisciplinary wisdom and a future-focused vision.

Buildwell AI - UK Construction Regs Assistant

Provides construction support relating to Planning Permission, Building Regulations, the Party Wall Act, and Fire Safety in the UK. Get instant guidance for your construction project.

DateMate

Your friendly AI assistant for voice-based dating, offering personalized tips, safety advice, and fun interactions.

Chemistry Expert

Advanced AI for chemistry, offering innovative solutions, process optimizations, and safety assessments, powered by OpenAI.