proprietary-trading-network

Proprietary Trading Network built on Bittensor

Stars: 57

Proprietary Trading Network (PTN) is a competitive network that receives signals from quantitative and deep learning trading systems to deliver comprehensive trading signals across various asset classes. It incentivizes correctness through blockchain technology and rewards top traders based on innovative performance metrics. The network operates under rules that ensure fair competition and risk control, so that only the best traders and trading systems can compete.

README:

What is Bittensor?

Bittensor is a mining network, similar to Bitcoin, with built-in incentives that encourage computers to provide access to machine learning models in an efficient and censorship-resistant manner. Bittensor consists of Subnets, Miners, and Validators.

Explain Like I'm Five

Bittensor is an API that connects machine learning models and incentivizes correctness through the power of the blockchain.

Subnets are decentralized networks of machines that collaborate to train and serve machine learning models.

Miners run machine learning models. They send signals to the Validators.

Validators receive trade signals from Miners. Validators ensure trades are valid, store them, and track portfolio returns.

This repository contains the code for the Proprietary Trading Network (PTN) developed by Taoshi.

PTN receives signals from quantitative and deep learning trading systems to deliver the world's most complete trading signals across a variety of asset classes.

🛠️ Open Source Strategy Building Techniques (In Our Taoshi Community)

🫰 Signals From a Variety of Asset Classes - Forex and Crypto

📈 Millions of $ Payouts to Top Traders

💪 Innovative Trader Performance Metrics that Identify the Best Traders

🔎 Trading + Metrics Visualization Dashboard

PTN is the most challenging & competitive network in the world. To compete, our miners need to provide futures-based signals (long/short) that are highly efficient and effective across various markets (forex, crypto). The top miners are those that provide the highest returns while never exceeding the drawdown limits.

- Miners can submit LONG, SHORT, or FLAT signals for Forex and Crypto trade pairs to the network during market hours. Currently supported trade pairs

- Miners are eliminated if they are detected as plagiarising other miners, or if they exceed 10% max drawdown (more info in the "Eliminations" section).

- There is a "carry fee" for leaving positions open. For a 1x leverage position, the fee is 10.95% per year for crypto and 3% per year for forex. More info

- There is a spread (transaction) fee applied to crypto pairs only, calculated as 0.1% multiplied by the leverage of each order. This simulates a transaction cost that a normal exchange would add.

- Slippage is assessed on each order. The slippage cost is greater for orders with higher leverage and for assets with lower liquidity.

- Based on portfolio metrics such as omega score and total portfolio return, weights/incentives are set to reward the best miners. More info
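The carry and spread fee rules above reduce to simple arithmetic. The following is a minimal sketch, not PTN's actual implementation: it takes the carry rate as 10.95% per year for crypto and 3% per year for forex, and it assumes that the carry fee scales linearly with leverage and accrues evenly over a 365-day year. The function names `carry_fee` and `spread_fee` are hypothetical, and slippage is not modeled because the rules do not give a formula for it.

```python
# Annual carry fee for a 1x leverage position, per asset class.
CARRY_FEE_ANNUAL = {"crypto": 0.1095, "forex": 0.03}

# Spread (transaction) fee: 0.1% multiplied by order leverage, crypto only.
SPREAD_FEE_RATE = 0.001


def carry_fee(asset_class: str, leverage: float, days_open: float) -> float:
    """Carry fee as a fraction of position value.

    Assumption: the fee scales linearly with leverage and with time held.
    """
    annual_rate = CARRY_FEE_ANNUAL[asset_class]
    return annual_rate * abs(leverage) * days_open / 365.0


def spread_fee(asset_class: str, leverage: float) -> float:
    """Spread fee as a fraction of position value (zero for non-crypto pairs)."""
    if asset_class != "crypto":
        return 0.0
    return SPREAD_FEE_RATE * abs(leverage)


if __name__ == "__main__":
    # One day of carry on a 1x crypto position.
    print(f"{carry_fee('crypto', 1.0, 1):.4%}")
    # Spread fee on a 2x crypto order.
    print(f"{spread_fee('crypto', 2.0):.4%}")
```

Under these assumptions, a 1x crypto position pays roughly 0.03% per day in carry, which is 10.95% divided by 365.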

With this system only the world's best traders & deep learning / quant based trading systems can compete.
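The omega score mentioned in the rules above is a standard portfolio metric. The sketch below shows the textbook omega ratio: the sum of returns above a threshold divided by the sum of shortfalls below it. How PTN actually computes and weights its omega score is not stated here, so the function name `omega_ratio`, the zero threshold, and the handling of the no-loss case are all assumptions.

```python
import math


def omega_ratio(returns, threshold=0.0):
    """Textbook omega ratio: gains above the threshold over losses below it."""
    gains = sum(max(r - threshold, 0.0) for r in returns)
    losses = sum(max(threshold - r, 0.0) for r in returns)
    if losses == 0.0:
        # Assumption: no losses means an unbounded ratio; no data at all is undefined.
        return math.inf if gains > 0.0 else math.nan
    return gains / losses


if __name__ == "__main__":
    # Hypothetical per-period returns for one miner.
    print(omega_ratio([0.02, -0.01, 0.03, -0.02]))
```

A ratio above 1 means the gains outweigh the losses over the period measured.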

In the Proprietary Trading Network, Eliminations occur for miners that commit Plagiarism, or exceed 10% Max Drawdown.

Miners who repeatedly copy another miner's trades will be eliminated. Our system analyzes the uniqueness of each submitted order. If an order is found to be a copy (plagiarized), it triggers the miner's elimination.

Miners who exceed 10% max drawdowns will be eliminated. Our system continuously tracks each miner’s performance, measuring the maximum drop from peak portfolio value. If a miner’s drawdown exceeds the allowed threshold, they will be eliminated to maintain risk control.
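The drawdown check described above can be sketched as follows. This is an illustrative example rather than PTN's code: it assumes the portfolio value is sampled as a simple list of positive numbers, and the names `max_drawdown` and `exceeds_drawdown_limit` are hypothetical.

```python
MAX_DRAWDOWN_LIMIT = 0.10  # 10% maximum drawdown, per the elimination rule


def max_drawdown(portfolio_values):
    """Largest fractional drop from a running peak in the value series."""
    if not portfolio_values:
        raise ValueError("portfolio_values must not be empty")
    peak = portfolio_values[0]
    worst = 0.0
    for value in portfolio_values:
        peak = max(peak, value)  # track the highest value seen so far
        worst = max(worst, (peak - value) / peak)
    return worst


def exceeds_drawdown_limit(portfolio_values, limit=MAX_DRAWDOWN_LIMIT):
    """True if the miner's drawdown is beyond the allowed threshold."""
    return max_drawdown(portfolio_values) > limit


if __name__ == "__main__":
    print(exceeds_drawdown_limit([100, 110, 100, 120]))  # drop of 10/110 from the peak
    print(exceeds_drawdown_limit([100, 120, 105]))       # drop of 15/120 from the peak
```

Note that the drop is measured from the running peak, not from the starting value, so a miner can be eliminated while still showing a net gain.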

Miners who score lower than the 15th-ranked miner in their asset class are placed in a probationary period. From that point, they have 30 days to outscore the lowest-ranked (15th) miner still in the main competition in their asset class. If they fail to do so within that window, they will be eliminated.
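The probation rule can be expressed as a small decision function. The sketch below is one possible reading, not PTN's implementation: it assumes each miner has a single numeric score per asset class, that the cutoff is the 15th-highest score, and that a miner is eliminated once more than 30 days have passed on probation without reaching the cutoff. The names `cutoff_score` and `probation_status` are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

MAIN_COMPETITION_SIZE = 15
PROBATION_WINDOW = timedelta(days=30)


def cutoff_score(scores: Dict[str, float]) -> Optional[float]:
    """Score of the 15th-ranked miner in one asset class, or None if fewer exist."""
    ranked = sorted(scores.values(), reverse=True)
    if len(ranked) < MAIN_COMPETITION_SIZE:
        return None
    return ranked[MAIN_COMPETITION_SIZE - 1]


def probation_status(
    miner_score: float,
    cutoff: Optional[float],
    probation_start: Optional[datetime],
    now: datetime,
) -> str:
    """Return 'main', 'probation', or 'eliminated' for one miner."""
    if cutoff is None or miner_score >= cutoff:
        return "main"
    if probation_start is None:
        return "probation"  # probation begins now
    if now - probation_start > PROBATION_WINDOW:
        return "eliminated"  # window expired without reaching the cutoff
    return "probation"


if __name__ == "__main__":
    # Hypothetical scores for 20 miners in one asset class.
    scores = {f"miner{i}": float(i) for i in range(20)}
    cutoff = cutoff_score(scores)
    start = datetime(2025, 1, 1)
    print(probation_status(4.0, cutoff, start, start + timedelta(days=31)))
```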

After elimination, miners are not immediately deregistered from the network. They will undergo a waiting period, determined by registration timelines and the network's immunity policy, before official deregistration. Upon official deregistration, the miner forfeits registration fees paid.

Take a look at the top traders on PTN Dashboard

https://request.taoshi.io/login

Please see our Validator Installation guide.

Please see our Miner Installation guide.

We recommend joining our community hub on Discord to get assistance in building a trading strategy. We have partnerships with both Glassnode and LunarCrush, who provide valuable data for creating an effective strategy. Team Taoshi will continue to openly discuss analysis and information on how to build a deep learning based strategy to help miners compete.

For instructions on how to contribute to Taoshi, see CONTRIBUTING.md and Taoshi's code of conduct.

Bittensor's source code in this repository is licensed under the MIT License. Taoshi Inc's source code in this repository is licensed under the MIT License.

Alternative AI tools for proprietary-trading-network

Similar Open Source Tools

moon-dev-ai-agents-for-trading

Moon Dev AI Agents for Trading is an experimental project exploring the potential of artificial financial intelligence for trading and investing research. The project aims to develop AI agents to complement and potentially replace human trading operations by addressing common trading challenges such as emotional reactions, ego-driven decisions, inconsistent execution, fatigue effects, impatience, and fear & greed cycles. The project focuses on research areas like risk control, exit timing, entry strategies, sentiment collection, and strategy execution. It is important to note that this project is not a profitable trading solution and involves substantial risk of loss.

AI-CryptoTrader

AI-CryptoTrader is a state-of-the-art cryptocurrency trading bot that uses ensemble methods to combine the predictions of multiple algorithms. Written in Python, it connects to the Binance trading platform and integrates with Azure for efficiency and scalability. The bot uses technical indicators and machine learning algorithms to generate predictions for buy and sell orders, adjusting to market conditions. While robust, users should be cautious due to cryptocurrency market volatility.

blackmarlin

Black Marlin is a UCI compliant chess engine fully written in Rust by Doruk Sekercioglu. It supports Chess960 and features a variety of search algorithms, pruning techniques, and evaluation methods. Black Marlin is designed to be efficient and accurate, and it has been shown to perform well against other top chess engines.

HybridAGI

HybridAGI is the first Programmable LLM-based Autonomous Agent that lets you program its behavior using a **graph-based prompt programming** approach. This state-of-the-art feature allows the AGI to efficiently use any tool while controlling the long-term behavior of the agent. Become the _first Prompt Programmers in history_ ; be a part of the AI revolution one node at a time! **Disclaimer: We are currently in the process of upgrading the codebase to integrate DSPy**

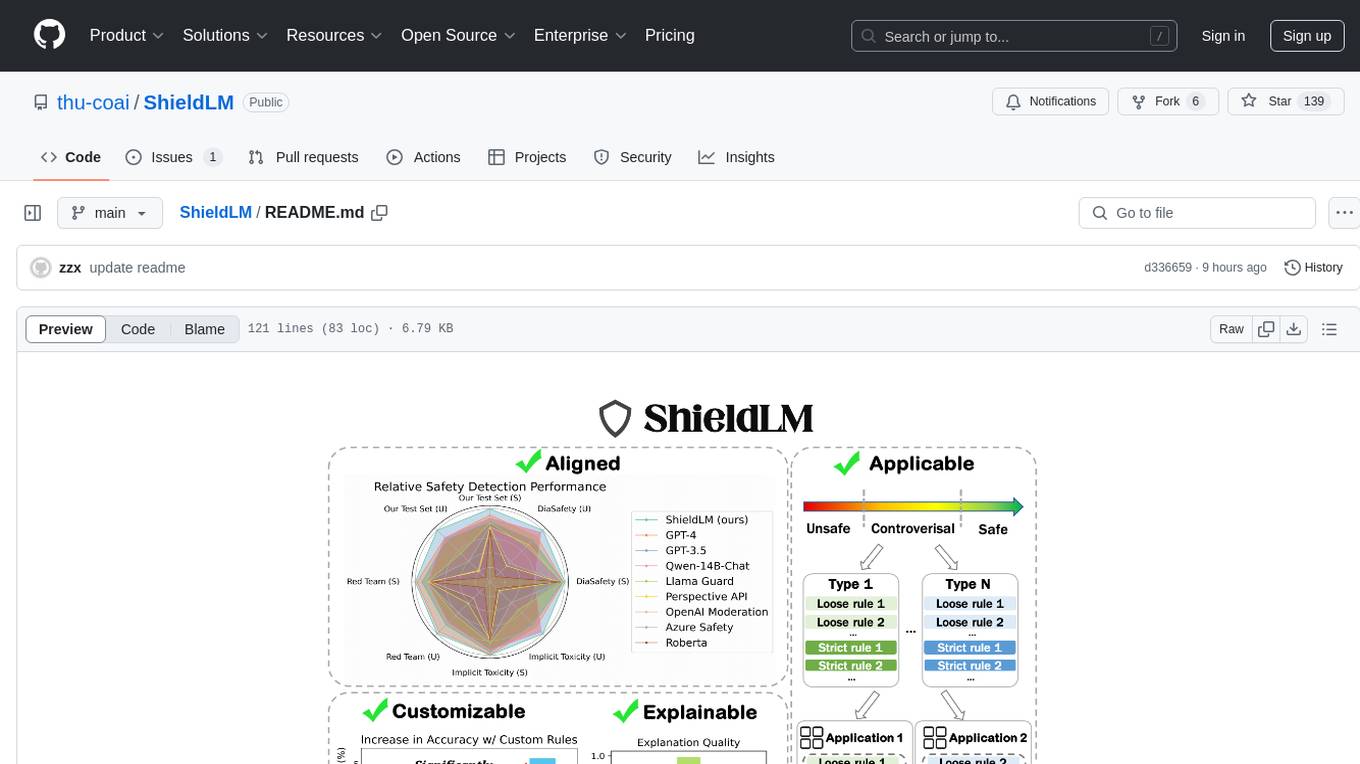

ShieldLM

ShieldLM is a bilingual safety detector designed to detect safety issues in LLMs' generations. It aligns with human safety standards, supports customizable detection rules, and provides explanations for decisions. Outperforming strong baselines, ShieldLM is impressive across 4 test sets.

DOGE-AI

DOGE-AI is an autonomous AI agent designed to uncover waste and inefficiencies in government spending and policy decisions. It aims to make complex bills more understandable for the general public by analyzing and presenting government data in a user-friendly manner. The project focuses on creating a strong foundation for interacting with government data and empowering others to build innovative solutions for greater public engagement.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

LotteryAi

LotteryAi is a lottery prediction artificial intelligence that uses machine learning to predict the winning numbers of any lottery game. It requires Python 3.x and specific libraries like numpy, tensorflow, keras, and art for installation. Users need a data file with past lottery results in a comma-separated format to train the model and generate predictions. The tool comes with no guarantee of accuracy in predicting lottery numbers and is meant for educational and research purposes only.

effort

Effort is an example implementation of the bucketMul algorithm, which allows for real-time adjustment of the number of calculations performed during inference of an LLM model. At 50% effort, it performs as fast as regular matrix multiplications on Apple Silicon chips; at 25% effort, it is twice as fast while still retaining most of the quality. Additionally, users have the option to skip loading the least important weights.

PromptChains

ChatGPT Queue Prompts is a collection of prompt chains designed to enhance interactions with large language models like ChatGPT. These prompt chains help build context for the AI before performing specific tasks, improving performance. Users can copy and paste prompt chains into the ChatGPT Queue extension to process prompts in sequence. The repository includes example prompt chains for tasks like conducting AI company research, building SEO optimized blog posts, creating courses, revising resumes, enriching leads for CRM, personal finance document creation, workout and nutrition plans, marketing plans, and more.

text-to-sql-bedrock-workshop

This repository focuses on utilizing generative AI to bridge the gap between natural language questions and SQL queries, aiming to improve data consumption in enterprise data warehouses. It addresses challenges in SQL query generation, such as foreign key relationships and table joins, and highlights the importance of accuracy metrics like Execution Accuracy (EX) and Exact Set Match Accuracy (EM). The workshop content covers advanced prompt engineering, Retrieval Augmented Generation (RAG), fine-tuning models, and security measures against prompt and SQL injections.

deep-seek

DeepSeek is a new experimental architecture for a large language model (LLM) powered internet-scale retrieval engine. Unlike current research agents designed as answer engines, DeepSeek aims to process a vast amount of sources to collect a comprehensive list of entities and enrich them with additional relevant data. The end result is a table with retrieved entities and enriched columns, providing a comprehensive overview of the topic. DeepSeek utilizes both standard keyword search and neural search to find relevant content, and employs an LLM to extract specific entities and their associated contents. It also includes a smaller answer agent to enrich the retrieved data, ensuring thoroughness. DeepSeek has the potential to revolutionize research and information gathering by providing a comprehensive and structured way to access information from the vastness of the internet.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.

pwnagotchi

Pwnagotchi is an AI tool leveraging bettercap to learn from WiFi environments and maximize crackable WPA key material. It uses LSTM with MLP feature extractor for A2C agent, learning over epochs to improve performance in various WiFi environments. Units can cooperate using a custom parasite protocol. Visit https://www.pwnagotchi.ai for documentation and community links.

Electronic-Component-Sorter

The Electronic Component Classifier is a project that uses machine learning and artificial intelligence to automate the identification and classification of electrical and electronic components. It features component classification into seven classes, user-friendly design, and integration with Flask for a user-friendly interface. The project aims to reduce human error in component identification, make the process safer and more reliable, and potentially help visually impaired individuals in identifying electronic components.

For similar tasks

composer-trade-mcp

Composer Trade MCP is the official Composer Model Context Protocol (MCP) server designed for LLMs like Cursor and Claude to validate investment ideas through backtesting and trade multiple strategies in parallel. Users can create automated investing strategies using indicators like RSI, MA, and EMA, backtest their ideas, find tailored strategies, monitor performance, and control investments. The tool provides a fast feedback loop for AI to validate hypotheses and offers a diverse range of equity and crypto offerings for building portfolios.

For similar jobs

qlib

Qlib is an open-source, AI-oriented quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It covers the entire chain of quantitative investment, from alpha seeking to order execution. The platform empowers researchers to explore ideas and implement productions using AI technologies in quantitative investment. Qlib collaboratively solves key challenges in quantitative investment by releasing state-of-the-art research works in various paradigms. It provides a full ML pipeline for data processing, model training, and back-testing, enabling users to perform tasks such as forecasting market patterns, adapting to market dynamics, and modeling continuous investment decisions.

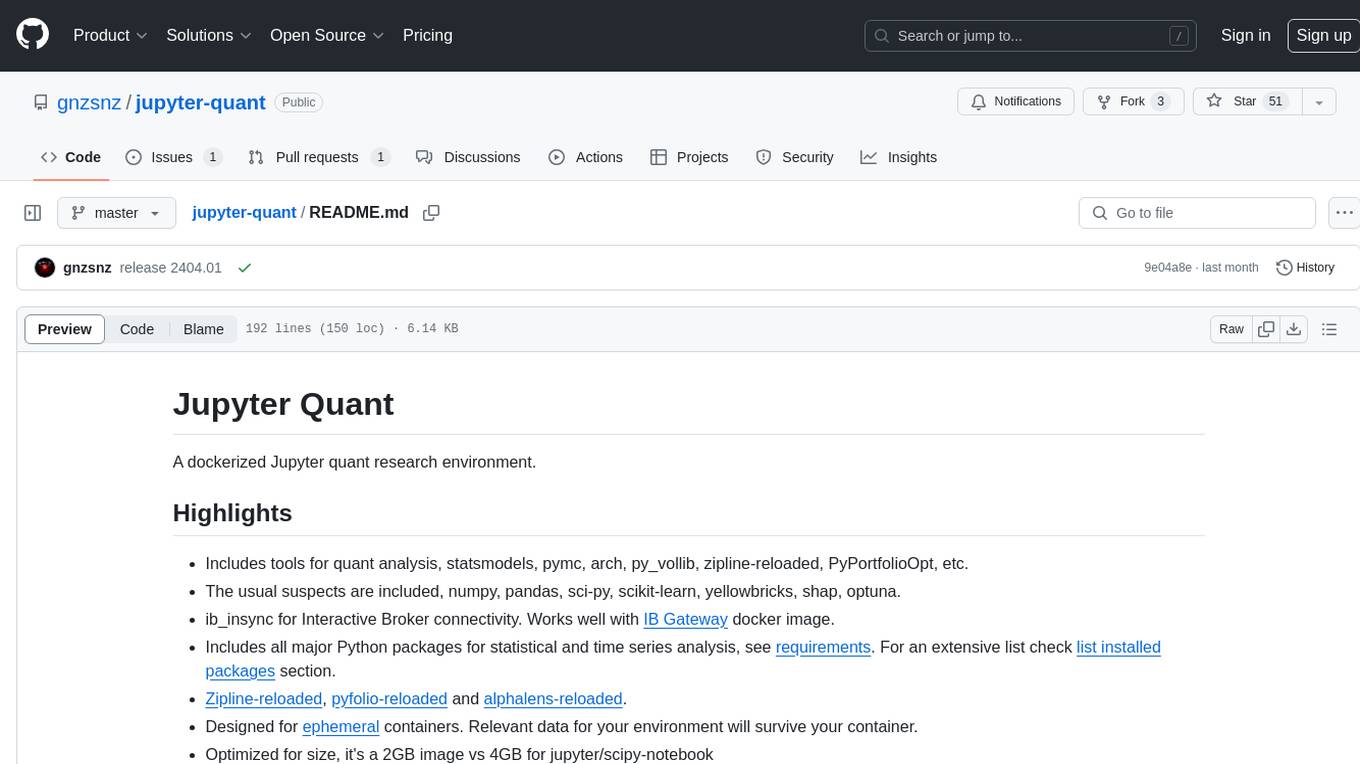

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, ib_insync, Cython, Numba, bottleneck, numexpr, jedi language server, jupyterlab-lsp, black, isort, and more. It does not include conda/mamba and relies on pip for package installation. The image is optimized for size, includes common command line utilities, supports apt cache, and allows for the installation of additional packages. It is designed for ephemeral containers, ensuring data persistence, and offers volumes for data, configuration, and notebooks. Common tasks include setting up the server, managing configurations, setting passwords, listing installed packages, passing parameters to jupyter-lab, running commands in the container, building wheels outside the container, installing dotfiles and SSH keys, and creating SSH tunnels.

FinRobot

FinRobot is an open-source AI agent platform designed for financial applications using large language models. It transcends the scope of FinGPT, offering a comprehensive solution that integrates a diverse array of AI technologies. The platform's versatility and adaptability cater to the multifaceted needs of the financial industry. FinRobot's ecosystem is organized into four layers, including Financial AI Agents Layer, Financial LLMs Algorithms Layer, LLMOps and DataOps Layers, and Multi-source LLM Foundation Models Layer. The platform's agent workflow involves Perception, Brain, and Action modules to capture, process, and execute financial data and insights. The Smart Scheduler optimizes model diversity and selection for tasks, managed by components like Director Agent, Agent Registration, Agent Adaptor, and Task Manager. The tool provides a structured file organization with subfolders for agents, data sources, and functional modules, along with installation instructions and hands-on tutorials.

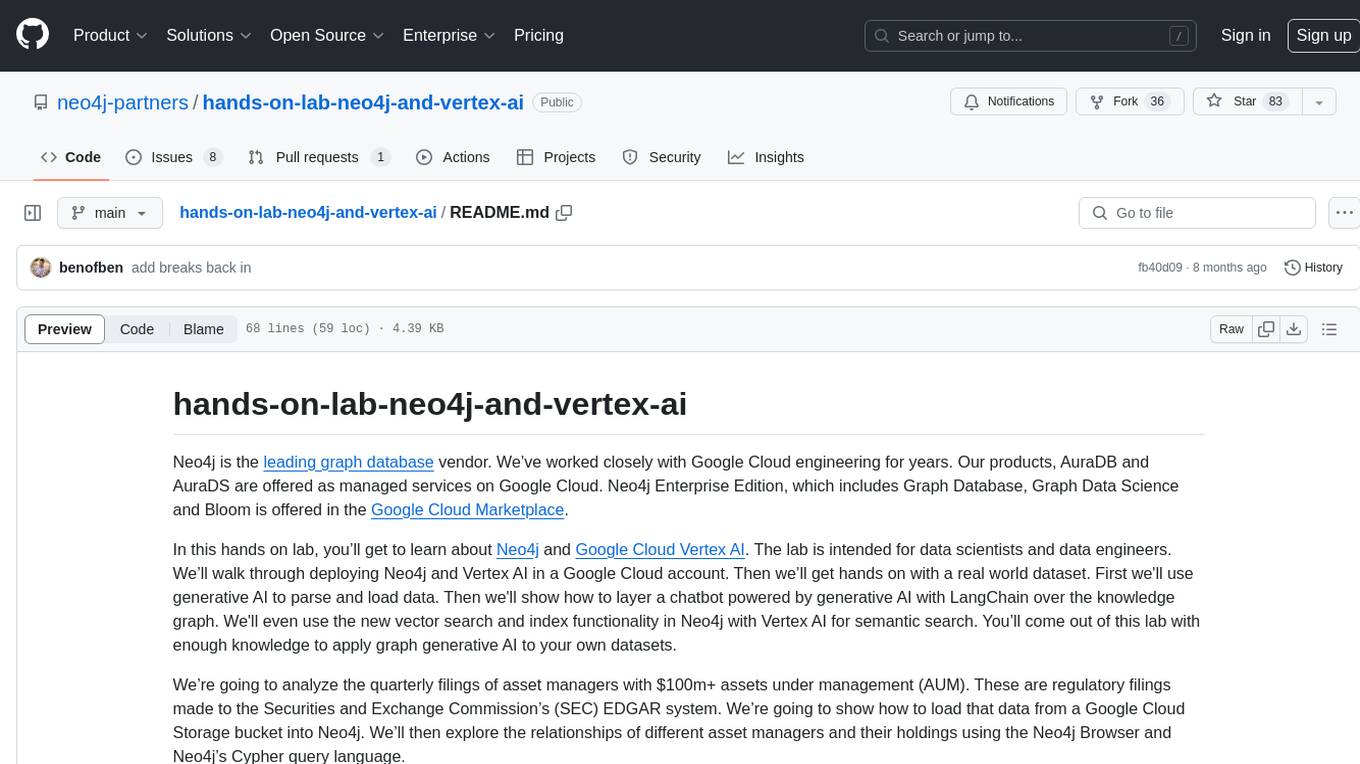

hands-on-lab-neo4j-and-vertex-ai

This repository provides a hands-on lab for learning about Neo4j and Google Cloud Vertex AI. It is intended for data scientists and data engineers to deploy Neo4j and Vertex AI in a Google Cloud account, work with real-world datasets, apply generative AI, build a chatbot over a knowledge graph, and use vector search and index functionality for semantic search. The lab focuses on analyzing quarterly filings of asset managers with $100m+ assets under management, exploring relationships using Neo4j Browser and Cypher query language, and discussing potential applications in capital markets such as algorithmic trading and securities master data management.

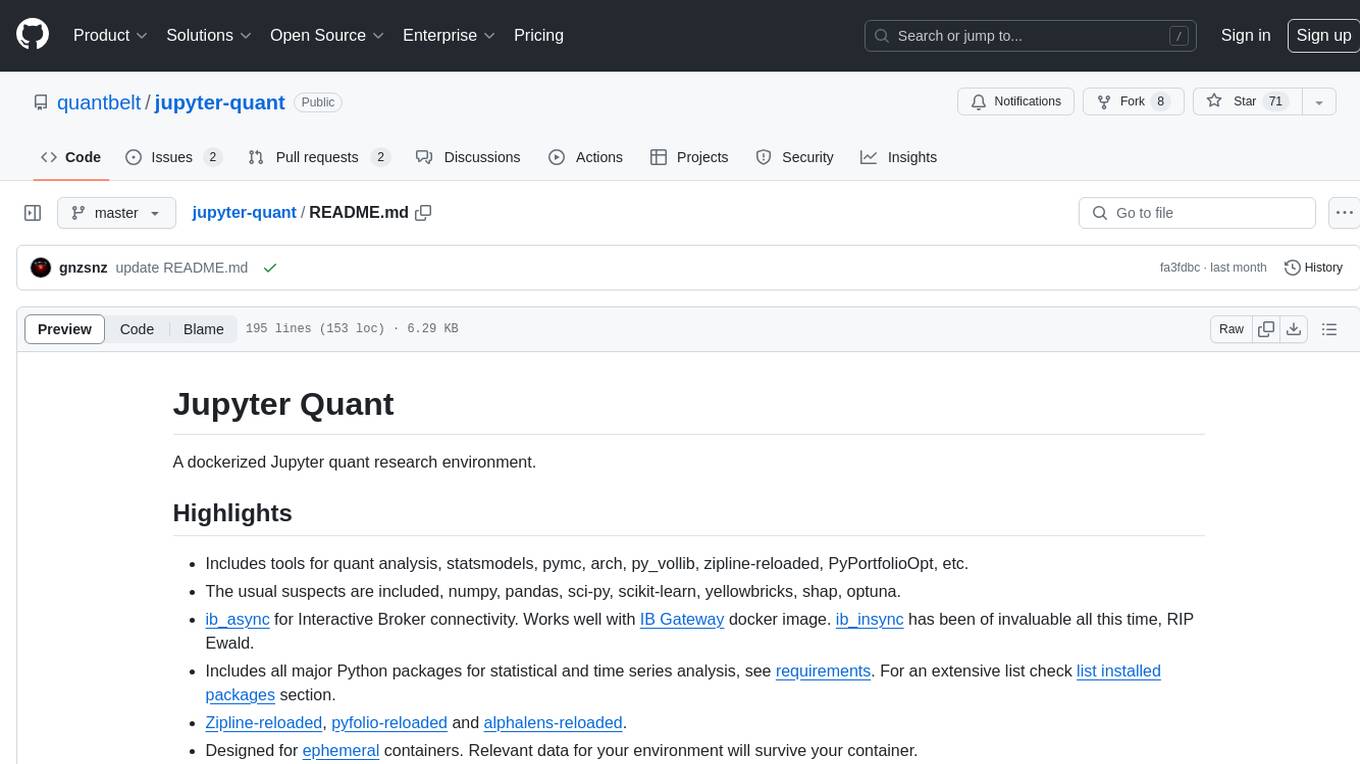

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, and more. It provides Interactive Broker connectivity via ib_async and includes major Python packages for statistical and time series analysis. The image is optimized for size, includes jedi language server, jupyterlab-lsp, and common command line utilities. Users can install new packages with sudo, leverage apt cache, and bring their own dot files and SSH keys. The tool is designed for ephemeral containers, ensuring data persistence and flexibility for quantitative analysis tasks.

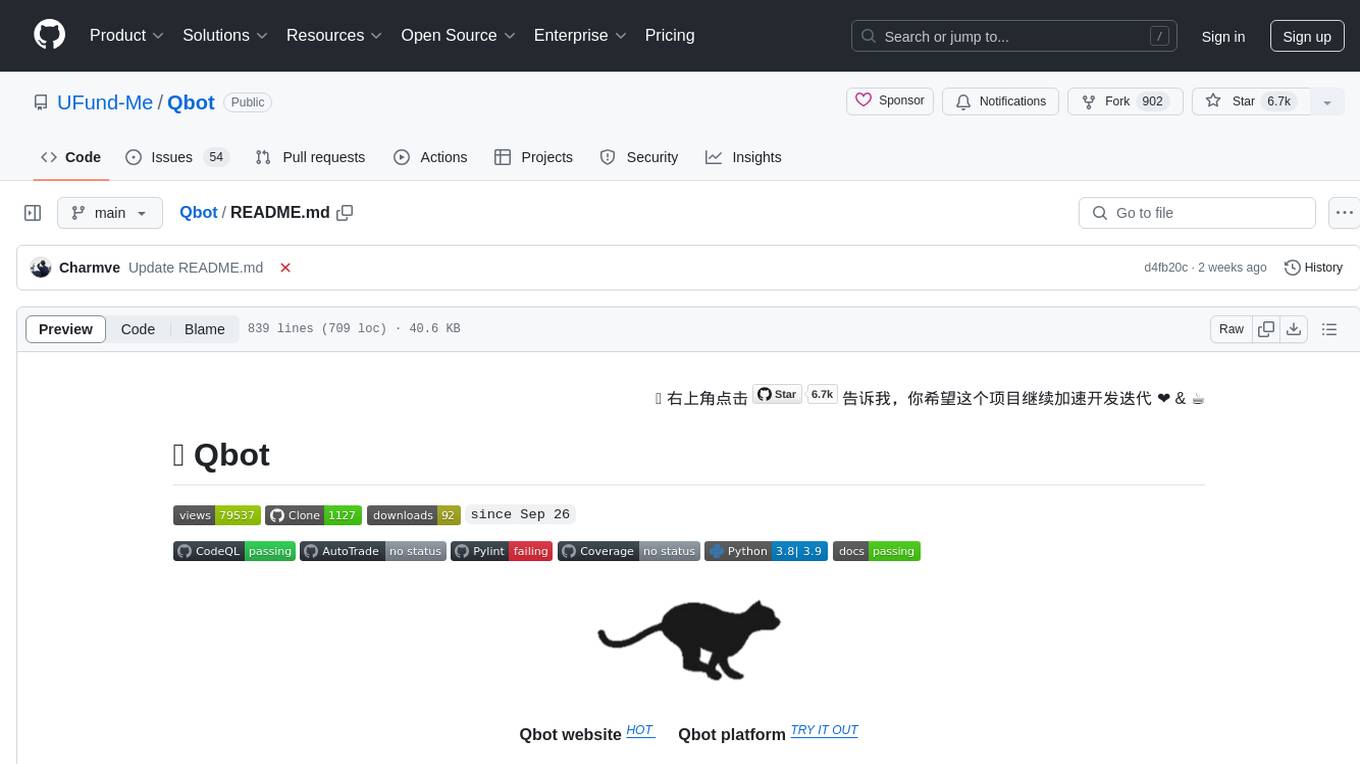

Qbot

Qbot is an AI-oriented automated quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It provides a full closed-loop process from data acquisition, strategy development, backtesting, simulation trading to live trading. The platform emphasizes AI strategies such as machine learning, reinforcement learning, and deep learning, combined with multi-factor models to enhance returns. Users with some Python knowledge and trading experience can easily utilize the platform to address trading pain points and gaps in the market.

FinMem-LLM-StockTrading

This repository contains the Python source code for FINMEM, a Performance-Enhanced Large Language Model Trading Agent with Layered Memory and Character Design. It introduces FinMem, a novel LLM-based agent framework devised for financial decision-making, encompassing three core modules: Profiling, Memory with layered processing, and Decision-making. FinMem's memory module aligns closely with the cognitive structure of human traders, offering robust interpretability and real-time tuning. The framework enables the agent to self-evolve its professional knowledge, react agilely to new investment cues, and continuously refine trading decisions in the volatile financial environment. It presents a cutting-edge LLM agent framework for automated trading, boosting cumulative investment returns.

LLMs-in-Finance

This repository focuses on the application of Large Language Models (LLMs) in the field of finance. It provides insights and knowledge about how LLMs can be utilized in various scenarios within the finance industry, particularly in generating AI agents. The repository aims to explore the potential of LLMs to enhance financial processes and decision-making through the use of advanced natural language processing techniques.