toolhive

ToolHive makes deploying MCP servers easy, secure and fun

Stars: 1598

ToolHive is a tool designed to simplify and secure the use of Model Context Protocol (MCP) servers. It lets users discover, deploy, and manage MCP servers by launching them in isolated containers with minimal setup and secure defaults. It offers instant deployment, compatibility with Docker and Kubernetes, integration with popular clients, and is available as a GUI desktop app, CLI, and Kubernetes Operator.

README:

Run any Model Context Protocol (MCP) server: securely, instantly, anywhere.

ToolHive includes everything you need to use MCP servers in production. Rather than build or combine components yourself, use ToolHive's Registry Server, Runtime, Gateway, and Portal to get up and running quickly and safely.

ToolHive keeps you in control of your MCP estate. Integrate with popular clients in seconds and deploy pre-vetted MCP servers in locked-down containers with a single click or command. ToolHive is available as a desktop app, web app, CLI, and Kubernetes operator.

- 📥 Downloads

- 📚 Documentation

- 🚀 Quickstart guides

- 💬 Discord

- 🤝 Contributing

ToolHive architecture: Gateway, Registry Server, Runtime, and Portal

ToolHive is built on a modular architecture to streamline secure MCP server management and integration. Here's how the main components work.

Gateway

Define dedicated endpoints from which your teams can securely and efficiently access tools.

- Orchestrate multiple tools into a virtual MCP with a deterministic workflow engine

- Define access policies and network endpoints

- Centralize control of security policy, authentication, authorization, auditing, etc.

- Integrate with your IdP for SSO (OIDC/OAuth compatible)

- Customize and filter tools and descriptions to improve performance and reduce token usage

- Connect with local clients like Claude Desktop, Cursor, VS Code, and VS Code Server
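The tool filtering and description customization mentioned above can be pictured with a minimal sketch. This is not ToolHive's implementation or API: the `Tool` type, `filter_tools`, and the word-count token estimate are all hypothetical names invented for illustration, assuming a gateway that holds an allowlist and a set of shorter replacement descriptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Tool:
    """Hypothetical stand-in for a tool advertised by an MCP server."""
    name: str
    description: str


def filter_tools(tools, allowed, overrides=None):
    """Keep only allowlisted tools and swap in shorter descriptions where given."""
    overrides = overrides or {}
    exposed = []
    for tool in tools:
        if tool.name not in allowed:
            continue  # hidden from clients entirely
        exposed.append(
            replace(tool, description=overrides.get(tool.name, tool.description))
        )
    return exposed


def approx_tokens(tools):
    """Crude proxy for prompt size: count whitespace-separated words."""
    return sum(len(f"{t.name} {t.description}".split()) for t in tools)


upstream = [
    Tool("search_issues", "Search issues across all repositories using a rich "
                          "query syntax with many optional qualifiers"),
    Tool("create_issue", "Create a new issue in a repository"),
    Tool("delete_repo", "Permanently delete a repository"),
]

exposed = filter_tools(
    upstream,
    allowed={"search_issues", "create_issue"},
    overrides={"search_issues": "Search issues by query"},
)
```

Fewer tools and shorter descriptions mean less text in the model's context, which is where the token savings would come from.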

Registry Server

Curate a catalog of trusted servers your teams can quickly discover and deploy.

- Integrate with the official MCP registry

- Add custom MCP servers

- Group servers based on role or use case

- Manage your registry with an API-driven interface (or embed in existing workflows for seamless integration and governance)

- Verify provenance and sign servers with built-in security controls

- Preset configurations and permissions for a frictionless user experience
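As a rough illustration of curating and grouping servers, the sketch below models a catalog that only accepts verified entries and can list them by group. The `ServerEntry` and `Catalog` classes, the `verified` flag, and the image names are assumptions made up for this example; they do not reflect the Registry Server's actual data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class ServerEntry:
    """Hypothetical catalog record for one MCP server."""
    name: str
    image: str
    groups: set = field(default_factory=set)
    verified: bool = False  # stands in for a provenance/signature check


class Catalog:
    """Toy in-memory catalog that rejects unverified entries."""

    def __init__(self):
        self._entries = {}

    def add(self, entry):
        if not entry.verified:
            raise ValueError(f"{entry.name}: provenance not verified")
        self._entries[entry.name] = entry

    def for_group(self, group):
        """Return the names of servers assigned to a role or use case."""
        return sorted(e.name for e in self._entries.values() if group in e.groups)


catalog = Catalog()
catalog.add(ServerEntry("fetch", "example.com/mcp/fetch:1.0", {"research"}, True))
catalog.add(ServerEntry("tickets", "example.com/mcp/tickets:2.1",
                        {"support", "research"}, True))

research_servers = catalog.for_group("research")
```

Rejecting entries at `add` time keeps the policy in one place, so every consumer of the catalog sees only vetted servers.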

Runtime

Deploy, run, and manage MCP servers locally or in a Kubernetes cluster with security guardrails.

- Deploy MCP servers in the cloud via Kubernetes for enterprise scalability

- Run MCP servers locally via Docker or Podman

- Proxy remote MCP servers securely for unified management

- Kubernetes Operator for fleet and resource management

- Leverage OpenTelemetry and Prometheus for monitoring and audit logging

Portal

Simplify MCP adoption for developers and knowledge workers across your enterprise.

- Cross-platform desktop app and browser-based cloud UI

- Make it easy for admins to curate MCP servers and tools

- Automate server discovery

- Install MCP servers with a single click

- Compatible with hundreds of AI clients

- Admins curate and organize MCP servers in the Registry, configuring access and policies.

- Users discover and request MCP servers from the Portal, and ToolHive orchestrates installation and access.

- Runtime securely deploys and manages MCP servers across local and cloud environments, integrating seamlessly with existing SDLC workflows, exporting analytics, and enforcing fine-grained access control.

- Gateway handles all inbound traffic, secures context and credentials, optimizes tool selection, and applies organizational policies.

Individual developers can get started in minutes with the desktop UI or CLI, then apply the same concepts in enterprise environments.

Key features:

- Run any MCP server from a container image, or build one dynamically from common package managers

- Manage encrypted secrets and control network isolation with simple, local tooling

- Test and validate MCP servers using built-in tools like the official MCP Inspector

- Optimize token usage and tool execution with the MCP Optimizer
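The first feature above distinguishes between running an existing container image and building one from a package manager. A minimal sketch of that decision follows, assuming the server is named with a URI-style prefix such as `uvx://` or `npx://`. The prefix table and the `classify_target` helper are illustrative assumptions; consult the CLI documentation for the forms ToolHive actually accepts.

```python
# Assumed mapping from a package-manager prefix to the ecosystem it implies.
PACKAGE_SCHEMES = {"uvx": "python", "npx": "node", "go": "go"}


def classify_target(target: str):
    """Decide whether a run target is a package to build or an image to pull.

    Returns a tuple of (kind, ecosystem, reference).
    """
    if "://" in target:
        scheme, _, package = target.partition("://")
        if scheme not in PACKAGE_SCHEMES:
            raise ValueError(f"unsupported scheme: {scheme}")
        return ("package", PACKAGE_SCHEMES[scheme], package)
    # Anything without a scheme is treated as a container image reference.
    return ("image", None, target)


from_package = classify_target("uvx://example-mcp-server")
from_image = classify_target("example.com/mcp/server:latest")
```

Either way the server ends up running in a container; the package path only adds a build step before launch.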

Get started with the UI: Quickstart, How-to guides

Get started with the CLI: Quickstart, How-to guides, Command reference

MCP guides: learn how to run common MCP servers with ToolHive

Teams and organizations manage MCP servers and registries centrally using familiar Kubernetes workflows.

Key features:

- Custom Resource Definitions for MCP servers, registries, and other ToolHive components

- Secure execution with container-based isolation and multi-namespace support

- Automated service creation and discovery, with ingress integration for secure access

- Enterprise-grade security and observability: OIDC/OAuth SSO, secure token exchange, audit logging, OpenTelemetry, and Prometheus metrics

- Hybrid registry server: curate from upstream registries, dynamically register local MCP servers, or proxy trusted remote services

Get started: Quickstart, How-to guides, CRD reference, Example manifests

ToolHive's complete solution for teams and enterprises supports MCP servers across all environments: on developer machines, inside your Kubernetes clusters, or hosted externally by trusted SaaS providers.

End users access approved MCP servers through a secure, browser-based cloud UI. Developers can also connect using the ToolHive CLI or desktop UI for advanced integration and testing workflows.

Enterprise teams can also leverage ToolHive to integrate MCP servers into custom internal tools, agentic workflows, or chat-based interfaces, using the same runtime and access controls.

We welcome contributions and feedback from the community!

If you have ideas, suggestions, or want to get involved, check out our contributing guide or open an issue. Join us in making ToolHive even better!

- Contribute to the CLI, API, and Kubernetes Operator (this repo)

- Contribute to the UI, registry, and docs

This project is licensed under the Apache 2.0 License.

Alternative AI tools for toolhive

Similar Open Source Tools

obot

Obot is an open source AI agent platform that allows users to build agents for various use cases such as copilots, assistants, and autonomous workflows. It offers integration with leading LLM providers, built-in RAG for data, easy integration with custom web services and APIs, and OAuth 2.0 authentication.

metorial-platform

Metorial Platform is an open source integration platform designed for developers to easily connect their AI applications to external data sources, APIs, and tools. It provides one-liner SDKs for JavaScript/TypeScript and Python, is powered by the Model Context Protocol (MCP), and offers features like self-hosting, large server catalog, embedded MCP Explorer, monitoring and debugging capabilities. The platform is built to scale for enterprise-grade applications and offers customizable options, open-source flexibility, multi-instance support, powerful SDKs, detailed documentation, full API access, and an advanced dashboard for managing integrations.

dreamfactory

DreamFactory is a self-hosted platform that provides governed API access to any data source for enterprise apps and local LLMs. It is a secure enterprise data access platform built on top of the Laravel framework, offering role-based access control, identity passthrough, and customization of API behavior using PHP, Python, and NodeJS scripting languages. DreamFactory allows users to generate powerful APIs for SQL and NoSQL databases, files, email, and push notifications in seconds, while ensuring security with features like user management, SSO authentication, OAuth, and Active Directory integration.

suna

Kortix is an open-source platform designed to build, manage, and train AI agents for various tasks. It allows users to create autonomous agents, from general-purpose assistants to specialized automation tools. The platform offers capabilities such as browser automation, file management, web intelligence, system operations, API integrations, and agent building tools. Users can create custom agents tailored to specific domains, workflows, or business needs, enabling tasks like research & analysis, browser automation, file & document management, data processing & analysis, and system administration.

countly-server

Countly is a privacy-first, AI-ready analytics and customer engagement platform built for organizations that require full data ownership and deployment flexibility. It can be deployed on-premises or in a private cloud, giving complete control over data, infrastructure, compliance, and security. Teams use Countly to understand user behavior across mobile, web, desktop, and connected devices, optimize product and customer experiences in real time, and automate and personalize customer engagement across channels. With flexible data tracking, customizable dashboards, and a modular plugin-based architecture, Countly scales with the product while ensuring long-term autonomy and zero vendor lock-in. Built for privacy, designed for flexibility, and ready for AI-driven innovation.

baserow

Baserow is a secure, open-source platform that allows users to build databases, applications, automations, and AI agents without writing any code. With enterprise-grade security compliance and both cloud and self-hosted deployment options, Baserow empowers teams to structure data, automate processes, create internal tools, and build custom dashboards. It features a spreadsheet database hybrid, AI Assistant for natural language database creation, GDPR, HIPAA, and SOC 2 Type II compliance, and seamless integration with existing tools. Baserow is API-first, extensible, and uses frameworks like Django, Vue.js, and PostgreSQL.

superglue

superglue is an AI-powered tool builder that abstracts away authentication, documentation handling, and data mapping between systems. It self-heals tools by auto-repairing failures due to API changes. Users can build lightweight data syncing tools, migrate SQL procedures to REST API calls, and create enterprise GPT tools. Interfaces include a web application, superglue SDK for CRUD functionality, and MCP Server for discoverability and execution of pre-built tools. Detailed documentation is available at docs.superglue.cloud.

kodit

Kodit is a Code Indexing MCP Server that connects AI coding assistants to external codebases, providing accurate and up-to-date code snippets. It improves AI-assisted coding by offering canonical examples, indexing local and public codebases, integrating with AI coding assistants, enabling keyword and semantic search, and supporting OpenAI-compatible or custom APIs/models. Kodit helps engineers working with AI-powered coding assistants by providing relevant examples to reduce errors and hallucinations.

open-wearables

Open Wearables is an open-source platform that unifies wearable device data from multiple providers and enables AI-powered health insights through natural language automations. It provides a single API for building health applications faster, with embeddable widgets and webhook notifications. Developers can integrate multiple wearable providers, access normalized health data, and build AI-powered insights. The platform simplifies the process of supporting multiple wearables, handling OAuth flows, data mapping, and sync logic, allowing users to focus on product development. Use cases include fitness coaching apps, healthcare platforms, wellness applications, research projects, and personal use.

cossistant

Cossistant is an open source chat support widget tailored for the React ecosystem. It offers headless components for building customizable chat interfaces, real-time messaging with WebSocket technology, and tools for managing customer conversations. The tool is API-first, self-hosted, developer-friendly with TypeScript support, and provides complete integration flexibility. It uses technologies like Next.js, TailwindCSS, and WebSockets, and supports databases like PlanetScale for production and DBgin for local development. Cossistant is ideal for developers seeking a versatile chat solution that can be easily integrated into their applications.

deepflow

DeepFlow is an open-source project that provides deep observability for complex cloud-native and AI applications. It offers Zero Code data collection with eBPF for metrics, distributed tracing, request logs, and function profiling. DeepFlow is integrated with SmartEncoding to achieve Full Stack correlation and efficient access to all observability data. With DeepFlow, cloud-native and AI applications automatically gain deep observability, removing the burden of developers continually instrumenting code and providing monitoring and diagnostic capabilities covering everything from code to infrastructure for DevOps/SRE teams.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

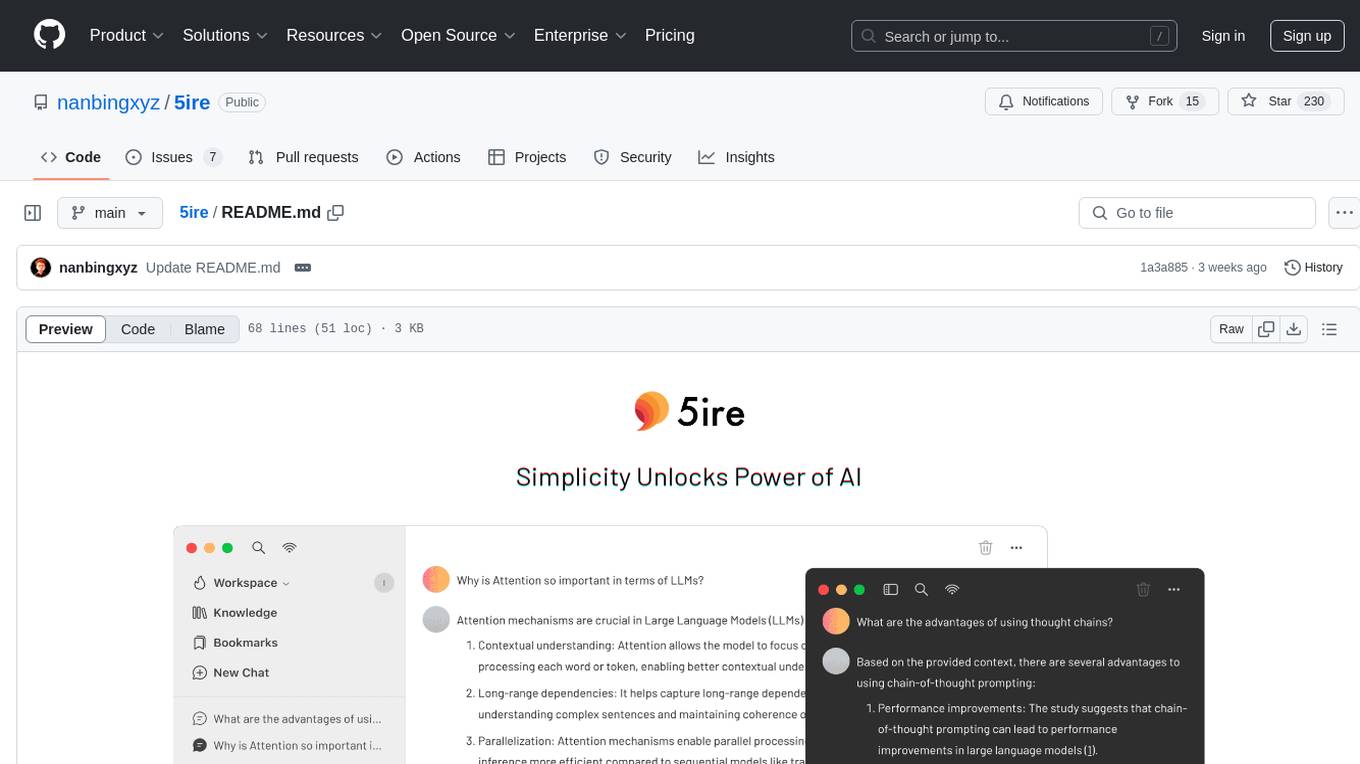

5ire

5ire is a cross-platform desktop client that integrates a local knowledge base for multilingual vectorization, supports parsing and vectorization of various document formats, offers usage analytics to track API spending, provides a prompts library for creating and organizing prompts with variable support, allows bookmarking of conversations, and enables quick keyword searches across conversations. It is licensed under the GNU General Public License version 3.

trigger.dev

Trigger.dev is an open source platform and SDK for creating long-running background jobs. It provides a JavaScript and TypeScript SDK, tasks with no timeouts, retries, queues, schedules, observability, React hooks, a Realtime API, custom alerts, and elastic scaling, and it works with your existing tech stack. Users can create tasks in their codebase, deploy tasks using the SDK, manage tasks in different environments, and have full visibility of job runs. The platform offers a trace view of every task run for detailed monitoring. To get started, create an account, set up a project, and follow the onboarding instructions. Self-hosting and development guides are available for users interested in contributing or hosting Trigger.dev.

genkit

Firebase Genkit (beta) is a framework with tooling to help app developers build, test, deploy, and monitor AI-powered features. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides a unified API for generation, context-aware AI features, evaluation of AI workflows, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works with Firebase or Google Cloud projects through official plugins and templates.

For similar tasks

AppFlowy-Cloud

AppFlowy Cloud is a secure user authentication, file storage, and real-time WebSocket communication tool written in Rust. It is part of the AppFlowy ecosystem, providing an efficient and collaborative user experience. The tool offers deployment guides, development setup with Rust and Docker, debugging tips for components like PostgreSQL, Redis, Minio, and Portainer, and guidelines for contributing to the project.

toolhive

ToolHive is a tool designed to simplify and secure Model Context Protocol (MCP) servers. It allows users to easily discover, deploy, and manage MCP servers by launching them in isolated containers with minimal setup and security concerns. The tool offers instant deployment, secure default settings, compatibility with Docker and Kubernetes, seamless integration with popular clients, and availability as a GUI desktop app, CLI, and Kubernetes Operator.

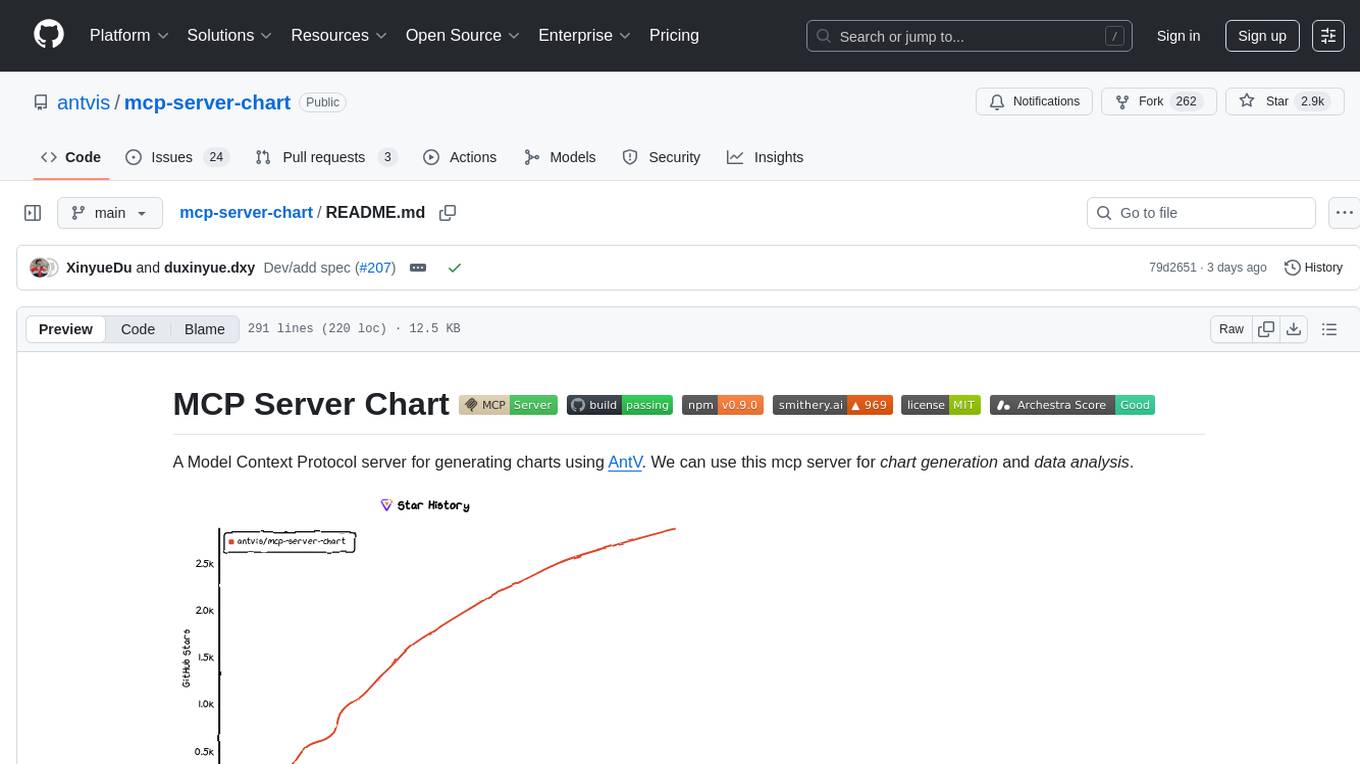

mcp-server-chart

mcp-server-chart is a Helm chart for deploying a Minecraft server on Kubernetes. It simplifies the process of setting up and managing a Minecraft server in a Kubernetes environment. The chart includes configurations for specifying server settings, resource limits, and persistent storage options. With mcp-server-chart, users can easily deploy and scale Minecraft servers on Kubernetes clusters, ensuring high availability and performance for multiplayer gaming experiences.

HMusic

HMusic is an intelligent music player that supports Xiaomi AI speakers. It offers dual modes: xiaomusic server mode and Xiaomi IoT direct connection mode. Users can choose between these modes based on their preferences and needs. The direct connection mode is suitable for regular users who want a plug-and-play experience, while the xiaomusic mode is ideal for users with NAS/servers who require advanced features like a local music library, playlists, and progress control. The tool provides functionalities such as music search, playback, and volume control, catering to a wide range of music enthusiasts.

toolhive-studio

ToolHive Studio is an experimental project under active development and testing, providing an easy way to discover, deploy, and manage Model Context Protocol (MCP) servers securely. Users can launch any MCP server in a locked-down container with just a few clicks, eliminating manual setup, security concerns, and runtime issues. The tool ensures instant deployment, default security measures, cross-platform compatibility, and seamless integration with popular clients like GitHub Copilot, Cursor, and Claude Code.

sealos

Sealos is a cloud operating system distribution based on the Kubernetes kernel, designed for a seamless development lifecycle. It allows users to spin up full-stack environments in seconds, push releases, and scale production workloads. With core features like easy application management, quick database creation, and cloud universality, Sealos aims for efficient and economical cloud management. The platform also emphasizes agility and security through its multi-tenancy sharing model. Sealos is supported by a community offering full documentation, Discord support, and an active development roadmap.

For similar jobs

AirGo

AirGo is a simple, easy-to-use proxy service management system with separate front end and back end, multi-user support, and multi-protocol support. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- Highly performant: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O

- Ease of use: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing

- Dynamic batching: aggregate requests from different users for batched inference and distribute results back

- Pipelined stages: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads

- Cloud friendly: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems

- Do one thing well: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains the shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.