airdash

A Flutter and WebRTC file sharing app for sending files to any device from anywhere

Stars: 533

AirDash is a file sharing tool for transferring photos and files securely between devices on different platforms. Files are fully encrypted and transferred directly between devices. Transfers can be started from the native mobile share sheet or by drag and drop on desktop. The tool supports all major platforms and app stores, works across networks, and automatically uses the best and fastest connection available. Key technologies include Flutter 3 for the apps, WebRTC for file and data transfers, and Firebase (Firestore, Functions, and Hosting) for signaling, config storage, device pairing, and hosting. Release automation uses the App Store Connect API and the Microsoft Store submission API. AirDash is free to use for any number of files of any size.

README:

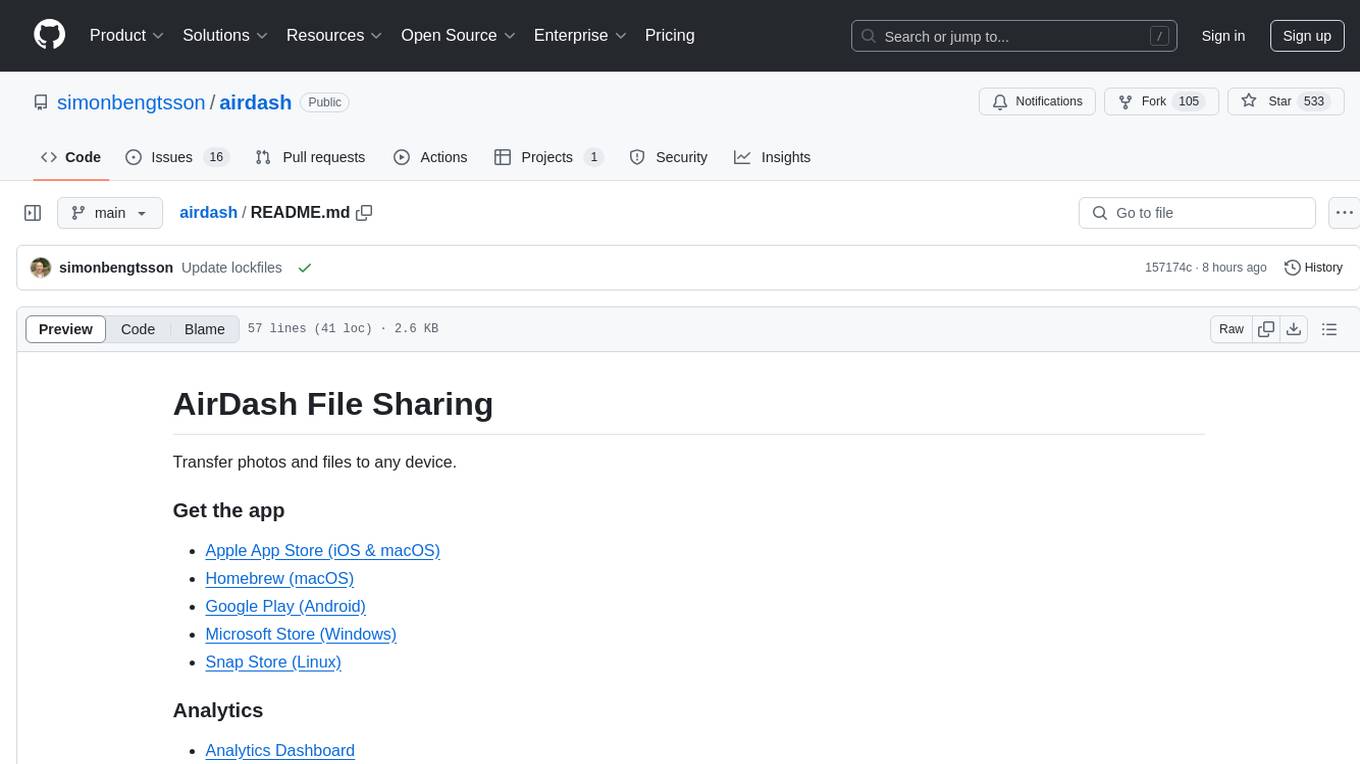

Transfer photos and files to any device.

- Apple App Store (iOS & macOS)

- Homebrew (macOS)

- Google Play (Android)

- Microsoft Store (Windows)

- Snap Store (Linux)

- Support for all major platforms and app stores (iOS, macOS, Windows, Linux and Android)

- Free forever to send any number of files of any size

- Maximum privacy and security by fully encrypting files and transferring them directly between devices

- Quickly start transfers using native mobile share sheet and drag and drop on desktop

- Send files anywhere (no need to be on the same network or nearby)

- Automatically uses the best and fastest connection available (Wi-Fi, mobile internet, Ethernet, etc.)

- Flutter 3 (iOS, macOS, Android, Linux and Windows apps)

- WebRTC (file and data transfers)

- Firebase Firestore (WebRTC signaling and config storage)

- Firebase Functions (device pairing and config automation)

- Firebase Hosting (website and static files hosting)

- App Store Connect API and Microsoft Store submission API (release automation)

- Mixpanel (web and app analytics)

- Sentry (app monitoring and error tracking)

- Create a Firebase project (https://console.firebase.google.com) and enable Firestore and anonymous authentication

- Create a .env file by duplicating the .env.sample file

- Replace the Firebase project ID and web API key in the .env file with the ones for your project (Firebase console -> Project settings)

- Run `dart tools/scripts.dart app_env` to get an env.dart file

- Deploy the pairing backend function with `cd functions && npm i && npx firebase deploy --only pairing`

- Run the app using your editor or `flutter run`

By default, a Google STUN server is used to connect peers. The simplest way to enable TURN servers as well is to use Twilio (https://www.twilio.com/stun-turn). Create a functions/.env file similar to the functions/.env-sample file and deploy the updateTwilioToken backend function.
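As a rough illustration of how STUN and TURN entries end up in a WebRTC connection, the sketch below builds an `iceServers` list. It is not taken from the AirDash source: the `IceServer` and `TurnCredentials` types, the `buildIceServers` function, and the specific Google STUN URL are assumptions made for the example.

```typescript
// Minimal shape of an ICE server entry, mirroring the WebRTC RTCIceServer dictionary.
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

// Hypothetical shape of short-lived TURN credentials (for example, ones
// fetched from a backend function). Not the AirDash data model.
interface TurnCredentials {
  urls: string[];
  username: string;
  credential: string;
}

// Assumption: a public Google STUN endpoint. The README only says
// "a Google STUN server" without naming the URL.
const DEFAULT_STUN: IceServer = { urls: "stun:stun.l.google.com:19302" };

// Build the iceServers list: STUN is always present, TURN is added only
// when credentials are available.
function buildIceServers(turn?: TurnCredentials): IceServer[] {
  const servers: IceServer[] = [DEFAULT_STUN];
  if (turn && turn.urls.length > 0) {
    servers.push({
      urls: turn.urls,
      username: turn.username,
      credential: turn.credential,
    });
  }
  return servers;
}
```

In a browser, the result could be passed as `new RTCPeerConnection({ iceServers: buildIceServers(credentials) })`. Keeping STUN as the always-present entry means peers can still attempt a direct connection when no TURN credentials are configured.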

Contributions are very much welcome on everything from bug reports to feature development. If you want to change something major, open an issue about it first to make sure it will be considered for merge.

Prepare

- Update libraries

  - flutter pub get

  - cd ios && pod update

  - cd macos && pod update

Update version

- Update changelog.md and version in pubspec.yaml and snapcraft.yaml

- git commit -am vX.X.X

- git tag "vX.X.X"

- git push && git push -f --tags
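The version has to be kept in sync across pubspec.yaml, snapcraft.yaml, and the git tag, which is easy to get wrong by hand. The sketch below shows one way to check this before tagging. It is a hypothetical helper, not part of the AirDash repository: `readVersion` and `checkRelease` are invented names, the check assumes `version:` is a top-level key in both files, and changelog.md is not inspected.

```typescript
// Extract the top-level `version:` value from YAML text, ignoring optional
// quotes and any build suffix such as "+45" (used in pubspec.yaml).
function readVersion(yamlText: string): string | null {
  const match = yamlText.match(/^version:\s*['"]?([0-9]+\.[0-9]+\.[0-9]+)/m);
  return match ? match[1] : null;
}

// Compare a tag like "v1.2.3" against the versions found in both files.
// Returns a list of human-readable problems; an empty list means all match.
function checkRelease(tag: string, pubspec: string, snapcraft: string): string[] {
  const expected = tag.replace(/^v/, "");
  const problems: string[] = [];
  const files: [string, string][] = [
    ["pubspec.yaml", pubspec],
    ["snapcraft.yaml", snapcraft],
  ];
  for (const [name, text] of files) {
    const found = readVersion(text);
    if (found !== expected) {
      const shown = found === null ? "no version" : found;
      problems.push(name + ": expected " + expected + ", found " + shown);
    }
  }
  return problems;
}
```

A release script could read both files, call `checkRelease("vX.X.X", pubspecText, snapcraftText)`, and stop before `git tag` if the returned list is not empty.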

macOS

- flutter build macos

- Archive, then Distribute -> Export to App Store AND Distribute -> Direct Distribution (~/Downloads/airdash.app)

- npx appdmg appdmg.json ./build/airdash.dmg

iOS

- flutter build ipa

- Distribute with Transporter or Xcode (open build/ios/archive/MyApp.xcarchive)

Android

- flutter build appbundle

- cp build/app/outputs/bundle/release/app-release.aab build/airdash.aab

- open https://play.google.com/console/u/0/developers/6822011924129869646/app/4975414306006741094/tracks/production

- App Bundle Explorer -> Download signed apk -> build/airdash.apk

Windows

- Open Windows in VMware

- Open ~\Documents\airdash in VS Code

- git pull -r && flutter pub get

- dart run msix:create

- Copy msix file to build/airdash.msix

- Create new update -> open https://partner.microsoft.com/en-us/dashboard/products/9NL9K7CSG30T

Linux

- Open Ubuntu in VMware

- Open ~\Documents\airdash in VS Code

- git pull -r && flutter pub get

- flutter build linux --release

- snapcraft clean --use-lxd # required

- snapcraft --output build/airdash.snap --use-lxd

- snapcraft upload --release=stable build/airdash.snap

- Copy to mac ./build/airdash.snap

Create GitHub release

- Download macOS app file

- npx appdmg appdmg.json ./build/airdash.dmg

- open https://github.com/simonbengtsson/airdash/releases/new

- Attach

  - build/airdash.apk

  - build/airdash.dmg

  - build/airdash.msix

  - build/airdash.snap


Similar Open Source Tools

airdash

AirDash is a file sharing tool that allows users to transfer photos and files securely between devices on different platforms. It offers maximum privacy and security by encrypting files and transferring them directly. Users can quickly start transfers using native mobile share sheet and drag and drop on desktop. The tool supports all major platforms and app stores, and automatically selects the best and fastest connection available. Key technologies include Flutter 3 for app development, WebRTC for file transfers, and Firebase services for signaling, config storage, and release automation. AirDash is free to use and suitable for sharing any number of files of any size.

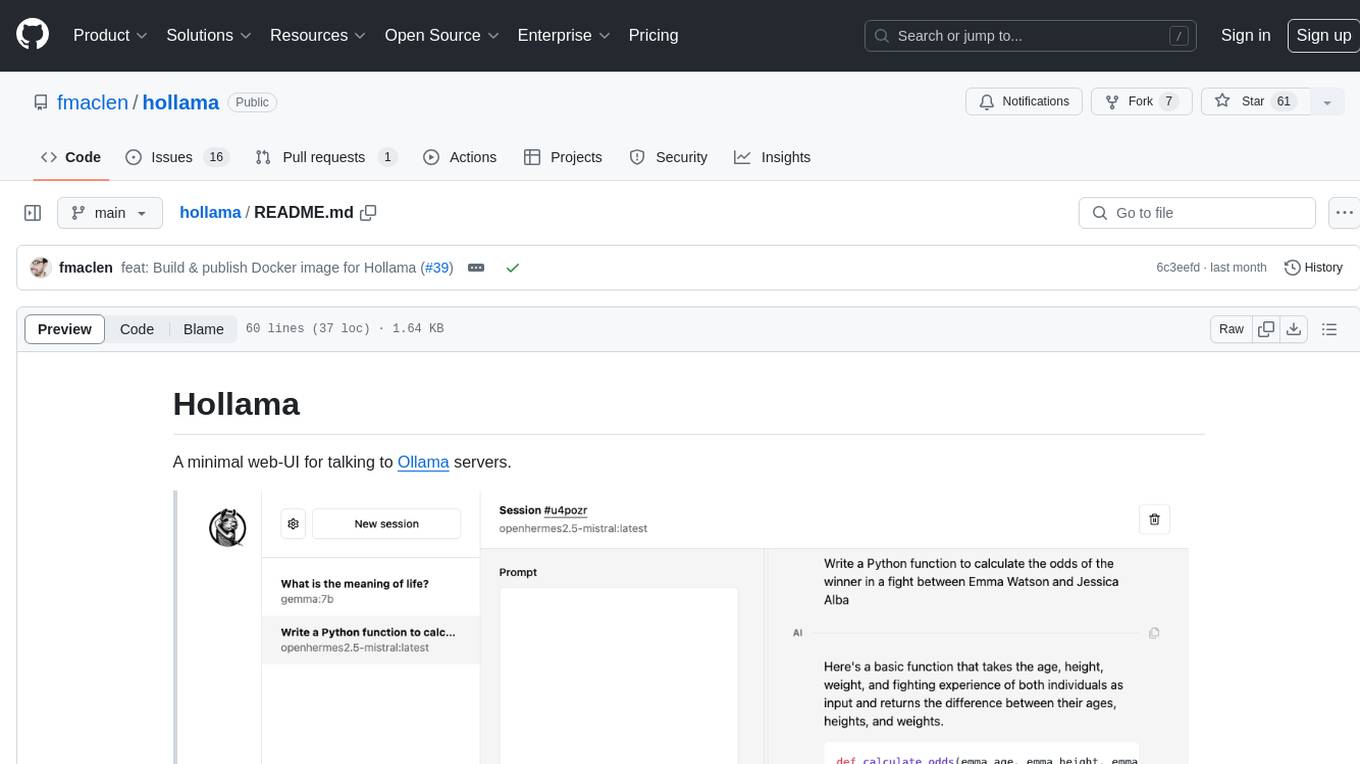

hollama

Hollama is a minimal web-UI tool designed for interacting with Ollama servers. It features large prompt fields, streams completions, ability to copy completions as raw text, Markdown parsing with syntax highlighting, and saves sessions/context in the browser's localStorage. Users can access the latest version of Hollama at https://hollama.fernando.is without sign up, and data is stored locally on the browser. The tool can also be run as a Docker image by executing a specific command. Developers can connect to an Ollama server by updating the ORIGIN settings. Hollama facilitates easy development by providing instructions to set up the environment, install dependencies, and start a development server. Building a production version of the app is straightforward with a single command, and deployment may require installing an adapter for the target environment.

livehelperchat

Live Helper Chat is an open-source application that simplifies live support on websites, handling over 10,000 chats per day with multiple operators. It offers features like chat transcripts, multiple chats simultaneously, chat search, file uploads, and more. The tool supports integrations with various platforms like Agora, Jitsi, Rest API, and AI services such as Rasa AI and ChatGPT. It provides advanced functionalities like XMPP support, Chrome extension, work hours, screenshot feature, and chat statistics generation. Live Helper Chat ensures user privacy with no third-party cookies dependency and offers top performance with enabled cache. The tool is highly customizable with template and module override systems, support for custom extensions, and changeable footer and header content.

BodhiApp

Bodhi App runs Open Source Large Language Models locally, exposing LLM inference capabilities as OpenAI API compatible REST APIs. It leverages llama.cpp for GGUF format models and huggingface.co ecosystem for model downloads. Users can run fine-tuned models for chat completions, create custom aliases, and convert Huggingface models to GGUF format. The CLI offers commands for environment configuration, model management, pulling files, serving API, and more.

quests

Quests is an open-source app builder that allows users to build and run apps on their computer using various AI models. It provides a desktop app for local development, supports multiple projects simultaneously, offers version control, and enables exportable apps. Users can bring their own AI models from providers like OpenAI, Anthropic, Google, etc. The tool also includes a coding agent for targeted edits and real-time linting, making it suitable for developers looking to leverage AI in their app development workflow.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

aps-toolkit

APS Toolkit is a powerful tool for developers, software engineers, and AI engineers to explore Autodesk Platform Services (APS). It allows users to read, download, and write data from APS, as well as export data to various formats like CSV, Excel, JSON, and XML. The toolkit is built on top of Autodesk.Forge and Newtonsoft.Json, offering features such as reading SVF models, querying properties database, exporting data, and more.

minimal-chat

MinimalChat is a minimal and lightweight open-source chat application with full mobile PWA support that allows users to interact with various language models, including GPT-4 Omni, Claude Opus, and various Local/Custom Model Endpoints. It focuses on simplicity in setup and usage while being fully featured and highly responsive. The application supports features like fully voiced conversational interactions, multiple language models, markdown support, code syntax highlighting, DALL-E 3 integration, conversation importing/exporting, and responsive layout for mobile use.

AIRAVAT

AIRAVAT is a multifunctional Android Remote Access Tool (RAT) with a GUI-based Web Panel that does not require port forwarding. It allows users to access various features on the victim's device, such as reading files, downloading media, retrieving system information, managing applications, SMS, call logs, contacts, notifications, keylogging, admin permissions, phishing, audio recording, music playback, device control (vibration, torch light, wallpaper), executing shell commands, clipboard text retrieval, URL launching, and background operation. The tool requires a Firebase account and tools like ApkEasy Tool or ApkTool M for building. Users can set up Firebase, host the web panel, modify Instagram.apk for RAT functionality, and connect the victim's device to the web panel. The tool is intended for educational purposes only, and users are solely responsible for its use.

Foxel

Foxel is a highly extensible private cloud storage solution for individuals and teams, featuring AI-powered semantic search. It offers unified file management, pluggable storage backends, semantic search capabilities, built-in file preview, permissions and sharing options, and a task processing center. Users can easily manage files, search content within unstructured data, preview various file types, share files, and process tasks asynchronously. Foxel is designed to centralize file management and enhance search capabilities for users.

QOwnNotes

QOwnNotes is an open source notepad with Markdown support and todo list manager for GNU/Linux, macOS, and Windows. It allows you to write down thoughts, edit, and search for them later from mobile devices. Notes are stored as plain text markdown files and synced with Nextcloud's/ownCloud's file sync functionality. QOwnNotes offers features like multiple note folders, restoration of older versions and trashed notes, sub-string searching, customizable keyboard shortcuts, markdown highlighting, spellchecking, tabbing support, scripting support, encryption of notes, dark mode theme support, and more. It supports hierarchical note tagging, note subfolders, sharing notes on Nextcloud/ownCloud server, portable mode, Vim mode, distraction-free mode, full-screen mode, typewriter mode, Evernote and Joplin import, and is available in over 60 languages.

Local-File-Organizer

The Local File Organizer is an AI-powered tool designed to help users organize their digital files efficiently and securely on their local device. By leveraging advanced AI models for text and visual content analysis, the tool automatically scans and categorizes files, generates relevant descriptions and filenames, and organizes them into a new directory structure. All AI processing occurs locally using the Nexa SDK, ensuring privacy and security. With support for multiple file types and customizable prompts, this tool aims to simplify file management and bring order to users' digital lives.

mobile-use

Mobile-use is an open-source AI agent that controls Android or iOS devices using natural language. It understands commands to perform tasks like sending messages and navigating apps. Features include natural language control, UI-aware automation, data scraping, and extensibility. Users can automate their mobile experience by setting up environment variables, customizing LLM configurations, and launching the tool via Docker or manually for development. The tool supports physical Android phones, Android simulators, and iOS simulators. Contributions are welcome, and the project is licensed under MIT.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates generating LLM prompts from codebases of any size, customizing prompt generation with Handlebars templates, respecting .gitignore, filtering and excluding files using glob patterns, displaying token count, including Git diff output, copying prompt to clipboard, saving prompt to an output file, excluding files and folders, adding line numbers to source code blocks, and more. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

clearml-server

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.


For similar jobs

AirGo

AirGo is a simple, easy-to-use proxy service management system with a separated front end and back end, multi-user support, and multi-protocol support. It supports VLESS, VMess, Shadowsocks, and Hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O

- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing

- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back

- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads

- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems

- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.