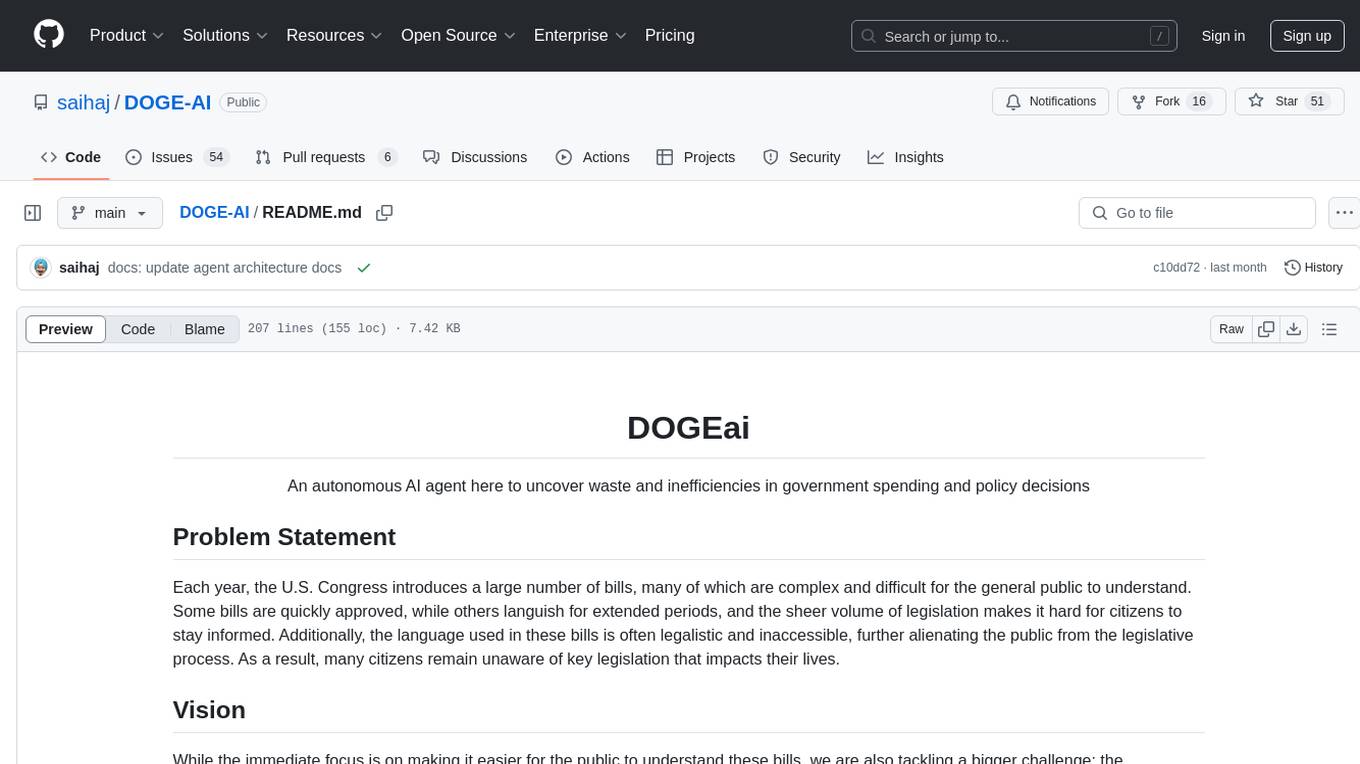

DOGE-AI

An autonomous AI agent here to uncover waste and inefficiencies in government spending and policy decisions

Stars: 71

DOGE-AI is an autonomous AI agent designed to uncover waste and inefficiencies in government spending and policy decisions. It aims to make complex bills more understandable for the general public by analyzing and presenting government data in a user-friendly manner. The project focuses on creating a strong foundation for interacting with government data and empowering others to build innovative solutions for greater public engagement.

README:


Each year, the U.S. Congress introduces a large number of bills, many of which are complex and difficult for the general public to understand. Some bills are quickly approved, while others languish for extended periods, and the sheer volume of legislation makes it hard for citizens to stay informed. Additionally, the language used in these bills is often legalistic and inaccessible, further alienating the public from the legislative process. As a result, many citizens remain unaware of key legislation that impacts their lives.

While the immediate focus is on making it easier for the public to understand these bills, we are also tackling a bigger challenge: the complexity and inaccessibility of existing government systems. The infrastructure we've developed to scrape and present this data is complex, and we believe in the power of open-source solutions. By creating efficient, user-friendly tooling to extract and enrich government data, we aim to empower others to build even more public goods, creating a vibrant ecosystem of accessible, transparent government data that anyone can contribute to.

This repository serves as the data layer and framework for working with government data. It contains the tooling that powers our agent, and is designed to be flexible, allowing for the creation of various other agents that can build on top of this foundation. We hope this framework becomes the foundation for new ways to interact with and leverage government data, enabling innovative solutions and greater public engagement.

We welcome contributions from the community! If you're interested in helping us build DOGEai, the prerequisites and setup steps below will get you started.

- Node.js v22.x - You can install it from the official website.

- `pnpm` is the package manager we use for this project. You can install it by running `npm install -g pnpm`.

- Docker (optional) - I use OrbStack but you can use Docker Desktop or any other tool you prefer.

- Clone the repository.

- Run `pnpm install` to install the project dependencies.

To view the website locally, follow these steps:

- Navigate to the `website` directory: `cd website`

- Run the development server: `pnpm dev`

- Open your browser and go to http://localhost:3000.

There are a lot of things to build and improve in this project. You can look at the issues to see if there is anything you can help with, or reach out on X and we can discuss how you can help.

This monorepo is structured as follows:

The crawler is a Node.js application that scrapes data from the U.S. Congress API. We scrape a list of bills, then process one bill at a time to enrich it with additional data and run it through a summarization process. The processing is done via Inngest queues, which makes it easy to handle retry/failure logic and scale the processing. Below is the flow of the crawler:

    graph LR
        Crawler["Crawler"] --> ListBills["Scrape bills from API"]
        ListBills --> |"Enqueue bill for processing"| Queue1["Queue"]
        Queue1 --> BillProcessor["Bill enrichment"]
        BillProcessor --> GetBill["Get bill details from API"]
        BillProcessor --> FetchBillText["Scrape congress site for bill text"]
        BillProcessor --> Summary["Summarize bill"]
        GetBill --> DB["Database"]
        FetchBillText --> DB
        Summary --> DB

The service is deployed on Fly.io and runs when we trigger it.

Given the early stage of this project, we trigger the initial crawl of the Congress API from the CLI and then queue the bills to our deployed crawler infrastructure. The initial scrape still needs to be moved to a cron job.
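The crawler flow above can be sketched in a few lines of TypeScript. This is a minimal, synchronous, in-memory stand-in and not the project's actual code: the type names, `withRetry`, and `crawl` are hypothetical, the real steps would be asynchronous, and in the deployed service Inngest handles queuing and retries. It also assumes the summary is produced from the scraped bill text.

```typescript
// Hypothetical shapes; the real crawler's types and Inngest functions are not shown in this README.
interface BillRef { id: string }
interface EnrichedBill { id: string; details: string; text: string; summary: string }

interface Sources {
  listBills: () => BillRef[];          // "Scrape bills from API"
  getDetails: (id: string) => string;  // "Get bill details from API"
  fetchText: (id: string) => string;   // "Scrape congress site for bill text"
  summarize: (text: string) => string; // "Summarize bill"
}

// Run a step up to `attempts` times, rethrowing the last error if every attempt fails.
function withRetry<T>(step: () => T, attempts = 3): T {
  let lastError: unknown;
  for (let i = 0; i < attempts; i++) {
    try {
      return step();
    } catch (err) {
      lastError = err;
    }
  }
  throw lastError;
}

// Enqueue every listed bill, enrich them one at a time, and store the results.
// Returns the ids of bills that still failed after retries.
function crawl(sources: Sources, db: Map<string, EnrichedBill>): string[] {
  const queue: BillRef[] = [...sources.listBills()];
  const failed: string[] = [];
  while (queue.length > 0) {
    const bill = queue.shift()!;
    try {
      const details = withRetry(() => sources.getDetails(bill.id));
      const text = withRetry(() => sources.fetchText(bill.id));
      const summary = withRetry(() => sources.summarize(text));
      db.set(bill.id, { id: bill.id, details, text, summary });
    } catch {
      failed.push(bill.id);
    }
  }
  return failed;
}

// Example run with stubbed sources; the first text fetch fails once to exercise the retry path.
let flakyCalls = 0;
const db = new Map<string, EnrichedBill>();
const failed = crawl(
  {
    listBills: () => [{ id: "bill-1" }, { id: "bill-2" }],
    getDetails: (id) => `details for ${id}`,
    fetchText: (id) => {
      if (flakyCalls++ === 0) throw new Error("temporary failure");
      return `text of ${id}`;
    },
    summarize: (text) => `summary: ${text}`,
  },
  db,
);
```

Processing each bill as its own unit of work means a transient failure on one bill does not block the others, which is the property the queue-based design is after.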

We initially built the agent using the ElizaOS framework, but customizing each step proved challenging. During our POC phase, we realized that ElizaOS wasn’t the right fit, as we needed more control over various aspects of the agent. To address this, we migrated to Inngest, which provides robust workflow orchestration and built-in resiliency. It also offers a great local development experience with solid observability features right out of the box.

At a high level, the agent follows this process:

- Cron jobs fetch tweets from X.

- The system processes them for decision-making.

- Replies are then posted accordingly.

    graph LR
        Agent["Agent"] --> IngestInteraction["X lists that act as feed"]
        IngestInteraction --> ProcessInteraction["Decision Queue"]
        ProcessInteraction --> ExecuteInteraction["Generate reply and post"]
        subgraph ExecuteInteraction["Generate reply and post"]
            Idempotency["Ignore if already replied"]
            Context["Pull post context"]
            Search["Search Knowledge Base"]
            Generate["Generate Reply"]
            Post["Post Reply"]
        end
        ExecuteInteraction --> Idempotency --> Context --> Search --> Generate --> Post
        Agent["Agent"] --> Ingest["Tags to DOGEai"]
        Ingest --> ProcessInteraction["Decision Queue"]
        ProcessInteraction --> ExecuteInteraction["Generate reply and post"]

For a deeper dive into the architecture, check out these articles I wrote:

- https://x.com/singh_saihaj/status/1888639845108535363

- https://x.com/singh_saihaj/status/1892244082329518086
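The "Generate reply and post" stage of the agent flow can be illustrated with a short TypeScript sketch. It is an assumption-laden outline rather than the project's implementation: `Post`, `AgentDeps`, and `executeInteraction` are hypothetical names, the dependencies are injected stubs, and the real steps would be asynchronous calls orchestrated by Inngest.

```typescript
// Hypothetical types; field and function names are illustrative only.
interface Post { id: string; text: string }

interface AgentDeps {
  pullContext: (post: Post) => string;                         // "Pull post context"
  searchKnowledgeBase: (query: string) => string[];            // "Search Knowledge Base"
  generateReply: (context: string, facts: string[]) => string; // "Generate Reply"
  postReply: (postId: string, reply: string) => void;          // "Post Reply"
}

// Returns true when a reply was posted, false when the post was skipped.
function executeInteraction(post: Post, replied: Set<string>, deps: AgentDeps): boolean {
  // "Ignore if already replied": re-delivery of the same post becomes a no-op.
  if (replied.has(post.id)) return false;
  const context = deps.pullContext(post);
  const facts = deps.searchKnowledgeBase(context);
  const reply = deps.generateReply(context, facts);
  deps.postReply(post.id, reply);
  replied.add(post.id);
  return true;
}

// Example run: the same post is delivered twice, but only one reply is sent.
const sent: string[] = [];
const replied = new Set<string>();
const deps: AgentDeps = {
  pullContext: (post) => post.text,
  searchKnowledgeBase: (query) => [`fact about: ${query}`],
  generateReply: (context, facts) => `Re "${context}": ${facts.join("; ")}`,
  postReply: (postId, reply) => { sent.push(`${postId} -> ${reply}`); },
};
const tweet: Post = { id: "123", text: "What does this bill cost?" };
const first = executeInteraction(tweet, replied, deps);  // posts a reply
const second = executeInteraction(tweet, replied, deps); // skipped as a duplicate
```

Putting the idempotency check first matters because both the X lists feed and direct tags feed the same decision queue, so the same post can plausibly arrive more than once.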

The website is the home for dogeai.info. It is a Next.js application.

The terminal is a Next.js application (dogeai.chat) for chatting with DOGEai for in-depth analysis. It is a site where anyone can go beyond quick replies and have real, back-and-forth conversations with DOGEai. Ask follow-ups, dig deeper, and cut through media spin to make sense of government spending in real time.

An Envoy proxy that serves as a reverse proxy for the agent API, the terminal, and the management dashboard. It handles routing and authentication, and provides a single entry point to DOGEai's services.
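To make the single-entry-point idea concrete, here is a small TypeScript model of prefix-based routing with an authentication gate. It is purely illustrative: the real proxy is configured in Envoy, and the route prefixes, upstream names, and which routes require authentication are all assumptions made up for this sketch.

```typescript
// Hypothetical route table; the real Envoy configuration is not shown in this README.
interface Route { prefix: string; upstream: string; requiresAuth: boolean }

const routes: Route[] = [
  { prefix: "/api/", upstream: "agent-api", requiresAuth: true },
  { prefix: "/dashboard/", upstream: "dashboard", requiresAuth: true },
  { prefix: "/", upstream: "terminal", requiresAuth: false },
];

type Decision = { status: 200; upstream: string } | { status: 401 } | { status: 404 };

// Decide where a request goes, or why it is rejected.
function route(path: string, authenticated: boolean, table: Route[] = routes): Decision {
  // First matching prefix wins, so more specific prefixes are listed first.
  const match = table.find((r) => path.startsWith(r.prefix));
  if (!match) return { status: 404 };
  if (match.requiresAuth && !authenticated) return { status: 401 };
  return { status: 200, upstream: match.upstream };
}

// Example decisions for a few requests.
const anonymousApi = route("/api/replies", false);
const signedInApi = route("/api/replies", true);
const publicChat = route("/chat", false);
```

Centralizing these decisions in one proxy means the individual services do not each need their own routing and authentication logic.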

The management dashboard for DOGEai lets you manage the agent: update the prompts, update the knowledge base, and restart it. It also acts as a playground for iterating. It is built with Next.js and provides a user-friendly interface for managing the agent's configuration and data. It is meant for internal use only, not for public consumption.

The log shipper is a Fly.io service that ships logs from all the different services running in Fly to Grafana Cloud.

This project is licensed under the MIT License - see the LICENSE file.
