spellbook-docker

AI stack for interacting with LLMs, Stable Diffusion, Whisper, xTTS and many other AI models

Stars: 104

The Spellbook Docker Compose repository contains the Docker Compose files for running the Spellbook AI Assistant stack. It requires ExLlama and a Nvidia Ampere or better GPU for real-time results. The repository provides instructions for installing Docker, building and starting containers with or without GPU, additional workers, Nvidia driver installation, port forwarding, and fresh installation steps. Users can follow the detailed guidelines to set up the Spellbook framework on Ubuntu 22, enabling them to run the UI, middleware, and additional workers for resource access.

README:

The repository contains the Docker Compose files for running the Spellbook AI Assistant stack. The function calling features require ExLlama and a Nvidia Ampere or better GPU for real-time results.

These instructions should work to get the SpellBook framework up and running on Ubuntu 22. A Nvidia video card supported by ExLlama is required for routing.

- Default username: admin

- Default password: admin

# add Dockers official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

# add the repository to apt sources:

echo \

"deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \

"$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \

sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

# install docker, create user and let current user access docker

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

sudo groupadd docker

sudo usermod -aG docker $USER

sudo newgrp docker

sudo shutdown -r nowThe docker-compose-nogpu.yml is useful for running the UI and middleware in a situation where you want another backend handling you GPUs and LLMs. For example if you are also using Text Generation UI and do not want to mess with it settings this compose file can be used to just run the UI, allowing you then to then connect it to the endpoint provided by Oobabooga or any other OpenAI compatible backend.

docker compose -f docker-compose-nogpu.yml build

docker compose -f docker-compose-nogpu.yml upIf you have more than one server you can run additional Elemental Golem workers to give the UI access to more resources. A few steps need to be taken on the primary Spellbook server that is running the UI, middleware and other resources like Vault.

- Run these command on the primary server that is running the UI and middleware software in Docker.

- Copy the read token to a temp file to copy it to the worker server.

- Make note of the LAN IP address of the primary server. It is needed for the GOLEM_VAULT_HOST and GOLEM_VAULT_HOST variables.

- Make sure ports for RabbitMQ and Vault are open.

sudo more /var/lib/docker/volumes/spellbook-docker_vault_share/_data/read-token

ip address

sudo ufw allow 5671

sudo ufw allow 5672

sudo ufw allow 8200- Run these command on the server running the worker. The GOLEM_ID needs to be unique for every server and golem1 is used by the primary.

- The first time you run the container it will timeout, git CTL + C or wait then copy the Vault token.

docker compose -f docker-compose-worker-nogpu.yml build

GOLEM_VAULT_HOST=10.10.10.X GOLEM_AMQP_HOST=10.10.10.X GOLEM_ID=golem2 docker compose -f docker-compose-worker-nogpu.yml up

sudo su

echo "TOKEN FROM PRIMARY SERVER" > /var/lib/docker/volumes/spellbook-docker_vault_share/_data/read-token

exit

GOLEM_VAULT_HOST=10.10.10.X GOLEM_AMQP_HOST=10.10.10.X GOLEM_ID=golem2 docker compose -f docker-compose-worker-nogpu.yml up# make sure system see's the Nvidia graphic(s) card

lspci | grep -e VGA

# check available drivers

ubuntu-drivers devices

# install the latest driver

sudo apt install nvidia-driver-535

# restart the server

sudo shutdown -h now

# confirm driver was installed

nvidia-smi

# install the Nvidia docker toolkit

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list \

&& \

sudo apt-get update

sudo apt-get install -y nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

# verify see the output of nvidia-smi for inside a container

sudo docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smidocker compose build

docker compose upFollow the directions under the Build and Start Additional Workers (No GPU) section substituting the build and up lines for the ones found below.

docker compose -f docker-compose-worker.yml build

GOLEM_VAULT_HOST=10.10.10.X GOLEM_AMQP_HOST=10.10.10.X GOLEM_ID=golem2 docker compose -f docker-compose-worker.yml upThis repository assumes you are running the docker containers on your local system if this is not the case make sure ports 3000 and 4200 are forwarded to the host running the docker containers.

For a fresh install of the stack run the following commands, this will remove all downloaded models and all conversation and configuration records.

cd spellbook-docker

docker compose down

docker volume rm spellbook-docker_models_share

docker volume rm spellbook-docker_vault_share

git pull origin master

docker compose build

docker compose upFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for spellbook-docker

Similar Open Source Tools

spellbook-docker

The Spellbook Docker Compose repository contains the Docker Compose files for running the Spellbook AI Assistant stack. It requires ExLlama and a Nvidia Ampere or better GPU for real-time results. The repository provides instructions for installing Docker, building and starting containers with or without GPU, additional workers, Nvidia driver installation, port forwarding, and fresh installation steps. Users can follow the detailed guidelines to set up the Spellbook framework on Ubuntu 22, enabling them to run the UI, middleware, and additional workers for resource access.

vasttools

This repository contains a collection of tools that can be used with vastai. The tools are free to use, modify and distribute. If you find this useful and wish to donate your welcome to send your donations to the following wallets. BTC 15qkQSYXP2BvpqJkbj2qsNFb6nd7FyVcou XMR 897VkA8sG6gh7yvrKrtvWningikPteojfSgGff3JAUs3cu7jxPDjhiAZRdcQSYPE2VGFVHAdirHqRZEpZsWyPiNK6XPQKAg RVN RSgWs9Co8nQeyPqQAAqHkHhc5ykXyoMDUp USDT(ETH ERC20) 0xa5955cf9fe7af53bcaa1d2404e2b17a1f28aac4f Paypal PayPal.Me/cryptolabsZA

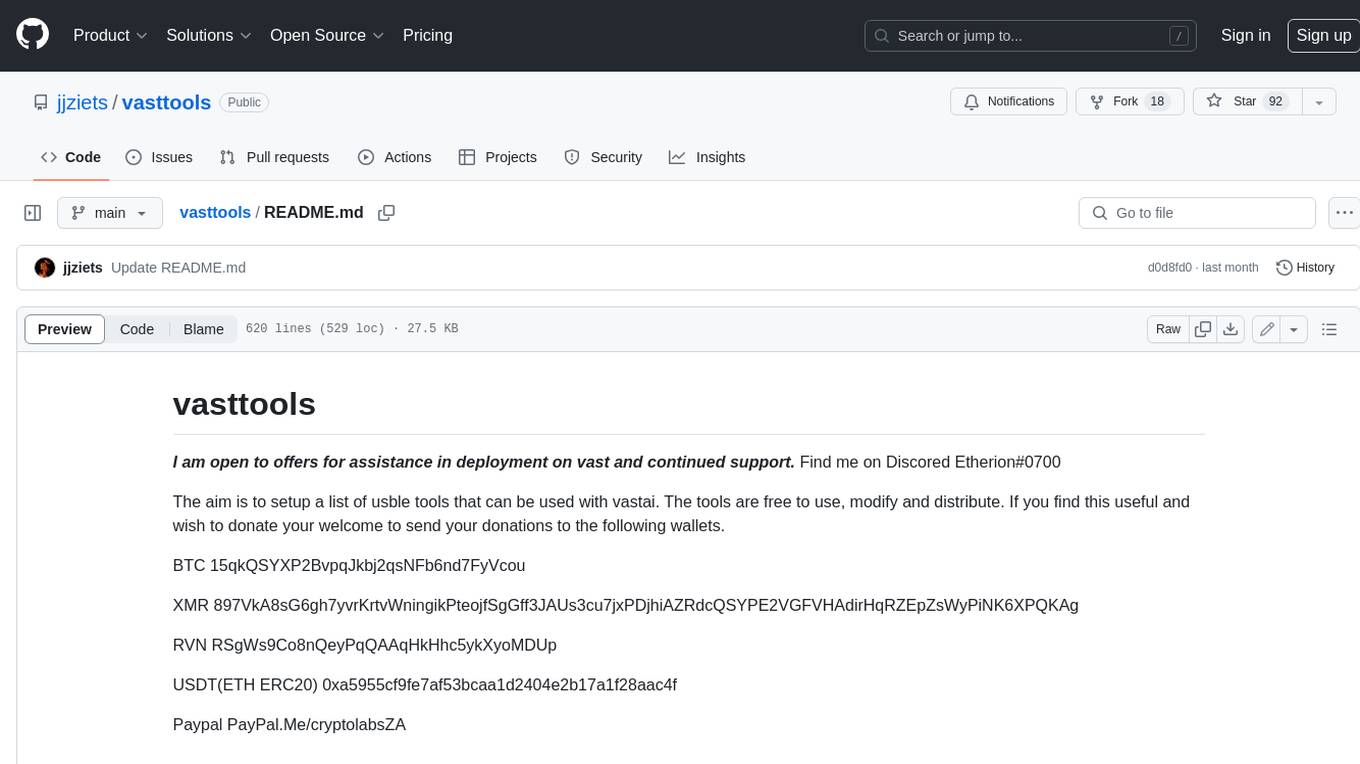

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

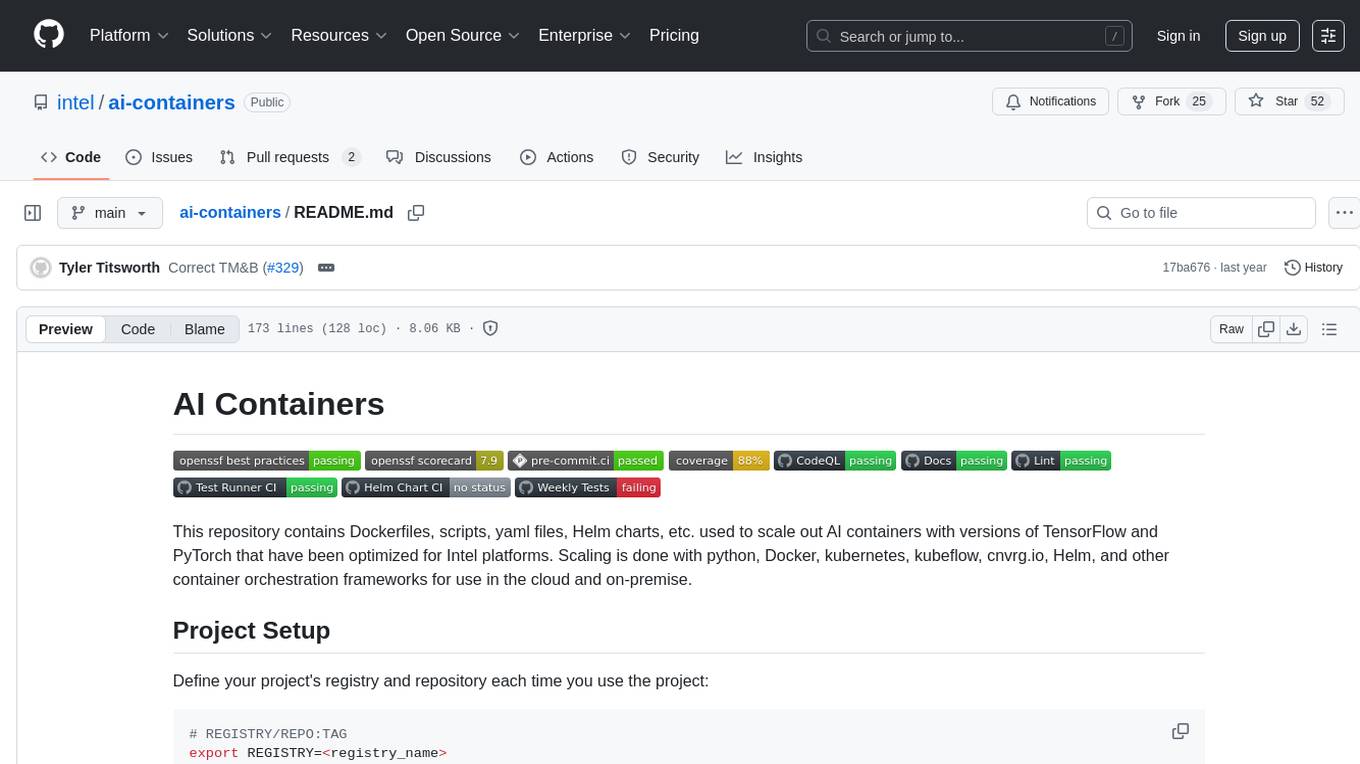

ai-containers

This repository contains Dockerfiles, scripts, yaml files, Helm charts, etc. used to scale out AI containers with versions of TensorFlow and PyTorch optimized for Intel platforms. Scaling is done with python, Docker, kubernetes, kubeflow, cnvrg.io, Helm, and other container orchestration frameworks for use in the cloud and on-premise.

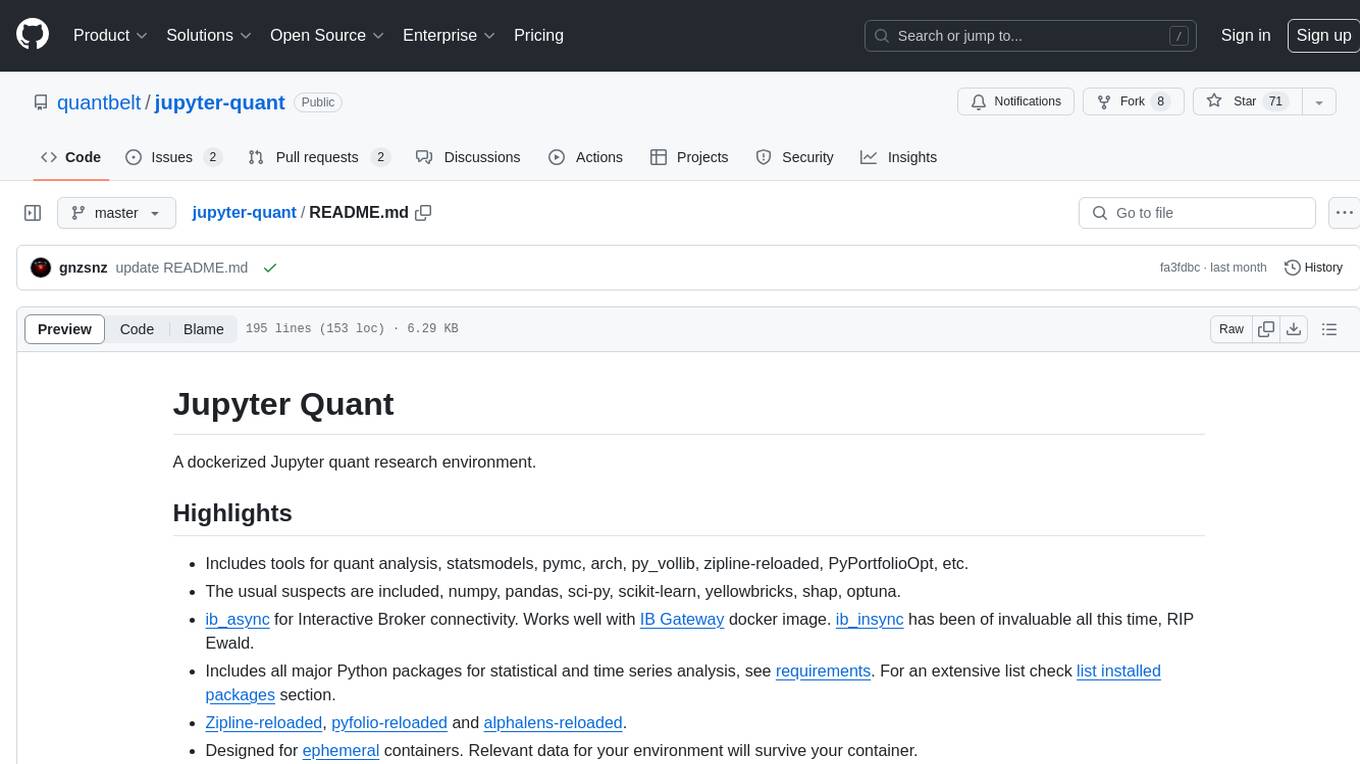

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, and more. It provides Interactive Broker connectivity via ib_async and includes major Python packages for statistical and time series analysis. The image is optimized for size, includes jedi language server, jupyterlab-lsp, and common command line utilities. Users can install new packages with sudo, leverage apt cache, and bring their own dot files and SSH keys. The tool is designed for ephemeral containers, ensuring data persistence and flexibility for quantitative analysis tasks.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

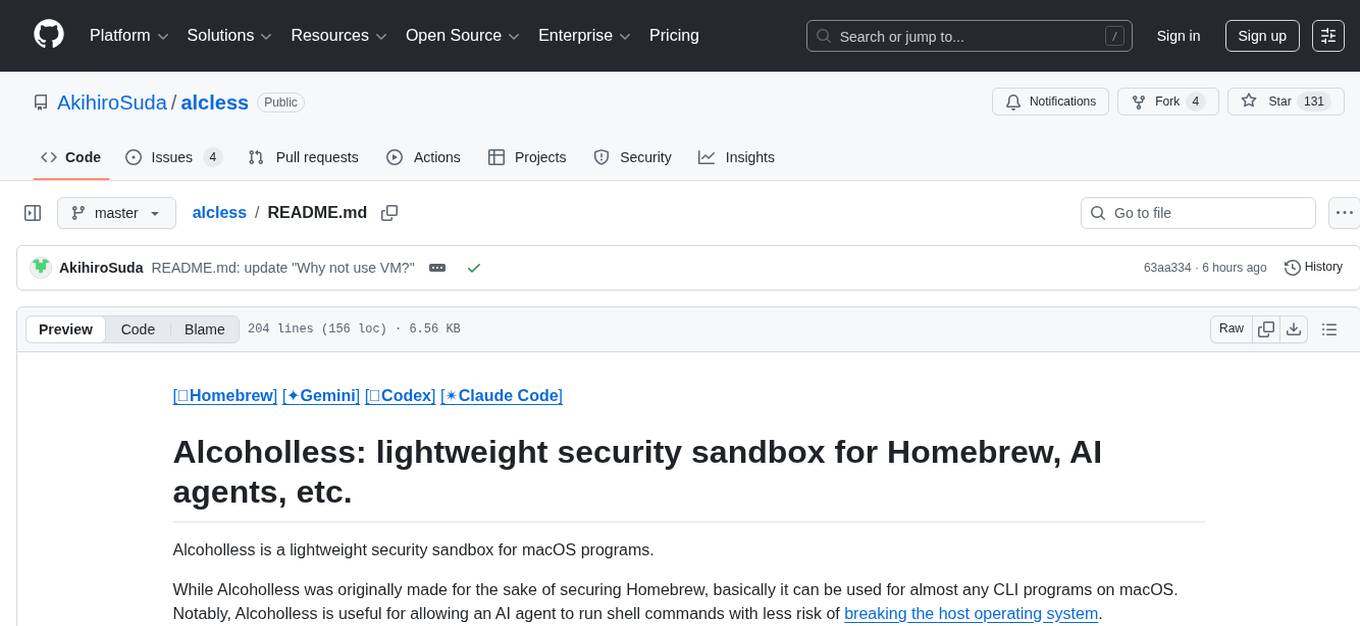

alcless

Alcoholless is a lightweight security sandbox for macOS programs, originally designed for securing Homebrew but can be used for any CLI programs. It allows AI agents to run shell commands with reduced risk of breaking the host OS. The tool creates a separate environment for executing commands, syncing changes back to the host directory upon command exit. It uses utilities like sudo, su, pam_launchd, and rsync, with potential future integration of FSKit for file syncing. The tool also generates a sudo configuration for user-specific sandbox access, enabling users to run commands as the sandbox user without a password.

pyrfuniverse

pyrfuniverse is a python package used to interact with RFUniverse simulation environment. It is developed with reference to ML-Agents and produce new features. The package allows users to work with RFUniverse for simulation purposes, providing tools and functionalities to interact with the environment and create new features.

FreedomGPT

Freedom GPT is a desktop application that allows users to run alpaca models on their local machine. It is built using Electron and React. The application is open source and available on GitHub. Users can contribute to the project by following the instructions in the repository. The application can be run using the following command: yarn start. The application can also be dockerized using the following command: docker run -d -p 8889:8889 freedomgpt/freedomgpt. The application utilizes several open-source packages and libraries, including llama.cpp, LLAMA, and Chatbot UI. The developers of these packages and their contributors deserve gratitude for making their work available to the public under open source licenses.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

Oxen

Oxen is a data version control library, written in Rust. It's designed to be fast, reliable, and easy to use. Oxen can be used in a variety of ways, from a simple command line tool to a remote server to sync to, to integrations into other ecosystems such as python.

AMD-AI

AMD-AI is a repository containing detailed instructions for installing, setting up, and configuring ROCm on Ubuntu systems with AMD GPUs. The repository includes information on installing various tools like Stable Diffusion, ComfyUI, and Oobabooga for tasks like text generation and performance tuning. It provides guidance on adding AMD GPU package sources, installing ROCm-related packages, updating system packages, and finding graphics devices. The instructions are aimed at users with AMD hardware looking to set up their Linux systems for AI-related tasks.

sandvault

SandVault is a tool that manages a limited user account to sandbox shell commands and AI agents on macOS, providing a lightweight alternative to application isolation using virtual machines. It allows for running Claude Code, OpenAI Codex, Google Gemini, and shell commands safely within a sandboxed environment. SandVault offers features like fast context switching, passwordless account switching, shared workspace access, and clean uninstallation. The tool operates with limited access to the user's computer, ensuring security by restricting access to certain directories and system files.

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, ib_insync, Cython, Numba, bottleneck, numexpr, jedi language server, jupyterlab-lsp, black, isort, and more. It does not include conda/mamba and relies on pip for package installation. The image is optimized for size, includes common command line utilities, supports apt cache, and allows for the installation of additional packages. It is designed for ephemeral containers, ensuring data persistence, and offers volumes for data, configuration, and notebooks. Common tasks include setting up the server, managing configurations, setting passwords, listing installed packages, passing parameters to jupyter-lab, running commands in the container, building wheels outside the container, installing dotfiles and SSH keys, and creating SSH tunnels.

XcodeLLMEligible

XcodeLLMEligible is a project that provides ways to enjoy Xcode LLM on ChinaSKU Mac without disabling SIP. It offers methods for script execution and manual execution, allowing users to override eligibility service features. The project is for learning and research purposes only, and users are responsible for compliance with applicable laws. The author disclaims any responsibility for consequences arising from the use of the project.

please-cli

Please CLI is an AI helper script designed to create CLI commands by leveraging the GPT model. Users can input a command description, and the script will generate a Linux command based on that input. The tool offers various functionalities such as invoking commands, copying commands to the clipboard, asking questions about commands, and more. It supports parameters for explanation, using different AI models, displaying additional output, storing API keys, querying ChatGPT with specific models, showing the current version, and providing help messages. Users can install Please CLI via Homebrew, apt, Nix, dpkg, AUR, or manually from source. The tool requires an OpenAI API key for operation and offers configuration options for setting API keys and OpenAI settings. Please CLI is licensed under the Apache License 2.0 by TNG Technology Consulting GmbH.

For similar tasks

spellbook-docker

The Spellbook Docker Compose repository contains the Docker Compose files for running the Spellbook AI Assistant stack. It requires ExLlama and a Nvidia Ampere or better GPU for real-time results. The repository provides instructions for installing Docker, building and starting containers with or without GPU, additional workers, Nvidia driver installation, port forwarding, and fresh installation steps. Users can follow the detailed guidelines to set up the Spellbook framework on Ubuntu 22, enabling them to run the UI, middleware, and additional workers for resource access.

ai-containers

This repository contains Dockerfiles, scripts, yaml files, Helm charts, etc. used to scale out AI containers with versions of TensorFlow and PyTorch optimized for Intel platforms. Scaling is done with python, Docker, kubernetes, kubeflow, cnvrg.io, Helm, and other container orchestration frameworks for use in the cloud and on-premise.

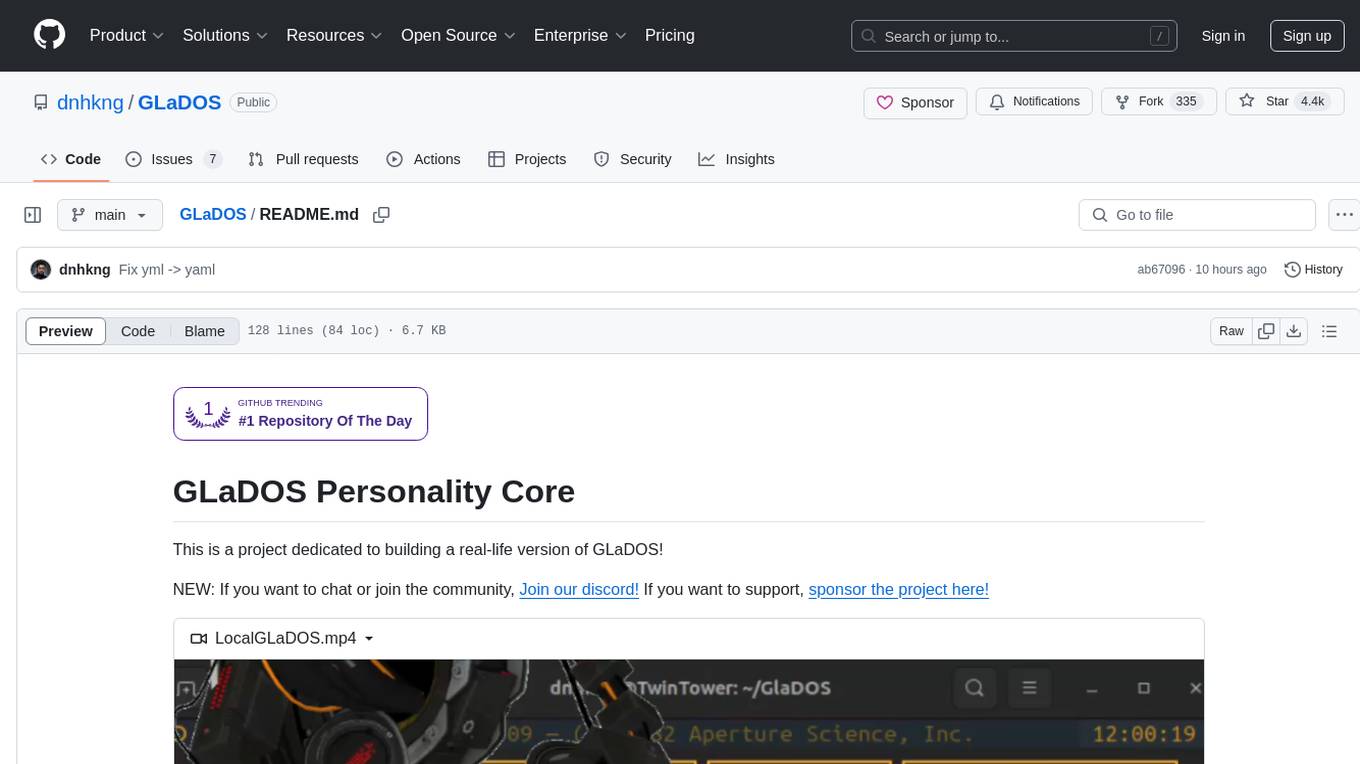

GLaDOS

GLaDOS Personality Core is a project dedicated to building a real-life version of GLaDOS, an aware, interactive, and embodied AI system. The project aims to train GLaDOS voice generator, create a 'Personality Core,' develop medium- and long-term memory, provide vision capabilities, design 3D-printable parts, and build an animatronics system. The software architecture focuses on low-latency voice interactions and minimal dependencies. The hardware system includes servo- and stepper-motors, 3D printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions involve setting up a local LLM server, installing drivers, and running GLaDOS on different operating systems.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.