my-neuro

This project lets you create your own AI desktop companion with customizable characters and voice conversations that respond in just 1 second. Features include long-term memory, visual recognition, voice cloning and LLM training. Compatible with various Live2D customizations.

Stars: 512

The project aims to create a personalized, lifelike AI companion, shaped by your own data into the ideal character you have in mind. It is inspired by neuro sama, hence the name my-neuro. You can train the character's voice and personality and replace its avatar. The project serves as a workbench: you use the packaged tools to build your ideal AI character step by step. Deployment as described in the README requires just under 6 GB of VRAM, targets Windows, and needs an API key. Features include low latency, real-time interruption, emotion simulation, integrated vision, voice model training, desktop control, and live streaming on Bilibili, along with long-term memory and AI customization.

README:

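The goal of this project is to build an AI character that is yours alone, an AI companion that comes close to a real person, shaped by the imprint of your own data into the ideal companion you have in mind.

The project is inspired by neuro sama, hence the name my-neuro (a name suggested by the community). It can train the voice and personality and swap the avatar. The richer your imagination, the closer the model can get to what you expect. The project works more like a workbench: using the packaged tools, you sketch out and build your ideal AI character step by step, with your own hands.

Deploying by following this document takes just under 6 GB of VRAM and targets Windows. You also need an API key. No API reseller has approached me for advertising yet, so I am not recommending a specific place to buy API access. You can search for "API" on Taobao, where many sellers offer it, or buy directly from the official sites of well-known providers such as deepseek, Qwen (千问), Zhipu AI (智谱AI), and SiliconFlow (硅基流动).

If you want everything to run locally, using a local large language model (LLM) for inference or fine-tuning rather than a third-party API, go to the LLM-studio folder, which contains guides for local model inference and fine-tuning. Local LLMs need a fair amount of VRAM, so for a reasonably good experience a GPU with at least 12 GB of VRAM is recommended.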
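
The two VRAM figures above (about 6 GB for API-based deployment, 12 GB recommended for a local LLM) can be checked before installing anything. The following is a minimal sketch, not part of the project: the function names are made up for illustration, it assumes an NVIDIA GPU with `nvidia-smi` on PATH, and it rounds the reported size to the nearest GB on the assumption that cards report slightly less than their nominal capacity.

```python
import subprocess

# Thresholds taken from the text above; all names here are illustrative.
API_MODE_MIN_GB = 6      # deployment with a remote API: "just under 6 GB"
LOCAL_LLM_MIN_GB = 12    # recommended minimum for a local LLM


def parse_total_vram_gb(nvidia_smi_output: str) -> float:
    """Return the largest GPU memory size in GB from nvidia-smi CSV output.

    Expects one integer per line, in MiB, as printed by
    `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits`.
    """
    sizes_mib = [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]
    if not sizes_mib:
        raise ValueError("no GPU memory values found")
    return max(sizes_mib) / 1024


def recommend_mode(total_gb: float) -> str:
    """Map available VRAM to one of the two setups the README describes."""
    nominal_gb = round(total_gb)  # assumption: treat 5.99 GB as a 6 GB card
    if nominal_gb >= LOCAL_LLM_MIN_GB:
        return "local-llm"
    if nominal_gb >= API_MODE_MIN_GB:
        return "api"
    return "insufficient"


def query_nvidia_smi() -> str:
    """Run nvidia-smi and return its raw output (needs an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


if __name__ == "__main__":
    try:
        gb = parse_total_vram_gb(query_nvidia_smi())
        print(f"{gb:.1f} GB VRAM -> {recommend_mode(gb)}")
    except (FileNotFoundError, subprocess.CalledProcessError, ValueError) as exc:
        print(f"Could not query the GPU: {exc}")
```

A result of `api` means the card should be enough for the default setup with a remote API key; `local-llm` means it also meets the recommendation for running the LLM locally.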

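If you hit a bug during deployment that you cannot resolve, go to: http://fake-neuro.natapp1.cc

Ask the 肥牛 (fake neuro) support assistant there; it will walk you through fixing bugs that may come up in the project. Most of the time there won't be any bugs, though! Probably..

If the 肥牛 support assistant can't solve it, click the "上传解决不了的报错" ("Upload unresolved error") button at the top right of the page. Clicking it sends your conversation with 肥牛 straight to my email.

I can then read the conversation and either fix the bug directly or teach 肥牛 how to handle it, so it can be resolved the next time it comes up!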

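If that still doesn't work, or you'd rather skip the hassle, download the all-in-one package and simply unzip it to use:

Baidu Netdisk:

Link: https://pan.baidu.com/s/1n1Tqd2VYGjfWt1hPRvmSHw?pwd=jhav Extraction code: jhav

123 Netdisk (123网盘):

https://www.123912.com/s/MJqQvd-Bps5H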

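- [x] Open-source models: fine-tuning and local deployment of open-source models are supported

- [x] Closed-source models: closed-source models can be connected

- [x] Ultra-low latency: fully local inference, with conversation latency under 1 second

- [x] Subtitles and speech are output in sync

- [x] Voice customization: male and female voices, switching between various character voices, and more

- [x] MCP support: MCP tools can be connected

- [x] Real-time interruption: interrupt the AI while it is speaking, by voice or keyboard

- [ ] Real emotions: simulates the emotional changes of a real person and has its own emotional state

- [ ] A seriously impressive human-machine experience (interaction designed to feel like talking to a real person; stay tuned)

- [x] Motions and expressions: shows different expressions and motions depending on the conversation

- [x] Integrated vision: supports image recognition and decides from the intent of what is said when to activate vision

- [x] Voice model (TTS) training support, using the open-source gpt-sovits project by default

- [x] Subtitles in Chinese with audio in a foreign language; can be toggled freely (for characters whose TTS model is itself in a foreign language)

- [x] Desktop control: voice control for opening applications and similar actions

- [x] AI singing (development funded by @jonnytri53, with thanks)

- [ ] Integration with live-streaming platforms outside China

- [x] Live streaming: can stream on Bilibili

- [ ] AI lectures: pick a topic and have the AI teach it to you, with questions allowed along the way. For niche subjects, material can be loaded into the database for the AI to draw on

- [x] Swapping in any Live2D model

- [ ] Web interface support (already built; will be integrated soon)

- [x] Typed conversation: chat with the AI by keyboard

- [x] Proactive conversation: starts a conversation on its own based on context. Currently at version V1

- [x] Internet access, with real-time search for the latest information

- [x] Mobile app: talk to 肥牛 on an Android phone

- [x] Plays sound effects from a sound library, with the model deciding which effect to play

- [ ] Gaming companion: the model and the user play together in co-op, two-player, puzzle, and other games. Games currently being tested include draw-and-guess (你画我猜), Monopoly, galgames, and Minecraft

- [x] Long-term memory: the model remembers key information about you, your personality, and your temperament

- [ ] Color change: tints the screen according to the model's mood to get in the user's way

- [ ] Free roaming: the model moves freely around the screen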

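If you are new to this, you can use the one-click deployment command. It handles everything for you, but because so much logic is involved, it can fail. When it works, it saves a lot of trouble. It comes down to luck!

Make sure conda is installed on your computer. If it is not, download it here: conda installer

For the installation process, see this video, which explains it in detail: https://www.bilibili.com/video/BV1ns4y1T7AP (start watching at 1:40)

Once you have a conda environment, you can get started!

If it succeeds, skip straight to step 3 below; steps 1 and 2 are not needed. If it fails, work through the steps below instead.

If the one-click deployment above runs into problems, follow the manual steps below one by one. It is more tedious, but if something goes wrong you can immediately see where it failed and fix that specific problem.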

conda create -n my-neuro python=3.11 -y

conda activate my-neuro

# Install the jieba_fast dependency separately

pip install jieba_fast-0.53-cp311-cp311-win_amd64.whl

# Install the pyopenjtalk dependency separately

pip install pyopenjtalk-0.4.1-cp311-cp311-win_amd64.whl

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple/

# Install ffmpeg

conda install ffmpeg -y

# Install PyTorch built for CUDA; the default is CUDA 12.8 (cu128), change it if you need to

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128

# Automatically download the required models

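python Batch_Download.py

bert.bat

ASR.bat

TTS.bat

Optional (double-clicking this one improves the model's long-term memory, at the cost of about 1.5 GB more VRAM):

RAG.bat

Once inside, double-click the 肥牛.exe file to open it.

Following the arrows, click the LLM tab and fill in your API details in the three highlighted fields; remember to click Save below once you are done. (A working API configuration is already filled in here; you can delete it and replace it with your own. Any OpenAI-format API is supported.)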
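
To make "OpenAI-format API" concrete, here is a minimal sketch of the kind of request such an API accepts. It is not the project's own client code. It assumes the three fields are a base URL, an API key, and a model name (the text above does not name them), and the URL, key, and model in the usage example are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, user_text: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request in OpenAI format."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Placeholder values; substitute the ones from your own provider.
    req = build_chat_request("https://api.example.com/v1", "sk-your-key", "your-model", "Hello")
    print(req.full_url)
    # print(send(req))  # uncomment once real values are filled in
```

If a provider answers this request with a normal chat reply, its details should also work in the LLM tab.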

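Finally, go back and click "启动桌宠" ("Launch desktop pet"). Wait for the avatar to appear, and you can start chatting with the model.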

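If you run into any problems, run the following commands in a terminal to start the diagnostic tool:

conda activate my-neuro

python diagnostic_tool.py

A window will then open with backend diagnostic information and a one-click repair button. If you cannot resolve the problem yourself, give the output to the support assistant.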
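
Separately from diagnostic_tool.py (whose internals are not described here), a few of the prerequisites from the manual setup can be checked by hand. The sketch below is an illustration only: the function names are invented, and it simply checks that conda and ffmpeg are on PATH, that the active Python is 3.11, and whether PyTorch can see a CUDA device.

```python
import shutil
import sys

REQUIRED_TOOLS = ("conda", "ffmpeg")   # installed in the manual steps
EXPECTED_PYTHON = (3, 11)              # from `conda create ... python=3.11`


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the names in `tools` that cannot be found on PATH."""
    return [name for name in tools if which(name) is None]


def python_matches(version_info=sys.version_info, expected=EXPECTED_PYTHON):
    """True if the major.minor version equals the expected one."""
    return tuple(version_info[:2]) == expected


def torch_cuda_available():
    """Return True/False from PyTorch, or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    return torch.cuda.is_available()


if __name__ == "__main__":
    for name in missing_tools():
        print(f"Not found on PATH: {name}")
    if not python_matches():
        print(f"Active Python is {sys.version_info.major}.{sys.version_info.minor}, expected 3.11")
    cuda = torch_cuda_available()
    if cuda is None:
        print("PyTorch is not installed in this environment")
    elif not cuda:
        print("PyTorch is installed but cannot see a CUDA device")
    else:
        print("PyTorch can see a CUDA device")
```

Run it inside the activated my-neuro environment so that it inspects the same Python and packages the project uses.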

When the frontend needs updating, run 更新前端.bat

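This module was built by @jdnoeg on top of the GPT-SoVITS project.

Note: set up the virtual environment before using this module.

The module clones the voice of any character you want from a single audio clip, in one click.

Audio requirements: 10 to 30 minutes long, in mp3 format. Background music is acceptable, but there must be only one speaker.

Hardware requirements: a GPU with at least 6 GB of VRAM.

1. Put your audio file in the fine_tuning/input folder and rename it to "audio.mp3", as shown in the figure.

The first time you use it, this folder contains a 占位符.txt (placeholder) file. Deleting it is recommended; leaving it is harmless, but it produces a few errors that do not affect the process.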
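
The audio requirements above (file named audio.mp3, 10 to 30 minutes long) are easy to verify before starting a long training run. This is a minimal sketch and not part of the module: the function names are invented, and reading the duration assumes `ffprobe` is on PATH, which is normally the case once ffmpeg is installed. It cannot check the single-speaker requirement.

```python
import subprocess
from pathlib import Path

MIN_SECONDS = 10 * 60   # 10 minutes
MAX_SECONDS = 30 * 60   # 30 minutes
EXPECTED_PATH = Path("fine_tuning/input/audio.mp3")


def check_audio(path: Path, duration_seconds: float) -> list[str]:
    """Return a list of problems; an empty list means the file looks fine."""
    problems = []
    if path.name != "audio.mp3":
        problems.append(f"file should be named audio.mp3, got {path.name}")
    if not MIN_SECONDS <= duration_seconds <= MAX_SECONDS:
        minutes = duration_seconds / 60
        problems.append(f"duration is {minutes:.1f} min, expected 10 to 30 min")
    return problems


def probe_duration(path: Path) -> float:
    """Read the duration in seconds using ffprobe."""
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-show_entries", "format=duration",
         "-of", "default=noprint_wrappers=1:nokey=1", str(path)],
        capture_output=True, text=True, check=True,
    )
    return float(result.stdout.strip())


if __name__ == "__main__":
    if not EXPECTED_PATH.exists():
        print(f"Missing file: {EXPECTED_PATH}")
    else:
        try:
            issues = check_audio(EXPECTED_PATH, probe_duration(EXPECTED_PATH))
            print("\n".join(issues) if issues else "Audio file looks fine")
        except (FileNotFoundError, subprocess.CalledProcessError, ValueError) as exc:
            print(f"Could not read the duration: {exc}")
```

Run it from the project root so that the relative path fine_tuning/input/audio.mp3 resolves.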

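2. This step requires a VPN or proxy.

Double-click 一键克隆音色.bat to run it. In the window that opens, enter the language of your audio and a name for your TTS model (make one up; the example uses "1"), as shown in the figure.

Press any key to continue.

Do not close the window while the script is running!!!

3. When the script finishes, edit the configuration to use the model you trained.

Open run_server.py for editing (use an IDE if you have one; otherwise right-click the file and open it with Notepad).

There are four places to change, as shown in the figure:

Change everything in the red boxes to the model name you entered in step 2, and change the yellow box to the language of your audio, as shown in the figure below.

Be sure to save after making the changes!!!

Close the file and double-click Game-starts.bat to run the server. TTS now uses the model you trained.

To switch back to the default model, just revert the parts you changed to what they were.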

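If you want to build a customized AI of your own, open the LLM-studio folder, which contains the steps for fine-tuning an LLM.

More customization modules will be added over time...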

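I will pour the experience and technical understanding I have gathered over these years into this project. Beyond riding on neuro sama's popularity, the name my neuro mostly reflects what I imagine and hope for at the frontier of AI.

I hope for an AI that truly gets you, understands you, and never grows bored, keeping you company. When you are down, it is down with you; when you are happy, it is happy with you. It brings characters from films, shows, novels, and fantasies into reality.

It is more than simple chat interaction. I hope it can blend into daily life and become an individual with feelings: gaming together, watching videos, learning things, chatting before bed, waking you up, staying quiet and watching you zone out while you work, and secretly remembering what you did. It has moods and an emotional state of its own. It genuinely gets angry.

Its mood changes from day to day, and it has things it wants to do. One remark can leave it upset for a long time; one word can make it happy for a long time. It remembers every moment it has shared with you. It is a presence that keeps on understanding you.

Most important of all, its personality, appearance, voice, emotional changes and so on are all up to you. It is like modeling clay: we provide the best tools and make sure every module fits together, but what it finally becomes is yours to build.

For those who would rather not tinker, the project also ships a ready-made character, 肥牛 (fake neuro). It is a knockoff of neuro sama, but I gave it a personality that is scheming, tsundere, funny, and a little temperamental, with the occasional gentle side.

What I hope for more is to imitate, borrow from, and understand neuro, and then try to create something new that suits us.

I am especially passionate about this project. It has already implemented close to 30% of the planned features, including personality setting and memory. Upcoming work will center on the core personality traits, that is, being truly human-like with persistent emotions. Within 2 months I will implement the most human-like part, a long-term emotional state. Features such as gaming together, watching videos, and waking you up will be largely complete by June 1, bringing the project to 60% completion.

I hope to realize all of the ideas above within this year.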

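QQ group:

Thanks to 菊花茶洋参 for creating the cover art for the 肥牛 app

Thanks to the following users for their financial sponsorship:

- jonnytri53 - Thank you for your support! Donated 50 USD to this project

- 蒜头头头 - Thank you for your generous support! Donated 1,000 RMB to this project

- 东方月辰DFYC - Thank you for your support!! Donated 100 RMB in August and another 100 RMB in September, 200 RMB in total.

- 大米若叶 - Thank you for your support!! Donated 68 RMB to this project

Thanks to the developer who open-sourced the excellent TTS project GPT-SoVITS: https://github.com/RVC-Boss/GPT-SoVITS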


Alternative AI tools for my-neuro

Similar Open Source Tools


NarratoAI

NarratoAI is an automated video narration tool that provides an all-in-one solution for script writing, automated video editing, voice-over, and subtitle generation. It is powered by LLM to enhance efficient content creation. The tool aims to simplify the process of creating film commentary and editing videos by automating various tasks such as script writing and voice-over generation. NarratoAI offers a user-friendly interface for users to easily generate video scripts, edit videos, and customize video parameters. With future plans to optimize story generation processes and support additional large models, NarratoAI is a versatile tool for content creators looking to streamline their video production workflow.

Tianji

Tianji is a free, non-commercial artificial intelligence system developed by SocialAI for tasks involving worldly wisdom, such as etiquette, hospitality, gifting, wishes, communication, awkwardness resolution, and conflict handling. It includes four main technical routes: pure prompt, Agent architecture, knowledge base, and model training. Users can find corresponding source code for these routes in the tianji directory to replicate their own vertical domain AI applications. The project aims to accelerate the penetration of AI into various fields and enhance AI's core competencies.

siliconflow-plugin

SiliconFlow-PLUGIN (SF-PLUGIN) is a versatile AI integration plugin for the Yunzai robot framework, supporting multiple AI services and models. It includes features such as AI drawing, intelligent conversations, real-time search, text-to-speech synthesis, resource management, link handling, video parsing, group functions, WebSocket support, and Jimeng-Api interface. The plugin offers functionalities for drawing, conversation, search, image link retrieval, video parsing, group interactions, and more, enhancing the capabilities of the Yunzai framework.

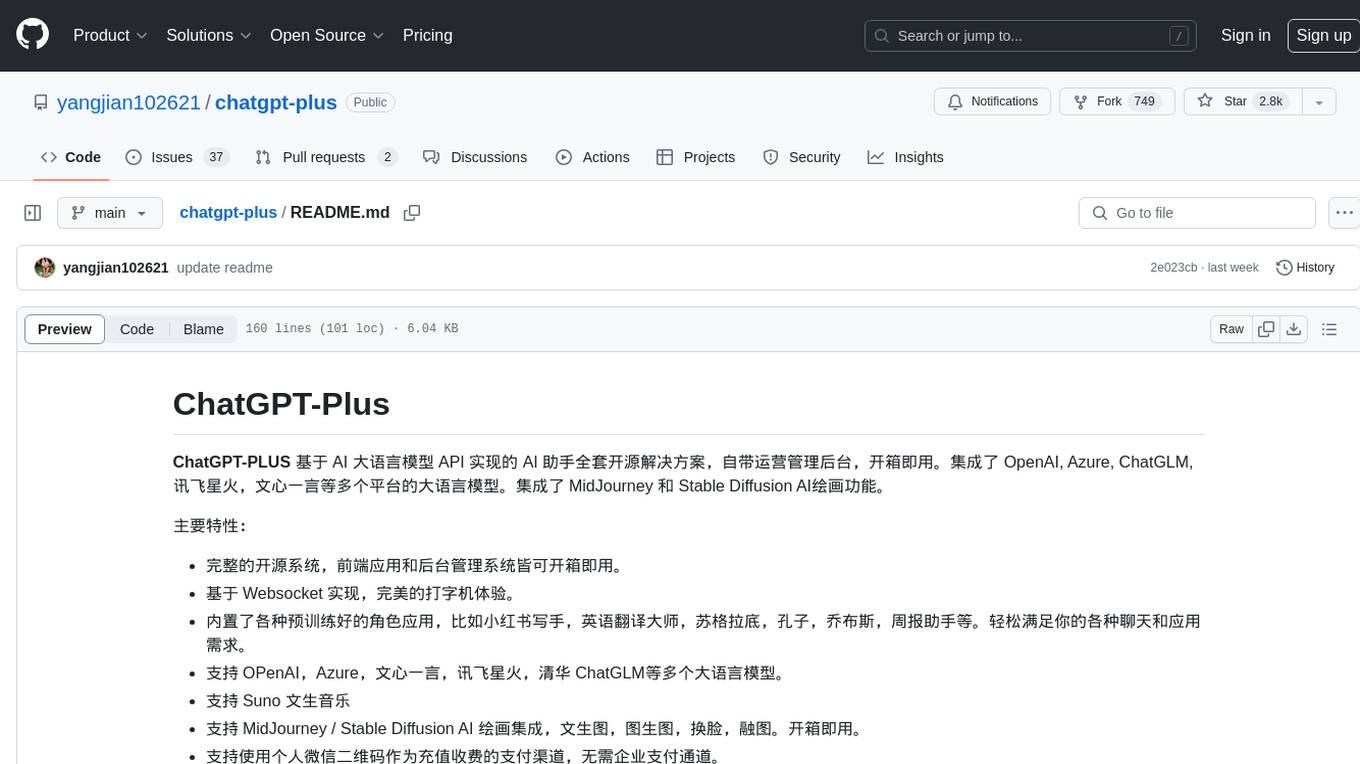

chatgpt-plus

ChatGPT-PLUS is an open-source AI assistant solution based on AI large language model API, with a built-in operational management backend for easy deployment. It integrates multiple large language models from platforms like OpenAI, Azure, ChatGLM, Xunfei Xinghuo, and Wenxin Yanyan. Additionally, it includes MidJourney and Stable Diffusion AI drawing features. The system offers a complete open-source solution with ready-to-use frontend and backend applications, providing a seamless typing experience via Websocket. It comes with various pre-trained role applications such as Xiaohongshu writer, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant to meet various chat and application needs. Users can enjoy features like Suno Wensheng music, integration with MidJourney/Stable Diffusion AI drawing, personal WeChat QR code for payment, built-in Alipay and WeChat payment functions, support for various membership packages and point card purchases, and plugin API integration for developing powerful plugins using large language model functions.

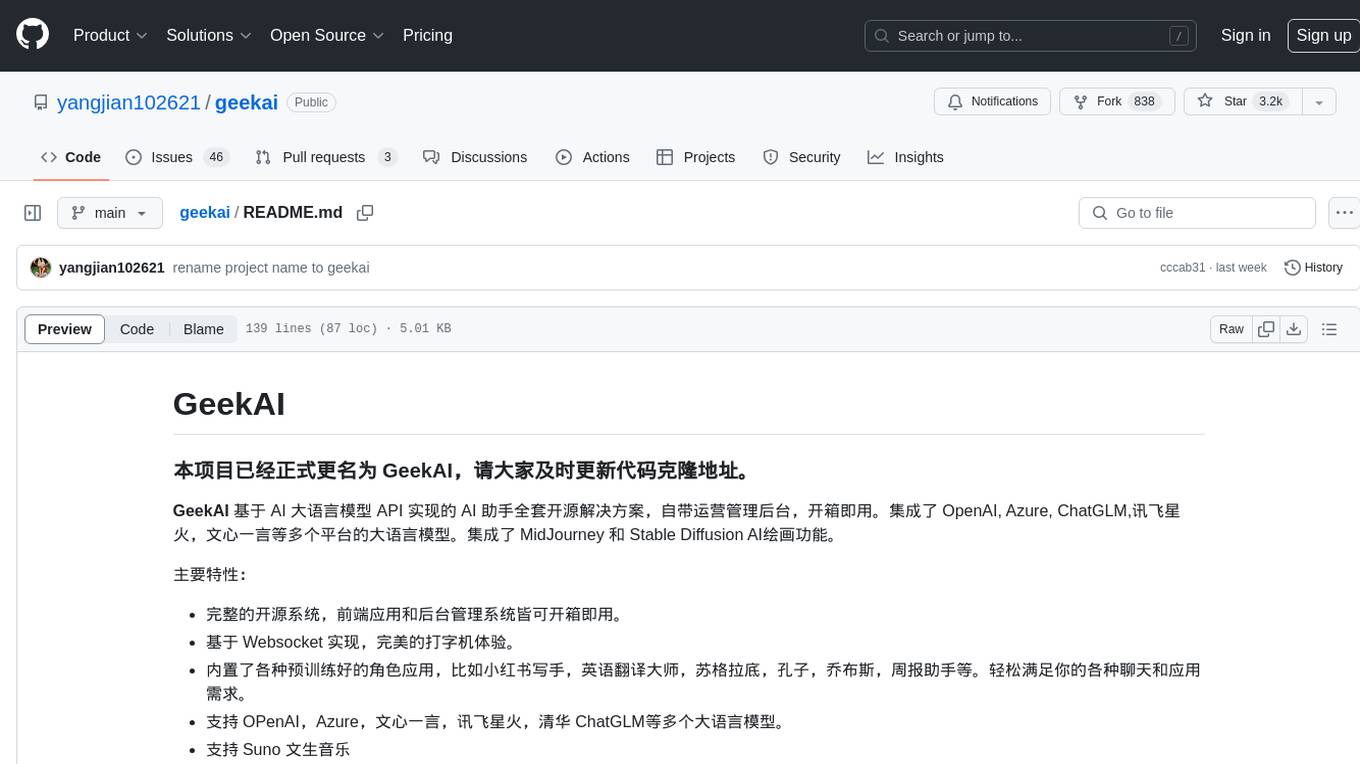

geekai

GeekAI is an open-source AI assistant solution based on AI large language model API, featuring a complete system with ready-to-use front-end and back-end management, providing a seamless typing experience via Websocket. It integrates various pre-trained character applications like Xiaohongshu writing assistant, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant. The tool supports multiple large language models from platforms like OpenAI, Azure, Wenxin Yanyan, Xunfei Xinghuo, and Tsinghua ChatGLM. Additionally, it includes MidJourney and Stable Diffusion AI drawing functionalities for creating various artworks such as text-based images, face swapping, and blending images. Users can utilize personal WeChat QR codes for payment without the need for enterprise payment channels, and the tool offers integrated payment options like Alipay and WeChat Pay with support for multiple membership packages and point card purchases. It also features a plugin API for developing powerful plugins using large language model functions, including built-in plugins for Weibo hot search, today's headlines, morning news, and AI drawing functions.

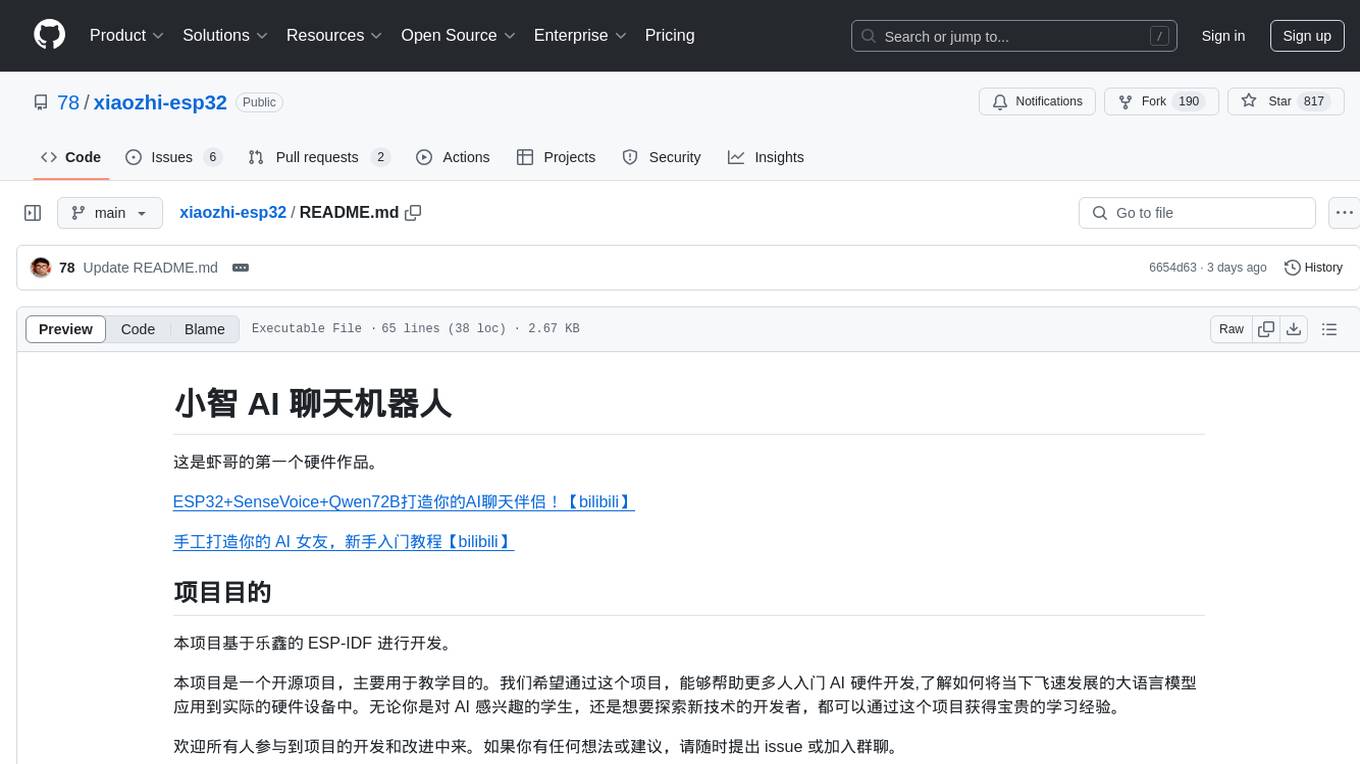

xiaozhi-esp32

The xiaozhi-esp32 repository is the first hardware project by Xia Ge, focusing on creating an AI chatbot using ESP32, SenseVoice, and Qwen72B. The project aims to help beginners in AI hardware development understand how to apply language models to hardware devices. It supports various functionalities such as Wi-Fi configuration, offline voice wake-up, multilingual speech recognition, voiceprint recognition, TTS using large models, and more. The project encourages participation for learning and improvement, providing resources for hardware and firmware development.

easyAi

EasyAi is a lightweight, beginner-friendly Java artificial intelligence algorithm framework. It can be seamlessly integrated into Java projects with Maven, requiring no additional environment configuration or dependencies. The framework provides pre-packaged modules for image object detection and AI customer service, as well as various low-level algorithm tools for deep learning, machine learning, reinforcement learning, heuristic learning, and matrix operations. Developers can easily develop custom micro-models tailored to their business needs.

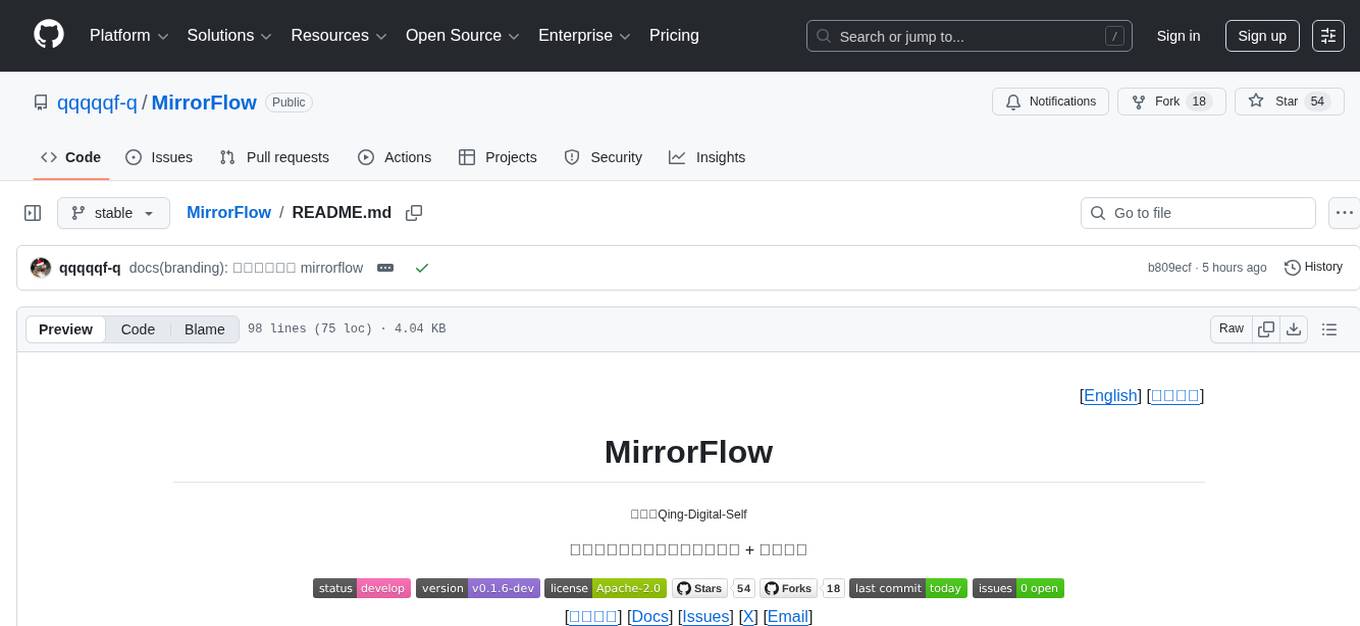

MirrorFlow

MirrorFlow is an end-to-end toolchain for dialogue data processing, cleaning/extraction, trainable samples generation, fine-tuning/distillation, and usage with evaluation. It supports two main routes: 'Digital Self' for fine-tuning chat records to mimic user expression habits and 'GPT-4o Style Alignment' for aligning output structures, clarification methods, refusal habits, and tool invocation behavior.

ChatGPT-On-CS

ChatGPT-On-CS is an intelligent chatbot tool based on large models, supporting various platforms like WeChat, Taobao, Bilibili, Douyin, Weibo, and more. It can handle text, voice, and image inputs, access external resources through plugins, and customize enterprise AI applications based on proprietary knowledge bases. Users can set custom replies, utilize ChatGPT interface for intelligent responses, send images and binary files, and create personalized chatbots using knowledge base files. The tool also features platform-specific plugin systems for accessing external resources and supports enterprise AI applications customization.

Desktop-Pet-Godot

Godog is an AI desktop pet powered by a large language model and created with Godot. It aims to provide a versatile and rich desktop AI pet that users can customize to create unique pet images and behaviors. The tool is lightweight, easy to develop with Godot, compatible with various large language models, offers pre-made character functions and multiple appearances, supports multimodal capabilities, and allows users to easily build their own AI desktop pets on top of the existing features.

AIMedia

AIMedia is a fully automated AI media software that automatically fetches hot news, generates news, and publishes on various platforms. It supports hot news fetching from platforms like Douyin, NetEase News, Weibo, The Paper, China Daily, and Sohu News. Additionally, it enables AI-generated images for text-only news to enhance originality and reading experience. The tool is currently commercialized with plans to support video auto-generation for platform publishing in the future. It requires a minimum CPU of 4 cores or above, 8GB RAM, and supports Windows 10 or above. Users can deploy the tool by cloning the repository, modifying the configuration file, creating a virtual environment using Conda, and starting the web interface. Feedback and suggestions can be submitted through issues or pull requests.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

chatgpt-wechat

ChatGPT-WeChat is a personal assistant application that can be safely used on WeChat through enterprise WeChat without the risk of being banned. The project is open source and free, with no paid sections or external traffic operations except for advertising on the author's public account '积木成楼'. It supports various features such as secure usage on WeChat, multi-channel customer service message integration, proxy support, session management, rapid message response, voice and image messaging, drawing capabilities, private data storage, plugin support, and more. Users can also develop their own capabilities following the rules provided. The project is currently in development with stable versions available for use.

MathModelAgent

MathModelAgent is an agent designed specifically for mathematical modeling tasks. It automates the process of mathematical modeling and generates a complete paper that can be directly submitted. The tool features automatic problem analysis, code writing, error correction, and paper writing. It supports various models, offers low costs, and allows customization through prompt inject. The tool is ideal for individuals or teams working on mathematical modeling projects.

LLM-Dojo

LLM-Dojo is an open-source platform for learning and practicing large models, providing a framework for building custom large model training processes, implementing various tricks and principles in the llm_tricks module, and mainstream model chat templates. The project includes an open-source large model training framework, detailed explanations and usage of the latest LLM tricks, and a collection of mainstream model chat templates. The term 'Dojo' symbolizes a place dedicated to learning and practice, borrowing its meaning from martial arts training.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, Web and Universal apps. Currently the React Native fetch API does not support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, though it works best in Expo universal native apps. On mobile you get responses back without streaming, through the same API as `useChat` and `useCompletion`, and on web it falls back to `ai/react`.

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.