Awesome-LLM-Ensemble

A curated list of Awesome-LLM-Ensemble papers for the survey "Harnessing Multiple Large Language Models: A Survey on LLM Ensemble"

Stars: 123

Awesome-LLM-Ensemble is a collection of papers on LLM Ensemble, focusing on the comprehensive use of multiple large language models to benefit from their individual strengths. It provides a systematic review of recent developments in LLM Ensemble, including taxonomy, methods for ensemble before, during, and after inference, benchmarks, applications, and related surveys.

"Harnessing Multiple Large Language Models: A Survey on LLM Ensemble" (ArXiv 2025)

Zhijun Chen, Jingzheng Li, Pengpeng Chen, Zhuoran Li, Kai Sun, Yuankai Luo, Qianren Mao, Ming Li, Likang Xiao, Dingqi Yang, Yikun Ban, Hailong Sun, Philip S. Yu

[Always] [Add your paper in this repo] Thank you to all the authors who have cited our survey! We will add all related citing papers to this GitHub repo in a timely manner to help increase the visibility of your contributions.

[Always] [Maintain] We will keep this list frequently updated (at least until 12/31/2025)!

If you find any errors or any missed or new papers, please don't hesitate to contact us or open a pull request.

Paper Abstract:

LLM Ensemble, which involves the comprehensive use of multiple large language models (LLMs) to benefit from their individual strengths, with each model aimed at handling user queries during downstream inference, has gained substantial attention recently. The widespread availability of LLMs, coupled with their varying strengths and out-of-the-box usability, has profoundly advanced the field of LLM Ensemble. This paper presents the first systematic review of recent developments in LLM Ensemble. First, we introduce our taxonomy of LLM Ensemble and discuss several related research problems. Then, we provide a more in-depth classification of methods under the broad categories of "ensemble-before-inference", "ensemble-during-inference", and "ensemble-after-inference", and review all relevant methods. Finally, we introduce related benchmarks and applications, summarize existing studies, and suggest several future research directions. A curated list of papers on LLM Ensemble is available at https://github.com/junchenzhi/Awesome-LLM-Ensemble.

Figure 1: Illustration of LLM Ensemble Taxonomy. (Note that for the (b) ensemble-during-inference paradigm, there is also a process-level ensemble approach that is not represented in the figure, mainly because this approach is instantiated by only a single method.)

Figure 2: Taxonomy of All LLM Ensemble Methods. (Please note that this figure may not be fully updated to include all the papers listed below.)

(a) Ensemble before inference.

In essence, this approach employs a routing algorithm prior to LLM inference to allocate a given query to the most suitable model, so that the selected model, which is specialized for the query and typically offers more cost-efficient inference, performs the task. Existing methods can be classified into two categories, depending on whether the router requires pre-customized data for pre-training:

- (a1) Pre-training router;
- (a2) Non pre-training router.
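To make the routing idea concrete, here is a minimal Python sketch of a pre-training router in the style of a k-nearest-neighbour router: it scores each candidate model by its average quality on the k most similar previously labelled queries, subtracts a cost penalty, and sends the query to the best-scoring model. It is an illustration under stated assumptions, not the method of any paper listed below; the query embeddings, quality labels, model names (`small`, `large`), costs, and the `cost_weight` trade-off parameter are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LabeledQuery:
    embedding: list[float]     # embedding of a past query (hypothetical encoder)
    quality: dict[str, float]  # per-model quality label, e.g. 1.0 if that model answered correctly


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def route(query_emb: list[float], train: list[LabeledQuery], costs: dict[str, float],
          k: int = 3, cost_weight: float = 0.0) -> str:
    """Return the model maximizing (kNN-estimated quality - cost_weight * cost)."""
    if not train:
        raise ValueError("router needs at least one labelled query")
    # The k most similar labelled queries play the role of the router's pre-customized data.
    neighbors = sorted(train, key=lambda q: cosine(query_emb, q.embedding), reverse=True)[:k]

    def score(model: str) -> float:
        quality = sum(q.quality[model] for q in neighbors) / len(neighbors)
        return quality - cost_weight * costs[model]

    return max(costs, key=score)


# Toy data: "math-like" queries only the large model solves; "chat-like" queries both solve.
TRAIN = [
    LabeledQuery([1.0, 0.0], {"small": 0.0, "large": 1.0}),
    LabeledQuery([0.95, 0.05], {"small": 0.0, "large": 1.0}),
    LabeledQuery([0.0, 1.0], {"small": 1.0, "large": 1.0}),
    LabeledQuery([0.05, 0.95], {"small": 1.0, "large": 1.0}),
]
COSTS = {"small": 0.1, "large": 1.0}

print(route([0.9, 0.1], TRAIN, COSTS, k=2, cost_weight=0.5))  # math-like query -> large
print(route([0.1, 0.9], TRAIN, COSTS, k=2, cost_weight=0.5))  # chat-like query -> small
```

Raising `cost_weight` pushes more queries toward the cheaper model, while setting it to zero routes purely on estimated quality.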

(b) Ensemble during inference.

As the most fine-grained form of ensemble among the three broad categories, this type of approach encompasses:

- (b1) Token-level ensemble methods, which integrate the token-level outputs of multiple models at the finest granularity of decoding;
- (b2) Span-level ensemble methods, which conduct ensemble at the level of a sequence fragment (e.g., a span of four words);
- (b3) Process-level ensemble methods, which select the optimal reasoning process step by step within the reasoning chain for a given complex reasoning task.

Note that for all of these ensemble-during-inference methods, the aggregated text segments are concatenated with the previous text and fed back into the models.
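The decoding loop behind ensemble-during-inference can be sketched at the token level: at every step each model produces a next-token distribution, the distributions are combined by a weighted average, the top token is chosen, and it is appended to the shared prefix that all models see at the next step. This minimal Python sketch assumes every model shares one vocabulary, which heterogeneous LLMs generally do not (vocabulary mismatch is one of the problems the token-level methods listed below have to work around); the toy models, the weights, and the `<eos>` token are hypothetical.

```python
from typing import Callable

# A "model" maps the current token prefix to a next-token distribution.
# Simplifying assumption: every model shares the same vocabulary.
Model = Callable[[list[str]], dict[str, float]]


def token_level_ensemble(models: list[Model], weights: list[float], prompt: list[str],
                         max_new_tokens: int = 10, eos: str = "<eos>") -> list[str]:
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # Weighted average of the models' next-token distributions.
        combined: dict[str, float] = {}
        for model, weight in zip(models, weights):
            for tok, prob in model(tokens).items():
                combined[tok] = combined.get(tok, 0.0) + weight * prob
        next_tok = max(combined, key=combined.__getitem__)  # greedy choice
        if next_tok == eos:
            break
        # The chosen token is concatenated to the prefix that every model sees next.
        tokens.append(next_tok)
    return tokens[len(prompt):]


# Toy bigram-style "models" (hypothetical), keyed on the last token only.
def model_a(tokens: list[str]) -> dict[str, float]:
    table = {"the": {"cat": 0.6, "dog": 0.4}, "cat": {"sat": 0.9, "<eos>": 0.1}}
    return table.get(tokens[-1], {"<eos>": 1.0})


def model_b(tokens: list[str]) -> dict[str, float]:
    table = {"the": {"cat": 0.45, "dog": 0.55}, "cat": {"sat": 0.5, "ran": 0.5}}
    return table.get(tokens[-1], {"<eos>": 1.0})


print(token_level_ensemble([model_a, model_b], [0.5, 0.5], ["the"]))  # -> ['cat', 'sat']
```

On its own, `model_b` would greedily pick "dog" after "the"; the averaged distribution picks "cat" instead. Span-level methods follow the same loop but aggregate multi-token fragments rather than single tokens.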

(c) Ensemble after inference.

These methods can be classified into two categories:

- (c1) Non-cascade methods, which perform ensemble using multiple complete responses contributed by all LLM candidates;
- (c2) Cascade methods, which consider both performance and inference cost, progressively reasoning through a chain of LLM candidates (largely sorted by model size) to find the most suitable inference response.
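Both after-inference styles can be sketched in a few lines of Python. `majority_vote` is a simple non-cascade ensemble that queries every model and keeps the most frequent complete answer; `cascade` queries models from cheapest to most expensive and stops at the first answer whose self-reported confidence clears a threshold, also returning how many models were called as a rough cost proxy. The `(answer, confidence)` model interface, the toy models, and the 0.8 threshold are assumptions for illustration; the methods listed below use richer selection, ranking, fusion, or deferral rules.

```python
from collections import Counter
from typing import Callable, Optional

# Hypothetical interface: a model returns (answer, self-reported confidence in [0, 1]).
Model = Callable[[str], tuple[str, float]]


def majority_vote(models: list[Model], query: str) -> str:
    """Non-cascade: collect a complete answer from every model and keep the most frequent one."""
    answers = [model(query)[0] for model in models]
    return Counter(answers).most_common(1)[0][0]


def cascade(models: list[Model], query: str,
            threshold: float = 0.8) -> tuple[Optional[str], int]:
    """Cascade: models are ordered cheapest-first; stop at the first confident answer."""
    answer: Optional[str] = None
    for calls, model in enumerate(models, start=1):
        answer, confidence = model(query)
        if confidence >= threshold:
            return answer, calls  # calls = number of models invoked (a rough cost proxy)
    return answer, len(models)  # nobody was confident: keep the largest model's answer


# Toy models, listed from smallest to largest (hypothetical).
def small(q: str) -> tuple[str, float]:
    return ("4", 0.95) if q == "2+2" else ("Lyon", 0.4)


def medium(q: str) -> tuple[str, float]:
    return ("4", 0.9) if q == "2+2" else ("Paris", 0.7)


def large(q: str) -> tuple[str, float]:
    return ("4", 0.99) if q == "2+2" else ("Paris", 0.92)


MODELS = [small, medium, large]
print(cascade(MODELS, "2+2"))                       # -> ('4', 1)
print(cascade(MODELS, "capital of France?"))        # -> ('Paris', 3)
print(majority_vote(MODELS, "capital of France?"))  # -> Paris
```

The easy query stops at the smallest model, while the harder one is deferred all the way to the largest, which is the cost/performance trade-off that cascade methods tune.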

Figure 3: Summary analysis of the key attributes of ensemble-before-inference methods. (Please note that this table may not be fully updated to include all the papers listed below.)

(a1) Pre-training router.

LLM Routing with Benchmark Datasets. (2023)

Name: -, Code: - -

RouteLLM: Learning to Route LLMs with Preference Data. (2024)

Name: RouteLLM, -

Hybrid LLM: Cost-Efficient and Quality-Aware Query Routing. (2024)

Name: Hybrid-LLM, -

LLM Bandit: Cost-Efficient LLM Generation via Preference-Conditioned Dynamic Routing. (2025)

Name: -, Code: - -

Harnessing the Power of Multiple Minds: Lessons Learned from LLM Routing. (2024)

Name: -, -

MetaLLM: A High-performant and Cost-efficient Dynamic Framework for Wrapping LLMs. (2024)

Name: MetaLLM, -

SelectLLM: Query-Aware Efficient Selection Algorithm for Large Language Models. (2024)

Name: SelectLLM, Code: - -

Bench-CoE: a Framework for Collaboration of Experts from Benchmark. (2024)

Name: Bench-CoE, -

Routing to the Expert: Efficient Reward-guided Ensemble of Large Language Models. (2023)

Name: ZOOTER, Code: - -

TensorOpera Router: A Multi-Model Router for Efficient LLM Inference. (2024)

Name: TO-Router, Code: - -

Query Routing for Homogeneous Tools: An Instantiation in the RAG Scenario. (2024)

Name: HomoRouter, Code: - -

Fly-Swat or Cannon? Cost-Effective Language Model Choice via Meta-Modeling. (2023)

Name: FORC, -

Routoo: Learning to Route to Large Language Models Effectively. (2024)

Name: Routoo, Code: - -

(Newly added paper, March 2025:) RouterDC: Query-Based Router by Dual Contrastive Learning for Assembling Large Language Models. (2024)

Name: RouterDC, -

(Newly added paper, May 2025:) Rethinking Predictive Modeling for LLM Routing: When Simple kNN Beats Complex Learned Routers. (2025)

Name: -, Code: - -

(Newly added paper, May 2025:) InferenceDynamics: Efficient Routing Across LLMs through Structured Capability and Knowledge Profiling. (2025)

Name: InferenceDynamics, Code: - -

(Newly added paper, May 2025:) Co-Optimizing Recommendation and Evaluation for LLM Selection. (2025)

Name: RELM, Code: - -

(Newly added paper, May 2025:) Route to Reason: Adaptive Routing for LLM and Reasoning Strategy Selection. (2025)

Name: RTR, -

(Newly added paper, June 2025:) RadialRouter: Structured Representation for Efficient and Robust Large Language Models Routing. (2025)

Name: RadialRouter, Code: - -

(Newly added paper, June 2025:) TagRouter: Learning Route to LLMs through Tags for Open-Domain Text Generation Tasks. (2025)

Name: TagRouter, Code: -

(a2) Non pre-training router.

PickLLM: Context-Aware RL-Assisted Large Language Model Routing. (2024)

Name: PickLLM, Code: - -

Eagle: Efficient Training-Free Router for Multi-LLM Inference. (2024)

Name: Eagle, Code: - -

Blending Is All You Need: Cheaper, Better Alternative to Trillion-Parameters LLM. (2024)

Name: Blending, Code: - -

(Newly added paper, June 2025:) The Avengers: A Simple Recipe for Uniting Smaller Language Models to Challenge Proprietary Giants. (2025)

Name: Avengers,

Figure 4: Summary analysis of the key attributes of ensemble-during-inference methods. (Please note that this table may not be fully updated to include all the papers listed below.)

(b1) Token-level ensemble.

Breaking the Ceiling of the LLM Community by Treating Token Generation as a Classification for Ensembling. (2024)

Name: GaC, -

Ensemble Learning for Heterogeneous Large Language Models with Deep Parallel Collaboration. (2024)

Name: DeePEn, -

Bridging the Gap between Different Vocabularies for LLM Ensemble. (2024)

Name: EVA, -

Determine-Then-Ensemble: Necessity of Top-k Union for Large Language Model Ensembling. (2024)

Name: UniTe, Code: - -

Pack of LLMs: Model Fusion at Test-Time via Perplexity Optimization. (2024)

Name: PackLLM, -

Purifying large language models by ensembling a small language model. (2024)

Name: -, Code: - -

CITER: Collaborative Inference for Efficient Large Language Model Decoding with Token-Level Routing. (2025)

Name: CITER, -

(Newly added paper, April 2025:) An Expert is Worth One Token: Synergizing Multiple Expert LLMs as Generalist via Expert Token Routing. (2024)

Name: ETR, -

(Newly added paper, April 2025:) Speculative Ensemble: Fast Large Language Model Ensemble via Speculation. (2025)

Name: Speculative Ensemble, -

(Newly added paper, September 2025:) Transformer Copilot: Learning from The Mistake Log in LLM Fine-tuning. (2025)

Name: Transformer Copilot,

(b2) Span-level ensemble.

Cool-Fusion: Fuse Large Language Models without Training. (2024)

Name: Cool-Fusion, Code: - -

Hit the Sweet Spot! Span-Level Ensemble for Large Language Models. (2024)

Name: SweetSpan, Code: - -

SpecFuse: Ensembling Large Language Models via Next-Segment Prediction. (2024)

Name: SpecFuse, Code: - -

(Newly added paper, June 2025:) RLAE: Reinforcement Learning-Assisted Ensemble for LLMs. (2025)

Name: RLAE, Code: -

(b3) Process-level ensemble.

Ensembling Large Language Models with Process Reward-Guided Tree Search for Better Complex Reasoning. (2024)

Name: LE-MCTS, Code: -

Figure 5: Summary analysis of the key attributes of ensemble-after-inference methods. (Please note that this table may not be fully updated to include all the papers listed below.)

(c1) Non-cascade methods.

Smoothie: Label Free Language Model Routing. (2024)

Name: Smoothie, -

Getting MoRE out of Mixture of Language Model Reasoning Experts. (2023)

Name: MoRE, -

LLM-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion. (2023)

Name: LLM-Blender, -

LLM-TOPLA: Efficient LLM Ensemble by Maximising Diversity. (2024)

Name: LLM-TOPLA, -

URG: A Unified Ranking and Generation Method for Ensembling Language Models. (2024)

Name: URG, Code: - -

(Newly added paper, April 2025:) DFPE: A Diverse Fingerprint Ensemble for Enhancing LLM Performance. (2025)

Name: DFPE, -

(Newly added paper, April 2025:) Two Heads are Better than One: Zero-shot Cognitive Reasoning via Multi-LLM Knowledge Fusion. (2024)

Name: MLKF, -

(Newly added paper, April 2025:) Symbolic Mixture-of-Experts: Adaptive Skill-based Routing for Heterogeneous Reasoning. (2025)

Name: Symbolic-MoE, -

(Newly added paper, April 2025:) Balancing Act: Diversity and Consistency in Large Language Model Ensembles. (2025)

Name: DMoA, Code: - -

(Newly added paper, June 2025:) EL4NER: Ensemble Learning for Named Entity Recognition via Multiple Small-Parameter Large Language Models. (2025)

Name: EL4NER, Code: - -

(Newly added paper, September 2025:) LENS: Learning Ensemble Confidence from Neural States for Multi-LLM Answer Integration. (2025)

Name: LENS, Code: - -

(Newly added paper, September 2025:) CARGO: A Framework for Confidence-Aware Routing of Large Language Models. (2025)

Name: CARGO, Code: - -

(Newly added paper, September 2025:) LLM-Forest: Ensemble Learning of LLMs with Graph-Augmented Prompts for Data Imputation. (2025)

Name: LLM-Forest,

(c2) Cascade methods.

EcoAssistant: Using LLM Assistant More Affordably and Accurately. (2023)

Name: EcoAssistant, -

Large Language Model Cascades with Mixture of Thoughts Representations for Cost-efficient Reasoning. (2023)

Name: -, -

Model Cascading: Towards Jointly Improving Efficiency and Accuracy of NLP Systems. (2022)

Name: Model Cascading, Code: - -

Cache & Distil: Optimising API Calls to Large Language Models. (2023)

Name: neural caching, -

A Unified Approach to Routing and Cascading for LLMs. (2024)

Name: Cascade Routing, -

When Does Confidence-Based Cascade Deferral Suffice? (2023)

Name: -, Code: - -

FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance. (2023)

Name: FrugalGPT, Code: - -

Language Model Cascades: Token-level uncertainty and beyond. (2024)

Name: -, Code: - -

AutoMix: Automatically Mixing Language Models. (2023)

Name: AutoMix, -

Dynamic Ensemble Reasoning for LLM Experts. (2024)

Name: DER, Code: - -

(Newly added paper, April 2025:) EMAFusion™: A Self-Optimizing System for Seamless LLM Selection and Integration. (2025)

Name: EMAFusion™, Code: - -

(Newly added paper, June 2025:) Do We Truly Need So Many Samples? Multi-LLM Repeated Sampling Efficiently Scales Test-Time Compute. (2025)

Name: ModelSwitch,

Benchmarks.

LLM-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion. (2023)

Name: MixInstruct, Evaluation Goal: Performance, -

RouterBench: A Benchmark for Multi-LLM Routing System. (2024)

Name: RouterBench, Evaluation Goal: Performance and cost, -

(Newly added paper, April 2025:) RouterEval: A Comprehensive Benchmark for Routing LLMs to Explore Model-level Scaling Up in LLMs. (2025)

Name: RouterEval,

Beyond the methods presented above, the concept of LLM Ensemble has found applications in a variety of more specialized tasks and domains. Here we give some examples:

Ensemble-Instruct: Generating Instruction-Tuning Data with a Heterogeneous Mixture of LMs. (2023)

Name: Ensemble-Instruct, Task: Instruction-Tuning Data Generation, -

Bayesian Calibration of Win Rate Estimation with LLM Evaluators. (2024)

Name: BWRS, Bayesian Dawid-Skene, Task: Win Rate Estimation, -

PromptMind Team at MEDIQA-CORR 2024: Improving Clinical Text Correction with Error Categorization and LLM Ensembles. (2024)

Name: -, Task: Clinical Text Correction, Code: - -

LLM-Ensemble: Optimal Large Language Model Ensemble Method for E-commerce Product Attribute Value Extraction. (2024)

Name: -, Task: Product Attribute Value Extraction, Code: - -

(Newly added paper, April 2025:) FuseGen: PLM Fusion for Data-generation based Zero-shot Learning. (2024)

Name: FuseGen, Task: Data-generation, -

(Newly added paper, April 2025:) On Preserving the Knowledge of Long Clinical Texts. (2023)

Name: -, Task: Prediction tasks on long clinical notes, Code: - -

(Newly added paper, April 2025:) Consensus Entropy: Harnessing Multi-VLM Agreement for Self-Verifying and Self-Improving OCR. (2025)

Name: Consensus Entropy (CE), Task: Optical Character Recognition, Code: - -

(Newly added paper, June 2025:) Beyond Monoliths: Expert Orchestration for More Capable, Democratic, and Safe Large Language Models. (2025)

Name: Expert Orchestration, Task: -, Code: -

Related surveys.

(Newly added paper, May 2025:) Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities. (2024)

GitHub: -

(Newly added paper, May 2025:) Merge, Ensemble, and Cooperate! A Survey on Collaborative Strategies in the Era of Large Language Models. (2024)

GitHub: - -

(Newly added paper, May 2025:) A Survey on Collaborative Mechanisms Between Large and Small Language Models. (2025)

GitHub: - -

(Newly added paper, May 2025:) A comprehensive review on ensemble deep learning: Opportunities and challenges. (2023)

GitHub: - -

(Newly added paper, May 2025:) Doing More with Less – Implementing Routing Strategies in Large Language Model-Based Systems: An Extended Survey. (2025)

GitHub: - -

(Newly added paper, May 2025:) A Survey on Model MoErging: Recycling and Routing Among Specialized Experts for Collaborative Learning. (2024)

GitHub: - -

(Newly added paper, May 2025:) Deep Model Fusion: A Survey. (2023)

GitHub: - -

(Newly added paper, June 2025:) Towards Efficient Multi-LLM Inference: Characterization and Analysis of LLM Routing and Hierarchical Techniques. (2025)

GitHub: - -

(Newly added paper, July 2025:) Toward Edge General Intelligence with Multiple-Large Language Model (Multi-LLM): Architecture, Trust, and Orchestration. (2025)

GitHub: -

Here we briefly list some related papers, which were either found by us or suggested to this repository by their authors. They mainly focus on LLM collaboration.

(Newly added paper, June 2025:) If Multi-Agent Debate is the Answer, What is the Question? (2025)

Name: Heter-MAD, Code: - -

(Newly added paper, June 2025:) Nature-Inspired Population-Based Evolution of Large Language Models (2025)

Name: GENOME, -

(Newly added paper, September 2025:) LLM-Forest: Ensemble Learning of LLMs with Graph-Augmented Prompts for Data Imputation (2024)

Name: LLM-Forest, -

(Newly added paper, September 2025:) Small-Large Collaboration: Training-efficient Concept Personalization for Large VLM (2025)

Name: SLC,

@article{chen2025harnessing,
  title={Harnessing Multiple Large Language Models: A Survey on LLM Ensemble},
  author={Chen, Zhijun and Li, Jingzheng and Chen, Pengpeng and Li, Zhuoran and Sun, Kai and Luo, Yuankai and Mao, Qianren and Yang, Dingqi and Sun, Hailong and Yu, Philip S},
  journal={arXiv preprint arXiv:2502.18036},
  year={2025}
}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-LLM-Ensemble

Similar Open Source Tools

Awesome-LLM-Ensemble

Awesome-LLM-Ensemble is a collection of papers on LLM Ensemble, focusing on the comprehensive use of multiple large language models to benefit from their individual strengths. It provides a systematic review of recent developments in LLM Ensemble, including taxonomy, methods for ensemble before, during, and after inference, benchmarks, applications, and related surveys.

Awesome-LM-SSP

The Awesome-LM-SSP repository is a collection of resources related to the trustworthiness of large models (LMs) across multiple dimensions, with a special focus on multi-modal LMs. It includes papers, surveys, toolkits, competitions, and leaderboards. The resources are categorized into three main dimensions: safety, security, and privacy. Within each dimension, there are several subcategories. For example, the safety dimension includes subcategories such as jailbreak, alignment, deepfake, ethics, fairness, hallucination, prompt injection, and toxicity. The security dimension includes subcategories such as adversarial examples, poisoning, and system security. The privacy dimension includes subcategories such as contamination, copyright, data reconstruction, membership inference attacks, model extraction, privacy-preserving computation, and unlearning.

lmdeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLM, developed by the MMRazor and MMDeploy teams. It has the following core features: * **Efficient Inference** : LMDeploy delivers up to 1.8x higher request throughput than vLLM, by introducing key features like persistent batch(a.k.a. continuous batching), blocked KV cache, dynamic split&fuse, tensor parallelism, high-performance CUDA kernels and so on. * **Effective Quantization** : LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation. * **Effortless Distribution Server** : Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards. * **Interactive Inference Mode** : By caching the k/v of attention during multi-round dialogue processes, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.

latentbox

Latent Box is a curated collection of resources for AI, creativity, and art. It aims to bridge the information gap with high-quality content, promote diversity and interdisciplinary collaboration, and maintain updates through community co-creation. The website features a wide range of resources, including articles, tutorials, tools, and datasets, covering various topics such as machine learning, computer vision, natural language processing, generative art, and creative coding.

awesome-object-detection-datasets

This repository is a curated list of awesome public object detection and recognition datasets. It includes a wide range of datasets related to object detection and recognition tasks, such as general detection and recognition datasets, autonomous driving datasets, adverse weather datasets, person detection datasets, anti-UAV datasets, optical aerial imagery datasets, low-light image datasets, infrared image datasets, SAR image datasets, multispectral image datasets, 3D object detection datasets, vehicle-to-everything field datasets, super-resolution field datasets, and face detection and recognition datasets. The repository also provides information on tools for data annotation, data augmentation, and data management related to object detection tasks.

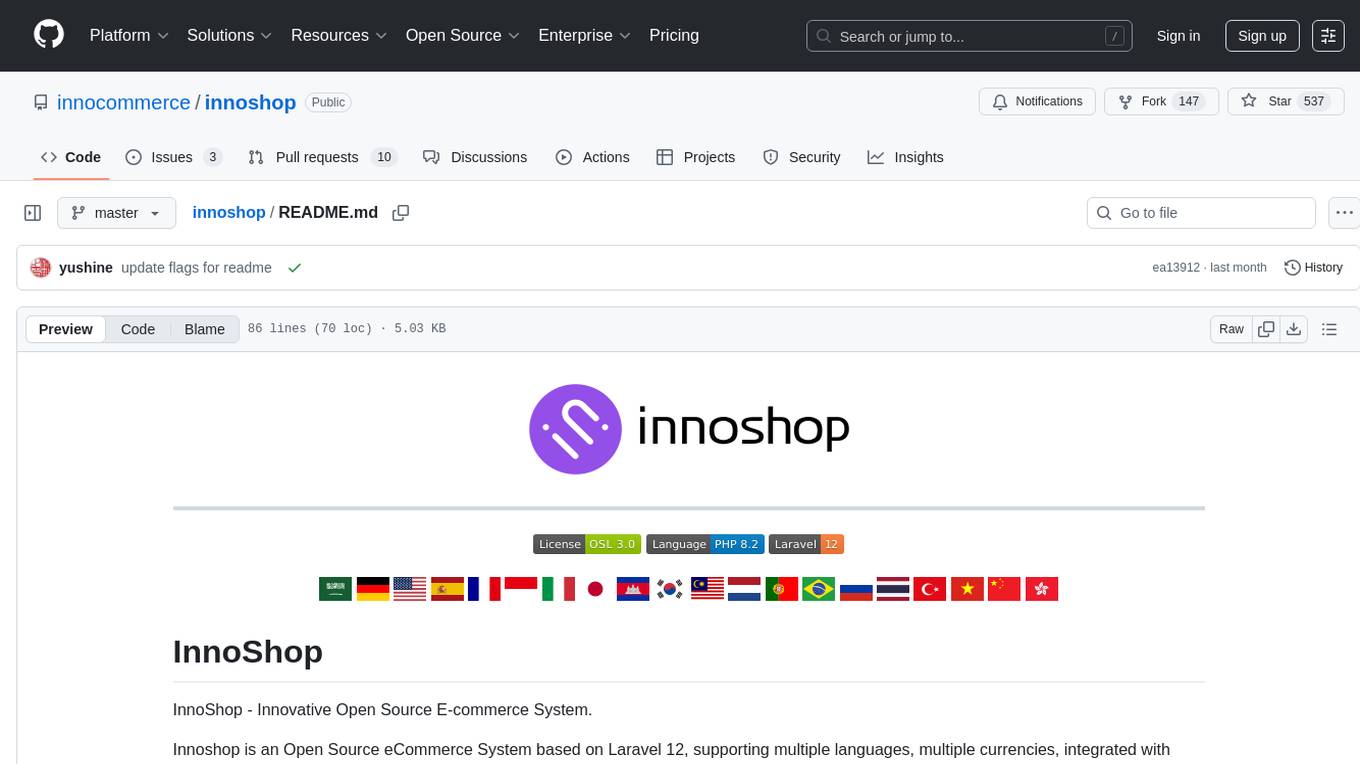

innoshop

InnoShop is an innovative open-source e-commerce system based on Laravel 12. It supports multiple languages, multiple currencies, and is integrated with OpenAI. The system features plugin mechanisms and theme template development for enhanced user experience and system extensibility. It is globally oriented, user-friendly, and based on the latest technology with deep AI integration.

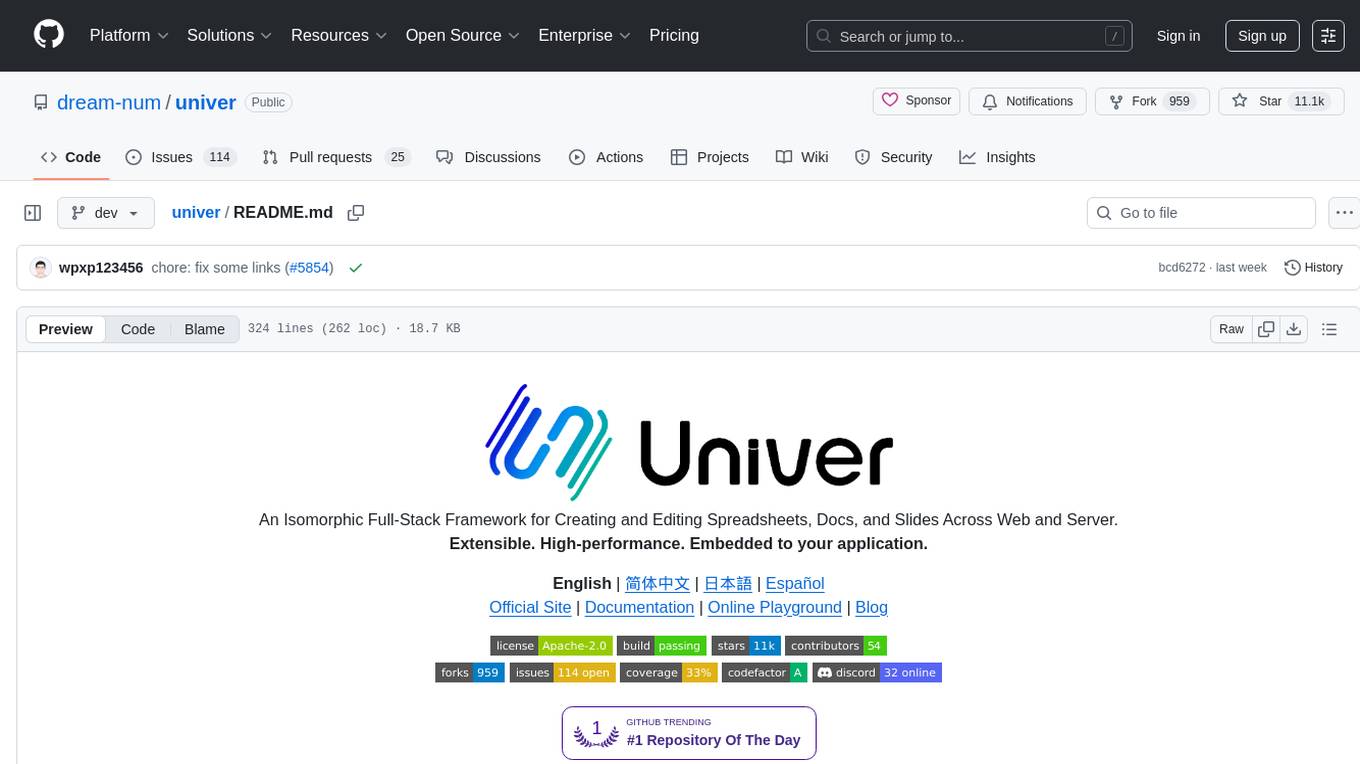

univer

Univer is an isomorphic full-stack framework designed for creating and editing spreadsheets, documents, and slides across web and server. It is highly extensible, high-performance, and can be embedded into applications. Univer offers a wide range of features including formulas, conditional formatting, data validation, collaborative editing, printing, import & export, and more. It supports multiple languages and provides a distraction-free editing experience with a clean interface. Univer is suitable for data analysts, software developers, project managers, content creators, and educators.

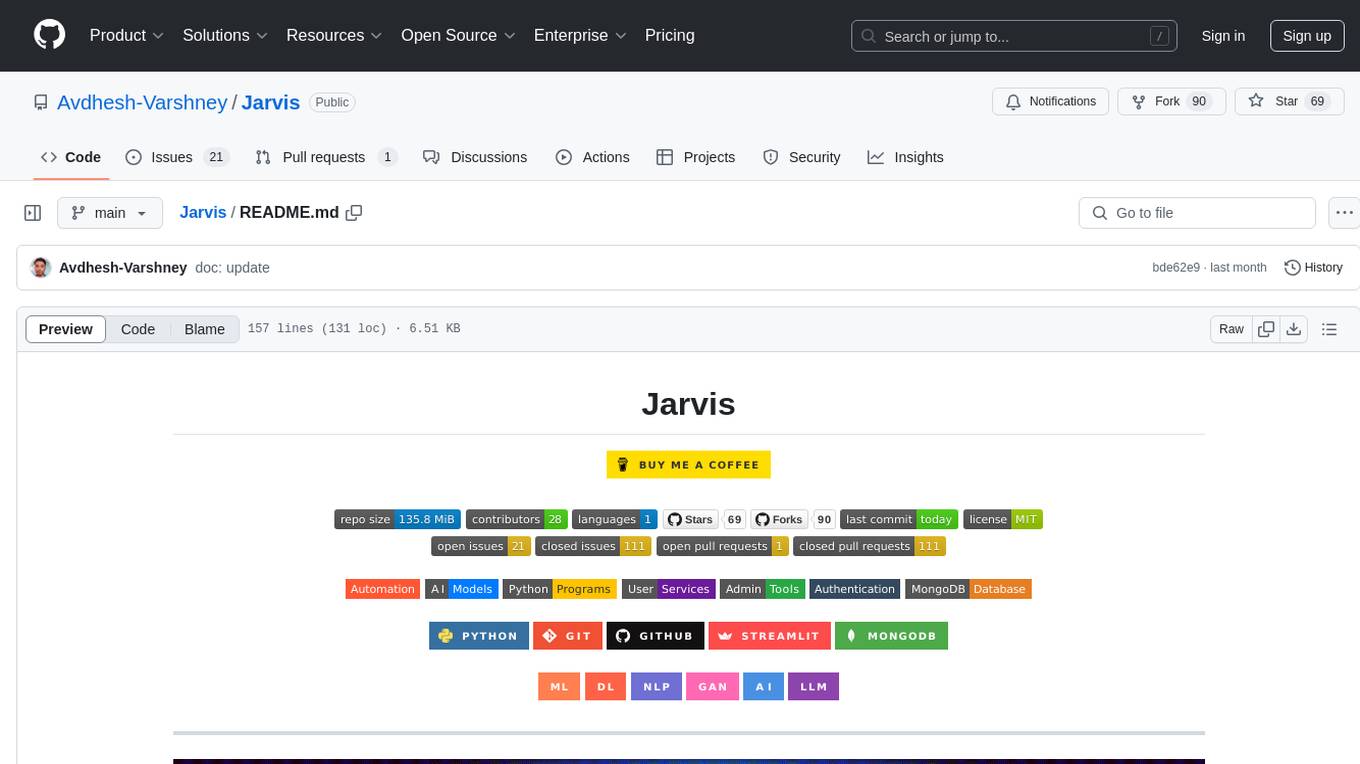

Jarvis

Jarvis is a powerful virtual AI assistant designed to simplify daily tasks through voice command integration. It features automation, device management, and personalized interactions, transforming technology engagement. Built using Python and AI models, it serves personal and administrative needs efficiently, making processes seamless and productive.

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

sglang

SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with LLMs faster and more controllable by co-designing the frontend language and the runtime system. The core features of SGLang include: - **A Flexible Front-End Language**: This allows for easy programming of LLM applications with multiple chained generation calls, advanced prompting techniques, control flow, multiple modalities, parallelism, and external interaction. - **A High-Performance Runtime with RadixAttention**: This feature significantly accelerates the execution of complex LLM programs by automatic KV cache reuse across multiple calls. It also supports other common techniques like continuous batching and tensor parallelism.

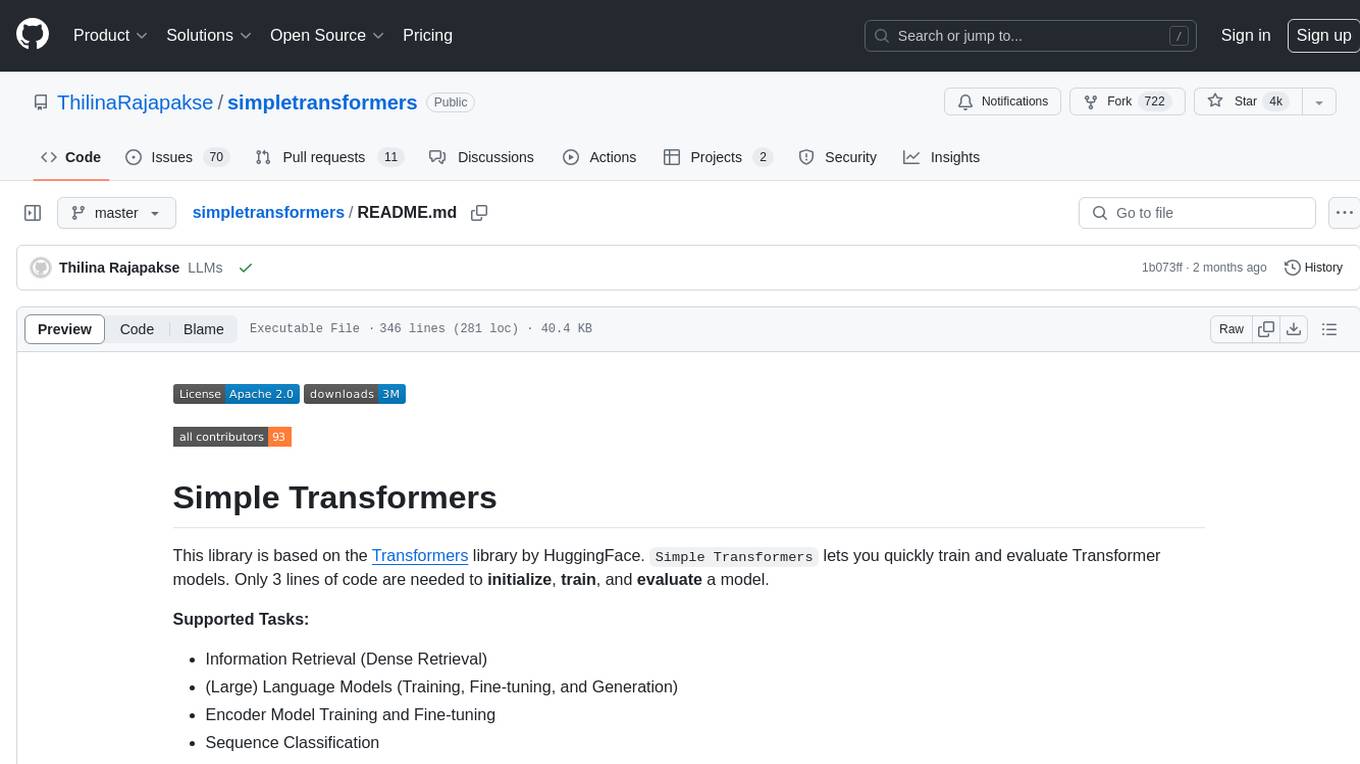

simpletransformers

Simple Transformers is a library based on the Transformers library by HuggingFace, allowing users to quickly train and evaluate Transformer models with only 3 lines of code. It supports various tasks such as Information Retrieval, Language Models, Encoder Model Training, Sequence Classification, Token Classification, Question Answering, Language Generation, T5 Model, Seq2Seq Tasks, Multi-Modal Classification, and Conversational AI.

unilm

The 'unilm' repository is a collection of tools, models, and architectures for Foundation Models and General AI, focusing on tasks such as NLP, MT, Speech, Document AI, and Multimodal AI. It includes various pre-trained models, such as UniLM, InfoXLM, DeltaLM, MiniLM, AdaLM, BEiT, LayoutLM, WavLM, VALL-E, and more, designed for tasks like language understanding, generation, translation, vision, speech, and multimodal processing. The repository also features toolkits like s2s-ft for sequence-to-sequence fine-tuning and Aggressive Decoding for efficient sequence-to-sequence decoding. Additionally, it offers applications like TrOCR for OCR, LayoutReader for reading order detection, and XLM-T for multilingual NMT.

Everything-LLMs-And-Robotics

The Everything-LLMs-And-Robotics repository is the world's largest GitHub repository focusing on the intersection of Large Language Models (LLMs) and Robotics. It provides educational resources, research papers, project demos, and Twitter threads related to LLMs, Robotics, and their combination. The repository covers topics such as reasoning, planning, manipulation, instructions and navigation, simulation frameworks, perception, and more, showcasing the latest advancements in the field.

LLMFarm

LLMFarm is an iOS and MacOS app designed to work with large language models (LLM). It allows users to load different LLMs with specific parameters, test the performance of various LLMs on iOS and macOS, and identify the most suitable model for their projects. The tool is based on ggml and llama.cpp by Georgi Gerganov and incorporates sources from rwkv.cpp by saharNooby, Mia by byroneverson, and LlamaChat by alexrozanski. LLMFarm features support for MacOS (13+) and iOS (16+), various inferences and sampling methods, Metal compatibility (not supported on Intel Mac), model setting templates, LoRA adapters support, LoRA finetune support, LoRA export as model support, and more. It also offers a range of inferences including LLaMA, GPTNeoX, Replit, GPT2, Starcoder, RWKV, Falcon, MPT, Bloom, and others. Additionally, it supports multimodal models like LLaVA, Obsidian, and MobileVLM. Users can customize inference options through JSON files and access supported models for download.

For similar tasks

Awesome-LLM-Ensemble

Awesome-LLM-Ensemble is a collection of papers on LLM Ensemble, focusing on the comprehensive use of multiple large language models to benefit from their individual strengths. It provides a systematic review of recent developments in LLM Ensemble, including taxonomy, methods for ensemble before, during, and after inference, benchmarks, applications, and related surveys.

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

auto-round

AutoRound is an advanced weight-only quantization algorithm for low-bits LLM inference. It competes impressively against recent methods without introducing any additional inference overhead. The method adopts sign gradient descent to fine-tune rounding values and minmax values of weights in just 200 steps, often significantly outperforming SignRound with the cost of more tuning time for quantization. AutoRound is tailored for a wide range of models and consistently delivers noticeable improvements.

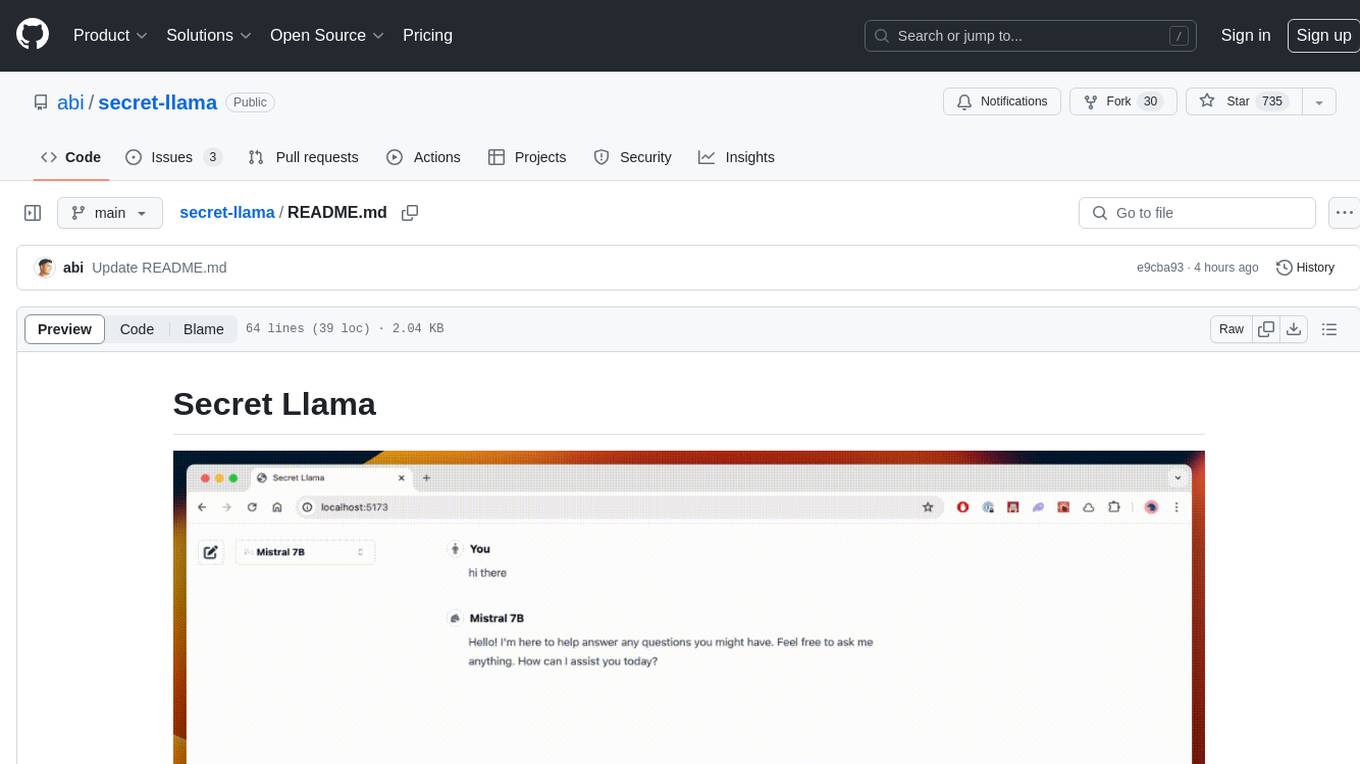

secret-llama

Entirely-in-browser, fully private LLM chatbot supporting Llama 3, Mistral and other open source models. Fully private = No conversation data ever leaves your computer. Runs in the browser = No server needed and no install needed! Works offline. Easy-to-use interface on par with ChatGPT, but for open source LLMs. System requirements include a modern browser with WebGPU support. Supported models include TinyLlama-1.1B-Chat-v0.4-q4f32_1-1k, Llama-3-8B-Instruct-q4f16_1, Phi1.5-q4f16_1-1k, and Mistral-7B-Instruct-v0.2-q4f16_1. Looking for contributors to improve the interface, support more models, speed up initial model loading time, and fix bugs.

baal

Baal is an active learning library that supports both industrial applications and research use cases. It provides a framework for Bayesian active learning methods such as Monte-Carlo Dropout, MCDropConnect, Deep ensembles, and Semi-supervised learning. Baal helps in labeling the most uncertain items in the dataset pool to improve model performance and reduce annotation effort. The library is actively maintained by a dedicated team and has been used in various research papers for production and experimentation.

LLM-Fine-Tuning

This GitHub repository contains examples of fine-tuning open source large language models. It showcases the process of fine-tuning and quantizing large language models using efficient techniques like Lora and QLora. The repository serves as a practical guide for individuals looking to optimize the performance of language models through fine-tuning.

magpie

This is the official repository for 'Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing'. Magpie is a tool designed to synthesize high-quality instruction data at scale by extracting it directly from an aligned Large Language Models (LLMs). It aims to democratize AI by generating large-scale alignment data and enhancing the transparency of model alignment processes. Magpie has been tested on various model families and can be used to fine-tune models for improved performance on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

-orange)