infra

Infrastructure that's powering E2B Cloud.

Stars: 878

E2B Infra is a cloud runtime for AI agents. It provides SDKs and a CLI to customize and manage environments and run AI agents in the cloud. The infrastructure is deployed using Terraform and is currently only deployable on GCP. The main components of the infrastructure are the API server, a daemon running inside instances (sandboxes), a Nomad driver for managing instances (sandboxes), and a Nomad driver for building environments (templates).

README:

E2B is open-source infrastructure for AI code interpreting. Our main repository, e2b-dev/e2b, provides SDKs and a CLI to customize and manage environments and run your AI agents in the cloud.

This repository contains the infrastructure that powers the E2B platform.

Read the self-hosting guide to learn how to set up the infrastructure on your own. The infrastructure is deployed using Terraform.

Supported cloud providers:

- 🟢 GCP

- 🚧 AWS

- [ ] Azure

- [ ] General Linux machine

Alternative AI tools for infra

Similar Open Source Tools

genai-factory

GenAI Factory is a collection of end-to-end blueprints to deploy generative AI infrastructures in Google Cloud Platform (GCP), following security best practices. It embraces Infrastructure as Code (IaC) best practices, implements infrastructure in Terraform, and follows the least-privilege principle. The tool is compatible with Cloud Foundation Fabric FAST project-factory and application templates, allowing users to deploy various AI applications and systems on GCP.

cedana-cli

Cedana is a framework for the democratization and commodification of compute. It leverages checkpoint/restore to migrate work across machines, clouds, and beyond. The repo contains a CLI tool for developers to experiment with the system.

spring-ai-alibaba

Spring AI Alibaba is an AI application framework for Java developers that seamlessly integrates with Alibaba Cloud Qwen LLM services and cloud-native infrastructures. It provides features like support for various AI models, high-level AI agent abstraction, function calling, and RAG support. The framework aims to simplify the development, evaluation, deployment, and observability of AI-native Java applications. It offers an open-source framework and ecosystem integrations to support features like prompt template management, event-driven AI applications, and more.

agents-at-scale-ark

ARK is an agentic runtime for Kubernetes that codifies patterns and practices developed across client projects. It provides a foundation for platform-agnostic operations and standardized deployment approaches. The project is in early access and evolving based on team feedback. It aims to share its technical approach with the community and gather input on agentic AI systems and Kubernetes orchestration.

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

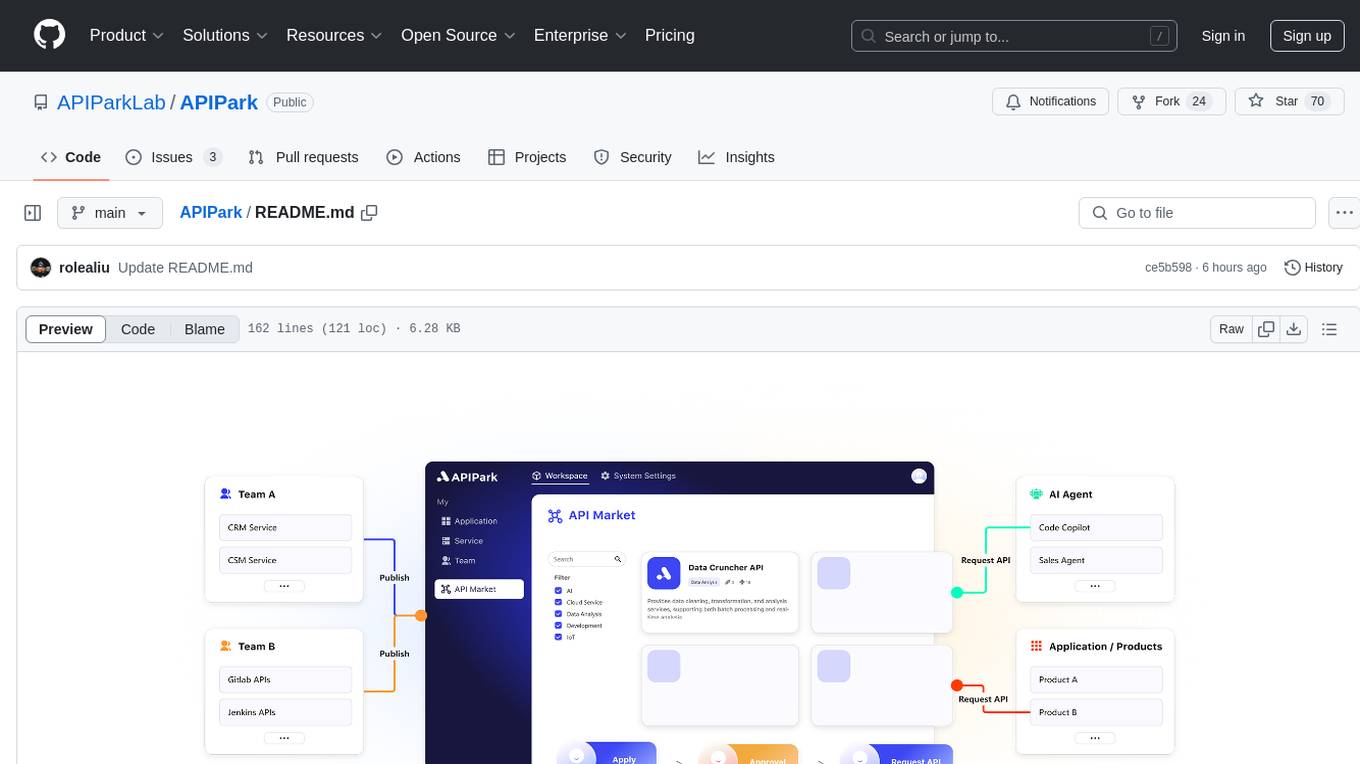

APIPark

APIPark is an open-source AI Gateway and Developer Portal that enables users to easily manage, integrate, and deploy AI and API services. It provides robust API management features, including creation, monitoring, and access control, to help developers efficiently and securely develop and manage their APIs. The platform aims to solve challenges such as connecting to powerful AI models, managing complex AI & API call relationships, overseeing API creation and security, simplifying fault detection and troubleshooting, and enhancing the visibility and valuation of data assets.

bedrock-agentcore-starter-toolkit

Amazon Bedrock AgentCore Starter Toolkit enables developers to deploy and operate highly effective AI agents securely at scale using any framework and model. It provides tools and capabilities to make agents more effective and capable, purpose-built infrastructure to securely scale agents, and controls to operate trustworthy agents. The toolkit includes modular services like Runtime, Memory, Gateway, Code Interpreter, Browser, Observability, Identity, and Import Agent for seamless migration of existing agents. It is currently in public preview and offers enterprise-grade security and reliability for accelerating AI agent development.

AgentUp

AgentUp is a developer-first agent framework, under active development, for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, a configuration-driven architecture, an extensible ecosystem for customizations, agent-to-agent discovery, an asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types, such as reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

nocobase

NocoBase is an extensible AI-powered no-code platform that offers total control, infinite extensibility, and AI collaboration. It enables teams to adapt quickly and reduce costs without the need for years of development or wasted resources. With NocoBase, users can deploy the platform in minutes and have complete control over their projects. The platform is data model-driven, allowing for unlimited possibilities by decoupling UI and data structure. It integrates AI capabilities seamlessly into business systems, enabling roles such as translator, analyst, researcher, or assistant. NocoBase provides a simple and intuitive user experience with a 'what you see is what you get' approach. It is designed for extension through its plugin-based architecture, allowing users to customize and extend functionalities easily.

kgateway

Kgateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on top of Envoy proxy and the Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless, offers robust discovery capabilities, integrates seamlessly with open-source projects, and is designed to support hybrid applications with various technologies, architectures, protocols, and clouds.

CSGHub

CSGHub is an open source, trustworthy large model asset management platform that helps users govern the assets involved in the lifecycle of LLMs and LLM applications (datasets, model files, code, etc.). With CSGHub, users can perform operations on LLM assets, including uploading, downloading, storing, verifying, and distributing, through a Web interface, the Git command line, or a natural language chatbot. The platform also provides microservice submodules and standardized OpenAPIs, which can be easily integrated with users' own systems. CSGHub is committed to bringing users an asset management platform that is natively designed for large models and can be deployed on-premise for fully offline operation. CSGHub offers functionality similar to a private, on-premise Hugging Face, managing LLM assets in a manner akin to how OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

langgraph

LangGraph is a low-level orchestration framework for building, managing, and deploying long-running, stateful agents. It provides durable execution, human-in-the-loop capabilities, comprehensive memory management, debugging tools, and production-ready deployment infrastructure. LangGraph can be used standalone or integrated with other LangChain products to streamline LLM application development.

coze-studio

Coze Studio is an all-in-one AI agent development tool that offers the most convenient AI agent development environment, from development to deployment. It provides core technologies for AI agent development, complete app templates, and build frameworks. Coze Studio aims to simplify creating, debugging, and deploying AI agents through visual design and build tools, enabling powerful AI app development and customized business logic. The tool is developed using Golang for the backend, React + TypeScript for the frontend, and follows microservices architecture based on domain-driven design principles.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

For similar tasks

superagent-py

Superagent is an open-source framework that enables developers to integrate production-ready AI assistants into any application quickly and easily. It provides a Python SDK for interacting with the Superagent API, allowing developers to create, manage, and invoke AI agents. The SDK simplifies the process of building AI-powered applications, making it accessible to developers of all skill levels.

AGiXT

AGiXT is a dynamic Artificial Intelligence Automation Platform engineered to orchestrate efficient AI instruction management and task execution across a multitude of providers. Our solution infuses adaptive memory handling with a broad spectrum of commands to enhance AI's understanding and responsiveness, leading to improved task completion. The platform's smart features, like Smart Instruct and Smart Chat, seamlessly integrate web search, planning strategies, and conversation continuity, transforming the interaction between users and AI. By leveraging a powerful plugin system that includes web browsing and command execution, AGiXT stands as a versatile bridge between AI models and users. With an expanding roster of AI providers, code evaluation capabilities, comprehensive chain management, and platform interoperability, AGiXT is consistently evolving to drive a multitude of applications, affirming its place at the forefront of AI technology.

Awesome-European-Tech

Awesome European Tech is an up-to-date list of recommended European projects and companies curated by the community to support and strengthen the European tech ecosystem. It focuses on privacy and sustainability, showcasing companies that adhere to GDPR compliance and sustainability standards. The project aims to highlight and support European startups and projects excelling in privacy, sustainability, and innovation to contribute to a more diverse, resilient, and interconnected global tech landscape.

LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

mcp-gateway-registry

The MCP Gateway & Registry is a unified, enterprise-ready platform that centralizes access to both MCP Servers and AI Agents using the Model Context Protocol (MCP). It serves as a Unified MCP Server Gateway, MCP Servers Registry, and Agent Registry & A2A Communication Hub. The platform integrates with external registries, providing a single control plane for tool access, agent orchestration, and communication patterns. It transforms the chaos of managing individual MCP server configurations into an organized approach with secure, governed access to curated servers and registered agents. The platform supports dynamic tool discovery, autonomous agent communication, and unified policies for server and agent access.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students
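To make the per-key cost limit idea concrete, here is a minimal Python sketch of a tracker that caps spend by pricing tier. It is not BricksLLM's API or configuration format: the names `TIER_LIMITS_CENTS`, `ApiKey`, and `UsageLimiter`, as well as the tier names and amounts, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical spend caps per pricing tier, in cents (assumed values).
TIER_LIMITS_CENTS = {"free": 100, "pro": 5000}


@dataclass
class ApiKey:
    """An issued key with a pricing tier and running spend (illustrative only)."""
    key_id: str
    tier: str
    spent_cents: int = 0


class UsageLimiter:
    """Tracks spend per key and rejects requests that would exceed the tier cap."""

    def __init__(self, limits):
        self.limits = limits
        self.keys = {}

    def register(self, key_id, tier):
        """Issue a key on a known tier; unknown tiers are rejected."""
        if tier not in self.limits:
            raise ValueError(f"unknown tier: {tier}")
        self.keys[key_id] = ApiKey(key_id, tier)

    def allow(self, key_id, cost_cents):
        """Return True and record the cost if the request fits within the cap."""
        key = self.keys[key_id]
        if key.spent_cents + cost_cents > self.limits[key.tier]:
            return False  # over the cap: the request is rejected, nothing is recorded
        key.spent_cents += cost_cents
        return True
```

A gateway built along these lines would call `allow` before forwarding each request to the model provider, using an estimated or metered cost. Amounts are kept as integer cents to avoid floating-point rounding at the cap boundary.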

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.