AIO

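陈大剩's Home All-in-One Server Setup Guide: a complete guide to building a home All-in-One server that helps you set up an enterprise-grade virtualization environment at home.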

Stars: 157

AIO is a comprehensive guide for setting up a home All-in-One server, enabling users to create an enterprise-level virtualized environment at home. It allows running multiple operating systems simultaneously, achieving public network access, optimizing hardware performance, and reducing IT costs. The guide includes detailed documentation for setting up from scratch and requires a computer with virtualization support, VMware ESXi installation media, and basic network configuration knowledge.

README:

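💪 One computer does the work of ten! Push every CPU and every GB of memory to peak performance.

This is a complete guide to building a home All-in-One server that helps you set up an enterprise-grade virtualization environment at home. With this project, you can: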

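- 🏠 Say goodbye to expensive cloud services and get the capabilities of an enterprise-grade data center at home

- 🖥️ Run Windows, Linux, OpenWrt, and macOS side by side

- 🌐 Give every household device public network access anytime, anywhere, overcoming network restrictions

- 💰 Cut IT costs substantially by replacing several dedicated devices with one machine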

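- Multi-OS virtualization: run multiple operating systems at the same time with efficient resource use

- Network traversal: enable public network access and turn your home into a private cloud

- Enterprise-grade performance: make full use of the hardware to reach enterprise-grade standards

- Detailed documentation: a complete setup guide that starts from scratch

- Cost optimization: invest once, benefit for the long term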

- A computer that supports virtualization (recommended: 16 GB+ memory, 500 GB+ storage)

- VMware ESXi installation media

- Basic knowledge of network configuration
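One way to check the first prerequisite before installing anything is to boot the machine from any Linux live environment and look for the CPU flags `vmx` (Intel VT-x) or `svm` (AMD-V) in `/proc/cpuinfo`. The sketch below is an illustration only and is not part of the guide: the helper name `detect_virtualization` and the `VIRT_FLAGS` table are hypothetical. The flags describe what the CPU advertises, so the feature may still need to be enabled in the BIOS/UEFI setup.

```python
from pathlib import Path
from typing import Optional

# Hypothetical lookup table (not from the guide): CPU flag -> extension name.
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def detect_virtualization(cpuinfo_text: str) -> Optional[str]:
    """Return the virtualization extension named in /proc/cpuinfo-style text.

    Returns None when neither the vmx nor the svm flag is listed.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":
            continue
        flags = set(value.split())
        for flag, name in VIRT_FLAGS.items():
            if flag in flags:
                return name
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # present on Linux only
    if cpuinfo.exists():
        found = detect_virtualization(cpuinfo.read_text())
        print(found or "No hardware virtualization flag found")
    else:
        print("/proc/cpuinfo not available on this system")
```

If the script reports no flag, check the firmware settings and the CPU vendor's specification before concluding that the machine cannot run a hypervisor.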

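Because my own expertise is limited and the tutorial was written in a hurry, it inevitably contains inaccuracies. Corrections and PRs that improve the content are welcome. Before contributing, please read the Contributing Guide to learn about:

- How to report issues and make suggestions

- Documentation writing standards and requirements

- Code submission process and conventions

- Pull Request best practices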

Alternative AI tools for AIO

Similar Open Source Tools

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

Pulse

Pulse is a real-time monitoring tool designed for Proxmox, Docker, and Kubernetes infrastructure. It provides a unified dashboard to consolidate metrics, alerts, and AI-powered insights into a single interface. Suitable for homelabs, sysadmins, and MSPs, Pulse offers core monitoring features, AI-powered functionalities, multi-platform support, security and operations features, and community integrations. Pulse Pro unlocks advanced AI analysis and auto-fix capabilities. The tool is privacy-focused, secure by design, and offers detailed documentation for installation, configuration, security, troubleshooting, and more.

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

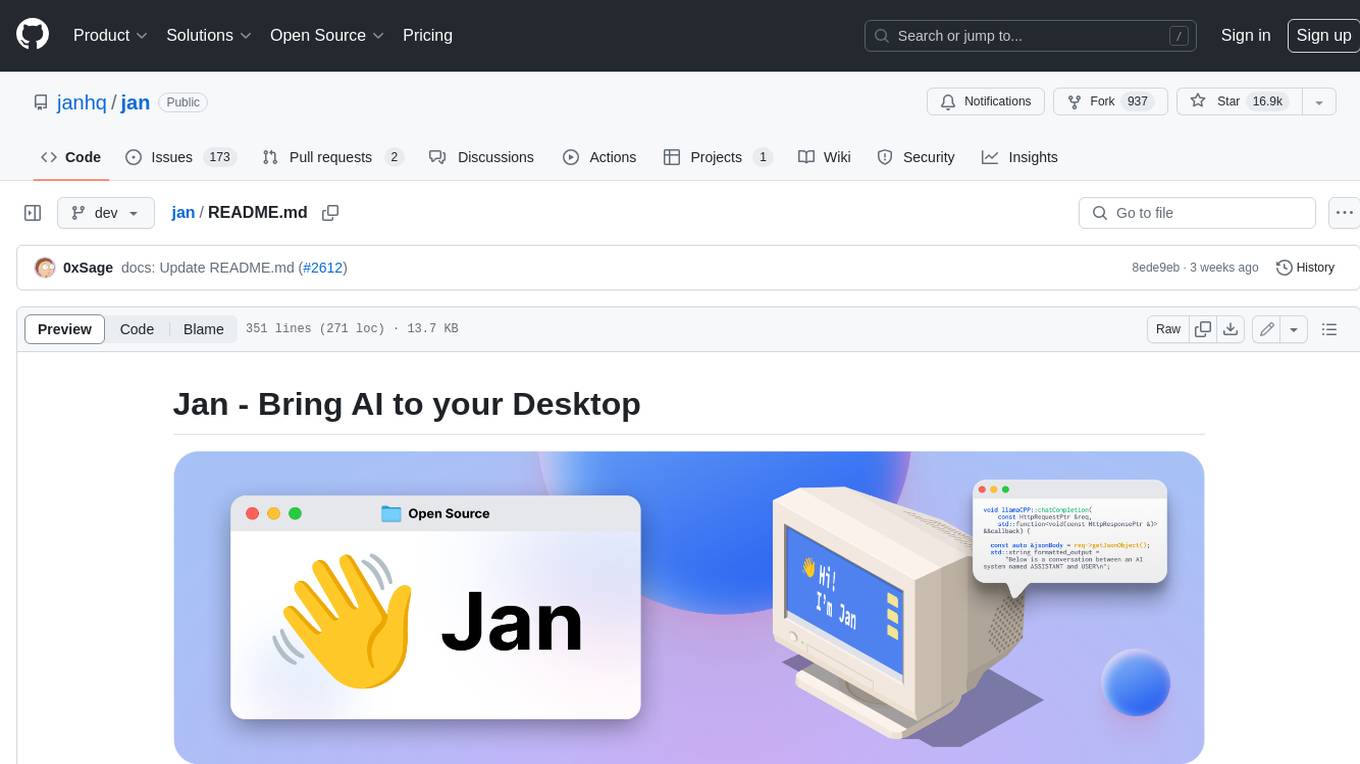

jan

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer. It supports universal architectures, including Nvidia GPUs, Apple M-series, Apple Intel, Linux Debian, and Windows x64. Jan is currently in development, so expect breaking changes and bugs. It is lightweight and embeddable, and can be used on its own within your own projects.

astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

natively-cluely-ai-assistant

Natively is a free, open-source, privacy-first AI assistant designed to help users in real time during meetings, interviews, presentations, and conversations. Unlike traditional AI tools that work after the conversation, Natively operates while the conversation is happening. It runs as an invisible, always-on-top desktop overlay, listens when prompted, observes the screen content, and provides instant, context-aware assistance. The tool is fully transparent, customizable, and grants users complete control over local vs cloud AI, data, and credentials.

lancedb

LanceDB is an open-source vector-search database built with persistent storage, which greatly simplifies retrieval, filtering, and management of embeddings. Key features include: production-scale vector search with no servers to manage; storing, querying, and filtering vectors, metadata, and multi-modal data (text, images, videos, point clouds, and more); support for vector similarity search, full-text search, and SQL; native Python and JavaScript/TypeScript support; zero-copy automatic versioning that manages versions of your data without extra infrastructure; GPU support for building vector indexes; and ecosystem integrations with LangChain 🦜️🔗, LlamaIndex 🦙, Apache Arrow, Pandas, Polars, DuckDB, and more on the way. LanceDB's core is written in Rust 🦀 and is built using Lance, an open-source columnar format designed for performant ML workloads.

CBbot

CBbot is an AI-powered coding assistant for macOS that helps users write code more efficiently, process documents, and automate tasks. It offers easy installation, built-in AI coding capabilities, auto configuration, and smart tools. Users can download CBbot for macOS 10.15 or higher, with Apple Silicon or Intel chip, and at least 6GB memory and 10GB disk space. The tool requires an internet connection for AI features. CBbot assists users in installing Docker Desktop, binding keys, troubleshooting, and using various skills for document processing and automation tasks. It also provides community support, billing based on usage, and network tips for using overseas AI models.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

MentraOS

MentraOS is an open source operating system designed for smart glasses. It simplifies the development of smart glasses apps by handling pairing, connection, data streaming, and cross-compatibility. Developers can create apps using the TypeScript SDK quickly and easily, with access to smart glasses I/O components like displays, microphones, cameras, and speakers. The platform emphasizes cross-compatibility, speed of app development, control over device features, and easy distribution to users. The MentraOS Community is dedicated to promoting open, cross-compatible, and user-controlled personal computing through the development and support of MentraOS.

atom

Atom is an open-source, self-hosted AI agent platform that allows users to automate workflows by interacting with AI agents. Users can speak or type requests, and Atom's specialty agents can plan, verify, and execute complex workflows across various tech stacks. Unlike SaaS alternatives, Atom runs entirely on the user's infrastructure, ensuring data privacy. The platform offers features such as voice interface, specialty agents for sales, marketing, and engineering, browser and device automation, universal memory and context, agent governance system, deep integrations, dynamic skills, and more. Atom is designed for business automation, multi-agent workflows, and enterprise governance.

claude-007-agents

Claude Code Agents is an open-source AI agent system designed to enhance development workflows by providing specialized AI agents for orchestration, resilience engineering, and organizational memory. It combines agents with specialized expertise across technologies, an AI system with organizational memory, and an agent orchestration system. Features include engineering excellence by design, an advanced orchestration system, Task Master integration, live MCP integrations, professional-grade workflows, and organizational intelligence. It is suitable for solo developers, small teams, enterprise teams, and open-source projects. The system requires a one-time bootstrap setup for each project to analyze the tech stack, select optimal agents, create configuration files, set up Task Master integration, and validate system readiness.

lmms-lab-writer

LMMs-Lab Writer is an AI-native LaTeX editor designed for researchers who prioritize ideas over syntax. It takes a local-first approach and offers AI agents for editing assistance, one-click LaTeX setup with automatic package installation, support for multiple languages, AI-powered workflows with OpenCode integration, Git integration for modern collaboration, and cross-platform compatibility. It is fully open source under the MIT license, and its documentation includes a comparison with Overleaf. The tool aims to streamline the writing and publishing process for researchers while ensuring data security and control.

For similar tasks

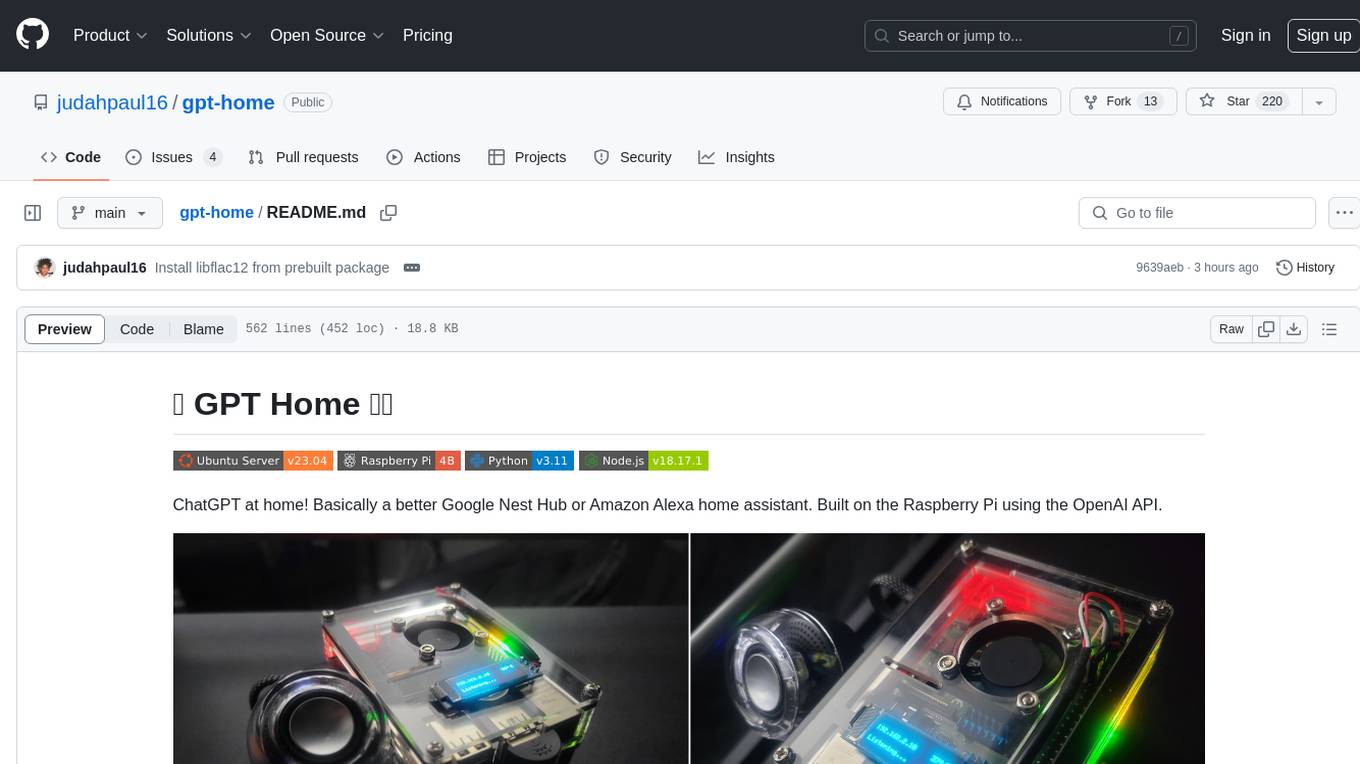

gpt-home

GPT Home is a project that allows users to build their own home assistant using Raspberry Pi and OpenAI API. It serves as a guide for setting up a smart home assistant similar to Google Nest Hub or Amazon Alexa. The project integrates various components like OpenAI, Spotify, Philips Hue, and OpenWeatherMap to provide a personalized home assistant experience. Users can follow the detailed instructions provided to build their own version of the home assistant on Raspberry Pi, with optional components for customization. The project also includes system configurations, dependencies installation, and setup scripts for easy deployment. Overall, GPT Home offers a DIY solution for creating a smart home assistant using Raspberry Pi and OpenAI technology.

noether

Noether is Emmi AI's open software framework for Engineering AI. It is built on transformer building blocks, delivering the full engineering stack for building, training, and operating industrial simulation models across engineering verticals. The framework eliminates the need for component re-engineering or an in-house deep learning team. Noether features a modular transformer architecture optimized for physical systems, hardware agnostic execution across CPU, MPS, and NVIDIA GPUs, industrial-grade design for high-fidelity simulations, and built-in support for Multi-GPU and SLURM cluster environments.

For similar jobs

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize utilization of resources.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker HyperPod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (PyTorch DDP/FSDP, MegatronLM, NemoMegatron...).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM-based chatbot app, on Kubernetes with a Helm chart.