aigne-hub

AIGNE Hub is the default multi-provider LLM/GPT adapter and API key usage manager for all AIGNE-based apps and Blocklets.

Stars: 387

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. It provides self-hosting capabilities, multi-provider management, unified security, usage analytics, flexible billing, and seamless integration with the AIGNE framework. The tool supports various AI providers and two deployment scenarios: enterprise self-hosting and service provider mode. Users can deploy and configure AI providers, enable billing, and use core capabilities such as chat completions, image generation, embeddings, and RESTful APIs. AIGNE Hub offers secure access, encrypted API key management, user permissions, and audit logging. It is built with the AIGNE Framework, Node.js, TypeScript, React, SQLite, and Blocklet for cloud-native deployment.

README:

The Unified AI Gateway for the AIGNE Ecosystem

Formerly AI Kit - Now evolved into the comprehensive AIGNE Hub

AIGNE Hub is a unified AI gateway that manages connections to multiple LLM and AIGC providers, eliminating the complexity of handling API keys, usage tracking, and billing across different AI services. Built as a Blocklet using the AIGNE framework, it evolved from our AI Kit project to become the backbone of the entire AIGNE ecosystem.

Whether you're building applications with the AIGNE framework, using AIGNE Studio, or developing with the AIGNE CLI, AIGNE Hub seamlessly provides unified AI capabilities behind the scenes.

- 🏠 Self-Hosting Ready: Deploy your own instance for complete control and privacy

- 🔌 Multi-Provider Management: Connect to 8+ AI providers through one interface

- 🔐 Unified Security: Encrypted API key storage with fine-grained access controls

- 📊 Usage Analytics: Comprehensive tracking and cost analysis

- 💰 Flexible Billing: Run internally or offer as a service with built-in credit system

- ⚡ Seamless Integration: Works out-of-the-box with AIGNE framework applications

- OpenAI - GPT models, DALL-E, Embeddings

- Anthropic - Claude models

- Amazon Bedrock - AWS hosted models

- Google Gemini - Gemini Pro, Vision

- DeepSeek - Advanced reasoning models

- Ollama - Local model deployment

- OpenRouter - Access to multiple providers

- xAI - Grok models

- Doubao - Doubao AI models

- Poe - Poe AI platform

- And more providers being added...
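The usage example in this README selects a model with the string `openai/gpt-3.5-turbo`, which suggests that models are addressed as `provider/model`. A minimal sketch of splitting such an identifier follows. `parseModelId` and the `ModelRef` type are hypothetical helpers for illustration, not part of the AIGNE Hub API.

```typescript
// Hypothetical shape for a parsed "provider/model" identifier.
interface ModelRef {
  provider: string;
  model: string;
}

// Hypothetical helper: splits "provider/model" into its two parts.
function parseModelId(id: string): ModelRef {
  const slash = id.indexOf("/");
  // Reject identifiers with no slash, an empty provider, or an empty model.
  if (slash <= 0 || slash === id.length - 1) {
    throw new Error(`Expected "provider/model", got "${id}"`);
  }
  // Split on the first slash only, so model names may contain further slashes.
  return { provider: id.slice(0, slash), model: id.slice(slash + 1) };
}

console.log(parseModelId("openai/gpt-3.5-turbo"));
```

Splitting on the first slash only is a design choice made here so that model names which themselves contain a slash would still parse.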

Perfect for internal teams and organizations requiring data control:

- Deploy within your infrastructure

- No external billing - pay providers directly

- Keep all data within your security perimeter

- Ideal for corporate AI initiatives and development teams

Turn AIGNE Hub into a customer-facing AI service:

- Enable credit billing with Payment Kit integration

- Set custom pricing and profit margins

- Automatic user onboarding with starter credits

- Comprehensive billing and usage management

- Perfect for AI service providers and SaaS platforms
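To make the pricing bullets above concrete, here is a rough sketch of how a credit charge could be derived from provider cost plus a profit margin. The rate fields, the `creditsPerUsd` conversion, and the formula itself are assumptions for illustration. The real rates are whatever you configure in AIGNE Hub and Payment Kit.

```typescript
// Hypothetical per-model rate, in USD per million tokens.
interface ModelRate {
  inputUsdPerMillionTokens: number;
  outputUsdPerMillionTokens: number;
}

// Token counts for a single request.
interface TokenUsage {
  inputTokens: number;
  outputTokens: number;
}

// What the upstream provider would bill for this request.
function providerCostUsd(rate: ModelRate, usage: TokenUsage): number {
  const input = usage.inputTokens * rate.inputUsdPerMillionTokens;
  const output = usage.outputTokens * rate.outputUsdPerMillionTokens;
  return (input + output) / 1e6;
}

// Credits to deduct: provider cost, marked up by the margin, converted to credits.
function creditsToCharge(costUsd: number, marginPercent: number, creditsPerUsd: number): number {
  return costUsd * (1 + marginPercent / 100) * creditsPerUsd;
}

const exampleRate: ModelRate = { inputUsdPerMillionTokens: 0.5, outputUsdPerMillionTokens: 1.5 };
const exampleUsage: TokenUsage = { inputTokens: 2000, outputTokens: 1000 };
const cost = providerCostUsd(exampleRate, exampleUsage);
console.log(cost, creditsToCharge(cost, 20, 100));
```

With these made-up numbers the provider cost is 0.0025 USD, and a 20% margin at 100 credits per USD comes to roughly 0.3 credits (subject to floating-point rounding).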

- Deploy on Blocklet Server:

  - Visit Blocklet Store and search for "AIGNE Hub"

  - Click "Launch" and follow the installation wizard

- Configure AI Providers:

  - Access admin panel → Config → AI Providers

  - Add your API keys for desired providers

- Start Using: Your AIGNE Hub is ready for team usage with OAuth or direct API access

- Enable Billing: Install Payment Kit, configure rates, and set up customer flows

    // Using AIGNE Framework with AIGNE Hub
    import { AIGNEHubChatModel } from "@aigne/aigne-hub";

    const model = new AIGNEHubChatModel({
      url: "https://your-aigne-hub-url/api/v2/chat",
      accessKey: "your-oauth-access-key",
      model: "openai/gpt-3.5-turbo",
    });

    const result = await model.invoke({
      messages: "Hello, AIGNE Hub!",
    });

    console.log(result);

- Chat Completions: Conversational AI and text generation

- Image Generation: AI-powered image creation and editing

- Embeddings: Vector representations for semantic search

- Built-in Playground: Test models in real-time

- RESTful APIs: Standard HTTP endpoints for integration

- Credit-Based Billing: Flexible usage-based payments

- Custom Pricing: Set your own rates and profit margins

- Usage Tracking: Detailed consumption analytics

- Cost Analysis: Track spending across providers and models

- Payment Integration: Seamless credit purchases through Payment Kit

- OAuth Integration: Secure application access

- API Key Management: Encrypted storage with access controls

- User Management: Role-based permissions and access

- Audit Logging: Complete usage and access history
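As an illustration of the usage tracking and cost analysis items above, the sketch below groups usage records by provider and model and totals calls, tokens, and cost. The `UsageRecord` shape and `summarizeUsage` function are hypothetical. AIGNE Hub's actual storage schema and analytics API are not described in this README.

```typescript
// Hypothetical usage record, one per completed request.
interface UsageRecord {
  provider: string;
  model: string;
  totalTokens: number;
  costUsd: number;
}

// Running totals for one provider/model pair.
interface UsageTotal {
  calls: number;
  totalTokens: number;
  costUsd: number;
}

// Groups records by "provider/model" and accumulates calls, tokens, and cost.
function summarizeUsage(records: UsageRecord[]): Map<string, UsageTotal> {
  const totals = new Map<string, UsageTotal>();
  for (const record of records) {
    const key = `${record.provider}/${record.model}`;
    const total = totals.get(key) ?? { calls: 0, totalTokens: 0, costUsd: 0 };
    total.calls += 1;
    total.totalTokens += record.totalTokens;
    total.costUsd += record.costUsd;
    totals.set(key, total);
  }
  return totals;
}

const summary = summarizeUsage([
  { provider: "openai", model: "gpt-3.5-turbo", totalTokens: 1200, costUsd: 0.5 },
  { provider: "openai", model: "gpt-3.5-turbo", totalTokens: 800, costUsd: 0.25 },
  { provider: "anthropic", model: "claude", totalTokens: 500, costUsd: 0.125 },
]);
console.log(summary.get("openai/gpt-3.5-turbo"));
```

The model names and costs in the sample data are placeholders chosen only to show the grouping.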

Built with modern technologies for reliability and performance:

- AIGNE Framework: Latest version with enhanced integration

- Node.js & TypeScript: Robust backend with full type safety

- React 19: Modern frontend with latest React features

- SQLite: Local data storage with Sequelize ORM

- Blocklet: Cloud-native deployment and scaling

We welcome contributions! Please see CONTRIBUTING.md for detailed guidelines on:

- Development setup and workflow

- Code style and testing requirements

- Adding new AI providers

- Submitting pull requests

- Documentation: AIGNE Hub Docs

- Community: ArcBlock Community

- Issues: GitHub Issues

This project is licensed under the Elastic License 2.0. See the LICENSE file for details.

Transform your AI development workflow with AIGNE Hub - the central nervous system for all your generative AI needs.


Alternative AI tools for aigne-hub

Similar Open Source Tools

Zettelgarden

Zettelgarden is a human-centric, open-source personal knowledge management system that helps users develop and maintain their understanding of the world. It focuses on creating and connecting atomic notes, thoughtful AI integration, and scalability from personal notes to company knowledge bases. The project is actively evolving, with features subject to change based on community feedback and development priorities.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

talkcody

TalkCody is a free, open-source AI coding agent designed for developers who value speed, cost, control, and privacy. It offers true freedom to use any AI model without vendor lock-in, maximum speed through unique four-level parallelism, and complete privacy as everything runs locally without leaving the user's machine. With professional-grade features like multimodal input support, MCP server compatibility, and a marketplace for agents and skills, TalkCody aims to enhance development productivity and flexibility.

heurist-agent-framework

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

atom

Atom is an open-source, self-hosted AI agent platform that allows users to automate workflows by interacting with AI agents. Users can speak or type requests, and Atom's specialty agents can plan, verify, and execute complex workflows across various tech stacks. Unlike SaaS alternatives, Atom runs entirely on the user's infrastructure, ensuring data privacy. The platform offers features such as voice interface, specialty agents for sales, marketing, and engineering, browser and device automation, universal memory and context, agent governance system, deep integrations, dynamic skills, and more. Atom is designed for business automation, multi-agent workflows, and enterprise governance.

sparka

Sparka AI is a multi-provider AI chat tool that allows users to access various AI models like Claude, GPT-5, Gemini, and Grok through a single interface. It offers features such as document analysis, image generation, code execution, and research tools without the need for multiple subscriptions. The tool is open-source, production-ready, and provides capabilities for collaboration, secure authentication, attachment support, AI-powered image generation, syntax highlighting, resumable streams, chat branching, chat sharing, deep research, code execution, document creation, and web analytics. Built with modern technologies for scalability and performance, Sparka AI integrates with Vercel AI SDK, tRPC, Drizzle ORM, PostgreSQL, Redis, and AI SDK Gateway.

abi

ABI (Agentic Brain Infrastructure) is a Python-based AI Operating System designed to serve as the core infrastructure for building an Agentic AI Ontology Engine. It empowers organizations to integrate, manage, and scale AI-driven operations with multiple AI models, focusing on ontology, agent-driven workflows, and analytics. ABI emphasizes modularity and customization, providing a customizable framework aligned with international standards and regulatory frameworks. It offers features such as configurable AI agents, ontology management, integrations with external data sources, data processing pipelines, workflow automation, analytics, and data handling capabilities.

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

openops

OpenOps is a No-Code FinOps automation platform designed to help organizations reduce cloud costs and streamline financial operations. It offers customizable workflows for automating key FinOps processes, comes with its own Excel-like database and visualization system, and enables collaboration between different teams. OpenOps integrates seamlessly with major cloud providers, third-party FinOps tools, communication platforms, and project management tools, providing a comprehensive solution for efficient cost-saving measures implementation.

astron-agent

Astron Agent is an enterprise-grade, commercial-friendly Agentic Workflow development platform that integrates AI workflow orchestration, model management, AI and MCP tool integration, RPA automation, and team collaboration features. It supports high-availability deployment, enabling organizations to rapidly build scalable, production-ready intelligent agent applications and establish their AI foundation for the future. The platform is stable, reliable, and business-friendly, with key features such as enterprise-grade high availability, intelligent RPA integration, ready-to-use tool ecosystem, and flexible large model support.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

Open-WebUI-Functions

Open-WebUI-Functions is a collection of Python-based functions that extend Open WebUI with custom pipelines, filters, and integrations. Users can interact with AI models, process data efficiently, and customize the Open WebUI experience. It includes features like custom pipelines, data processing filters, Azure AI support, N8N workflow integration, flexible configuration, secure API key management, and support for both streaming and non-streaming processing. The functions require an active Open WebUI instance, may need external AI services like Azure AI, and admin access for installation. Security features include automatic encryption of sensitive information like API keys. Pipelines include Azure AI Foundry, N8N, Infomaniak, and Google Gemini. Filters like Time Token Tracker measure response time and token usage. Integrations with Azure AI, N8N, Infomaniak, and Google are supported. Contributions are welcome, and the project is licensed under Apache License 2.0.

OpenChat

OS Chat is a free, open-source AI personal assistant that combines 40+ language models with powerful automation capabilities. It allows users to deploy background agents, connect services like Gmail, Calendar, Notion, GitHub, and Slack, and get things done through natural conversation. With features like smart automation, service connectors, AI models, chat management, interface customization, and premium features, OS Chat offers a comprehensive solution for managing digital life and workflows. It prioritizes privacy by being open source and self-hostable, with encrypted API key storage.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

For similar tasks

ai-gateway

LangDB AI Gateway is an open-source enterprise AI gateway built in Rust. It provides a unified interface to all LLMs using the OpenAI API format, focusing on high performance, enterprise readiness, and data control. The gateway offers features like comprehensive usage analytics, cost tracking, rate limiting, data ownership, and detailed logging. It supports various LLM providers and provides OpenAI-compatible endpoints for chat completions, model listing, embeddings generation, and image generation. Users can configure advanced settings, such as rate limiting, cost control, dynamic model routing, and observability with OpenTelemetry tracing. The gateway can be run with Docker Compose and integrated with MCP tools for server communication.

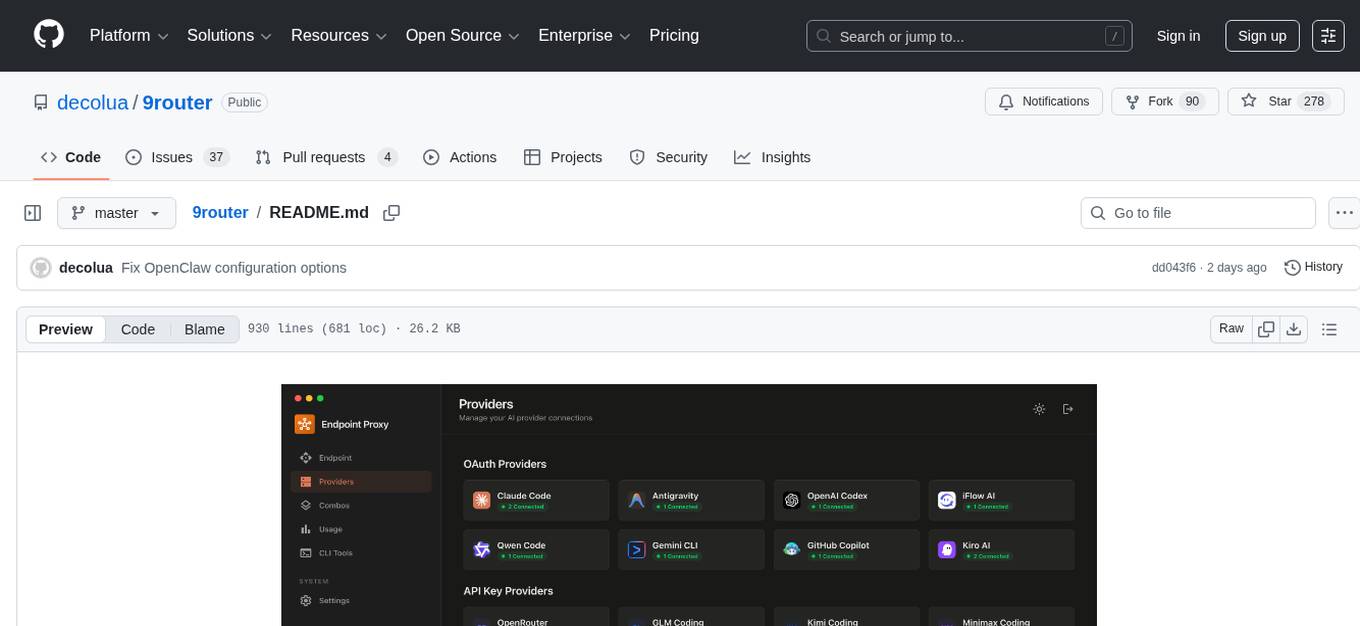

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

proxypal

ProxyPal is a desktop app that allows users to utilize multiple AI subscriptions (such as Claude, ChatGPT, Gemini, GitHub Copilot) with any coding tool. It acts as a bridge between various AI providers and coding tools, offering features like connecting to different AI services, GitHub Copilot integration, Antigravity support, usage analytics, request monitoring, and auto-configuration. The app supports multiple platforms and clients, providing a seamless experience for developers to leverage AI capabilities in their coding workflow. ProxyPal simplifies the process of using AI models in coding environments, enhancing productivity and efficiency.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.