aiohttp-sse

Server-sent events support for aiohttp

Stars: 230

aiohttp-sse is a library that provides support for server-sent events for aiohttp. Server-sent events are a way to send real-time updates from a server to a client. This can be useful for things like live chat, stock tickers, or any other application where you need to send updates to a client without having to wait for the client to request them.

README:

.. image:: https://github.com/aio-libs/aiohttp-sse/workflows/CI/badge.svg?event=push
    :target: https://github.com/aio-libs/aiohttp-sse/actions?query=event%3Apush+branch%3Amaster

.. image:: https://codecov.io/gh/aio-libs/aiohttp-sse/branch/master/graph/badge.svg
    :target: https://codecov.io/gh/aio-libs/aiohttp-sse

.. image:: https://pyup.io/repos/github/aio-libs/aiohttp-sse/shield.svg
    :target: https://pyup.io/repos/github/aio-libs/aiohttp-sse/
    :alt: Updates

.. image:: https://badges.gitter.im/Join%20Chat.svg
    :target: https://gitter.im/aio-libs/Lobby
    :alt: Chat on Gitter

The EventSource interface is used to receive server-sent events. It connects to a server over HTTP and receives events in text/event-stream format without closing the connection. aiohttp-sse provides support for server-sent events for aiohttp_.
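To make the ``text/event-stream`` format concrete, here is a minimal sketch of how a single event could be framed on the wire. ``format_sse`` is a hypothetical helper written for illustration, not part of the aiohttp-sse API, and it assumes ``\n`` line endings (the format also allows ``\r\n``).

```python
from typing import Optional


def format_sse(
    data: str,
    event: Optional[str] = None,
    id: Optional[str] = None,
    retry: Optional[int] = None,
) -> str:
    """Serialize one event using text/event-stream framing (illustrative only)."""
    lines = []
    if id is not None:
        lines.append(f"id: {id}")
    if event is not None:
        lines.append(f"event: {event}")
    if retry is not None:
        lines.append(f"retry: {retry}")
    # Every line of a multi-line payload gets its own "data:" field.
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    # A blank line marks the end of the event.
    return "\n".join(lines) + "\n\n"


message = format_sse("line one\nline two", event="tick", id="1")
```

The client concatenates consecutive ``data:`` lines with newlines, so the payload above arrives as a single two-line string.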

Installation is as simple as::

    $ pip install aiohttp-sse

.. code:: python

    import asyncio
    import json
    from datetime import datetime

    from aiohttp import web

    from aiohttp_sse import sse_response


    async def hello(request: web.Request) -> web.StreamResponse:
        async with sse_response(request) as resp:
            while resp.is_connected():
                time_dict = {"time": f"Server Time : {datetime.now()}"}
                data = json.dumps(time_dict, indent=2)
                print(data)
                await resp.send(data)
                await asyncio.sleep(1)
        return resp


    async def index(_request: web.Request) -> web.StreamResponse:
        html = """
            <html>
                <body>
                    <script>
                        var eventSource = new EventSource("/hello");
                        eventSource.addEventListener("message", event => {
                            document.getElementById("response").innerText = event.data;
                        });
                    </script>
                    <h1>Response from server:</h1>
                    <div id="response"></div>
                </body>
            </html>
        """
        return web.Response(text=html, content_type="text/html")


    app = web.Application()
    app.router.add_route("GET", "/hello", hello)
    app.router.add_route("GET", "/", index)
    web.run_app(app, host="127.0.0.1", port=8080)
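The example sends JSON produced with ``indent=2``, so each payload spans several lines. Under the EventSource format, a multi-line payload is carried as several ``data:`` lines that the client joins back together. A browser does this automatically. For any other client, the following is a rough sketch of that reassembly. ``parse_sse`` is a hypothetical helper, not part of aiohttp-sse, and it covers only the ``data``, ``event``, ``id``, and ``retry`` fields.

```python
import json
from typing import Dict, Iterable, Iterator, List


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per event from an iterable of text/event-stream lines."""
    event: Dict[str, str] = {}
    data: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the event, if any data was collected.
            if data:
                event["data"] = "\n".join(data)
                yield event
            event, data = {}, []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # only a single leading space is stripped
        if field == "data":
            data.append(value)
        elif field in ("event", "id", "retry"):
            event[field] = value


# Sample stream written by hand for illustration, not captured server output.
stream = [
    "event: tick",
    "data: {",
    'data:   "time": "now"',
    "data: }",
    "",
    ": ping",
    "data: second",
    "",
]
events = list(parse_sse(stream))
payload = json.loads(events[0]["data"])
```

In practice the lines would come from the HTTP response body of ``/hello`` rather than from a list.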

EventSource protocol references:

- http://www.w3.org/TR/2011/WD-eventsource-20110310/
- https://developer.mozilla.org/en-US/docs/Server-sent_events/Using_server-sent_events

Requirements:

- aiohttp_ 3+

aiohttp-sse is offered under the Apache 2.0 license.

.. _Python: https://www.python.org
.. _asyncio: http://docs.python.org/3/library/asyncio.html
.. _aiohttp: https://github.com/aio-libs/aiohttp


Alternative AI tools for aiohttp-sse

Similar Open Source Tools

aiohttp-sse

aiohttp-sse is a library that provides support for server-sent events for aiohttp. Server-sent events are a way to send real-time updates from a server to a client. This can be useful for things like live chat, stock tickers, or any other application where you need to send updates to a client without having to wait for the client to request them.

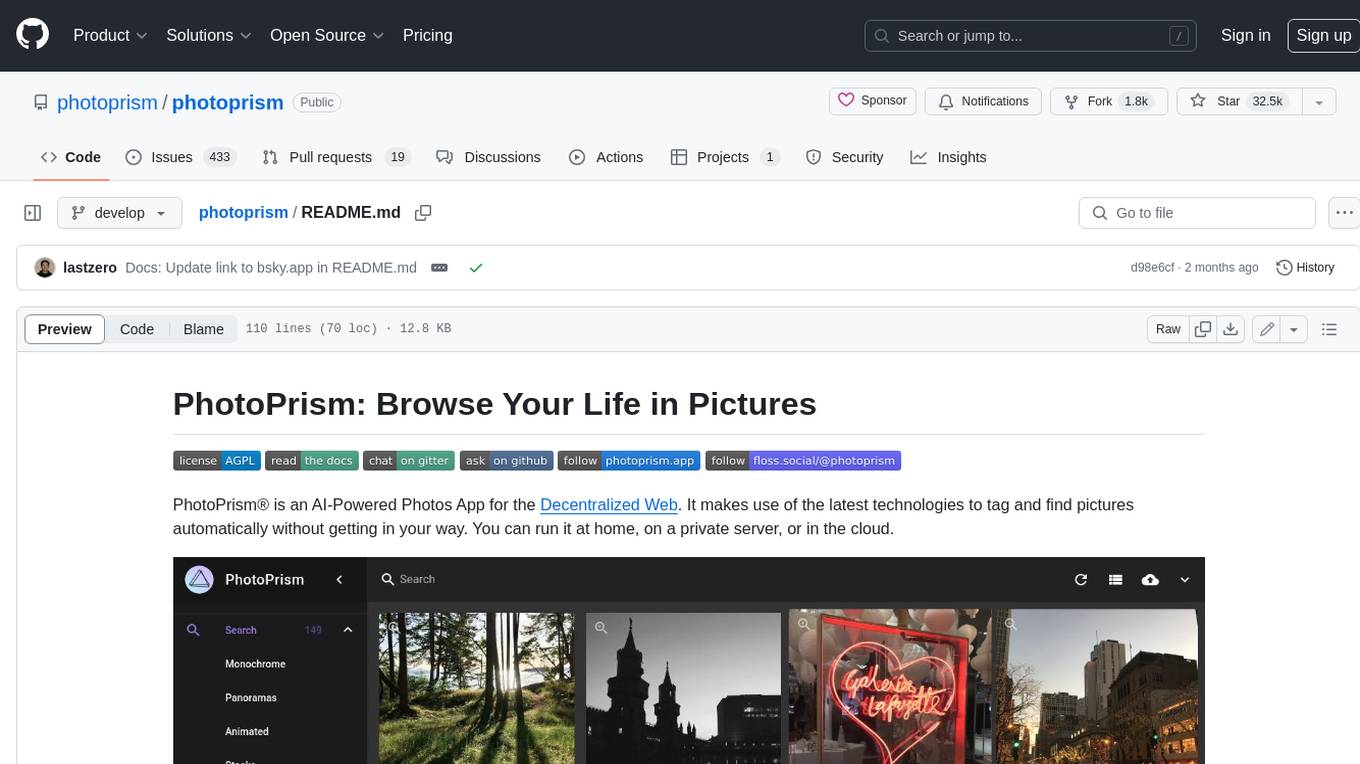

photoprism

PhotoPrism is an AI-powered photos app for the decentralized web. It uses the latest technologies to tag and find pictures automatically without getting in your way. You can run it at home, on a private server, or in the cloud.

tiledesk

Tiledesk is an open-source live chat platform with integrated chatbots, written in Node.js and Express. It provides a multi-channel platform for Web, Android, and iOS, offering out-of-the-box chatbots that work alongside humans. Users can automate conversations using native chatbot technology powered by AI, connect applications via APIs or webhooks, deploy visual applications within conversations, and enable applications to interact with chatbots or end users. Chatbot scripts with images and buttons can run on channels such as WhatsApp, Facebook Messenger, and Telegram. The project includes Tiledesk Server, Dashboard, Design Studio, Chat21 ionic, Web Widget, Server, Http Server, MongoDB, and a proxy. It offers Helm charts for Kubernetes deployment, but customization is recommended for production environments, such as integrating with an external MongoDB or with monitoring and logging tools. Enterprise customers can request private Docker images by contacting Tiledesk by email.

auth0-assistant0

Assistant0 is an AI personal assistant that consolidates digital life by accessing multiple tools to help users stay organized and efficient. It integrates with Gmail for email summaries, manages calendars, retrieves user information, enables online shopping with human-in-the-loop authorizations, uploads and retrieves documents, lists GitHub repositories and events, and soon provides Slack notifications and Google Drive access. With tool-calling capabilities, it acts as a digital personal secretary, enhancing efficiency and ushering in intelligent automation. Security challenges are addressed by using Auth0 for secure tool calling with scoped access tokens, ensuring user data protection.

Elite-Dangerous-AI-Integration

Elite-Dangerous-AI-Integration aims to provide a seamless and efficient experience for commanders by integrating Elite:Dangerous with various services for Speech-to-Text, Text-to-Speech, and Large Language Models. The AI reacts to game events, given commands, and can perform actions like taking screenshots or fetching information from APIs. It is designed for all commanders, enhancing roleplaying, replacing third-party websites, and assisting with tutorials.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

az-hop

Azure HPC On-Demand Platform (az-hop) provides an end-to-end deployment mechanism for a base HPC infrastructure on Azure. It delivers a complete HPC cluster solution ready for users to run applications, which is easy to deploy and manage for HPC administrators. az-hop leverages various Azure building blocks and can be used as-is or easily customized and extended to meet any uncovered requirements. Industry-standard tools like Terraform, Ansible, and Packer are used to provision and configure this environment, which contains:

- An HPC OnDemand Portal for all user access, remote shell access, remote visualization access, job submission, file access, and more
- An Active Directory for user authentication and domain control
- Open PBS or SLURM as a Job Scheduler
- Dynamic resources provisioning and autoscaling is done by Azure CycleCloud pre-configured job queues and integrated health-checks to quickly avoid non-optimal nodes
- A Jumpbox to provide admin access
- A common shared file system for home directory and applications is delivered by Azure Netapp Files
- Grafana dashboards to monitor your cluster
- Remote Visualization with noVNC and GPU acceleration with VirtualGL

superinterface

Superinterface is an AI assistants library for building AI capabilities into your app or website. It allows you to use React components and hooks to create AI-first assistants-based interfaces such as chats and wizards. The project is currently in the process of writing documentation. For more information, visit https://superinterface.ai. Examples are hosted at https://examples-next.superinterface.ai.

airsync-android

Android app for AirSync 2.0 built with Kotlin Jetpack Compose. Users can connect using a QR code scan and save the last device for easier re-connection. The app is developed with gratitude to the community, AI research for assistance, and various contributors. It aims to provide a seamless experience for users to manage notifications efficiently.

zodiac

Zodiac is a frontend tool designed to interact with Google's Gemini Pro using vanilla JS. It provides a user-friendly interface for accessing the functionalities of Google AI Suite. The tool simplifies the process of utilizing Gemini Pro's capabilities through a straightforward web application. Users can easily integrate their API key and access various features offered by Google AI Suite. Zodiac aims to streamline the interaction with Gemini Pro and enhance the user experience by offering a simple and intuitive interface for managing AI tasks.

StoryToolKit

StoryToolkitAI is a film editing tool that utilizes AI to transcribe, index scenes, search through footage, and create stories. It offers features such as automatic transcription, translation, story creation, speaker detection, project file management, and more. The tool works locally on your machine and integrates with DaVinci Resolve Studio 18. It aims to streamline the editing process by leveraging AI capabilities and enhancing user efficiency.

ai-shifu

AI-Shifu is an AI-led chat flow tool powered by LLM that provides an interactive and immersive experience for users. It allows users to follow a preset chat flow while being able to ask questions and affect the conversation. The tool can make personalized outputs based on user identity, interests, and preferences, making users feel like they are receiving one-on-one service. It is suitable for education, storytelling, product guides, surveys, and game NPC scenarios.

vibes.diy

vibes.diy is a tool that allows users to easily create interactive mini apps using AI technology. With zero setup required, users can turn their ideas into interactive apps instantly. The tool provides features such as browsing and returning to previous creations, auto-saving work securely, and seeing app changes in real-time. Every app created is automatically saved, allowing users to browse creations, return to previous work, keep track of building history, and save screenshots of apps.

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, Discord community, guides, and contribution opportunities. It consists of a Flask app, Chrome extension, similarity search index creation script, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

Conversational-Azure-OpenAI-Accelerator

The Conversational Azure OpenAI Accelerator is a tool designed to provide rapid, no-cost custom demos tailored to customer use cases, from internal HR/IT to external contact centers. It focuses on top use cases of GenAI conversation and summarization, plus live backend data integration. The tool automates conversations across voice and text channels, providing a valuable way to save money and improve customer and employee experience. By combining Azure OpenAI + Cognitive Search, users can efficiently deploy a ChatGPT experience using web pages, knowledge base articles, and data sources. The tool enables simultaneous deployment of conversational content to chatbots, IVR, voice assistants, and more in one click, eliminating the need for in-depth IT involvement. It leverages Microsoft's advanced AI technologies, resulting in a conversational experience that can converse in human-like dialogue, respond intelligently, and capture content for omni-channel unified analytics.

For similar tasks


lettabot

LettaBot is a personal AI assistant that operates across multiple messaging platforms including Telegram, Slack, Discord, WhatsApp, and Signal. It offers features like unified memory, persistent memory, local tool execution, voice message transcription, scheduling, and real-time message updates. Users can interact with LettaBot through various commands and setup wizards. The tool can be used for controlling smart home devices, managing background tasks, connecting to Letta Code, and executing specific operations like file exploration and internet queries. LettaBot ensures security through outbound connections only, restricted tool execution, and access control policies. Development and releases are automated, and troubleshooting guides are provided for common issues.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.