gromacs_copilot

Let LLM run your MDs.

Stars: 172

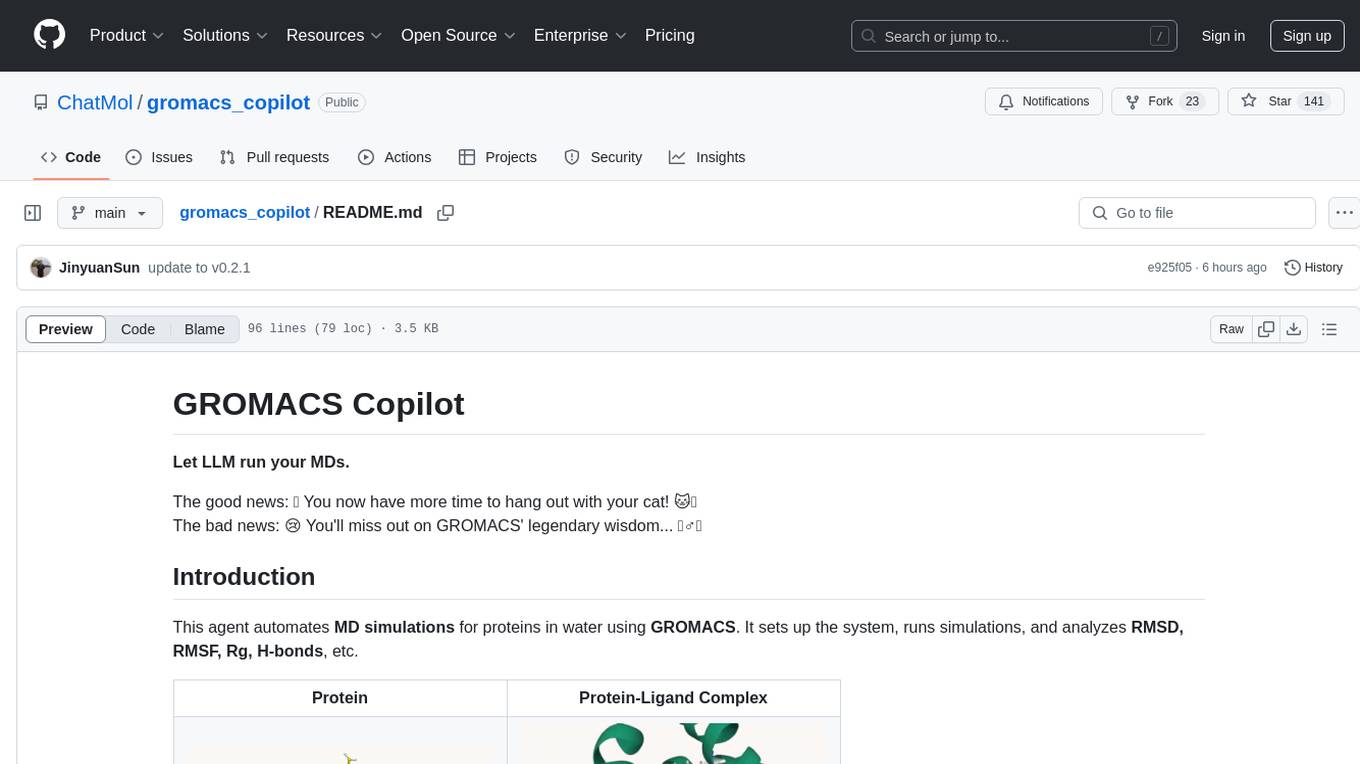

GROMACS Copilot is an agent designed to automate molecular dynamics simulations for proteins in water using GROMACS. It handles system setup, simulation execution, and result analysis automatically, providing outputs such as RMSD, RMSF, Rg, and H-bonds. Users can interact with the agent through prompts and API keys from DeepSeek and OpenAI. The tool aims to simplify the process of running MD simulations, allowing users to focus on other tasks while it handles the technical aspects of the simulations.

README:

Let LLM run your MDs.

The good news: 🎉 You now have more time to hang out with your cat! 🐱💖

The bad news: 😢 You'll miss out on GROMACS' legendary wisdom... 🧙♂️💬

This agent automates MD simulations for proteins in water using GROMACS. It sets up the system, runs simulations, and analyzes RMSD, RMSF, Rg, H-bonds, etc.

| Protein | Protein-Ligand Complex |

|

|

| A demo of output report | A demo of output report |

- Install the package

pip install git+https://github.com/ChatMol/gromacs_copilot.git

conda install -c conda-forge acpype # for protein-ligand complex

conda install -c conda-forge gmx_mmpbsa # for MM-PBSA/GBSA analysis- Prepare a working dir and a input pdb

mkdir md_workspace && cd md_workspace

wget https://files.rcsb.org/download/1PGA.pdb

grep -v HOH 1PGA.pdb > 1pga_protein.pdb

cd ..gmx_copilot --workspace md_workspace/ \

--prompt "setup simulation system for 1pga_protein.pdb in the workspace" \

--api-key $DEEPSEEK_API_KEY \

--model deepseek-chat \

--url https://api.deepseek.com/chat/completionsgmx_copilot --workspace md_workspace/ \

--prompt "setup simulation system for 1pga_protein.pdb in the workspace" \

--api-key $OPENAI_API_KEY \

--model gpt-4o \

--url https://api.openai.com/v1/chat/completionsgmx_copilot --workspace md_workspace/ \

--prompt "setup simulation system for 1pga_protein.pdb in the workspace" \

--api-key $GEMINI_API_KEY \

--model gemini-2.0-flash \

--url https://generativelanguage.googleapis.com/v1beta/chat/completions- Agent mode The agent mode is good automation of a long acting trajectory of using tools.

gmx_copilot --workspace md_workspace/ \

--prompt "run 1 ns production md for 1pga_protein.pdb in the workspace, and analyze rmsd" \

--mode agentThe agent handles system setup, simulation execution, and result analysis automatically. 🚀

This project is dual-licensed under:

- GPLv3 (Open Source License)

- Commercial License (For proprietary use)

For commercial licensing, read this.

- 🤖 LLM sometimes struggles with selecting the correct group index. Double-checking the selection is recommended.

- ⚡ The interaction between LLM and

gmxprompt input isn't always seamless. Running commands based on suggestions can help you get the correct results more easily.

GROMACS Copilot is provided "as is" without warranty of any kind, express or implied. The authors and contributors disclaim all warranties including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Users employ this software at their own risk.

The authors bear no responsibility for any consequences arising from the use, misuse, or misinterpretation of this software or its outputs. Results obtained through GROMACS Copilot should be independently validated prior to use in research, publications, or decision-making processes.

This software is intended for research and educational purposes only. Users are solely responsible for ensuring compliance with applicable laws, regulations, and ethical standards in their jurisdiction.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gromacs_copilot

Similar Open Source Tools

gromacs_copilot

GROMACS Copilot is an agent designed to automate molecular dynamics simulations for proteins in water using GROMACS. It handles system setup, simulation execution, and result analysis automatically, providing outputs such as RMSD, RMSF, Rg, and H-bonds. Users can interact with the agent through prompts and API keys from DeepSeek and OpenAI. The tool aims to simplify the process of running MD simulations, allowing users to focus on other tasks while it handles the technical aspects of the simulations.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

Controllable-RAG-Agent

This repository contains a sophisticated deterministic graph-based solution for answering complex questions using a controllable autonomous agent. The solution is designed to ensure that answers are solely based on the provided data, avoiding hallucinations. It involves various steps such as PDF loading, text preprocessing, summarization, database creation, encoding, and utilizing large language models. The algorithm follows a detailed workflow involving planning, retrieval, answering, replanning, content distillation, and performance evaluation. Heuristics and techniques implemented focus on content encoding, anonymizing questions, task breakdown, content distillation, chain of thought answering, verification, and model performance evaluation.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

guidellm

GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. Key features include performance evaluation, resource optimization, cost estimation, and scalability testing.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

OM1

OpenMind's OM1 is a modular AI runtime empowering developers to create and deploy multimodal AI agents across digital environments and physical robots. OM1 agents process diverse inputs like web data, social media, camera feeds, and LIDAR, enabling actions including motion, autonomous navigation, and natural conversations. The goal is to create highly capable human-focused robots that are easy to upgrade and reconfigure for different physical form factors. OM1 features a modular architecture, supports new hardware via plugins, offers web-based debugging display, and pre-configured endpoints for various services.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

synthora

Synthora is a lightweight and extensible framework for LLM-driven Agents and ALM research. It aims to simplify the process of building, testing, and evaluating agents by providing essential components. The framework allows for easy agent assembly with a single config, reducing the effort required for tuning and sharing agents. Although in early development stages with unstable APIs, Synthora welcomes feedback and contributions to enhance its stability and functionality.

poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

neuron-ai

Neuron is a PHP framework for creating and orchestrating AI Agents, providing tools for the entire agentic application development lifecycle. It allows integration of AI entities in existing PHP applications with a powerful and flexible architecture. Neuron offers tutorials and educational content to help users get started using AI Agents in their projects. The framework supports various LLM providers, tools, and toolkits, enabling users to create fully functional agents for tasks like data analysis, chatbots, and structured output. Neuron also facilitates monitoring and debugging of AI applications, ensuring control over agent behavior and decision-making processes.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

easydist

EasyDist is an automated parallelization system and infrastructure designed for multiple ecosystems. It offers usability by making parallelizing training or inference code effortless with just a single line of change. It ensures ecological compatibility by serving as a centralized source of truth for SPMD rules at the operator-level for various machine learning frameworks. EasyDist decouples auto-parallel algorithms from specific frameworks and IRs, allowing for the development and benchmarking of different auto-parallel algorithms in a flexible manner. The architecture includes MetaOp, MetaIR, and the ShardCombine Algorithm for SPMD sharding rules without manual annotations.

For similar tasks

gromacs_copilot

GROMACS Copilot is an agent designed to automate molecular dynamics simulations for proteins in water using GROMACS. It handles system setup, simulation execution, and result analysis automatically, providing outputs such as RMSD, RMSF, Rg, and H-bonds. Users can interact with the agent through prompts and API keys from DeepSeek and OpenAI. The tool aims to simplify the process of running MD simulations, allowing users to focus on other tasks while it handles the technical aspects of the simulations.

For similar jobs

AlphaFold3

AlphaFold3 is an implementation of the Alpha Fold 3 model in PyTorch for accurate structure prediction of biomolecular interactions. It includes modules for genetic diffusion and full model examples for forward pass computations. The tool allows users to generate random pair and single representations, operate on atomic coordinates, and perform structure predictions based on input tensors. The implementation also provides functionalities for training and evaluating the model.

biochatter

Generative AI models have shown tremendous usefulness in increasing accessibility and automation of a wide range of tasks. This repository contains the `biochatter` Python package, a generic backend library for the connection of biomedical applications to conversational AI. It aims to provide a common framework for deploying, testing, and evaluating diverse models and auxiliary technologies in the biomedical domain. BioChatter is part of the BioCypher ecosystem, connecting natively to BioCypher knowledge graphs.

admet_ai

ADMET-AI is a platform for ADMET prediction using Chemprop-RDKit models trained on ADMET datasets from the Therapeutics Data Commons. It offers command line, Python API, and web server interfaces for making ADMET predictions on new molecules. The platform can be easily installed using pip and supports GPU acceleration. It also provides options for processing TDC data, plotting results, and hosting a web server. ADMET-AI is a machine learning platform for evaluating large-scale chemical libraries.

AI-Drug-Discovery-Design

AI-Drug-Discovery-Design is a repository focused on Artificial Intelligence-assisted Drug Discovery and Design. It explores the use of AI technology to accelerate and optimize the drug development process. The advantages of AI in drug design include speeding up research cycles, improving accuracy through data-driven models, reducing costs by minimizing experimental redundancies, and enabling personalized drug design for specific patients or disease characteristics.

bionemo-framework

NVIDIA BioNeMo Framework is a collection of programming tools, libraries, and models for computational drug discovery. It accelerates building and adapting biomolecular AI models by providing domain-specific, optimized models and tooling for GPU-based computational resources. The framework offers comprehensive documentation and support for both community and enterprise users.

New-AI-Drug-Discovery

New AI Drug Discovery is a repository focused on the applications of Large Language Models (LLM) in drug discovery. It provides resources, tools, and examples for leveraging LLM technology in the pharmaceutical industry. The repository aims to showcase the potential of using AI-driven approaches to accelerate the drug discovery process, improve target identification, and optimize molecular design. By exploring the intersection of artificial intelligence and drug development, this repository offers insights into the latest advancements in computational biology and cheminformatics.

gromacs_copilot

GROMACS Copilot is an agent designed to automate molecular dynamics simulations for proteins in water using GROMACS. It handles system setup, simulation execution, and result analysis automatically, providing outputs such as RMSD, RMSF, Rg, and H-bonds. Users can interact with the agent through prompts and API keys from DeepSeek and OpenAI. The tool aims to simplify the process of running MD simulations, allowing users to focus on other tasks while it handles the technical aspects of the simulations.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.