Best AI tools for Testing

20 - AI tool Sites

QuarkIQL

QuarkIQL is a generative testing tool for computer vision APIs. It allows users to create custom test images and requests with just a few clicks. QuarkIQL also provides a log of your queries so you can run more experiments without starting from square one.

Tricentis

Tricentis is an AI-powered testing tool that offers a comprehensive set of test automation capabilities to address various testing challenges. It provides end-to-end test automation solutions for a wide range of applications, including Salesforce, mobile testing, performance testing, and data integrity testing. Tricentis leverages advanced ML technologies to enable faster and smarter testing, ensuring quality at speed with reduced risk, time, and costs. The platform also offers continuous performance testing, change and data intelligence, and model-based, codeless test automation for mobile applications.

Testlio

Testlio is a trusted software testing partner that maximizes software testing impact by offering comprehensive solutions for quality challenges. They provide a range of services including manual and automated testing, tailored testing strategies for diverse industries, and a cutting-edge platform for seamless collaboration. Testlio's AI-enhanced solutions help reduce risk in high-stakes releases and ensure smarter decision-making. With a focus on quality, reliability, and efficiency, Testlio is a proven partner for mission-critical quality assurance.

BlazeMeter

BlazeMeter by Perforce is an AI-powered continuous testing platform designed to automate testing processes and enhance software quality. It offers effortless test creation, seamless test execution, instant issue analysis, and self-sustaining maintenance. BlazeMeter covers performance, functional, scriptless, and API testing, as well as monitoring, test data, and service virtualization. The platform enables teams to speed up digital transformation, shift quality left, and streamline DevOps practices. With AI analytics, scriptless test creation, and UX testing capabilities, BlazeMeter empowers users to drive innovation, accuracy, and speed in their test automation efforts.

Rainforest QA

Rainforest QA is an AI-powered test automation platform designed for SaaS startups to streamline and accelerate their testing processes. It offers AI-accelerated testing, no-code test automation, and expert QA services to help teams achieve reliable test coverage and faster release cycles. Rainforest QA's platform integrates with popular tools, provides detailed insights for easy debugging, and ensures visual-first testing for a seamless user experience. With a focus on automating end-to-end tests, Rainforest QA aims to eliminate QA bottlenecks and help teams ship bug-free code with confidence.

QA Wolf

QA Wolf is an AI-native service that delivers 80% automated end-to-end test coverage for web and mobile apps in weeks, not years. It automates hundreds of tests using Playwright code for web and Appium for mobile, providing reliable test results on every run. With features like 100% parallel run infrastructure, zero flake guarantee, and unlimited test runs, QA Wolf aims to help software teams ship better software faster by taking QA completely off their plate.

Keploy

Keploy is an open-source AI-powered API, integration, and unit testing agent designed for developers. It offers a unified testing platform that uses AI to write and validate tests, maximizing coverage and minimizing effort. With features like automated test generation, record-and-replay for integration tests, and API testing automation, Keploy aims to streamline the testing process for developers. The platform also provides GitHub PR unit test agents, centralized reporting dashboards, and smarter test deduplication to enhance testing efficiency and effectiveness.

Momentic

Momentic is an AI testing tool that automates testing for software applications. It streamlines regression testing, production monitoring, and UI automation. Momentic is designed to be simple to set up and easy to maintain, and its intuitive low-code editor helps teams create and deploy tests faster. The tool adapts to applications, saves time with automated test maintenance, and runs tests in the cloud, locally, or in CI/CD pipelines.

Meticulous

Meticulous is an AI tool that revolutionizes frontend testing by automatically generating and maintaining test suites for web applications. It eliminates the need for manual test writing and maintenance, ensuring comprehensive test coverage without the hassle. Meticulous uses AI to monitor user interactions, generate test suites, and provide visual end-to-end testing capabilities. It offers lightning-fast testing, parallelized across a compute cluster, and integrates seamlessly with existing test suites. The tool is battle-tested to handle complex applications and provides developers with confidence in their code changes.

Autify

Autify is an AI testing company focused on solving challenges in automation testing. They aim to make software testing faster and easier, enabling companies to release faster and maintain application stability. Their flagship product, Autify No Code, allows anyone to create automated end-to-end tests for applications. Zenes, their new product, simplifies the process of creating new software tests through AI. Autify is dedicated to innovation in the automation testing space and is trusted by leading organizations.

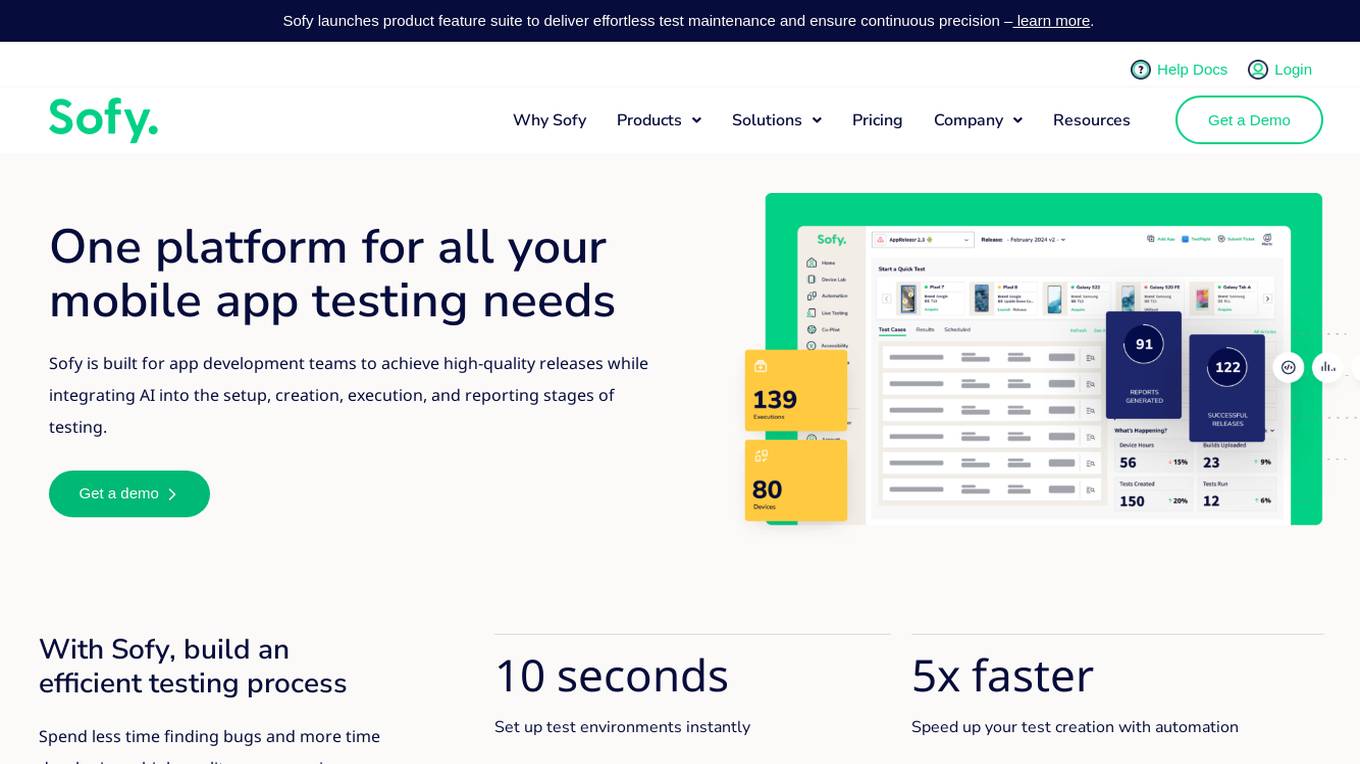

Sofy

Sofy is a revolutionary no-code testing platform for mobile applications that integrates AI to streamline the testing process. It offers features such as manual and ad-hoc testing, no-code automation, AI-powered test case generation, and real device testing. Sofy helps app development teams achieve high-quality releases by simplifying test maintenance and ensuring continuous precision. With a focus on efficiency and user experience, Sofy is trusted by top industries for its all-in-one testing solution.

RoostGPT

RoostGPT is an AI-driven testing copilot that offers automated test case generation using Large Language Models (LLMs). It helps in building reliable software by providing 100% test coverage every single time. RoostGPT leverages generative AI to automate test case generation, freeing up developer time and enhancing test accuracy and coverage. It also detects static vulnerabilities in artifacts like source code and logs to ensure data security. The platform is trusted by global financial institutions and industry leaders for its ability to fill gaps in test coverage and simplify testing and deployment processes.

金数据AI考试 (Jinshuju AI Exam)

The website offers an AI testing system that allows users to generate test questions instantly. It features a smart question bank, rapid question generation, and immediate test creation. Users can try out various test questions, such as generating knowledge test questions for car sales, company compliance standards, and real estate tax rate knowledge. The system ensures each test paper has similar content and difficulty levels. It also provides random question selection to reduce cheating possibilities. Employees can access the test link directly, view test scores immediately after submission, and check incorrect answers with explanations. The system supports single sign-on via WeChat for employee verification and record-keeping of employee rankings and test attempts. The platform prioritizes enterprise data security with a three-level network security rating, ISO/IEC 27001 information security management system, and ISO/IEC 27701 privacy information management system.

QA.tech

QA.tech is an advanced end-to-end testing application designed for B2B SaaS companies. It offers AI-powered testing solutions to help businesses ship faster, cut costs, and improve testing efficiency. The application features an AI agent named Jarvis that automates the testing process by scanning web apps, creating detailed memory structures, generating tests based on user interactions, and continuously testing for defects. QA.tech provides developer-friendly bug reports, supports various web frameworks, and integrates with CI/CD pipelines. It aims to revolutionize the testing process by offering faster, smarter, and more efficient testing solutions.

Supertest

Supertest is an AI copilot designed for software testing, offering a cutting-edge solution to revolutionize the way unit tests are written. By integrating seamlessly with VS Code, Supertest allows users to create unit tests in seconds with just one click. The tool automates various day-to-day QA engineering tasks using AI technology, providing a game-changing solution for development teams to save time and improve efficiency.

TestDriver

TestDriver is an AI-powered testing tool that helps developers automate their testing process. It can be integrated with GitHub and can test anything, right in the GitHub environment. TestDriver is easy to set up and use, and it can help developers save time and effort by offloading testing to AI. It uses Dashcam.io technology to provide end-to-end exploratory testing, allowing developers to see the screen, logs, and thought process as the AI completes its test.

Filuta AI

Filuta AI is an advanced AI application that redefines game testing with planning agents. It utilizes Composite AI with planning techniques to provide a 24/7 testing environment for smooth, bug-free releases. The application brings deep space technology to game testing, enabling intelligent agents to analyze game states, adapt in real time, and execute action sequences to achieve test goals. Filuta AI offers goal-driven testing, adaptive exploration, detailed insights, and shorter development cycles, making it a valuable tool for game developers, QA leads, game designers, automation engineers, and producers.

nunu.ai

nunu.ai is an AI-powered platform designed to revolutionize game testing by leveraging AI agents to conduct end-to-end tests at scale. The platform allows users to describe what they want to test in plain English, eliminating the need for coding or technical expertise. With powerful dashboards and detailed reports, nunu.ai provides real-time monitoring of test outcomes. The platform offers cost reduction, 24/7 availability, easy integration, human-like testing, multi-platform support, minimal maintenance, multiplayer testing capabilities, and enterprise-grade security.

Tusk

Tusk is an AI-powered automated testing platform that helps engineering teams generate high-quality unit and integration tests with codebase and business context. It runs on pull requests to suggest verified test cases, enabling faster and safer code shipping. Tusk offers features like shift-left testing, autonomous test generation, self-healing tests, and seamless integration with CI/CD pipelines. Trusted by engineering leaders at fast-growing companies, Tusk aims to improve test coverage and code quality while reducing the release cycle time.

Checksum.ai

Checksum.ai is an AI-powered end-to-end test automation tool that generates and maintains tests based on real user behavior. It helps users save time in development, achieve comprehensive test coverage, and ensure bug-free code deployment. The tool is self-maintaining, auto-healing, and integrates with popular platforms like Playwright, Cypress, GitHub, GitLab, Jenkins, and CircleCI. Checksum.ai is designed to streamline the testing process, allowing users to focus on shipping high-quality products with confidence.

1 - Open Source AI Tools

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

20 - OpenAI GPTs

Security Testing Advisor

Ensures software security through comprehensive testing techniques.

Usability Testing Advisor

Enhances user experience through rigorous usability testing and feedback.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

Product Testing Advisor

Ensures product quality through rigorous, systematic testing processes.

React Native Testing Library Owl

Assists in writing React Native tests using the React Native Testing Library.

Vitest Expert Testing Framework Multilingual

Multilingual AI for Vitest unit testing management.

MochaJS Expert in JavaScript unit testing

Multilingual assistant for unit testing with MochaJS.

Test Shaman

Test Shaman: Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.

Inspection AI

Expert in testing, inspection, and certification; compliant with OpenAI policies and developed on OpenAI.

Expert Testers

Chat with Software Testing Experts. Ping Jason if you don't want to be an expert or have feedback.

Mockito Mentor

Java testing consultant specializing in Mockito, based on the book Mockito Made Clear and related blog posts by Ken Kousen.