Best AI tools for "Optimize Model Performance"

20 - AI tool Sites

Valohai

Valohai is a scalable MLOps platform that enables Continuous Integration/Continuous Deployment (CI/CD) for machine learning and pipeline automation on-premises and across various cloud environments. It helps streamline complex machine learning workflows by offering framework-agnostic ML capabilities, automatic versioning with complete lineage of ML experiments, hybrid and multi-cloud support, scalability and performance optimization, streamlined collaboration among data scientists, IT, and business units, and smart orchestration of ML workloads on any infrastructure. Valohai also provides a knowledge repository for storing and sharing the entire model lifecycle, facilitating cross-functional collaboration, and allowing developers to build with total freedom using any libraries or frameworks.

SambaNova Systems

SambaNova Systems offers an enterprise-grade full stack platform, from chips to models, purpose-built for generative AI in the enterprise. It provides AI and deep learning capabilities intended to help customers outcompete their peers. The platform includes the SN40L Full Stack Platform with 1T+ parameter models, Composition of Experts, and Samba Apps. SambaNova also offers resources to accelerate AI journeys and solutions for industries such as financial services, healthcare, and manufacturing.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, AI chat app, code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

Intel Gaudi AI Accelerator Developer

The Intel Gaudi AI accelerator developer website provides resources, guidance, tools, and support for building, migrating, and optimizing AI models. It offers software, model references, libraries, containers, and tools for training and deploying Generative AI and Large Language Models. The site focuses on the Intel Gaudi accelerators, including tutorials, documentation, and support for developers to enhance AI model performance.

Teraflow.ai

Teraflow.ai is an AI-enablement company that specializes in helping businesses adopt and scale their artificial intelligence models. They offer services in data engineering, ML engineering, AI/UX, and cloud architecture. Teraflow.ai assists clients in fixing data issues, boosting ML model performance, and integrating AI into legacy customer journeys. Their team of experts deploys solutions quickly and efficiently, using modern practices and hyper scaler technology. The company focuses on making AI work by providing fixed pricing solutions, building team capabilities, and utilizing agile-scrum structures for innovation. Teraflow.ai also offers certifications in GCP and AWS, and partners with leading tech companies like HashiCorp, AWS, and Microsoft Azure.

Contlo

Contlo is an AI-powered marketing platform that helps businesses create personalized campaigns and automated customer journeys across multiple channels, including email, SMS, WhatsApp, web push, and social media. It uses a brand's own generative AI model to optimize marketing efforts and drive customer engagement. Contlo also offers audience management, data collection, and business insights to help businesses make informed decisions.

Directly

Directly is an AI-powered platform that offers on-demand and automated customer support solutions. The platform connects organizations with highly qualified experts who can handle customer inquiries efficiently. By leveraging AI and machine learning, Directly automates repetitive questions, improving business continuity and digital transformation. The platform follows a pay-for-performance compensation model and provides global support in multiple languages. Directly aims to enhance customer satisfaction, reduce contact center volume, and save costs for businesses.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

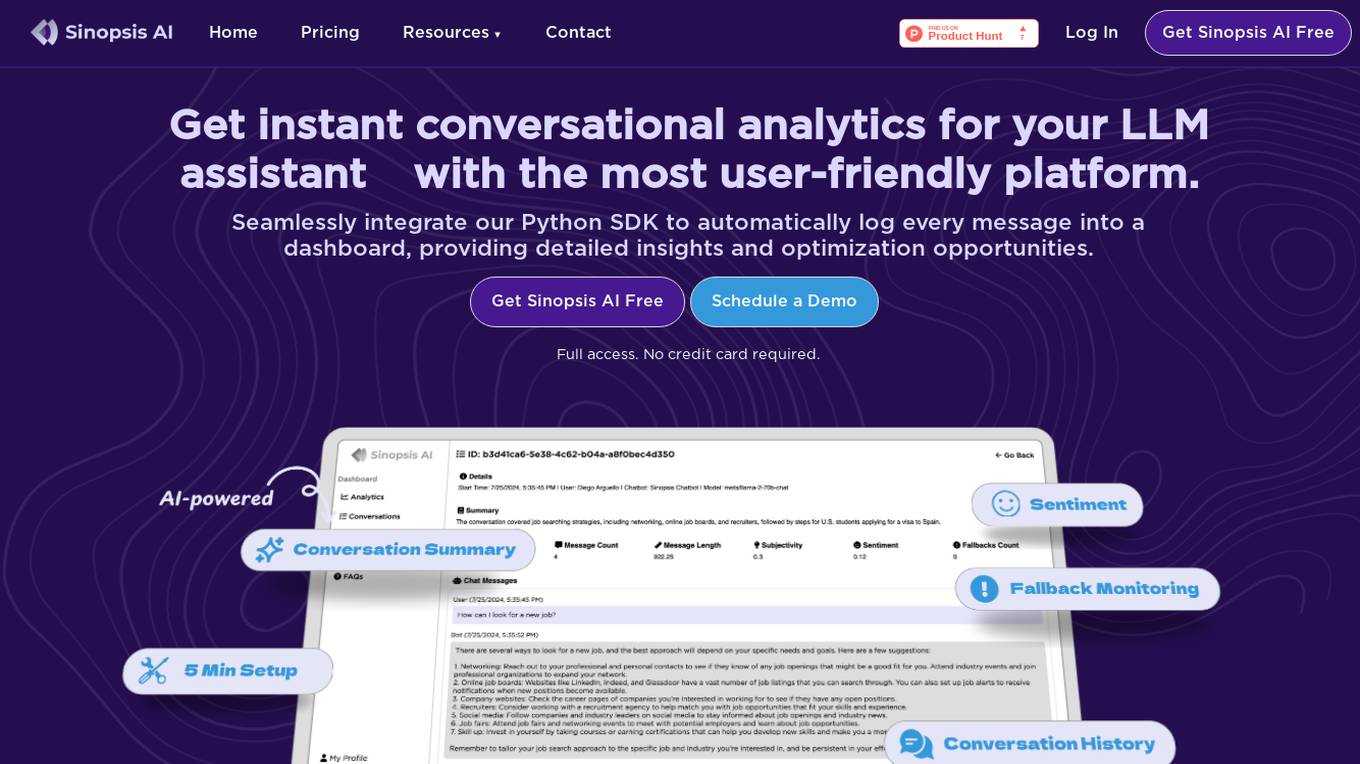

Sinopsis AI

Sinopsis AI is an analytics tool designed for AI chatbots, offering detailed insights into user interactions to enhance response accuracy and user experience. It seamlessly integrates with existing systems, providing real-time data updates and customizable analytics reports. With a user-friendly platform and model-agnostic compatibility, Sinopsis AI empowers businesses to optimize their chatbot performance and improve customer engagement strategies.

Modular

Modular is a fast, scalable Gen AI inference platform that offers a comprehensive suite of tools and resources for AI development and deployment. It provides solutions for AI model development, deployment options, AI inference, research, and resources like documentation, models, tutorials, and step-by-step guides. Modular supports GPU and CPU performance, intelligent scaling to any cluster, and offers deployment options for various editions. The platform enables users to build agent workflows, utilize AI retrieval and controlled generation, develop chatbots, engage in code generation, and improve resource utilization through batch processing.

Arthur

Arthur is an industry-leading MLOps platform that simplifies deployment, monitoring, and management of traditional and generative AI models. It ensures scalability, security, compliance, and efficient enterprise use. Arthur's turnkey solutions enable companies to integrate the latest generative AI technologies into their operations, making informed, data-driven decisions. The platform offers open-source evaluation products, model-agnostic monitoring, deployment with leading data science tools, and model risk management capabilities. It emphasizes collaboration, security, and compliance with industry standards.

Forma.ai

Forma.ai is AI-powered sales performance management software designed to optimize and run sales compensation processes efficiently. The platform offers AI-powered plan configuration, connected modeling for optimization, end-to-end automation of sales comp management, and flexible data integrations. Its listed advantages are faster decision-making, revenue capture, cost reduction, flexibility, and scalability. Its listed disadvantages are the need for AI skills, potential data security concerns, and an initial learning curve. The application is suitable for roles in sales operations, finance, human resources, sales compensation planning, and sales performance data management. Users can find Forma.ai using keywords like sales comp, AI-powered software, sales performance management, sales incentives, and sales compensation. The tool can be used to design plans with AI, plan and model, deploy and manage, optimize comp plans, and automate sales comp.

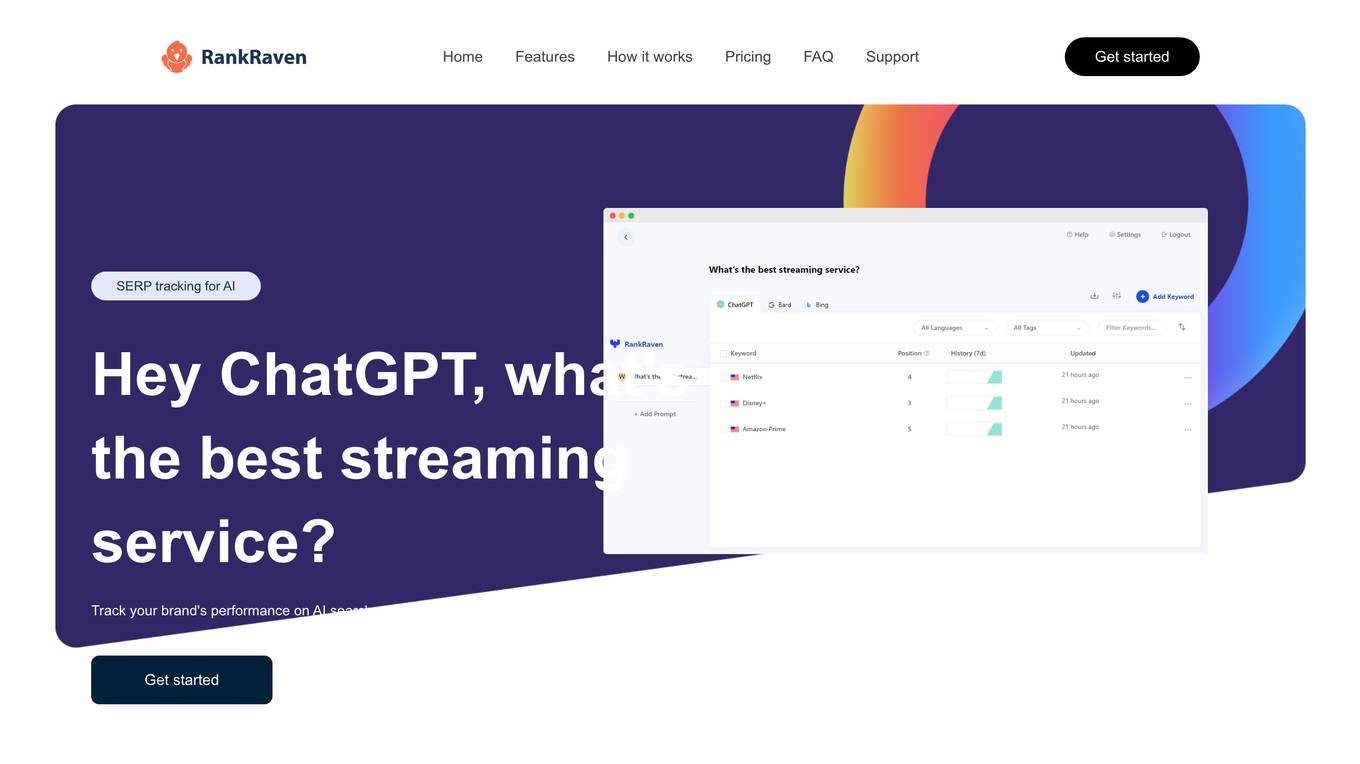

RankRaven

RankRaven is an advanced AI rank tracking tool that allows users to monitor and analyze their brand's performance on AI search engines. The tool leverages multiple AI models such as OpenAI ChatGPT, Google Bard, and Microsoft Bing to provide fast and accurate SEO tracking. Users can track their brand's rank across different AI search models, receive daily rank updates, compare performance across languages and countries, and analyze trends over time. RankRaven automates the process of running prompts and checking keyword appearances in model answers, making it a valuable tool for individuals, businesses, and agencies looking to optimize their AI SEO strategies.

Presspool.ai

Presspool.ai is an AI-powered platform that offers high-intent cybersecurity leads through endorsements from top industry voices. It provides a network of cybersecurity influencers trusted by CTOs, CISOs, and decision-makers at Fortune 500 companies. The platform helps brands and publishers create campaigns, match with ideal influencers, and optimize marketing strategies using real-time analytics.

FLUX AI Image Generator

FLUX AI Image Generator is a cutting-edge AI image generation model developed by Black Forest Labs. It offers state-of-the-art performance in prompt following, visual quality, image detail, and output diversity. The application provides multiple model variants, exceptional text rendering capabilities, complex composition mastery, improved hand rendering, and efficient performance. Users can access FLUX AI Image Generator through various platforms and benefit from its open-source availability for research and artistic purposes. The tool is continuously innovating to stay at the forefront of AI image generation technology.

ONNX Runtime

ONNX Runtime is a production-grade AI engine designed to accelerate machine learning training and inferencing in various technology stacks. It supports multiple languages and platforms, optimizing performance for CPU, GPU, and NPU hardware. ONNX Runtime powers AI in Microsoft products and is widely used in cloud, edge, web, and mobile applications. It also enables large model training and on-device training, offering state-of-the-art models for tasks like image synthesis and text generation.

DentroChat

DentroChat is an AI chat application that reimagines the way users interact with AI models. It allows users to select from various large language models (LLMs) in different modes, enabling them to choose the best AI for their specific tasks. With seamless mode switching and optimized performance, DentroChat offers flexibility and precision in AI interactions.

FuriosaAI

FuriosaAI offers AI accelerator hardware: RNGD for LLM and multimodal workloads, and WARBOY for computer vision. It provides a developer experience built around the Furiosa SDK, Model Zoo, and Dev Support. The company focuses on efficient AI inference, high-performance LLM and multimodal deployment, and sustainable mass adoption of AI. FuriosaAI features the Tensor Contraction Processor architecture, software for streamlined LLM deployment, and robust ecosystem support. It aims to deliver powerful and efficient deep learning acceleration while ensuring future-proof programmability and efficiency.

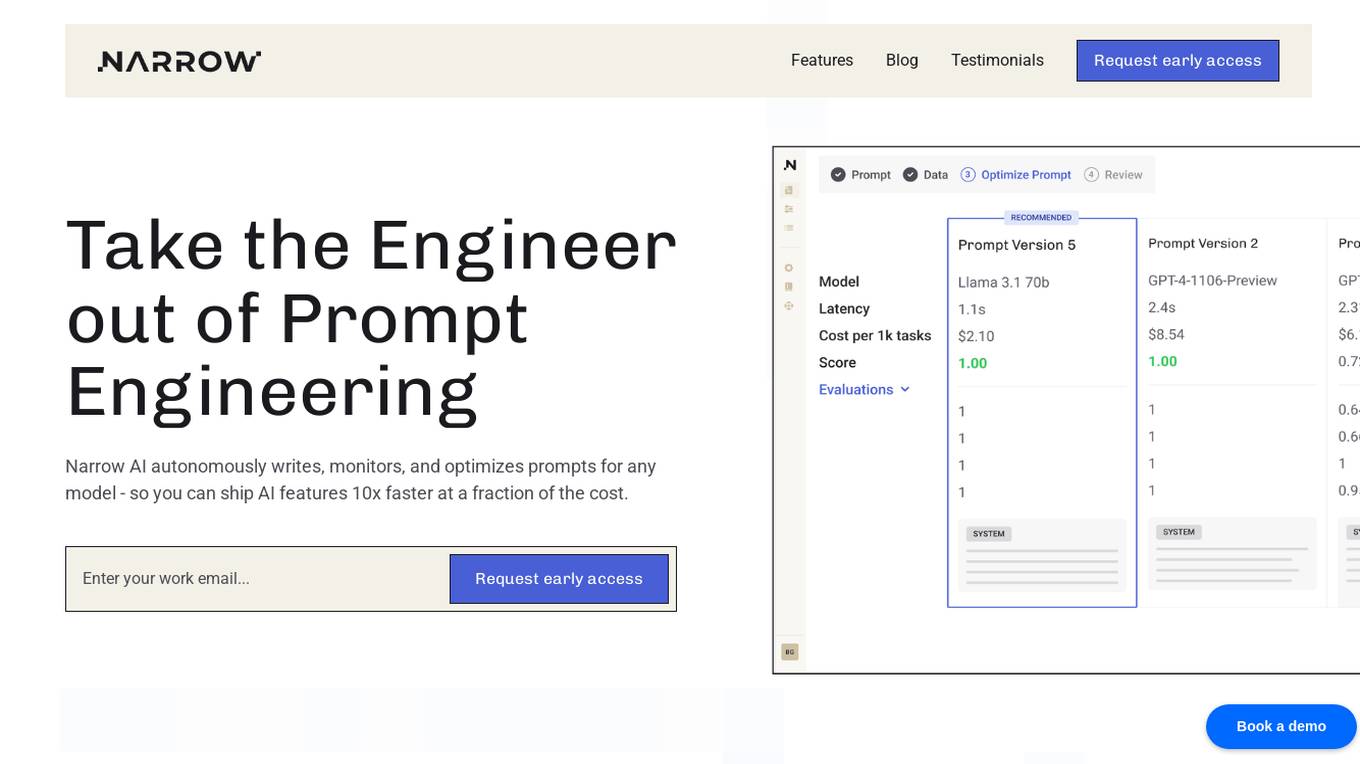

Narrow AI

Narrow AI is an AI application that autonomously writes, monitors, and optimizes prompts for any model, enabling users to ship AI features 10x faster at a fraction of the cost. It streamlines the workflow by allowing users to test new models in minutes, compare prompt performance, and deploy on the optimal model for their use case. Narrow AI helps users maximize efficiency by generating expert-level prompts, adapting prompts to new models, and optimizing prompts for quality, cost, and speed.

11 - Open Source AI Tools

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

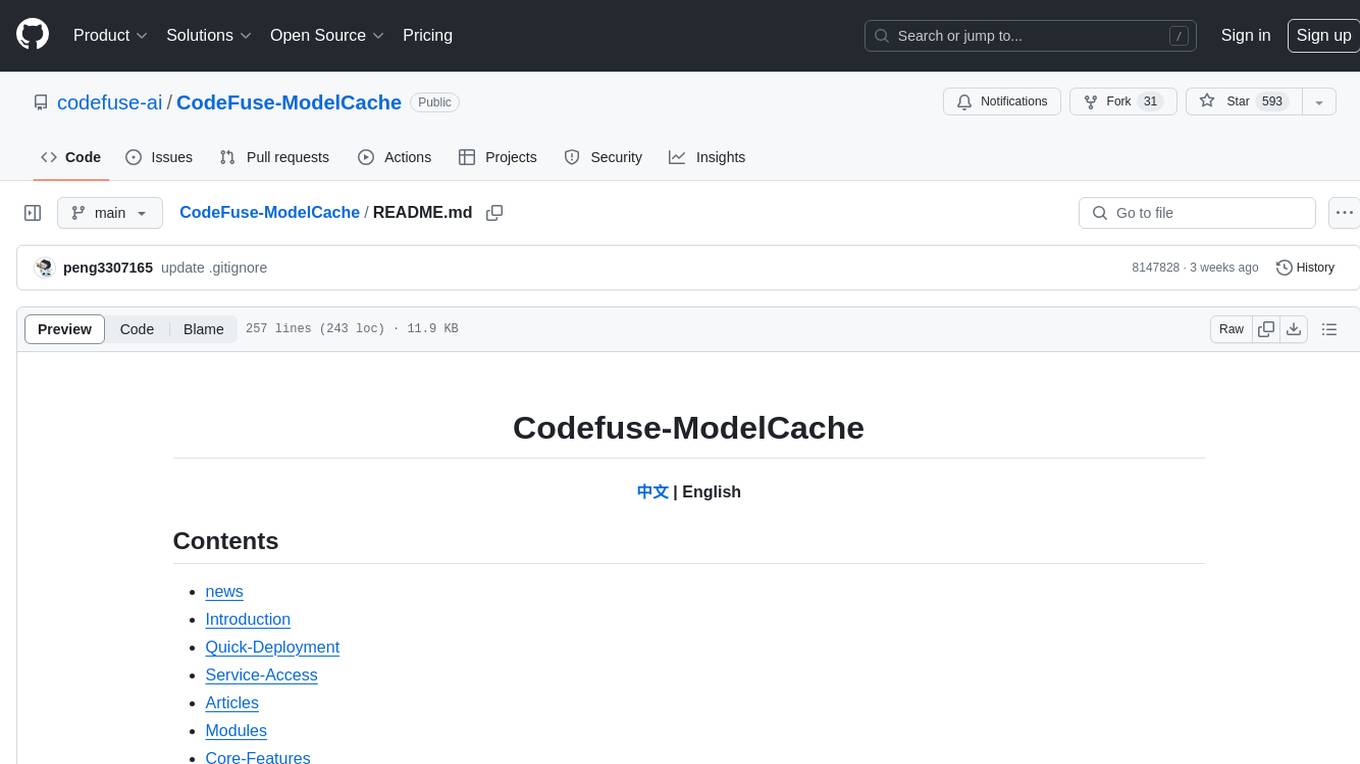

CodeFuse-ModelCache

CodeFuse-ModelCache is a semantic cache for large language models (LLMs) that aims to optimize services by introducing a caching mechanism. It helps reduce the cost of inference deployment, improve model performance and efficiency, and provide scalable services for large models. The project caches pre-generated model results to reduce response time for similar requests and enhance user experience. It integrates various embedding frameworks and local storage options, offering cache-writing, cache-querying, and cache-clearing through a RESTful API. The tool supports multi-tenancy, system commands, and multi-turn dialogue, with features for data isolation, database management, and model loading schemes. Planned developments include data isolation based on hyperparameters, enhanced partitioned storage of system prompts, and more versatile embedding models and similarity evaluation algorithms.
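The idea of a semantic cache can be illustrated with a minimal sketch. This is not the project's API: the `SemanticCache` class, its `insert`, `query`, and `clear` methods, and the 0.8 threshold are all hypothetical names chosen for illustration, and a bag-of-words count vector stands in for a real embedding framework. It only shows how a lookup by similarity, rather than by exact string match, can return a stored answer for a near-duplicate request.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words counts (assumption: stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical in-memory cache keyed by query similarity, not exact match."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, answer) pairs

    def insert(self, query, answer):
        """Cache-writing: store the answer under the query's embedding."""
        self.entries.append((embed(query), answer))

    def query(self, text):
        """Cache-querying: return the best match's answer, or None on a miss."""
        vector = embed(text)
        best_score, best_answer = 0.0, None
        for stored, answer in self.entries:
            score = cosine(vector, stored)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def clear(self):
        """Cache-clearing: drop every stored entry."""
        self.entries.clear()


cache = SemanticCache(threshold=0.8)
cache.insert("How do I reset my password?", "Open Settings and choose Reset password.")
hit = cache.query("how do i reset my password")   # same words, different casing/punctuation
miss = cache.query("What is the refund policy?")  # unrelated request, below the threshold
```

On a miss the caller would fall through to the model and then write the fresh answer back with `insert`. A linear scan is used here for brevity; a real deployment would presumably use a vector index.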

llm-awq

AWQ (Activation-aware Weight Quantization) is a tool designed for efficient and accurate low-bit weight quantization (INT3/4) for Large Language Models (LLMs). It supports instruction-tuned models and multi-modal LMs, providing features such as AWQ search for accurate quantization, pre-computed AWQ model zoo for various LLMs, memory-efficient 4-bit linear in PyTorch, and efficient CUDA kernel implementation for fast inference. The tool enables users to run large models on resource-constrained edge platforms, delivering more efficient responses with LLM/VLM chatbots through 4-bit inference.
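To make "low-bit weight quantization" concrete, here is a minimal sketch of plain group-wise round-to-nearest quantization to 4 bits. It is deliberately not the AWQ method: it omits the activation-aware search entirely and uses no part of the llm-awq codebase. The function names and the group size of 4 are assumptions made for illustration.

```python
def quantize_group(weights, bits=4):
    """Asymmetric round-to-nearest quantization of one group of floats."""
    levels = (1 << bits) - 1                      # 15 distinct steps for 4-bit codes
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]  # integers in [0, levels]
    return codes, scale, lo


def dequantize_group(codes, scale, lo):
    """Map integer codes back to approximate float weights."""
    return [c * scale + lo for c in codes]


def quantize(weights, group_size=4, bits=4):
    """Quantize a flat weight list group by group, each with its own scale and offset."""
    groups = []
    for start in range(0, len(weights), group_size):
        groups.append(quantize_group(weights[start:start + group_size], bits))
    return groups


def dequantize(groups):
    """Reassemble the approximate weights from all groups."""
    restored = []
    for codes, scale, lo in groups:
        restored.extend(dequantize_group(codes, scale, lo))
    return restored


weights = [0.12, -0.40, 0.33, 0.05, 1.50, -1.20, 0.70, 0.00]
groups = quantize(weights, group_size=4, bits=4)
restored = dequantize(groups)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each group keeps its own scale and offset, so the rounding error in a group is bounded by half of that group's scale. Smaller groups track the weights more closely at the cost of storing more scales.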

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

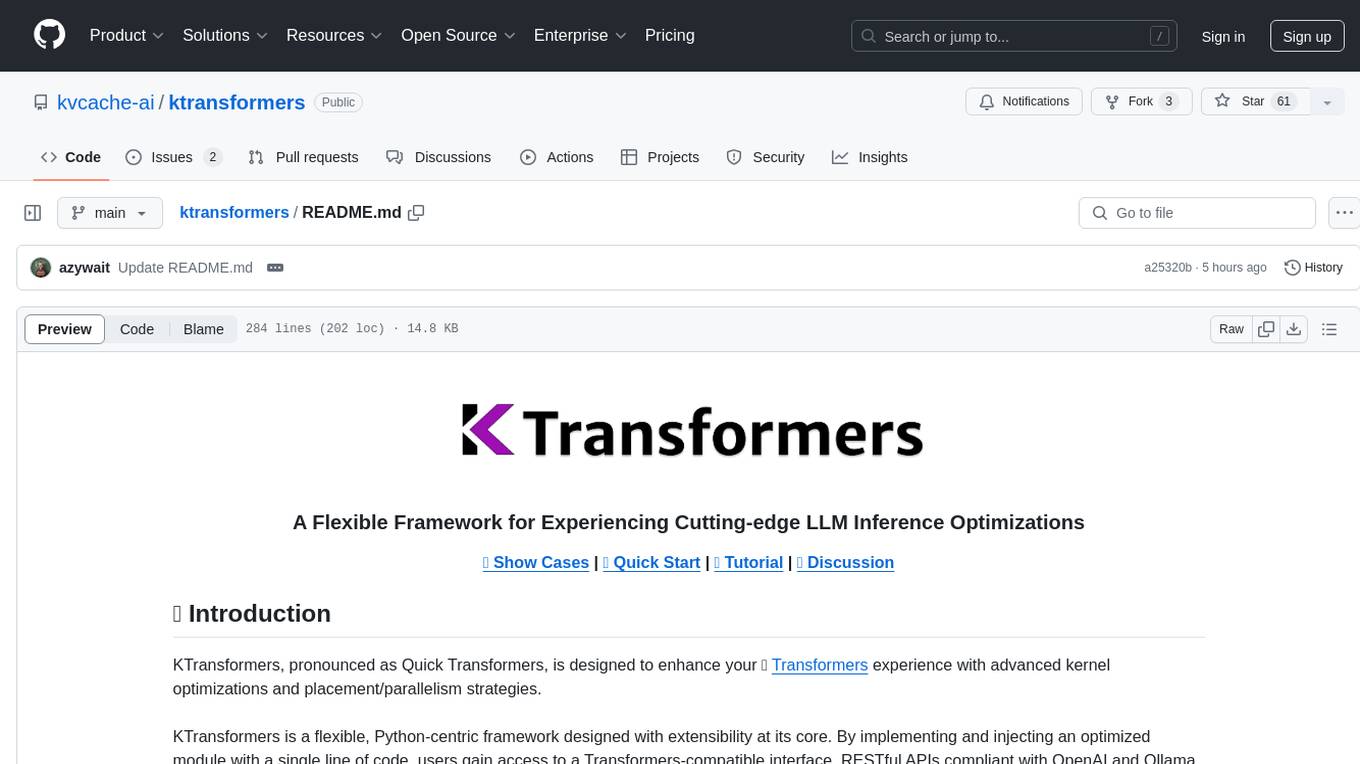

ktransformers

KTransformers is a flexible Python-centric framework designed to enhance the user's experience with advanced kernel optimizations and placement/parallelism strategies for Transformers. It provides a Transformers-compatible interface, RESTful APIs compliant with OpenAI and Ollama, and a simplified ChatGPT-like web UI. The framework aims to serve as a platform for experimenting with innovative LLM inference optimizations, focusing on local deployments constrained by limited resources and supporting heterogeneous computing opportunities like GPU/CPU offloading of quantized models.

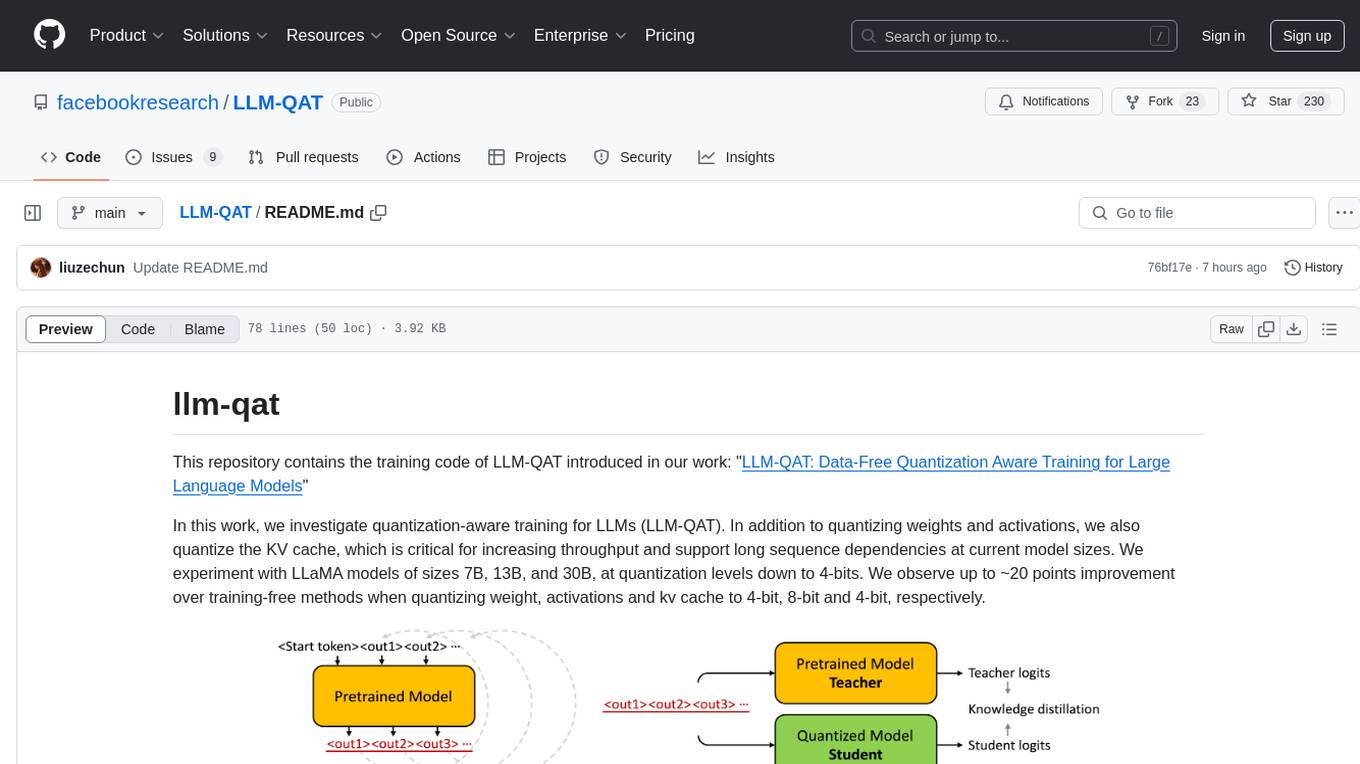

LLM-QAT

This repository contains the training code of LLM-QAT for large language models. The work investigates quantization-aware training for LLMs, including quantizing weights, activations, and the KV cache. Experiments were conducted on LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4 bits. Significant improvements were observed when quantizing weights, activations, and the KV cache to 4-bit, 8-bit, and 4-bit, respectively.

UltraRAG

The UltraRAG framework is a researcher- and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and integration of research-friendly exploration work. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. A performance evaluation in the legal field reports improved results compared to VanillaRAG, with the metrics given in the repository. The repository is licensed under Apache-2.0 and asks users to cite the work.

AngelSlim

AngelSlim is a comprehensive and efficient large model compression toolkit designed to be user-friendly. It integrates mainstream compression algorithms for easy one-click access, continuously innovates compression algorithms, and optimizes end-to-end performance in model compression and deployment. It supports various models for quantization and speculative sampling, with a focus on performance optimization and ease of use.

LMeterX

LMeterX is a professional large language model performance testing platform that supports model inference services based on large model inference frameworks and cloud services. It provides an intuitive Web interface for creating and managing test tasks, monitoring testing processes, and obtaining detailed performance analysis reports to support model deployment and optimization.
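The kind of measurement such a performance test produces can be sketched in a few lines. This is not LMeterX code: `fake_inference` is a hypothetical stand-in for a call to a model endpoint, `benchmark` and the report keys are illustrative names, and requests are issued sequentially from one thread rather than under concurrent load.

```python
import math
import statistics
import time


def fake_inference(prompt):
    """Stand-in for a request to a model inference service (hypothetical)."""
    time.sleep(0.001)
    return prompt.upper()


def benchmark(call, prompts):
    """Time each call and summarize latency and throughput."""
    latencies = []
    started = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - started

    ordered = sorted(latencies)
    # Nearest-rank 95th percentile: smallest value with at least 95% of samples at or below it.
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "requests": len(ordered),
        "mean_s": statistics.mean(ordered),
        "p50_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
        "throughput_rps": len(ordered) / elapsed,
    }


report = benchmark(fake_inference, ["hello"] * 20)
```

Reporting a tail percentile alongside the mean matters because a few slow requests can dominate user-perceived latency while barely moving the average.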

linghe

A library of high-performance kernels for LLM training, linghe is designed for MoE training with FP8 quantization. It provides fused quantization kernels, memory-efficiency kernels, and implementation-optimized kernels. The repo benchmarks on H800 with specific configurations and offers examples in tests. Users can refer to the API for more details.

20 - OpenAI GPTs

Back Propagation

I'm Back Propagation, here to help you understand and apply back propagation techniques to your AI models.

Apple CoreData Complete Code Expert

A detailed expert trained on all 5,588 pages of Apple CoreData, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

Optimisateur de Performance GPT

Expert in performance optimization and data processing.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.

Data Architect

Database Developer assisting with SQL/NoSQL, architecture, and optimization.

Unreal Assistant

Assists with Unreal Engine 5 C++ coding, editor know-how, and blueprint visuals.

Nimbus

Expert in CFA, quant, software engineering, data science, and economics for investment strategies.

Modelos de Negocios GPT

Step-by-step guide to creating and improving business models using the Business Model Canvas methodology.

Shell Mentor

An AI GPT model designed to assist with Shell/Bash programming, providing real-time code suggestions, debugging tips, and script optimization for efficient command-line operations.

Octorate Code Companion

I help developers understand and use APIs, referencing a YAML model.

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.