Best AI tools for< Optimize Data Costs >

20 - AI tool Sites

Granica AI

Granica AI is a Training Data Platform designed to make data safe for use with AI while keeping it cost-efficient. It offers state-of-the-art accuracy, cost-efficient data optimization, data visibility insights, and cloud cost savings. The platform helps in protecting data privacy, optimizing data costs, and gaining data visibility for AI teams to achieve big results while minimizing privacy risk.

Codimite

Codimite is an AI-assisted offshore development services solution that specializes in Web2 to Web3 communication. They offer PWA solutions, cloud modernization, and a range of services to help organizations maximize opportunities with state-of-the-art technologies. With a dedicated team of engineers and project managers, Codimite ensures efficient project management and communication. Their unique culture, experienced team, and focus on performance empower clients to achieve success. Codimite also excels in development infrastructure modernization, collaboration, data, and artificial intelligence development. They have a strong partnership with Google Cloud and offer services such as application migration, cost optimization, and collaboration solutions.

OtterTune

OtterTune was a database tuning service start-up founded by Carnegie Mellon University. Unfortunately, the company is no longer operational. The founder, DJ OT, is currently in prison for a parole violation. Despite its closure, OtterTune was known for its innovative approach to database tuning. The website now serves as a research archive and provides access to its GitHub repository.

EverSQL

EverSQL is an AI-powered SQL query optimizer and database observability tool that specializes in optimizing PostgreSQL and MySQL databases. It offers automatic SQL query optimization, ongoing performance insights, and cost reduction recommendations. With over 100,000 professionals trusting EverSQL, it aims to save time and improve database performance by making SQL queries faster and more efficient.

Creatus.AI

Creatus.AI is an AI-powered platform that provides a range of tools and services to help businesses boost productivity and transform their workplaces. With over 35 AI models and tools, and 90+ business integrations, Creatus.AI offers a comprehensive suite of solutions for businesses of all sizes. The platform's AI-native workspace and autonomous team members enable businesses to automate tasks, improve efficiency, and gain valuable insights from data. Creatus.AI also specializes in custom AI integrations and solutions, helping businesses to tailor AI solutions to their specific needs.

RAGNA Desktop

RAGNA Desktop is a private AI multitool that runs locally on your desktop PC or laptop without the need for an internet connection. It is designed to automate repetitive tasks, increase efficiency, and free up capacity for more important matters. The application ensures data privacy and security by processing all AI, calculations, and analyses on your device, keeping sensitive information protected. RAGNA Desktop offers tools for AI automation, flexibility, and security, helping users enhance productivity and optimize work processes while adhering to the latest data protection regulations.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open source and commercial tools, and infrastructure, allowing users to accelerate and scale AI impact. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

Pulse

Pulse is a world-class expert support tool for BigData stacks, specifically focusing on ensuring the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse leverages AI for issue detection and root-cause analysis, complemented by real human expertise, making it a strategic ally in search cluster management.

Looker

Looker is a business intelligence platform that offers embedded analytics and AI-powered BI solutions. Leveraging Google's AI-led innovation, Looker delivers intelligent BI by combining foundational AI, cloud-first infrastructure, industry-leading APIs, and a flexible semantic layer. It allows users to build custom data experiences, transform data into integrated experiences, and create deeply integrated dashboards. Looker also provides a universal semantic modeling layer for unified, trusted data sources and offers self-service analytics capabilities through Looker and Looker Studio. Additionally, Looker features Gemini, an AI-powered analytics assistant that accelerates analytical workflows and offers a collaborative and conversational user experience.

Gastrograph AI

Gastrograph AI is a cutting-edge artificial intelligence platform that empowers food and beverage companies to optimize their products for consistent market success. Leveraging the world's largest sensory database, Gastrograph AI provides deep insights into consumer preferences, enabling companies to develop new products, enter new markets, and optimize existing products with confidence. With Gastrograph AI, companies can reduce time to market costs, simplify product development, and gain access to trustworthy insights, leading to measurable results and a competitive edge in the global marketplace.

GrapixAI

GrapixAI is a leading provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI offers the lowest prices for on-demand GPUs such as RTX4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides Docker-based container ecosystem for quick software setup, powerful GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, real-time bidding system, and personalized customer support.

Token Counter

Token Counter is an AI tool designed to convert text input into tokens for various AI models. It helps users accurately determine the token count and associated costs when working with AI models. By providing insights into tokenization strategies and cost structures, Token Counter streamlines the process of utilizing advanced technologies.

SambaNova Systems

SambaNova Systems is an AI platform that revolutionizes AI workloads by offering an enterprise-grade full stack platform purpose-built for generative AI. It provides state-of-the-art AI and deep learning capabilities to help customers outcompete their peers. SambaNova delivers the only enterprise-grade full stack platform, from chips to models, designed for generative AI in the enterprise. The platform includes the SN40L Full Stack Platform with 1T+ parameter models, Composition of Experts, and Samba Apps. SambaNova also offers resources to accelerate AI journeys and solutions for various industries like financial services, healthcare, manufacturing, and more.

SS&C Blue Prism

SS&C Blue Prism is an intelligent automation platform that offers a comprehensive suite of tools for automating business processes. It leverages AI and machine learning to accelerate automation journeys, make human-like decisions, and extract data accurately from documents. The platform enables human and digital worker collaboration, accelerates straight-through processing, and integrates process automations rapidly. SS&C Blue Prism is designed to help organizations optimize operations, improve productivity, and enhance customer experiences through intelligent automation solutions.

StoryChief

StoryChief is an all-in-one content marketing platform that empowers marketing agencies and teams to create, collaborate, and distribute content effectively across digital channels. With features like Content Calendar, Social Media Management, AI Power Mode, and Analytics & Reporting, StoryChief streamlines content creation, maximizes reach, and enhances SEO. The platform offers advantages such as centralizing workflow, speeding up publishing, cutting costs, and scaling with agency growth. However, some disadvantages include the need for a learning curve, potential dependency on AI recommendations, and limited customization options.

NeuProScan

NeuProScan is an AI platform designed for the early detection of pre-clinical Alzheimer's from MRI scans. It utilizes AI technology to predict the likelihood of developing Alzheimer's years in advance, helping doctors improve diagnosis accuracy and optimize the use of costly PET scans. The platform is fully customizable, user-friendly, and can be run on devices or in the cloud. NeuProScan aims to provide patients and healthcare systems with valuable insights for better planning and decision-making.

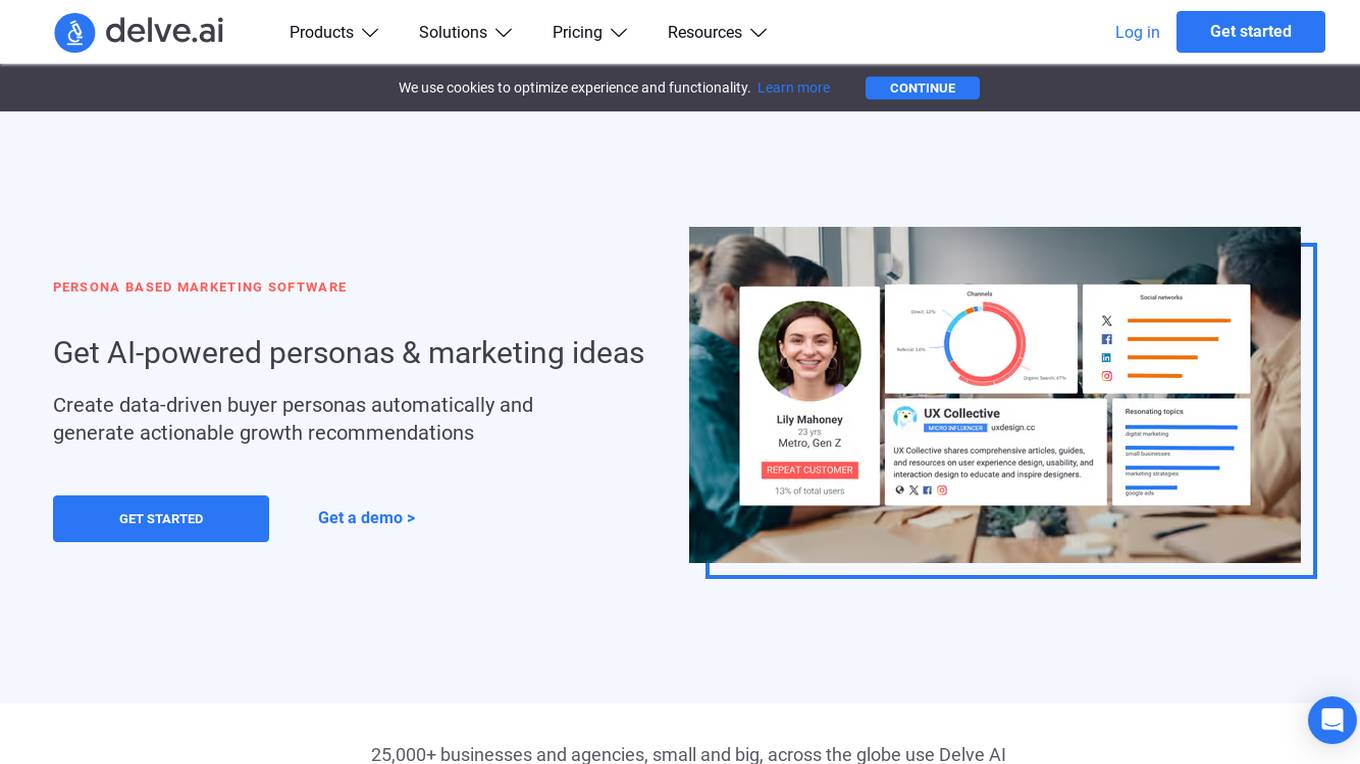

Delve AI

Delve AI is an AI-powered persona-based marketing platform that offers a suite of tools to create data-driven buyer personas, analyze competitors, optimize marketing strategies, and enhance customer insights. It provides solutions for e-commerce, B2B, and agencies, helping businesses generate actionable growth recommendations. Delve AI's technology revolutionizes customer understanding by leveraging AI to develop targeted marketing plans and reduce customer acquisition costs.

Tribe AI

Tribe AI is a modern consultancy specializing in AI, data, and machine learning, helping organizations leverage artificial intelligence. The platform offers bespoke AI solutions, advisory services, and GenAI acceleration to unlock the potential of cutting-edge technology. Tribe AI connects top AI talent with companies across various industries, such as healthcare, venture capital, insurance, private equity, and technology, to optimize operations and drive innovation. The platform also features a network of experienced AI researchers, data scientists, ML engineers, and AI fairness experts, ensuring high-quality and secure AI solutions for clients.

Keebo

Keebo is an AI tool designed for Snowflake optimization, offering automated query, cost, and tuning optimization. It is the only fully-automated Snowflake optimizer that dynamically adjusts to save customers 25% and more. Keebo's patented technology, based on cutting-edge research, optimizes warehouse size, clustering, and memory without impacting performance. It learns and adjusts to workload changes in real-time, setting up in just 30 minutes and delivering savings within 24 hours. The tool uses telemetry metadata for optimizations, providing full visibility and adjustability for complex scenarios and schedules.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers a platform that provides automated solutions for tuning and inference consultation, optimized networks zoo, and platform for reducing AI model cost. Anycores focuses on faster execution, reducing inference time over 10x times, and footprint reduction during model deployment. It is device agnostic, supporting Nvidia, AMD GPUs, Intel, ARM, AMD CPUs, servers, and edge devices. The tool aims to provide highly optimized, low footprint networks tailored to specific deployment scenarios.

20 - Open Source AI Tools

HybridAGI

HybridAGI is the first Programmable LLM-based Autonomous Agent that lets you program its behavior using a **graph-based prompt programming** approach. This state-of-the-art feature allows the AGI to efficiently use any tool while controlling the long-term behavior of the agent. Become the _first Prompt Programmers in history_ ; be a part of the AI revolution one node at a time! **Disclaimer: We are currently in the process of upgrading the codebase to integrate DSPy**

AI-Drug-Discovery-Design

AI-Drug-Discovery-Design is a repository focused on Artificial Intelligence-assisted Drug Discovery and Design. It explores the use of AI technology to accelerate and optimize the drug development process. The advantages of AI in drug design include speeding up research cycles, improving accuracy through data-driven models, reducing costs by minimizing experimental redundancies, and enabling personalized drug design for specific patients or disease characteristics.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility - please don't hesitate to report encountered issues here and contact us via public Discord Server to help this collaborative engineering effort! CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors: * must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files; * must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them; * must have a very simple and human-friendly command line with a Python API and minimal dependencies; * must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages; * must have the same interface to run all automations natively, in a cloud or inside containers. CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

CursorLens

Cursor Lens is an open-source tool that acts as a proxy between Cursor and various AI providers, logging interactions and providing detailed analytics to help developers optimize their use of AI in their coding workflow. It supports multiple AI providers, captures and logs all requests, provides visual analytics on AI usage, allows users to set up and switch between different AI configurations, offers real-time monitoring of AI interactions, tracks token usage, estimates costs based on token usage and model pricing. Built with Next.js, React, PostgreSQL, Prisma ORM, Vercel AI SDK, Tailwind CSS, and shadcn/ui components.

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

feedgen

FeedGen is an open-source tool that uses Google Cloud's state-of-the-art Large Language Models (LLMs) to improve product titles, generate more comprehensive descriptions, and fill missing attributes in product feeds. It helps merchants and advertisers surface and fix quality issues in their feeds using Generative AI in a simple and configurable way. The tool relies on GCP's Vertex AI API to provide both zero-shot and few-shot inference capabilities on GCP's foundational LLMs. With few-shot prompting, users can customize the model's responses towards their own data, achieving higher quality and more consistent output. FeedGen is an Apps Script based application that runs as an HTML sidebar in Google Sheets, allowing users to optimize their feeds with ease.

DB-GPT-Hub

DB-GPT-Hub is an experimental project leveraging Large Language Models (LLMs) for Text-to-SQL parsing. It includes stages like data collection, preprocessing, model selection, construction, and fine-tuning of model weights. The project aims to enhance Text-to-SQL capabilities, reduce model training costs, and enable developers to contribute to improving Text-to-SQL accuracy. The ultimate goal is to achieve automated question-answering based on databases, allowing users to execute complex database queries using natural language descriptions. The project has successfully integrated multiple large models and established a comprehensive workflow for data processing, SFT model training, prediction output, and evaluation.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

superpipe

Superpipe is a lightweight framework designed for building, evaluating, and optimizing data transformation and data extraction pipelines using LLMs. It allows users to easily combine their favorite LLM libraries with Superpipe's building blocks to create pipelines tailored to their unique data and use cases. The tool facilitates rapid prototyping, evaluation, and optimization of end-to-end pipelines for tasks such as classification and evaluation of job departments based on work history. Superpipe also provides functionalities for evaluating pipeline performance, optimizing parameters for cost, accuracy, and speed, and conducting grid searches to experiment with different models and prompts.

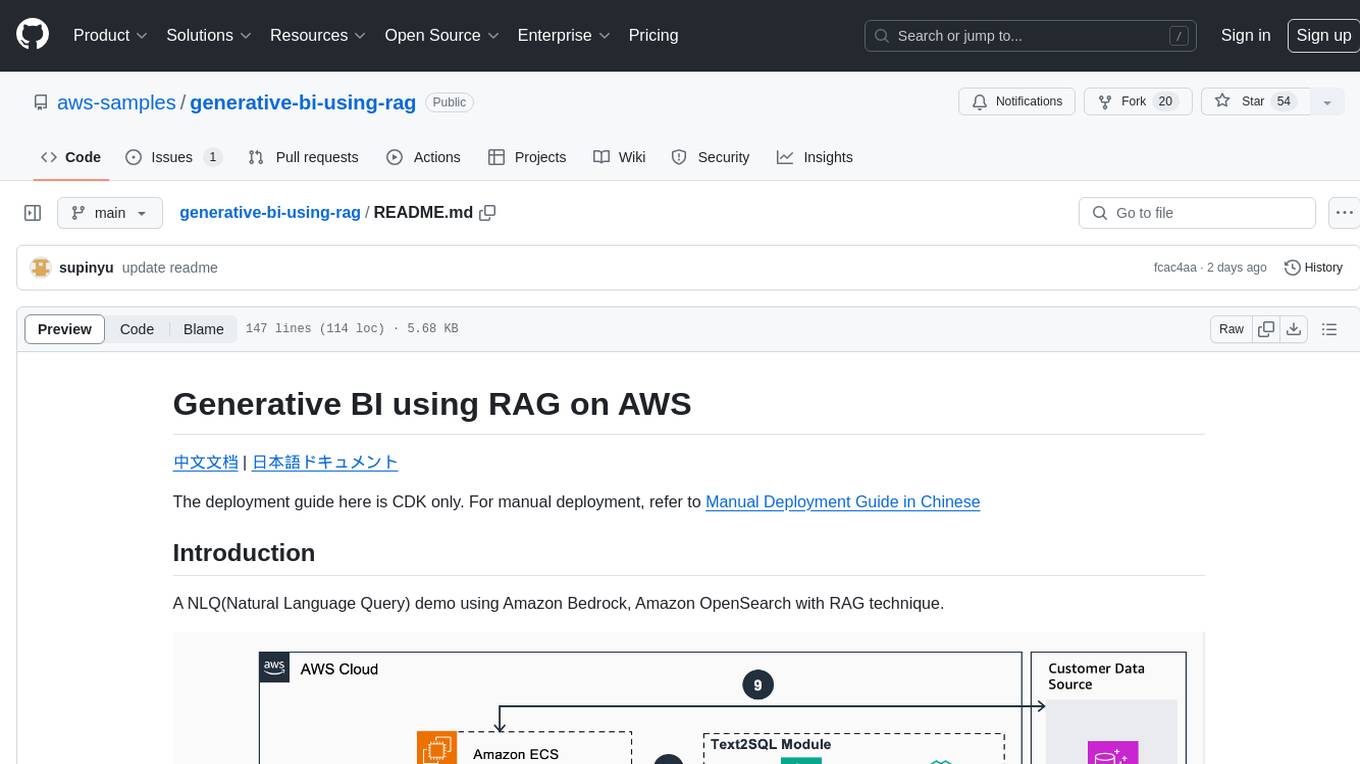

generative-bi-using-rag

Generative BI using RAG on AWS is a comprehensive framework designed to enable Generative BI capabilities on customized data sources hosted on AWS. It offers features such as Text-to-SQL functionality for querying data sources using natural language, user-friendly interface for managing data sources, performance enhancement through historical question-answer ranking, and entity recognition. It also allows customization of business information, handling complex attribution analysis problems, and provides an intuitive question-answering UI with a conversational approach for complex queries.

basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.

peft

PEFT (Parameter-Efficient Fine-Tuning) is a collection of state-of-the-art methods that enable efficient adaptation of large pretrained models to various downstream applications. By only fine-tuning a small number of extra model parameters instead of all the model's parameters, PEFT significantly decreases the computational and storage costs while achieving performance comparable to fully fine-tuned models.

DevOpsGPT

DevOpsGPT is an AI-driven software development automation solution that combines Large Language Models (LLM) with DevOps tools to convert natural language requirements into working software. It improves development efficiency by eliminating the need for tedious requirement documentation, shortens development cycles, reduces communication costs, and ensures high-quality deliverables. The Enterprise Edition offers features like existing project analysis, professional model selection, and support for more DevOps platforms. The tool automates requirement development, generates interface documentation, provides pseudocode based on existing projects, facilitates code refinement, enables continuous integration, and supports software version release. Users can run DevOpsGPT with source code or Docker, and the tool comes with limitations in precise documentation generation and understanding existing project code. The product roadmap includes accurate requirement decomposition, rapid import of development requirements, and integration of more software engineering and professional tools for efficient software development tasks under AI planning and execution.

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts. Here are 5 jobs suitable for this tool, in lowercase letters: 1. content writer 2. chatbot assistant 3. language translator 4. creative writer 5. researcher

20 - OpenAI Gpts

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Gas Intellect Pro

Leading-Edge Gas Analysis and Optimization: Adaptable, Accurate, Advanced, developed on OpenAI.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

Calorie Count & Cut Cost: Food Data

Apples vs. Oranges? Optimize your low-calorie diet. Compare food items. Get tailored advice on satiating, nutritious, cost-effective food choices based on 240 items.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Your Business Data Optimizer Pro

A chatbot expert in business data analysis and optimization.

AI Business Transformer

Top AI for business automation, data analytics, content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

Ecommerce Pricing Advisor

Optimize your pricing for peak market performance and profitability. Seamlessly navigate ecommerce challenges with expert, data-driven pricing strategies. 📈💹

DataTrend Analyst

I transform complex social media data into actionable, strategic insights to optimize your campaigns and drive engagement.

Operations Department Assistant

An Operations Department Assistant aids the operations team by handling administrative tasks, process documentation, and data analysis, helping to streamline and optimize various operational processes within an organization.

Algorithm Expert

I develop and optimize algorithms with a technical and analytical approach.