Best AI tools for: Interpret Research Data

20 - AI tool Sites

JADBio

JADBio is an automated machine learning (AutoML) platform designed to accelerate biomarker discovery and drug development processes. It offers a no-code solution that automates the discovery of biomarkers and interprets their role based on research needs. JADBio can parse multi-omics data, including genomics, transcriptome, metagenome, proteome, metabolome, phenotype/clinical data, and images, enabling users to efficiently discover insights for various conditions such as cancer, immune system disorders, chronic diseases, infectious diseases, and mental health.

Notably

Notably is a research synthesis platform that uses AI to help researchers analyze and interpret data faster. It offers a variety of features, including a research repository, AI research, digital sticky notes, video transcription, and cluster analysis. Notably is used by companies and organizations of all sizes to conduct product research, market research, academic research, and more.

Hepta AI

Hepta AI is an AI-powered statistics tool designed for scientific research. It simplifies the process of statistical analysis by allowing users to easily input their data and receive comprehensive results, including tables, graphs, and statistical analysis. With a focus on accuracy and efficiency, Hepta AI aims to streamline the research process for scientists and researchers, providing valuable insights and data visualization. The tool offers a user-friendly interface and advanced AI algorithms to deliver precise and reliable statistical outcomes.

Max Planck Institute for Informatics

The Max Planck Institute for Informatics focuses on Visual Computing and Artificial Intelligence, conducting research at the intersection of Computer Graphics, Computer Vision, and AI. The institute aims to develop innovative ways to capture, represent, synthesize, and simulate real-world models with high detail, robustness, and efficiency. By combining concepts from Computer Graphics, Computer Vision, and Machine Learning, the institute lays the groundwork for advanced methods to better perceive and interpret the complex real world. The research conducted here is essential for the development of future intelligent computing systems that interact with humans and the environment intuitively and safely.

Zelma

Zelma is an AI-powered research assistant that enables users to find, graph, and understand U.S. school testing data using plain English. It allows users to search student test data by school district, demographics, grade, and more, and presents the data with graphs, tables, and descriptions. Zelma aims to make education data easily accessible and understandable for everyone.

Human-Centred Artificial Intelligence Lab

The Human-Centred Artificial Intelligence Lab (Holzinger Group) is a research group focused on developing AI solutions that are explainable, trustworthy, and aligned with human values, ethical principles, and legal requirements. The lab works on projects related to machine learning, digital pathology, interactive machine learning, and more. Their mission is to combine human and computer intelligence to address pressing problems in various domains such as forestry, health informatics, and cyber-physical systems. The lab emphasizes the importance of explainable AI, human-in-the-loop interactions, and the synergy between human and machine intelligence.

ClearSeas.AI

ClearSeas.AI is an AI-powered market research visualization dashboard that leverages advanced AI technologies to interpret complex datasets, delivering intuitive and visually compelling insights. The platform offers services such as expert survey design, efficient survey deployment, B2B panel access, and full-service project management. ClearSeas.AI prioritizes data security by employing stringent protocols, advanced encryption technologies, and document protection measures. The platform does not use customer data for AI training, ensuring data privacy and confidentiality. ClearSeas.AI aims to illuminate insights with the power of AI, empowering users to stay ahead of market trends and make informed strategic decisions.

Ogma

Ogma is an interpretable symbolic general problem-solving model that uses a symbolic sequence modeling paradigm to address tasks that require reliability and complex decomposition, without hallucinations. It offers solutions in areas such as math problem-solving, natural language understanding, and resolution of uncertainty. The technology is designed to provide a structured approach to problem-solving by breaking down tasks into manageable components while ensuring interpretability and self-interpretability. Ogma aims to set benchmarks in problem-solving applications by offering a reliable and transparent methodology.

OAI UI

OAI UI is an all-in-one AI platform designed to streamline various AI-related tasks. It offers a user-friendly interface that allows users to easily interact with AI technologies. The platform integrates multiple AI capabilities, such as natural language processing, machine learning, and computer vision, to provide a comprehensive solution for businesses and individuals looking to leverage AI in their workflows.

IXICO

IXICO is a precision analytics company specializing in intelligent insights in neuroscience. They offer a range of services for drug development analytics, imaging operations, and post-marketing consultancy. With a focus on technology and innovation, IXICO provides expertise in imaging biomarkers, radiological reads, volumetric MRI, PET & SPECT, and advanced MRI. Their TrialTracker platform and Assessa tool utilize innovation and AI for disease modeling and analysis. IXICO supports biopharmaceutical companies in CNS clinical research with cutting-edge neuroimaging techniques and AI technology.

EmpathixAI

EmpathixAI is an innovative AI tool designed to analyze and interpret human emotions through text and voice inputs. The tool uses advanced natural language processing and sentiment analysis algorithms to provide accurate insights into the emotional state of individuals. EmpathixAI helps businesses understand customer feedback, improve communication strategies, and enhance user experiences. With its user-friendly interface and powerful analytics capabilities, EmpathixAI is a valuable tool for companies looking to gain a deeper understanding of customer sentiment and emotions.

Grok-1.5 Vision

Grok-1.5 Vision (Grok-1.5V) is a multimodal AI model developed by xAI, Elon Musk's AI company. Grok-1.5V combines computer vision, natural language processing, and other AI techniques to process both images and text. Its ability to analyze and interpret visual data lets it assist in tasks such as object recognition, image classification, and scene understanding. Its natural language processing capabilities enable it to comprehend and generate human language, making it useful for communication and information retrieval. Because it is multimodal, Grok-1.5V can handle tasks that require a combination of visual and linguistic understanding, which makes it relevant to applications in fields such as healthcare, manufacturing, and customer service.

GPT-4o

GPT-4o is an advanced multimodal AI platform developed by OpenAI, offering a comprehensive AI interaction experience across text, imagery, and audio. It excels in text comprehension, image analysis, and voice recognition, providing swift, cost-effective, and universally accessible AI technology. GPT-4o democratizes AI by balancing free access with premium features for paid subscribers, revolutionizing the way we interact with artificial intelligence.

Yoursearch.ai

Yoursearch.ai is an AI-powered search engine web application that aims to revolutionize internet research by providing users with better search results without any advertising. With features like a new Messenger service, summarized and relevant search results, and the ability to share findings with family and friends, Yoursearch.ai offers a user-friendly and efficient search experience. The application also emphasizes user control and personalization, allowing users to get only the most relevant information. Yoursearch.ai is committed to protecting minors and offers a service for age verification on websites with adult content. Overall, Yoursearch.ai is a smart search tool that leverages artificial intelligence to enhance productivity and knowledge discovery.

RapidAI Research Institute

RapidAI Research Institute is a non-enterprise academic institution under the RapidAI open-source organization. It serves as a platform for academic research and collaboration, providing opportunities for aspiring researchers to publish papers and engage in scholarly activities. The institute offers mentorship programs and benefits for members, including access to resources such as internet connectivity, GPU configurations, and storage space. The management team consists of experienced professionals in the field.

Dataconomy

Dataconomy is a platform that covers a wide range of topics related to artificial intelligence, big data, machine learning, blockchain, cybersecurity, fintech, gaming, internet of things, startups, and various industries. It provides news, articles, and insights on the latest trends and developments in AI and technology. The platform also offers whitepapers, industry-specific information, and event updates.

Labelbox

Labelbox is a data factory platform that helps AI teams manage data labeling, train models, and create better data through an internet-scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates global data labeling infrastructure and operations for AI workloads, providing an expert human network for data labeling in various domains. The platform also includes AI-assisted alignment for maximum efficiency, data curation, model training, and labeling services. Customers achieve breakthroughs with high-quality data through Labelbox.

Exa

Exa is a search engine that uses embeddings-based search to retrieve the best content on the web. It is trusted by companies and developers from all over the world. Exa is like Google, but it is better at understanding the meaning of your queries and returning results that are more relevant to your needs. Exa can be used for a variety of tasks, including finding information on the web, conducting research, and building AI applications.
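To make "embeddings-based search" concrete, here is a minimal sketch that ranks documents by cosine similarity between a query vector and document vectors. The three-dimensional vectors, the document titles, and the `rank` helper are made up for illustration; this is not Exa's API, and a real system would obtain its embeddings from a trained model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_vec, docs):
    """Return document titles ordered from most to least similar to the query."""
    scored = sorted(docs.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [title for title, _ in scored]


# Hypothetical toy embeddings (assumption: real ones come from an embedding model).
DOCS = {
    "intro to neural networks": [0.9, 0.1, 0.0],
    "sourdough baking guide": [0.0, 0.2, 0.9],
    "transformer architectures": [0.8, 0.3, 0.1],
}

if __name__ == "__main__":
    # A query vector that points mostly along the first ("machine learning") axis.
    print(rank([1.0, 0.2, 0.0], DOCS))
```

Because ranking depends on vector direction rather than shared keywords, a query can match a document that uses entirely different wording, which is the property the description above refers to.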

Aiiot Talk

Aiiot Talk is an AI tool that focuses on Artificial Intelligence, Robotics, Technology, Internet of Things, Machine Learning, Business Technology, Data Security, and Marketing. The platform provides insights, articles, and discussions on the latest trends and applications of AI in various industries. Users can explore how AI is reshaping businesses, enhancing security measures, and revolutionizing technology. Aiiot Talk aims to educate and inform readers about the potential of AI and its impact on society and the future.

Techiexpert

Techiexpert.com is an AI tool that provides expert views on emerging technologies such as Artificial Intelligence, Internet of Things, Big Data, Cloud Data Analytics, Machine Learning, and Blockchain. The platform offers a wide range of articles, news, and resources related to the latest trends in the tech industry. Users can explore topics like cybersecurity, data science, deep learning, robotics, and more to stay informed and updated on technological advancements.

20 - Open Source AI Tools

Awesome-LLM-Compression

Awesome LLM compression research papers and tools to accelerate LLM training and inference.

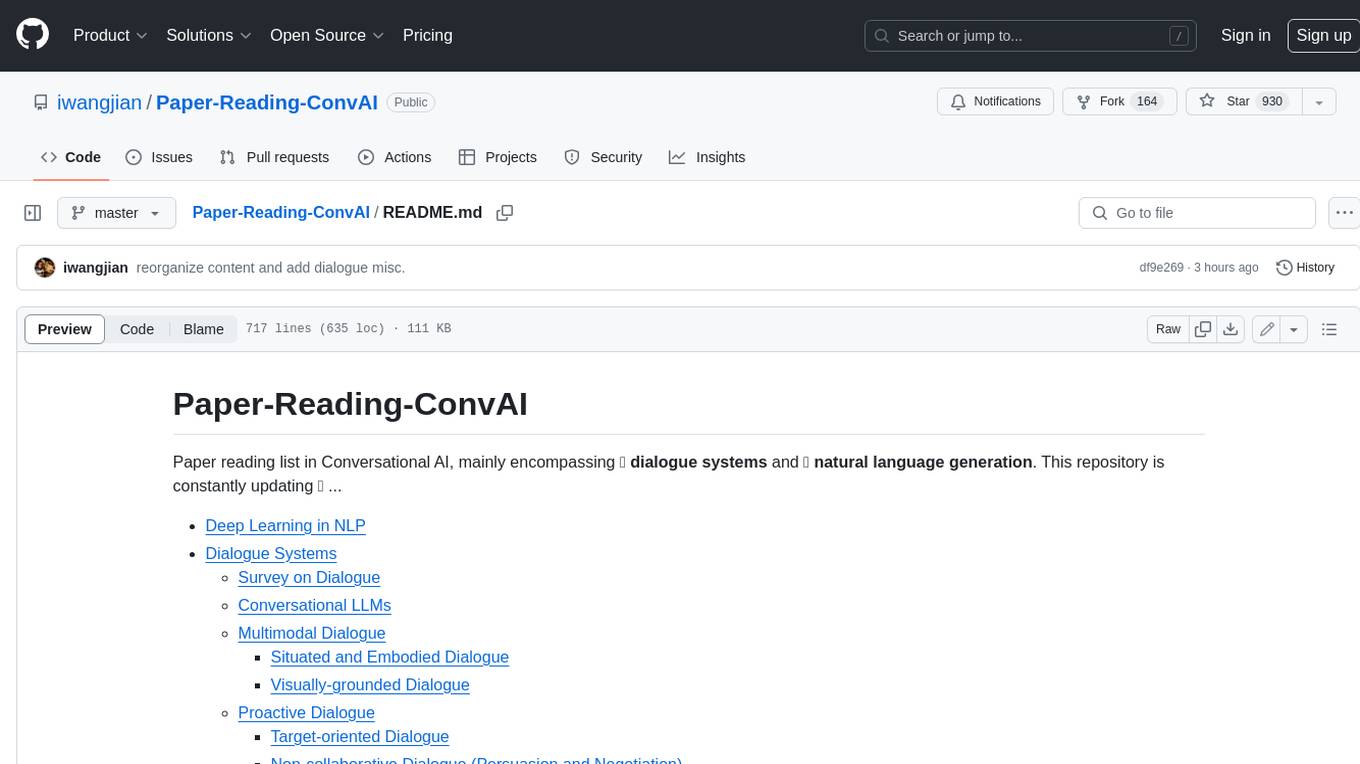

Paper-Reading-ConvAI

Paper-Reading-ConvAI is a repository that contains a list of papers, datasets, and resources related to Conversational AI, mainly covering dialogue systems and natural language generation. The repository is updated continually.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

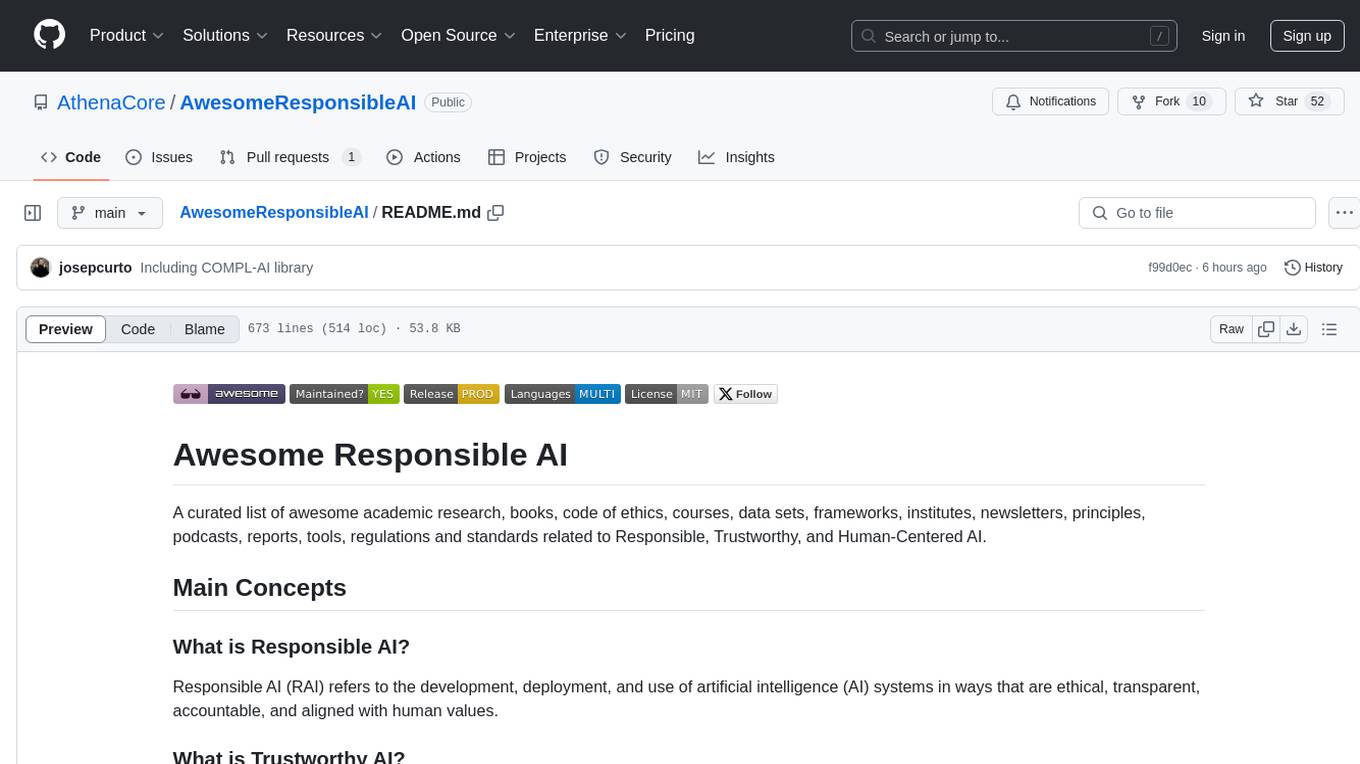

AwesomeResponsibleAI

Awesome Responsible AI is a curated list of academic research, books, code of ethics, courses, data sets, frameworks, institutes, newsletters, principles, podcasts, reports, tools, regulations, and standards related to Responsible, Trustworthy, and Human-Centered AI. It covers various concepts such as Responsible AI, Trustworthy AI, Human-Centered AI, Responsible AI frameworks, AI Governance, and more. The repository provides a comprehensive collection of resources for individuals interested in ethical, transparent, and accountable AI development and deployment.

Open-Medical-Reasoning-Tasks

Open Life Science AI: Medical Reasoning Tasks is a collaborative hub for developing cutting-edge reasoning tasks for Large Language Models (LLMs) in the medical, healthcare, and clinical domains. The repository aims to advance AI capabilities in healthcare by fostering accurate diagnoses, personalized treatments, and improved patient outcomes. It offers a diverse range of medical reasoning challenges such as Diagnostic Reasoning, Treatment Planning, Medical Image Analysis, Clinical Data Interpretation, Patient History Analysis, Ethical Decision Making, Medical Literature Comprehension, and Drug Interaction Assessment. Contributors can join the community of healthcare professionals, AI researchers, and enthusiasts to contribute to the repository by creating new tasks or improvements following the provided guidelines. The repository also provides resources including a task list, evaluation metrics, medical AI papers, and healthcare datasets for training and evaluation.

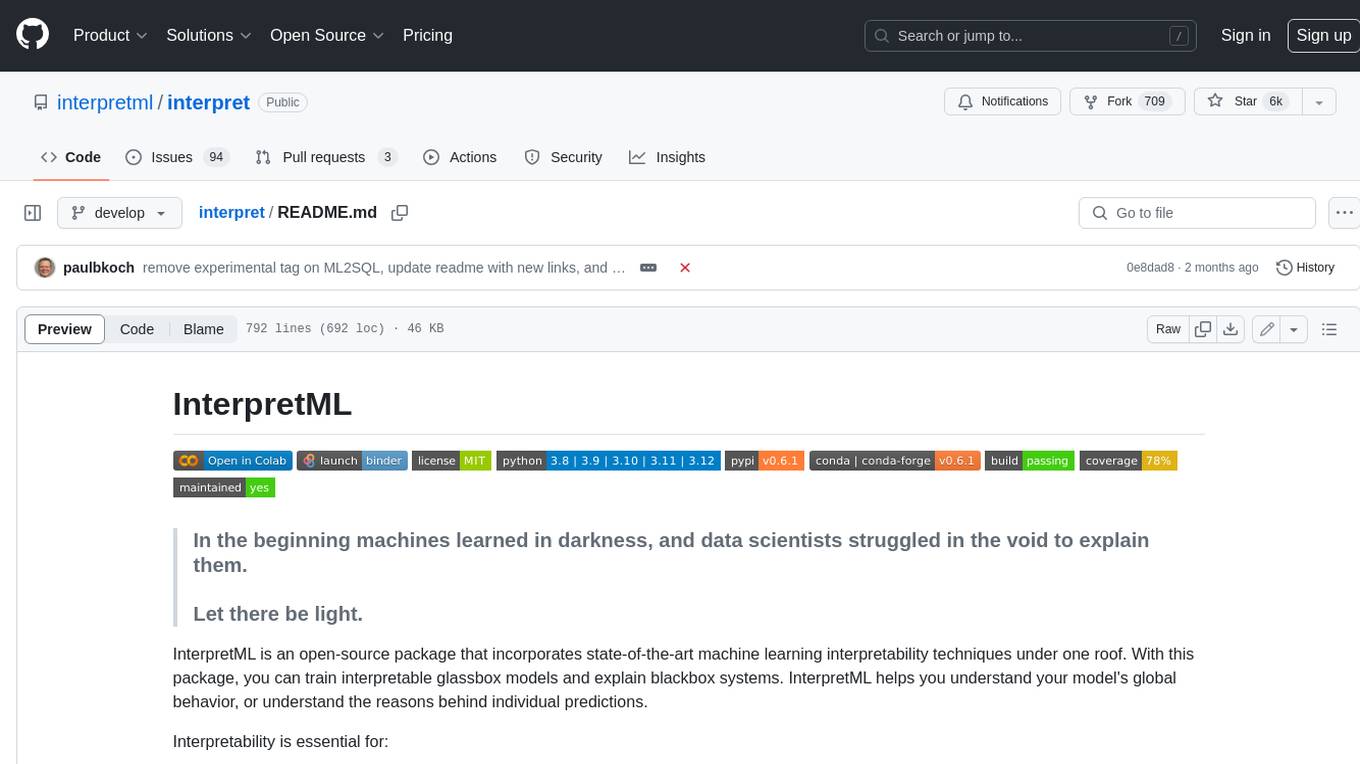

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for:

- Model debugging - Why did my model make this mistake?
- Feature Engineering - How can I improve my model?
- Detecting fairness issues - Does my model discriminate?
- Human-AI cooperation - How can I understand and trust the model's decisions?
- Regulatory compliance - Does my model satisfy legal requirements?
- High-risk applications - Healthcare, finance, judicial, ...

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

LLM-for-misinformation-research

LLM-for-misinformation-research is a curated paper list of misinformation research using large language models (LLMs). The repository covers methods for detection and verification, tools for fact-checking complex claims, decision-making and explanation, claim matching, post-hoc explanation generation, and other tasks related to combating misinformation. It includes papers on fake news detection, rumor detection, fact verification, and more, showcasing the application of LLMs in various aspects of misinformation research.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerability detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

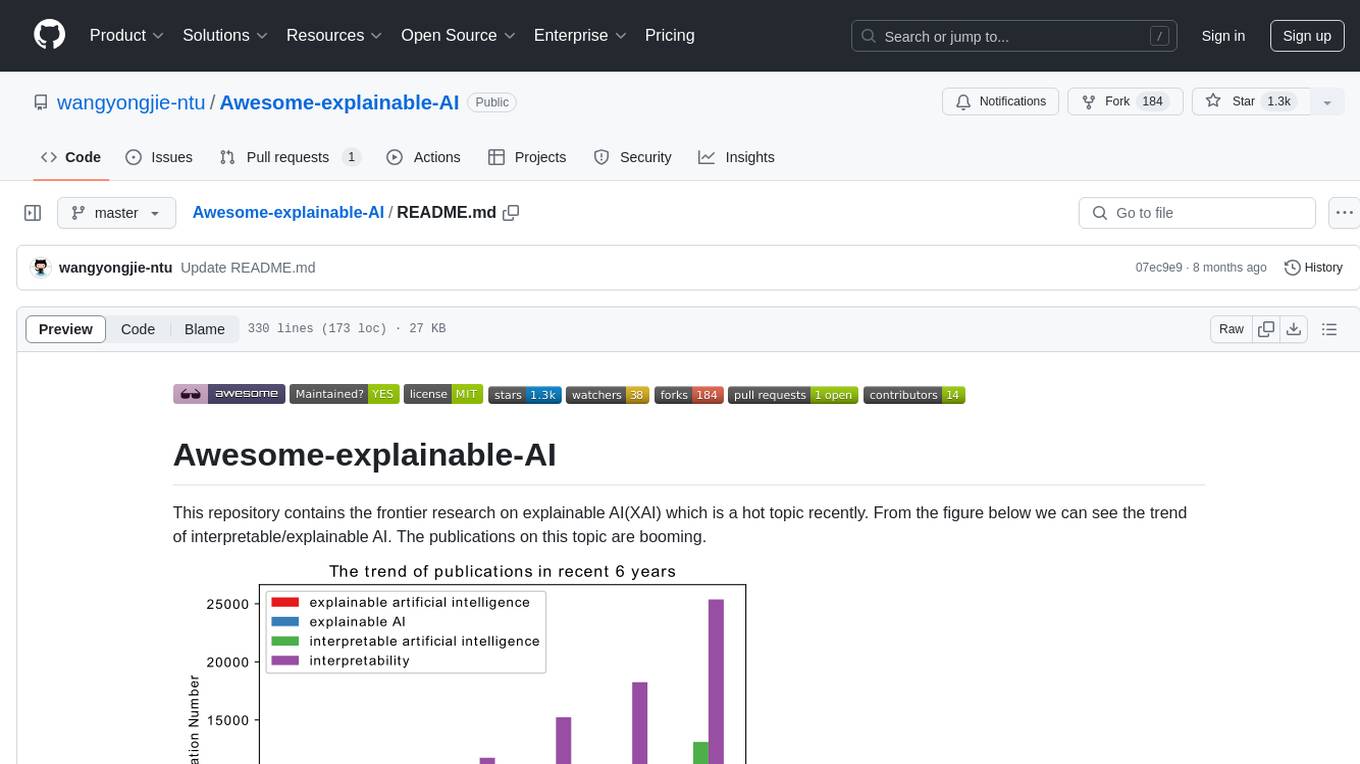

Awesome-explainable-AI

This repository contains frontier research on explainable AI (XAI), a hot topic in the field of artificial intelligence. It includes trends, use cases, survey papers, books, open courses, papers, and Python libraries related to XAI. The repository aims to organize and categorize publications on XAI, provide evaluation methods, and list various Python libraries for explainable AI.

imodelsX

imodelsX is a scikit-learn-friendly library that provides tools for explaining, predicting, and steering text models/data. It also includes a collection of utilities for getting started with text data.

**Explainable modeling/steering**

| Model | Reference | Output | Description |
|---|---|---|---|
| Tree-Prompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/tree_prompt) | Explanation + Steering | Generates a tree of prompts to steer an LLM (_Official_) |
| iPrompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/iprompt) | Explanation + Steering | Generates a prompt that explains patterns in data (_Official_) |
| AutoPrompt | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/autoprompt) | Explanation + Steering | Find a natural-language prompt using input-gradients (⌛ In progress) |
| D3 | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/d3) | Explanation | Explain the difference between two distributions |
| SASC | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/sasc) | Explanation | Explain a black-box text module using an LLM (_Official_) |
| Aug-Linear | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/aug_linear) | Linear model | Fit better linear model using an LLM to extract embeddings (_Official_) |
| Aug-Tree | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/aug_tree) | Decision tree | Fit better decision tree using an LLM to expand features (_Official_) |

**General utilities**

| Model | Reference | Description |
|---|---|---|
| LLM wrapper | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/llm) | Easily call different LLMs |
| Dataset wrapper | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/data) | Download minimally processed huggingface datasets |
| Bag of Ngrams | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/bag_of_ngrams) | Learn a linear model of ngrams |
| Linear Finetune | [Reference](https://github.com/microsoft/AugML/tree/main/imodelsX/linear_finetune) | Finetune a single linear layer on top of LLM embeddings |

**Related work**

* [imodels package](https://github.com/microsoft/interpretml/tree/main/imodels) (JOSS 2021) - interpretable ML package for concise, transparent, and accurate predictive modeling (sklearn-compatible).
* [Adaptive wavelet distillation](https://arxiv.org/abs/2111.06185) (NeurIPS 2021) - distilling a neural network into a concise wavelet model
* [Transformation importance](https://arxiv.org/abs/1912.04938) (ICLR 2020 workshop) - using simple reparameterizations, allows for calculating disentangled importances to transformations of the input (e.g. assigning importances to different frequencies)
* [Hierarchical interpretations](https://arxiv.org/abs/1807.03343) (ICLR 2019) - extends CD to CNNs / arbitrary DNNs, and aggregates explanations into a hierarchy
* [Interpretation regularization](https://arxiv.org/abs/2006.14340) (ICML 2020) - penalizes CD / ACD scores during training to make models generalize better
* [PDR interpretability framework](https://www.pnas.org/doi/10.1073/pnas.1814225116) (PNAS 2019) - an overarching framework for guiding and framing interpretable machine learning

awesome-mlops

Awesome MLOps is a curated list of tools related to Machine Learning Operations, covering areas such as AutoML, CI/CD for Machine Learning, Data Cataloging, Data Enrichment, Data Exploration, Data Management, Data Processing, Data Validation, Data Visualization, Drift Detection, Feature Engineering, Feature Store, Hyperparameter Tuning, Knowledge Sharing, Machine Learning Platforms, Model Fairness and Privacy, Model Interpretability, Model Lifecycle, Model Serving, Model Testing & Validation, Optimization Tools, Simplification Tools, Visual Analysis and Debugging, and Workflow Tools. The repository provides a comprehensive collection of tools and resources for individuals and teams working in the field of MLOps.

awesome-artificial-intelligence-guidelines

The 'Awesome AI Guidelines' repository aims to simplify the ecosystem of guidelines, principles, codes of ethics, standards, and regulations around artificial intelligence. It provides a comprehensive collection of resources addressing ethical and societal challenges in AI systems, including high-level frameworks, principles, processes, checklists, interactive tools, industry standards initiatives, online courses, research, and industry newsletters, as well as regulations and policies from various countries. The repository serves as a valuable reference for individuals and teams designing, building, and operating AI systems to navigate the complex landscape of AI ethics and governance.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.
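As a rough illustration of what scanning an execution trace can mean, the sketch below flags looping behavior (the same tool call repeated several times in a row) in a list of trace events. The event format, the `find_loops` function, and the threshold are hypothetical; this does not use Invariant's policy language or API.

```python
def find_loops(trace, threshold=3):
    """Flag runs where the same (tool, args) call repeats `threshold` or more times in a row.

    `trace` is a list of dicts with "tool" and "args" keys (a hypothetical format).
    Returns a list of (start_index, tool, run_length) tuples.
    """
    findings = []
    i = 0
    while i < len(trace):
        j = i
        # Extend the run while the next event is an identical call.
        while (
            j + 1 < len(trace)
            and trace[j + 1]["tool"] == trace[i]["tool"]
            and trace[j + 1]["args"] == trace[i]["args"]
        ):
            j += 1
        run_length = j - i + 1
        if run_length >= threshold:
            findings.append((i, trace[i]["tool"], run_length))
        i = j + 1  # Continue scanning after the current run.
    return findings


# Made-up trace: the agent fetches the same URL three times in a row.
TRACE = [
    {"tool": "search", "args": {"q": "weather"}},
    {"tool": "fetch", "args": {"url": "https://example.com"}},
    {"tool": "fetch", "args": {"url": "https://example.com"}},
    {"tool": "fetch", "args": {"url": "https://example.com"}},
    {"tool": "summarize", "args": {}},
]

if __name__ == "__main__":
    print(find_loops(TRACE))
```

A check like this only covers one of the issue types listed above; data leaks or prompt injections would need rules that follow how data flows between events rather than simple repetition.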

BTGenBot

BTGenBot is a tool that generates behavior trees for robots using lightweight large language models (LLMs) with a maximum of 7 billion parameters. It fine-tunes on a specific dataset, compares multiple LLMs, and evaluates generated behavior trees using various methods. The tool demonstrates the potential of LLMs with a limited number of parameters in creating effective and efficient robot behaviors.
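For readers unfamiliar with behavior trees, the sketch below shows the two classic composite nodes, a sequence (succeeds only if every child succeeds) and a fallback (succeeds as soon as one child succeeds), on a made-up robot task. The class names, the `state` dictionary, and the task itself are illustrative assumptions, not BTGenBot's output format.

```python
class Action:
    """Leaf node: logs its name, runs a function on the shared state, returns success."""

    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self, state):
        state["log"].append(self.name)
        return self.fn(state)


class Sequence:
    """Ticks children in order; stops and fails at the first child that fails."""

    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        return all(child.tick(state) for child in self.children)


class Fallback:
    """Ticks children in order; stops and succeeds at the first child that succeeds."""

    def __init__(self, *children):
        self.children = children

    def tick(self, state):
        return any(child.tick(state) for child in self.children)


def open_door(state):
    state["door_open"] = True
    return True


def pick_up(state):
    state["holding"] = True
    return True


def build_tree():
    """Hypothetical task: make sure the door is open, enter the room, pick up an object."""
    return Sequence(
        Fallback(
            Action("door_open?", lambda s: s["door_open"]),
            Action("open_door", open_door),
        ),
        Action("enter_room", lambda s: True),
        Action("pick_up_object", pick_up),
    )


if __name__ == "__main__":
    state = {"door_open": False, "holding": False, "log": []}
    print(build_tree().tick(state), state["log"])
```

The fallback node is what lets the robot skip `open_door` when the door is already open, which is the kind of conditional structure a generated tree has to get right.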

PromptChains

ChatGPT Queue Prompts is a collection of prompt chains designed to enhance interactions with large language models like ChatGPT. These prompt chains help build context for the AI before performing specific tasks, improving performance. Users can copy and paste prompt chains into the ChatGPT Queue extension to process prompts in sequence. The repository includes example prompt chains for tasks like conducting AI company research, building SEO optimized blog posts, creating courses, revising resumes, enriching leads for CRM, personal finance document creation, workout and nutrition plans, marketing plans, and more.
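The idea of processing a chain in sequence, with each prompt seeing the earlier exchanges, can be sketched as follows. The `run_chain` helper, the `echo_model` stub, and the example prompts (including the company name) are hypothetical stand-ins; a real setup would send the accumulated messages to an LLM rather than a local function, and this is not the ChatGPT Queue extension's code.

```python
def run_chain(prompts, model):
    """Send prompts one at a time, passing the growing history to `model`.

    `model` is any callable that takes the message history (a list of
    {"role", "content"} dicts) and returns a reply string.
    """
    history = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = model(history)
        history.append({"role": "assistant", "content": reply})
    return history


def echo_model(history):
    """Stub model (assumption): reports how many user turns it has seen so far."""
    user_turns = sum(1 for message in history if message["role"] == "user")
    return f"reply {user_turns} to: {history[-1]['content']}"


# Made-up chain: early prompts build context, the last one asks for the output.
CHAIN = [
    "You are researching an AI company. Ask me for its name.",
    "The company is ExampleCorp. List what you need to know.",
    "Summarize your findings in three bullet points.",
]

if __name__ == "__main__":
    for message in run_chain(CHAIN, echo_model):
        print(f"{message['role']}: {message['content']}")
```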

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

pytorch-grad-cam

This repository provides advanced AI explainability for PyTorch, offering state-of-the-art methods for Explainable AI in computer vision. It includes a comprehensive collection of Pixel Attribution methods for various tasks like Classification, Object Detection, Semantic Segmentation, and more. The package supports high performance with full batch image support and includes metrics for evaluating and tuning explanations. Users can visualize and interpret model predictions, making it suitable for both production and model development scenarios.

Awesome-Interpretability-in-Large-Language-Models

This repository is a collection of resources focused on interpretability in large language models (LLMs). It aims to help beginners get started in the area and keep researchers updated on the latest progress. It includes libraries, blogs, tutorials, forums, tools, programs, papers, and more related to interpretability in LLMs.

20 - OpenAI Gpts

Theoretical Research Advisor

Guides scientific investigations and theoretical research methodologies.

MetaPsych Assistant

Assists in psychological meta-analysis research with R language expertise.

Data Interpretation

Upload an image of a statistical analysis and we'll interpret the results: linear regression, logistic regression, ANOVA, cluster analysis, MDS, factor analysis, and many more.

AI-Powered SPSS Aid: Manuscript Interpretation

I assist with SPSS data interpretation for academic manuscripts.

Epidemiology

Expert in epidemiology, modeling disease spread and analyzing public health data.

Qually the Qualitative Researcher

Expert in health qualitative research and thematic analysis.

Qualitative Quest

I'm Qualitative Quest, here to offer concise advice on qualitative research methods, analysis techniques, and tools.

GUNSHIGPT

TikTok user data analysis and interpretation. (@apify actions) (Email me with any inquiries.)

Asesor Estadístico

Statistics expert ready to help with data analysis and interpretation.

Maurice

Your go-to for designing, analyzing, and recording experiments, and for generating your lab report.

Socrate

The tutor you have always wanted. Its strongest subjects are administrative law, civil law, privacy, and personal data protection. It also helps you understand complex texts. It is known for its question-and-answer teaching method (maieutics) and for its empathy.

EconoGraph

Expert in microeconomics: interprets graphs, explains concepts, and avoids giving direct exam answers.