Best AI tools for Identify Risk

20 - AI Tool Sites

Intelligencia AI

Intelligencia AI is a leading provider of AI-powered solutions for the pharmaceutical industry. Our suite of solutions helps de-risk and enhance clinical development and decision-making. We use a combination of data, AI, and machine learning to provide insights into the probability of success for drugs across multiple therapeutic areas. Our solutions are used by many of the top global pharmaceutical companies to improve their R&D productivity and make more informed decisions.

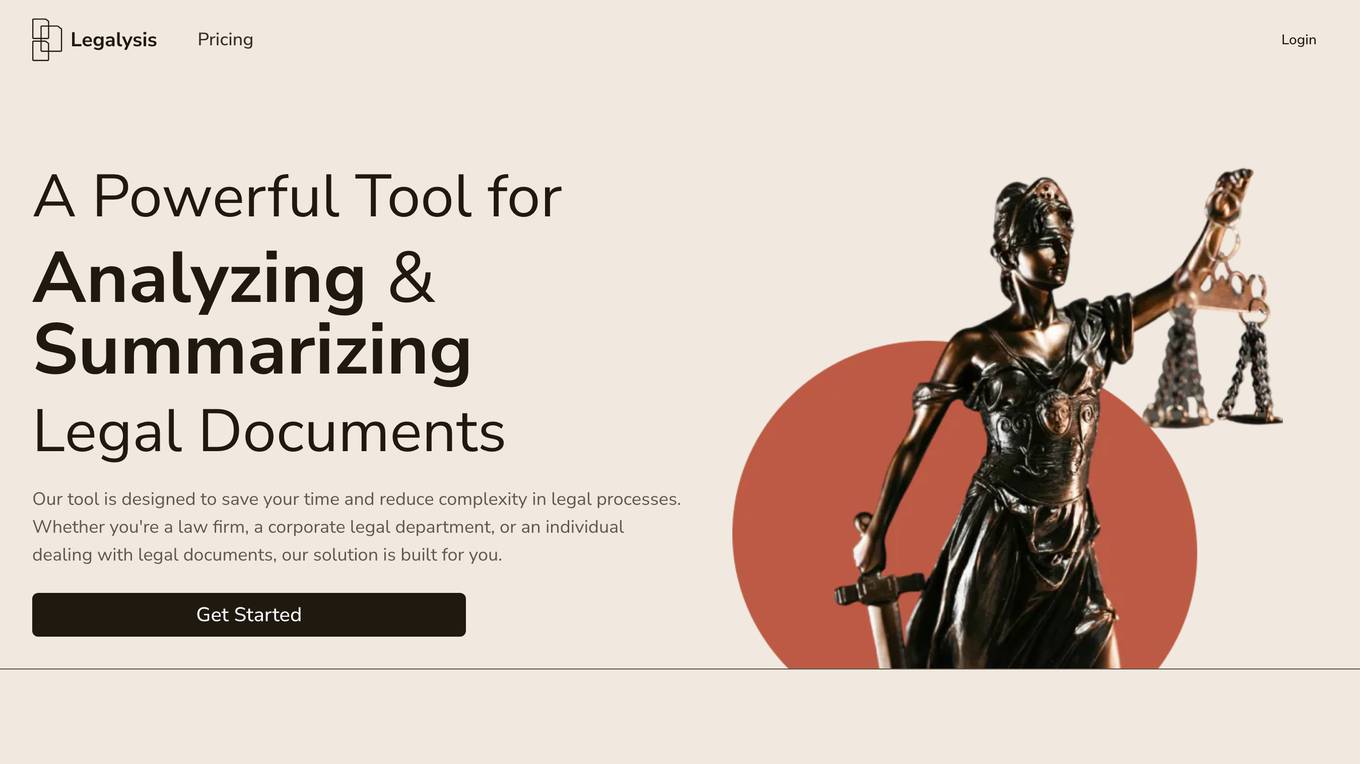

Legalysis

Legalysis is a powerful tool for analyzing and summarizing legal documents. It is designed to save time and reduce complexity in legal processes. The tool uses advanced AI technology to examine contracts and other legal documents in depth, detecting potential risks and issues with impressive accuracy. It also converts dense, lengthy legal documents into brief, one-page summaries, making them easier to understand. Legalysis is a valuable tool for law firms, corporate legal departments, and individuals dealing with legal documents.

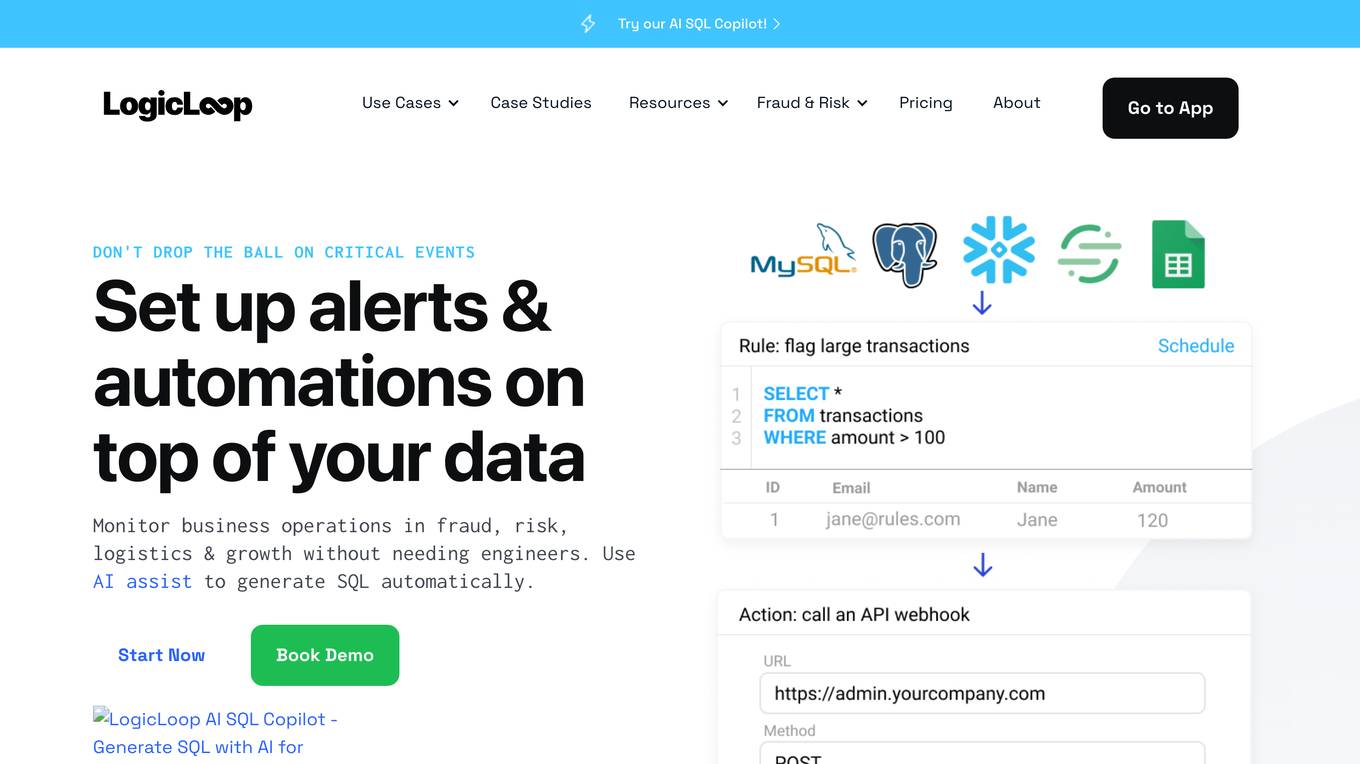

LogicLoop

LogicLoop is an all-in-one operations automation platform that allows users to set up alerts and automations on top of their data. It is designed to help businesses monitor their operations, identify risks, and take action to prevent problems. LogicLoop can be used by businesses of all sizes and industries, and it is particularly well-suited for businesses that are looking to improve their efficiency and reduce their risk.

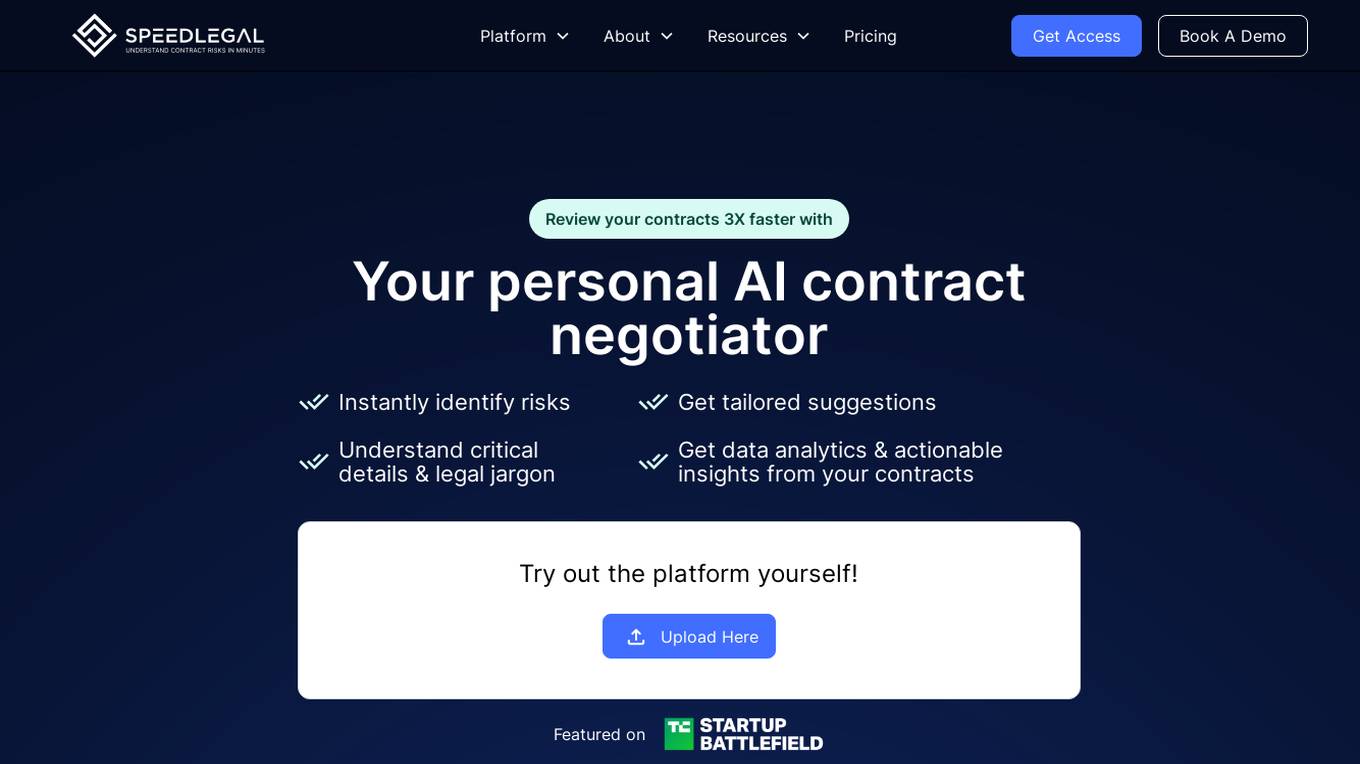

SpeedLegal

SpeedLegal is a technology startup that uses machine learning (specifically deep learning, LLMs, and generative AI) to highlight the terms and key risks of any contract. We analyze your documents and send you a simplified report so you can make a more informed decision before signing on the dotted line.

ThetaRay

ThetaRay is an AI-powered transaction monitoring platform designed for fintechs and banks to detect threats and ensure trust in global payments. It uses unsupervised machine learning to efficiently detect anomalies in data sets and pinpoint suspected cases of money laundering with minimal false positives. The platform helps businesses satisfy regulators, save time and money, and drive financial growth by identifying risks accurately, boosting efficiency, and reducing false positives.

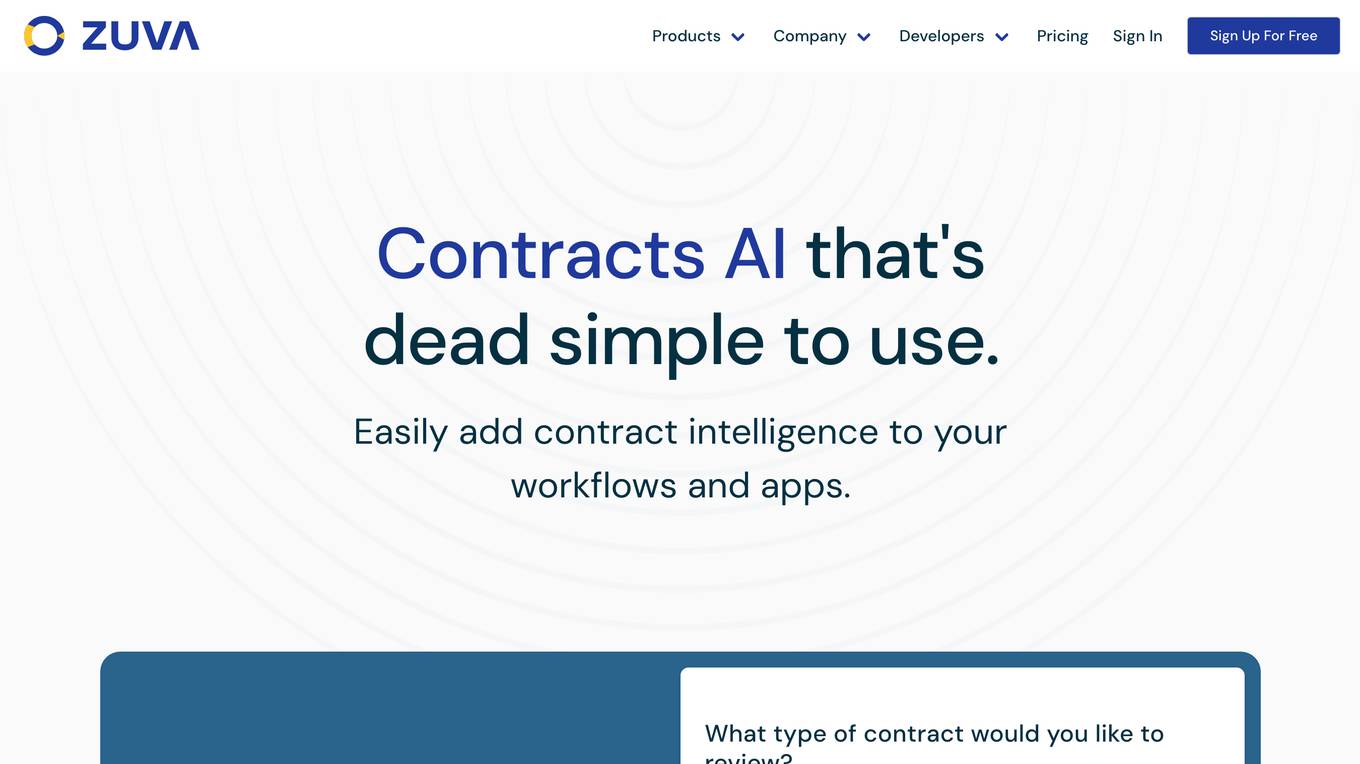

DocAI

DocAI is an API-driven platform that lets you add contract AI to your applications without building it from the ground up. It offers more than 1,300 out-of-the-box AI fields, built and trained by experienced lawyers and subject matter experts, that identify and extract common legal clauses, provisions, and data points from unstructured documents and contracts, including ones written in non-standard language. The experience is low-code: new fields can be trained with subject matter expertise alone, without a data scientist. Deployment is flexible and scalable, with options in the Zuva hosted cloud or on-premises, across multiple geographical regions.

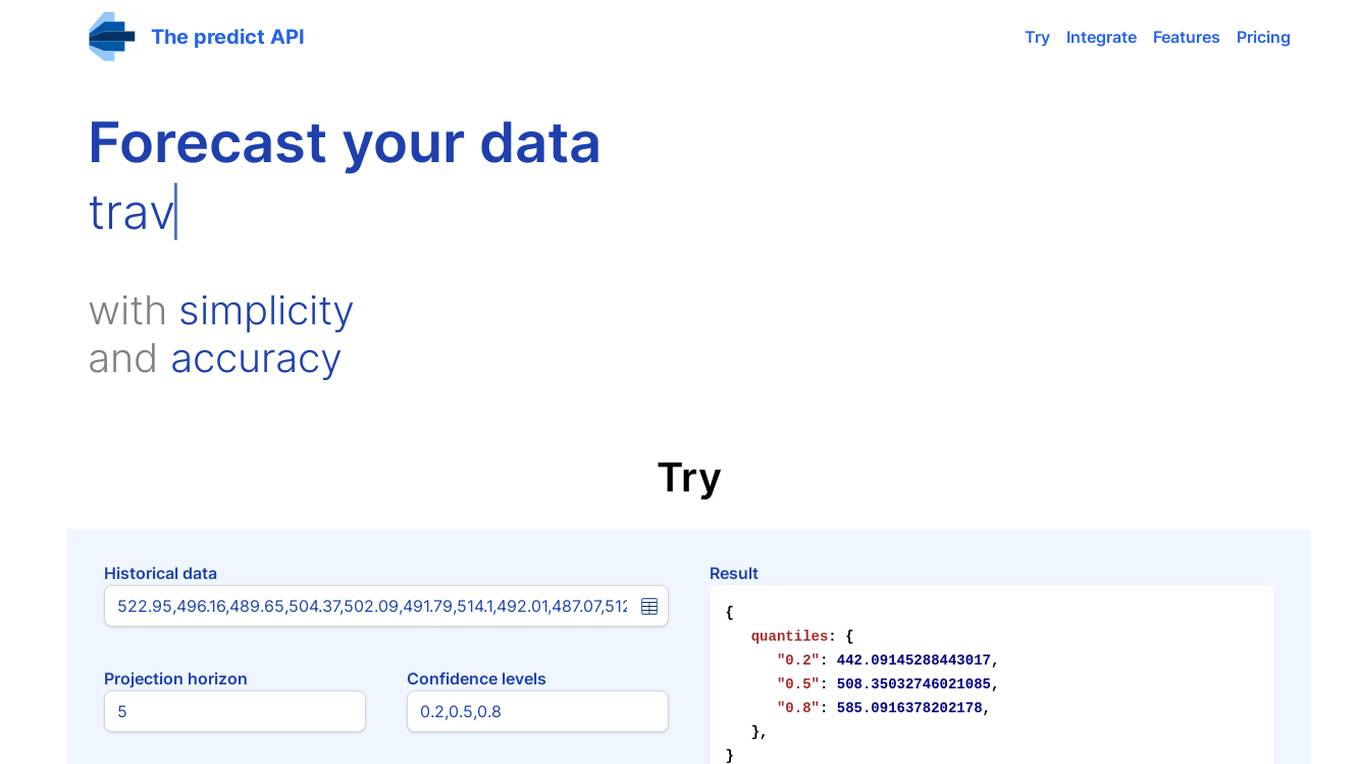

Predict API

The Predict API is a powerful tool that allows you to forecast your data with simplicity and accuracy. It uses the latest advancements in stochastic modeling and machine learning to provide you with reliable projections. The API is easy to use and can be integrated with any application. It is also highly scalable, so you can use it to forecast large datasets. With the Predict API, you can gain valuable insights into your data and make better decisions.

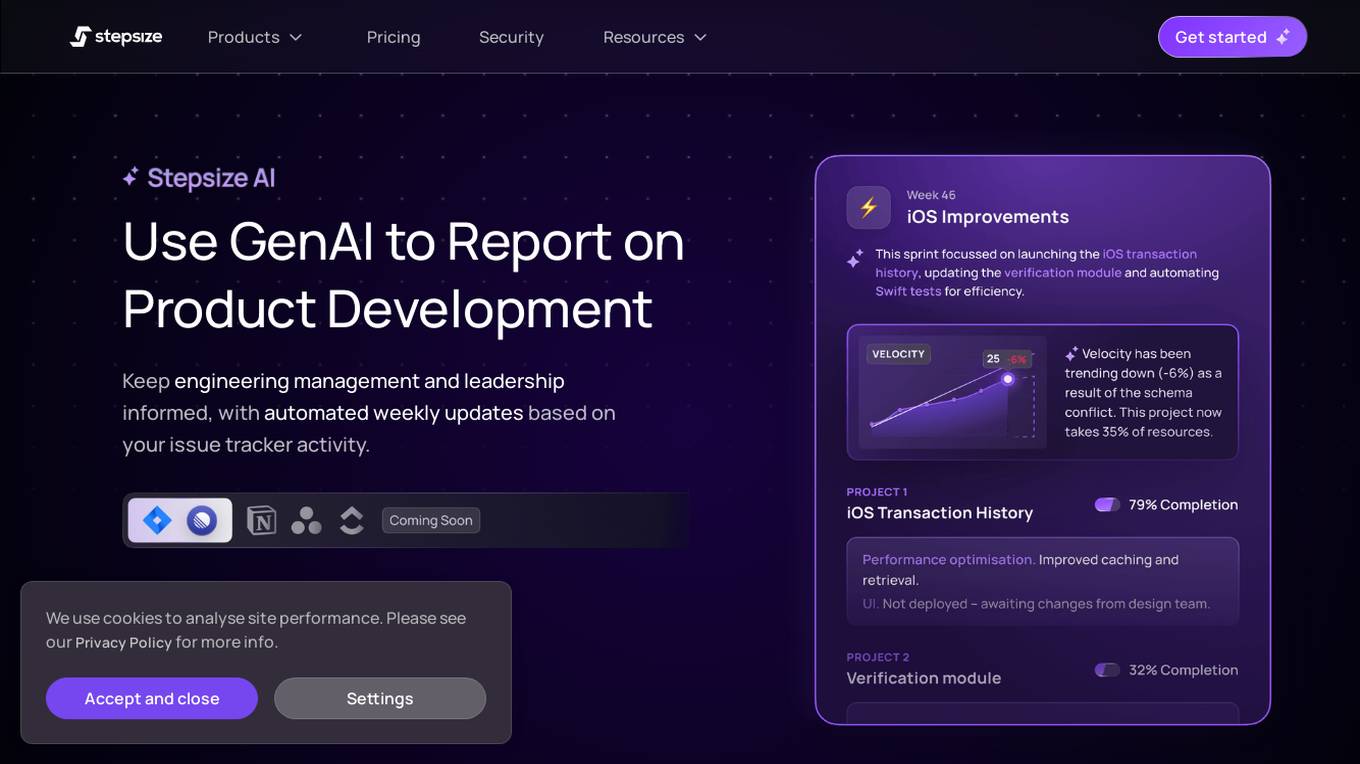

Stepsize AI

Stepsize AI is an AI-powered reporting tool for software development teams. It analyzes issue tracker activity to generate automated weekly updates on team and project progress. Stepsize AI provides metrics with automatic commentary, project-level AI insights, and intelligent delivery risk surfacing. It offers tailored insights, complete visibility, and unified focus, helping teams stay aligned and make timely decisions.

iSEM.ai

iSEM.ai is an end-to-end AI-powered AML and fraud detection solution that helps users identify risks, investigate anomalies, and streamline reporting. The platform combines human intelligence with machine technology to adapt, reduce risks, and improve efficiency in combating financial crime. iSEM.ai offers tailored solutions for client data management, onboard monitoring, client profile management, watchlist monitoring, transaction monitoring, transaction screening, and fraud monitoring. The application is designed to help businesses comply with regulations, detect suspicious activities, and ensure seamless protection at every step.

Saifr

Saifr is an AI-powered marketing compliance solution that simplifies compliance reviews and content creation. Drawing on accurate data and decades of insights, Saifr's AI technology identifies compliance risks, proposes alternative phrasing, and streamlines compliance workflows. The platform aims to improve operational efficiency, safeguard against risks, and speed up compliance reviews so that users can focus on creative work.

Limbic

Limbic is a clinical AI application designed for mental healthcare providers to save time, improve outcomes, and maximize impact. It offers a suite of tools developed by a team of therapists, physicians, and PhDs in computational psychiatry. Limbic is known for its evidence-based approach, safety focus, and commitment to patient care. The application leverages AI technology to enhance various aspects of the mental health pathway, from assessments to therapeutic content delivery. With a strong emphasis on patient safety and clinical accuracy, Limbic aims to support clinicians in meeting the rising demand for mental health services while improving patient outcomes and preventing burnout.

Fordi

Fordi is an AI management tool that helps businesses avoid risks in real time. It provides a comprehensive view of all AI systems, allowing businesses to identify and mitigate risks before they cause damage. Fordi also provides continuous monitoring and alerting, so businesses can be sure that their AI systems are always operating safely.

Privado AI

Privado AI is a privacy engineering tool that bridges the gap between privacy compliance and software development. It automates personal data visibility and privacy governance, helping organizations to identify privacy risks, track data flows, and ensure compliance with regulations such as CPRA, MHMDA, FTC, and GDPR. The tool provides real-time visibility into how personal data is collected, used, shared, and stored by scanning the code of websites, user-facing applications, and backend systems. Privado offers features like Privacy Code Scanning, programmatic privacy governance, automated GDPR RoPA reports, risk identification without assessments, and developer-friendly privacy guidance.

Pyrafect

Pyrafect is an AI tool that combines AI and risk-based analysis to help users identify high-risk issues and streamline bug fixes. By leveraging AI technology, Pyrafect aims to assist users in prioritizing tasks and delivering a stable user experience. The tool offers early access to its features, allowing users to proactively manage risks and focus on what matters most.

Oatmeal Health

Oatmeal Health is an AI-powered lung cancer screening and diagnosis platform designed to help Federally Qualified Health Centers (FQHCs) identify high-risk patients, provide reimbursed lung cancer screenings, and share revenue without increasing the clinical team's workload. The platform combines AI-driven patient identification, virtual NPs and AI for screening and navigation, a shared reimbursement revenue model, and research-backed innovation to advance early detection tools. Oatmeal Health aims to improve patient engagement, clinical efficiency, quality, compliance, and revenue impact for FQHCs, with no implementation cost.

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Dataminr

Dataminr is a leading AI company that provides real-time event, risk, and threat detection. Its revolutionary real-time AI Platform discovers the earliest signals of events, risks, and threats from within public data. Dataminr's products deliver critical information first—so organizations can respond quickly and manage crises effectively.

Alphy

Alphy is a modern AI tool for communication compliance that helps companies detect and prevent harmful and unlawful language in their communication. The AI classifier has a 94% accuracy rate and can identify over 40 high-risk categories of harmful language. By using Reflect AI, companies can shield themselves from reputational, ethical, and legal risks, ensuring compliance and preventing costly litigation.

ClosedLoop

ClosedLoop is a healthcare data science platform that helps organizations improve outcomes and reduce unnecessary costs with accurate, explainable, and actionable predictions of individual-level health risks. The platform provides a comprehensive library of easily modifiable templates for healthcare-specific predictive models, machine learning (ML) features, queries, and data transformation, which accelerates time to value. ClosedLoop's AI/ML platform is designed exclusively for the data science needs of modern healthcare organizations and helps deliver measurable clinical and financial impact.

HealthITAnalytics

HealthITAnalytics is a leading source of news, insights, and analysis on the use of information technology in healthcare. The website covers a wide range of topics, including artificial intelligence, machine learning, data analytics, and population health management. HealthITAnalytics also provides resources for healthcare professionals, such as white papers, webinars, and podcasts.

0 - Open Source AI Tools

20 - OpenAI GPTs

Startup Critic

Apply gold-standard startup valuation and assessment methods to identify risks and gaps in your business model and product ideas.

Fluffy Risk Analyst

A cute sheep expert in risk analysis, providing downloadable checklists.

Project Risk Assessment Advisor

Assesses project risks to mitigate potential organizational impacts.

Diabetes Risk Evaluator

A professional, medical-focused tool for diabetes risk assessment.

Brand Safety Audit

Get a detailed risk analysis for public relations, marketing, and internal communications, identifying challenges and negative impacts to refine your messaging strategy.

Technical Service Agreement Review Expert

Reviews your tech service agreements 24/7, finds legal risks, and gives suggestions. (Powered by LegalNow ai.legalnow.xyz)

Terms & Conditions Reader

A helper for reading and summarizing terms and conditions (or terms of service).

Lux Market Abuse Advisor

Luxembourg Market Abuse Specialist offering guidance on regulations.

EU CRA Assistant

Expert in the EU Cyber Resilience Act, providing clear explanations and guidance.

Asistente Ley 406 y Fallo de inconstitucionalidad

Expert in analyzing Contract Law 406 (Ley Contrato 406); formal and accessible, and avoids speculation.

Otto the AuditBot

An expert in audit and compliance, providing precise accounting guidance.