Best AI tools for Fuse Pokemon

1 - AI Tool Sites

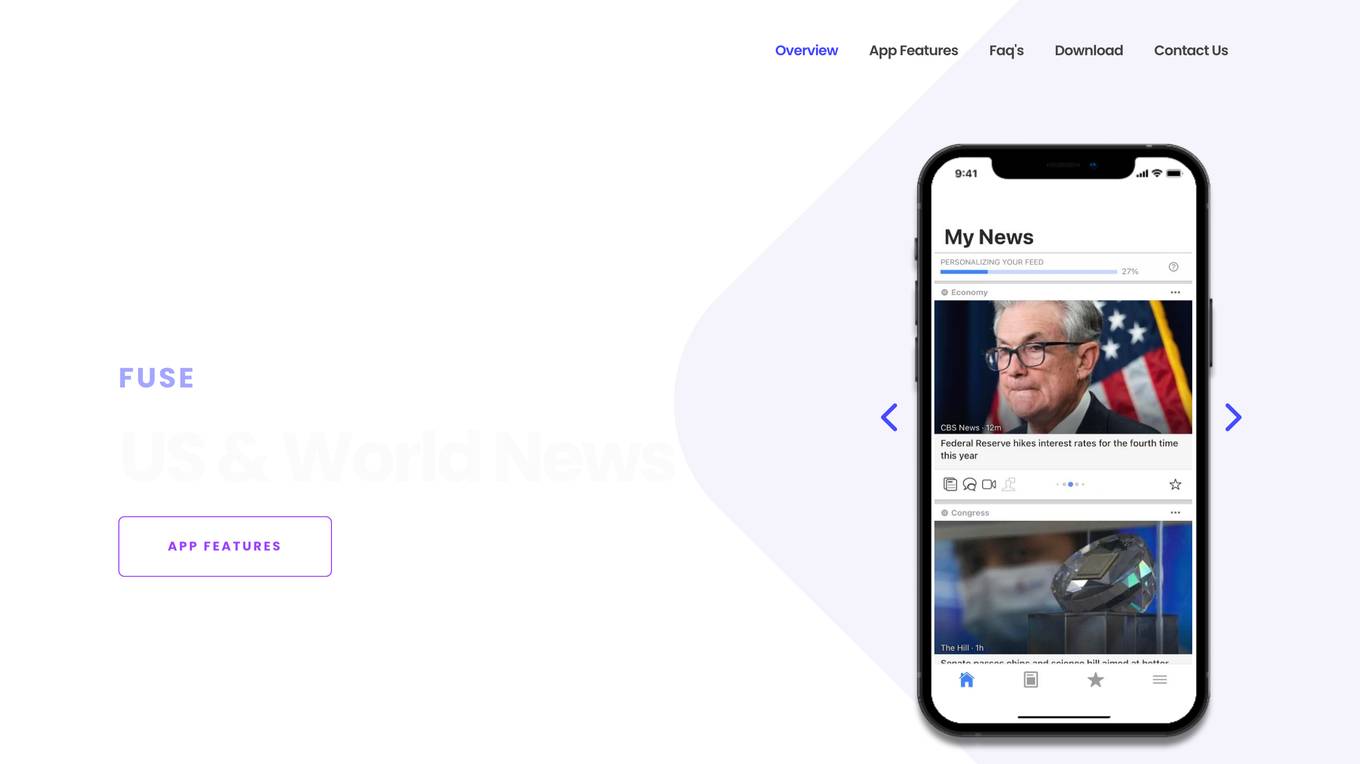

Fuse

Fuse is a smart news aggregator that delivers personalized, comprehensive coverage of top news stories from the U.S. and around the world. Each story is covered from every angle, with articles, videos, and opinion pieces from trusted sources. Fuse uses AI/ML algorithms to continuously collect, organize, prioritize, and personalize news stories: articles, videos, and opinions are gathered from all the major news outlets and automatically grouped by story and topic.

site: 0

0 - Open Source AI Tools

No tools available

2 - OpenAI GPTs

PokedexGPT V3

Contains the entire Pokemon universe: Pokemon from all generations, items, abilities, berries, eggs, region details, and more. Features include battle simulation, uploading an image for the Pokedex to identify, fusing Pokemon, and free exploration. Type "Menu" to see the full list of options.

gpt: 1K+