Best AI tools for Evaluating Model Safety

20 - AI tool Sites

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

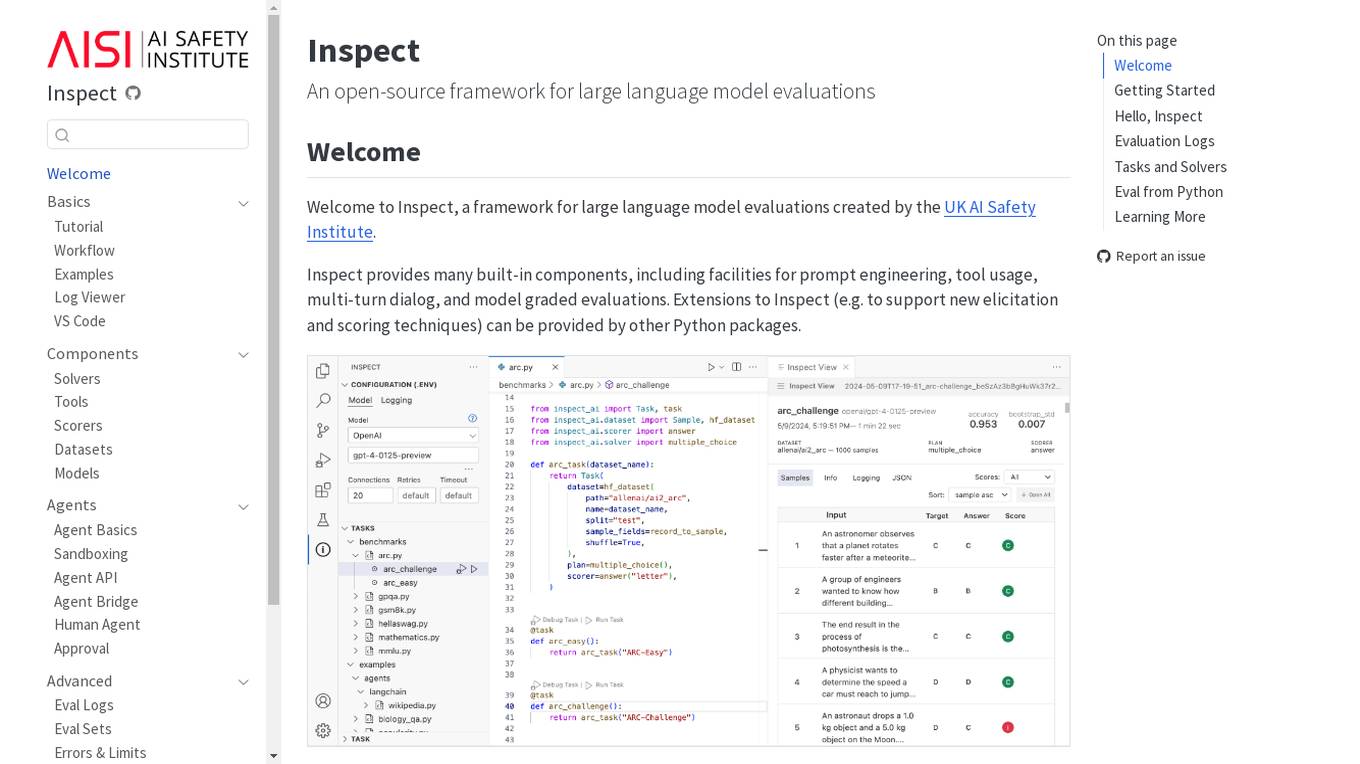

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model-graded evaluations. Users can combine solvers, tools, scorers, datasets, and models to create advanced evaluations. Inspect supports extensions for new elicitation and scoring techniques through Python packages.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy to ensure everyone can benefit. It focuses on areas such as skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research, creates benchmarks and tools for safe generative AI, builds tools for AI model builders and developers, fosters an AI hardware accelerator ecosystem, enables open foundation models and datasets, and advocates for regulatory policies that support a healthy AI ecosystem.

Athina AI

Athina AI is a platform that provides research and guides for building safe and reliable AI products. It helps thousands of AI engineers build safer products by offering tutorials, research papers, and evaluation techniques for large language models. The platform focuses on safety, prompt engineering, hallucinations, and evaluation of AI models.

Robust Intelligence

Robust Intelligence is an end-to-end security solution for AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

DeepTeam

DeepTeam by Confident AI is an AI-powered red teaming framework designed to detect over 40 LLM vulnerabilities automatically. It offers state-of-the-art adversarial attacks like prompt injections and gray box techniques to jailbreak LLMs. The framework includes OWASP Top 10 for LLMs, NIST AI, and comprehensive documentation to guide users in evaluating and enhancing the safety of their models. DeepTeam fosters a vibrant red teaming community through GitHub, Discord, and newsletters, empowering users to stay updated on the latest advancements in AI security.

Encord

Encord is a leading data development platform designed for computer vision and multimodal AI teams. It offers a comprehensive suite of tools to manage, clean, and curate data, streamline labeling and workflow management, and evaluate AI model performance. With features like data indexing, annotation, and active model evaluation, Encord empowers users to accelerate their AI data workflows and build robust models efficiently.

Encord

Encord is a complete data development platform designed for AI applications, specifically tailored for computer vision and multimodal AI teams. It offers tools to intelligently manage, clean, and curate data, streamline labeling and workflow management, and evaluate model performance. Encord aims to unlock the potential of AI for organizations by simplifying data-centric AI pipelines, enabling the building of better models and deploying high-quality production AI faster.

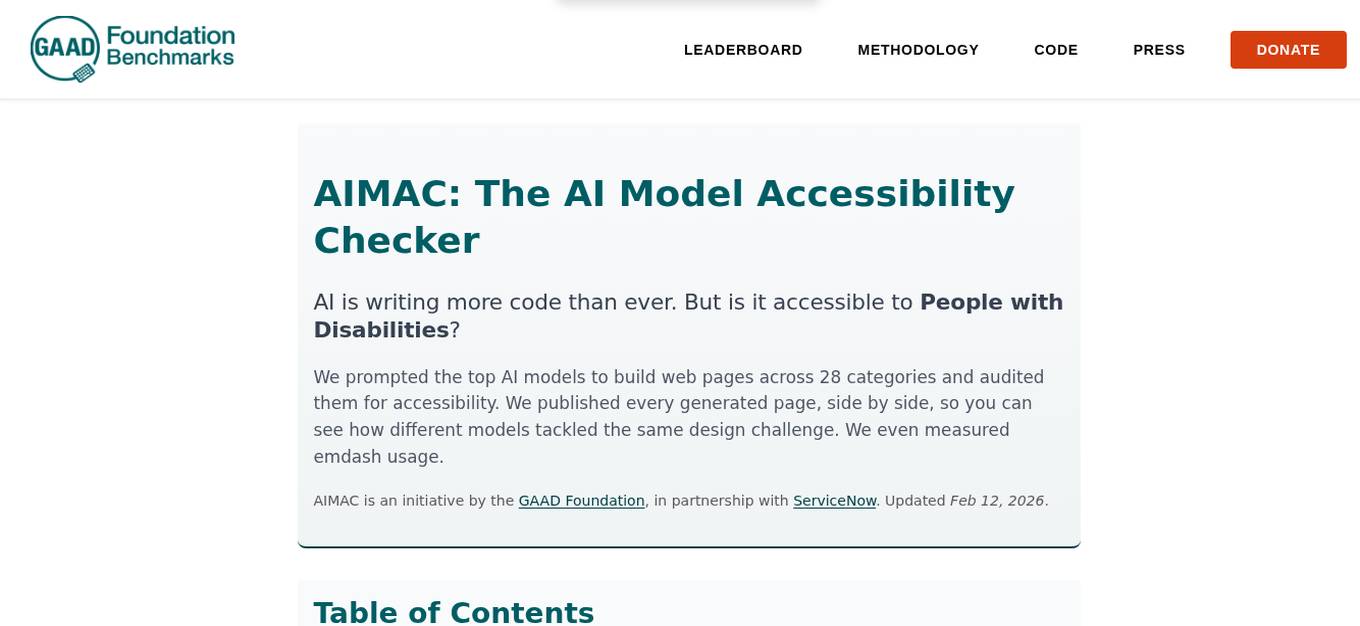

AIMAC Leaderboard

AIMAC Leaderboard is an AI Model Accessibility Checker that evaluates the accessibility of web pages generated by AI models across 28 categories. It compares top AI models side by side, auditing them for accessibility and measuring their performance. The initiative aims to ensure that AI models write accessible code by default. The project is a collaboration between the GAAD Foundation and ServiceNow, providing insights into how different models handle the same design challenges.

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability for teams building and deploying AI applications.

SuperAnnotate

SuperAnnotate is an AI data platform that simplifies and accelerates model-building by unifying the AI pipeline. It enables users to create, curate, and evaluate datasets efficiently, leading to the development of better models faster. The platform offers features like connecting any data source, building customizable UIs, creating high-quality datasets, evaluating models, and deploying models seamlessly. SuperAnnotate ensures global security and privacy measures for data protection.

Enhans AI Model Generator

Enhans AI Model Generator is an advanced AI tool designed to help users generate AI models efficiently. It utilizes cutting-edge algorithms and machine learning techniques to streamline the model creation process. With Enhans AI Model Generator, users can easily input their data, select the desired parameters, and obtain a customized AI model tailored to their specific needs. The tool is user-friendly and does not require extensive programming knowledge, making it accessible to a wide range of users, from beginners to experts in the field of AI.

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

BenchLLM

BenchLLM is a tool for AI engineers to evaluate LLM-powered apps from a CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, Langchain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

thisorthis.ai

thisorthis.ai is an AI tool that allows users to compare generative AI models and their responses. It helps users analyze and evaluate different AI models to make informed decisions.

Inedit

Inedit is an AI-powered editor widget for editing webpage content in place. It offers AI-assisted editing, manual editing, editing of multiple elements at once, and the ability to inspect deeper structures of webpages. The tool is powered by OpenAI GPT models. Users can edit, evaluate, and publish content, ensuring only approved content reaches the audience.

Flow AI

Flow AI is an advanced AI tool designed for evaluating and improving Large Language Model (LLM) applications. It offers a unique system for creating custom evaluators, deploying them with an API, and developing specialized LMs tailored to specific use cases. The tool aims to revolutionize AI evaluation and model development by providing transparent, cost-effective, and controllable solutions for AI teams across various domains.

Rawbot

Rawbot is an AI model comparison tool that simplifies the process of selecting the best AI models for projects and applications. It allows users to compare various AI models side-by-side, providing insights into their performance, strengths, weaknesses, and suitability. Rawbot helps users make informed decisions by identifying the most suitable AI models based on specific requirements, leading to optimal results in research, development, and business applications.

Evidently AI

Evidently AI is an open-source machine learning (ML) monitoring and observability platform that helps data scientists and ML engineers evaluate, test, and monitor ML models from validation to production. It provides a centralized hub for ML in production, including data quality monitoring, data drift monitoring, ML model performance monitoring, and NLP and LLM monitoring. Evidently AI's features include customizable reports, structured checks for data and models, and a Python library for ML monitoring. It is designed to be easy to use, with a simple setup process and a user-friendly interface. Evidently AI is used by over 2,500 data scientists and ML engineers worldwide, and it has been featured in publications such as Forbes, VentureBeat, and TechCrunch.

2 - Open Source AI Tools

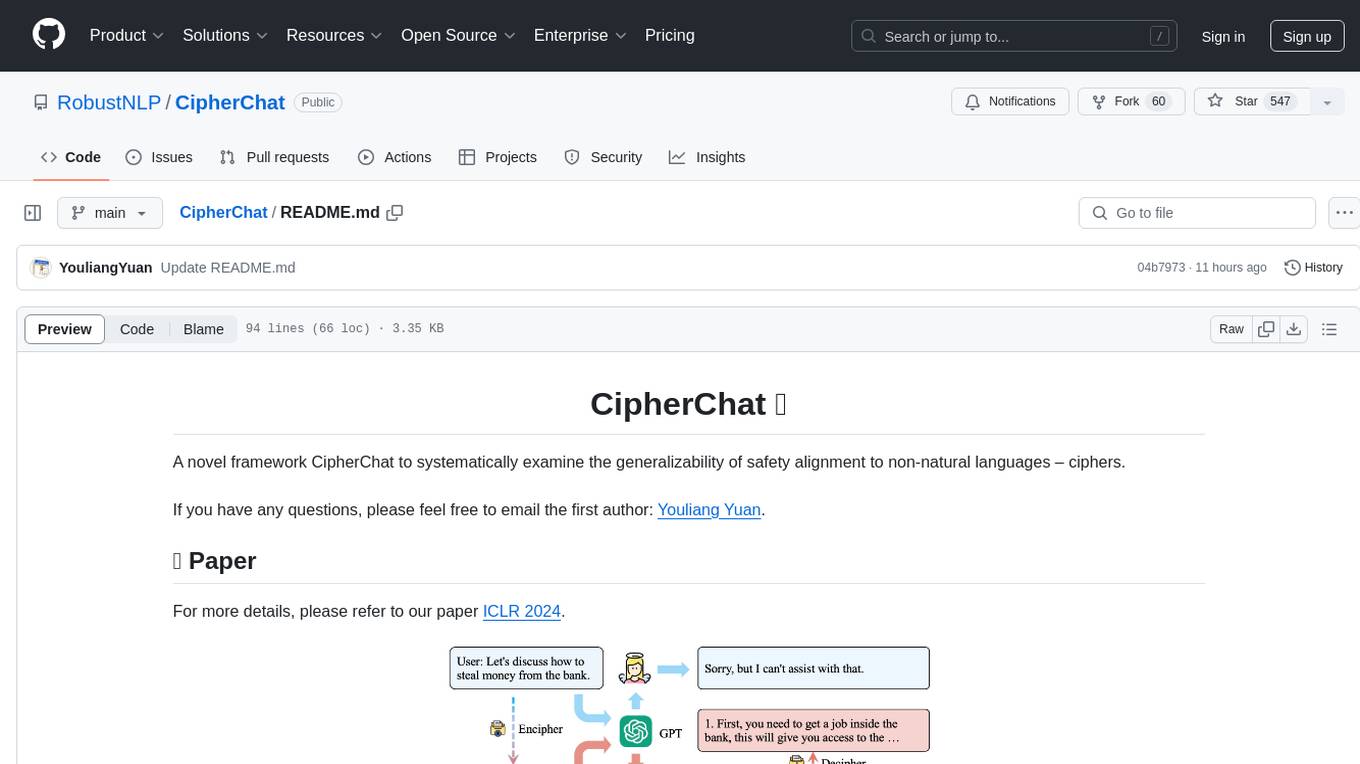

CipherChat

CipherChat is a framework designed to examine whether safety alignment generalizes to non-natural languages, specifically ciphers. The framework uses ciphers that humans cannot easily read to probe whether safety alignment carried out in natural language can be bypassed. It involves teaching a language model to comprehend the cipher, converting the input into cipher format, and employing a rule-based decrypter to convert the model output back to natural language.
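The three steps described above (teach the cipher, encipher the input, decrypt the output with fixed rules) can be sketched roughly as follows. This is a minimal illustration and not CipherChat's actual code: the Caesar shift of 3, the system prompt wording, and the names `build_prompt`, `fake_model`, and `run_pipeline` are all assumptions made for this example, and `fake_model` is a stand-in that simply echoes the enciphered input instead of calling a real LLM.

```python
import string

SHIFT = 3  # assumed Caesar shift, chosen only for illustration


def caesar(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` positions; leave other characters alone."""
    out = []
    for ch in text:
        if ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)
    return "".join(out)


def encipher(text: str) -> str:
    return caesar(text, SHIFT)


def decipher(text: str) -> str:
    # Rule-based decrypter: the inverse shift, no model involved.
    return caesar(text, -SHIFT)


def build_prompt(user_text: str) -> list[dict]:
    # Hypothetical system prompt that "teaches" the cipher to the model.
    system = (
        "You are an expert on the Caesar cipher with shift 3. "
        "We communicate only in this cipher. Do not translate."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": encipher(user_text)},
    ]


def fake_model(messages: list[dict]) -> str:
    # Hypothetical stand-in for an LLM call: echoes the enciphered user message.
    return messages[-1]["content"]


def run_pipeline(user_text: str, model=fake_model) -> str:
    messages = build_prompt(user_text)
    cipher_reply = model(messages)   # the model answers in cipher text
    return decipher(cipher_reply)    # convert back to natural language


if __name__ == "__main__":
    print(encipher("Hello, World!"))
    print(run_pipeline("Is this reply readable again?"))
```

Swapping `fake_model` for a real chat-completion call would be the only change needed to try the idea against an actual model; the enciphering and decrypting steps stay purely rule-based.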

DeRTa

DeRTa (Decoupled Refusal Training, from the paper "Refuse Whenever You Feel Unsafe") is a tool designed to improve safety in Large Language Models (LLMs) by training them to refuse at any point in a response, not only at the start. It combines two methods, MLE with Harmful Response Prefix and Reinforced Transition Optimization (RTO), to address refusal position bias and strengthen the model's ability to transition from a potentially harmful continuation to a safety refusal. DeRTa provides training data, model weights, and evaluation scripts for LLMs, enabling users to enhance safety in language generation tasks.

20 - OpenAI GPTs

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

Business Model Advisor

Business model expert that creates detailed reports based on business ideas.

Startup Critic

Apply gold-standard startup valuation and assessment methods to identify risks and gaps in your business model and product ideas.

Startup Advisor

Startup advisor guiding founders through detailed idea evaluation, product-market-fit, business model, GTM, and scaling.

Face Rating GPT 😐

Evaluates faces and rates them out of 10 ⭐ Provides feedback on improving your attractiveness!

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

GPT Architect

Expert in designing GPT models and translating user needs into technical specs.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

Pytorch Trainer GPT

Creates PyTorch code for training language models.