Best AI Tools for Deploying Models on Mobile

20 - AI tool Sites

Ultralytics

Ultralytics is a Vision AI tool that lets users turn images into trained models and useful insights without writing any code. It offers a drag-and-drop interface for data input, model training, and deployment, making it accessible to startups, enterprises, data scientists, ML engineers, hobbyists, researchers, and academics. With Ultralytics YOLO, the flagship tool, users can train machine learning models quickly, select from pre-built models, test models on mobile devices, and deploy custom models to various formats. The tool is powered by the open-source Ultralytics Python package and focuses on computer vision, object detection, and image classification.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem in which model makers, cloud providers, and runtime and SDK partners can deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize them for deployment, and access a variety of AI services and resources. The platform serves industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

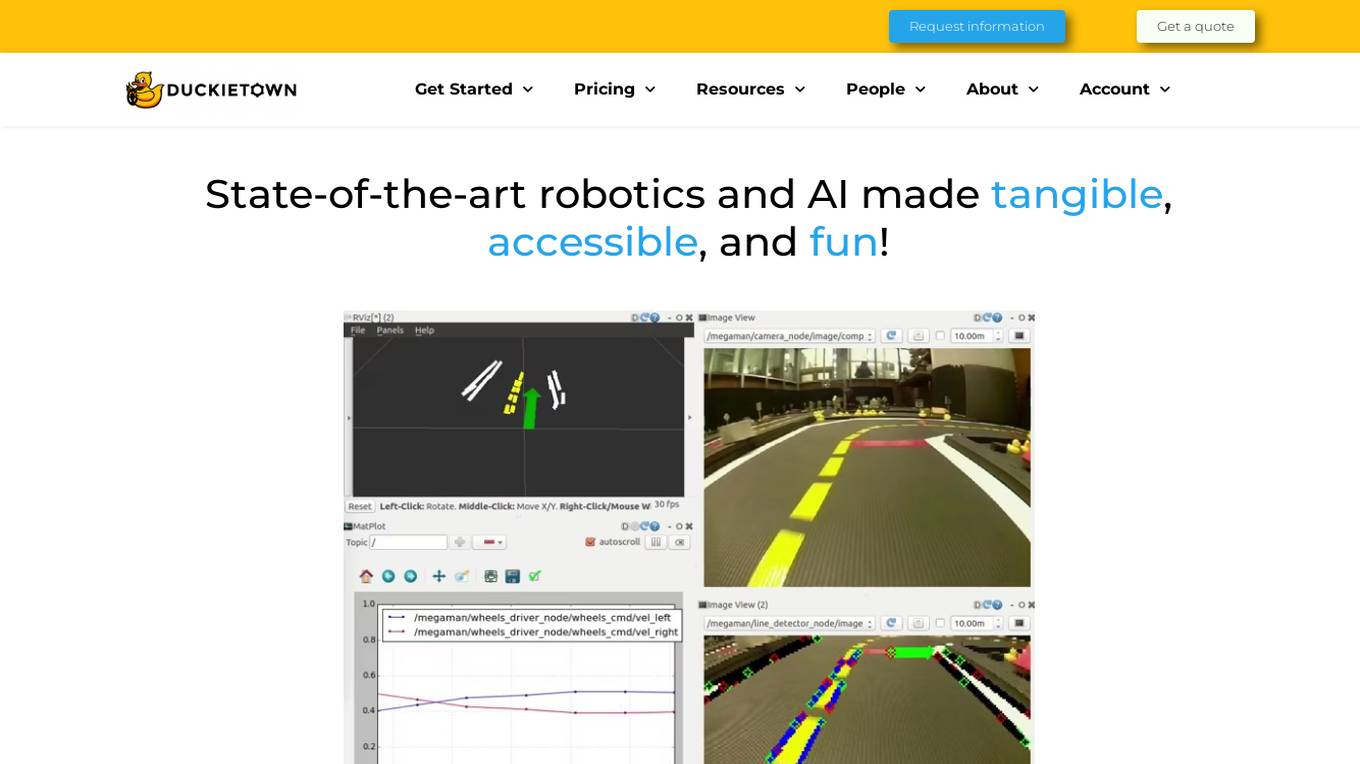

Duckietown

Duckietown is a platform for delivering cutting-edge robotics and AI learning experiences. It offers teaching resources to instructors, hands-on activities to learners, an accessible research platform to researchers, and a state-of-the-art ecosystem for professional training. Duckietown's mission is to make robotics and AI education state-of-the-art, hands-on, and accessible to all.

CHAI AI

CHAI AI is a leading conversational AI platform that focuses on building AI solutions for quant traders. The platform has secured significant funding rounds to expand its computational capabilities and talent acquisition. CHAI AI offers a range of models and techniques, such as reinforcement learning with human feedback, model blending, and direct preference optimization, to enhance user engagement and retention. The platform aims to provide users with the ability to create their own ChatAIs and offers custom GPU orchestration for efficient inference. With a strong focus on user feedback and recognition, CHAI AI continues to innovate and improve its AI models to meet the demands of a growing user base.

Qubinets

Qubinets is a cloud data environment platform that provides building blocks for big data, AI, web, and mobile environments. It is an open-source, no-lock-in, secure, and private platform that can be used on any cloud, including AWS, Digital Ocean, Google Cloud, and Microsoft Azure. Qubinets makes it easy to plan, build, and run data environments, and it saves time and money by reducing the grunt work of setup and provisioning.

AI Superior

AI Superior is a German-based AI services company focusing on end-to-end AI-based application development and AI consulting. It designs and builds web and mobile apps as well as custom software products that rely on complex machine learning and AI models and algorithms. Its team consists of Ph.D.-level data scientists and software engineers.

ibl.ai

ibl.ai is a generative AI platform that focuses on education, providing cutting-edge solutions for institutions to create AI mentors, tutoring apps, and content creation tools. The platform empowers educators by giving them full control over their code, data, and models. With advanced features and support for both web and native mobile platforms, ibl.ai seamlessly integrates with existing infrastructure, making it easy to deploy across organizations. The platform is designed to enhance learning experiences, foster critical thinking, and engage students deeply in educational content.

Lobe

Lobe is a free, easy-to-use tool for Mac and PC that helps you train machine learning models and ship them to any platform you choose. It provides a user-friendly interface for training models without requiring extensive coding knowledge. Lobe supports tasks such as creating image-based datasets, working with Python toolsets, and deploying models on different platforms.

Passarel

Passarel is an AI tool designed to simplify teammate onboarding by developing bespoke GPT-like models for employee interaction. It centralizes knowledge bases into a custom model, allowing new teammates to access information efficiently. Passarel leverages various integrations to tailor language models to team needs, handling contradictions and providing accurate information. The tool works by training models on chosen knowledge bases, learning from data and configurations provided, and deploying the model for team use.

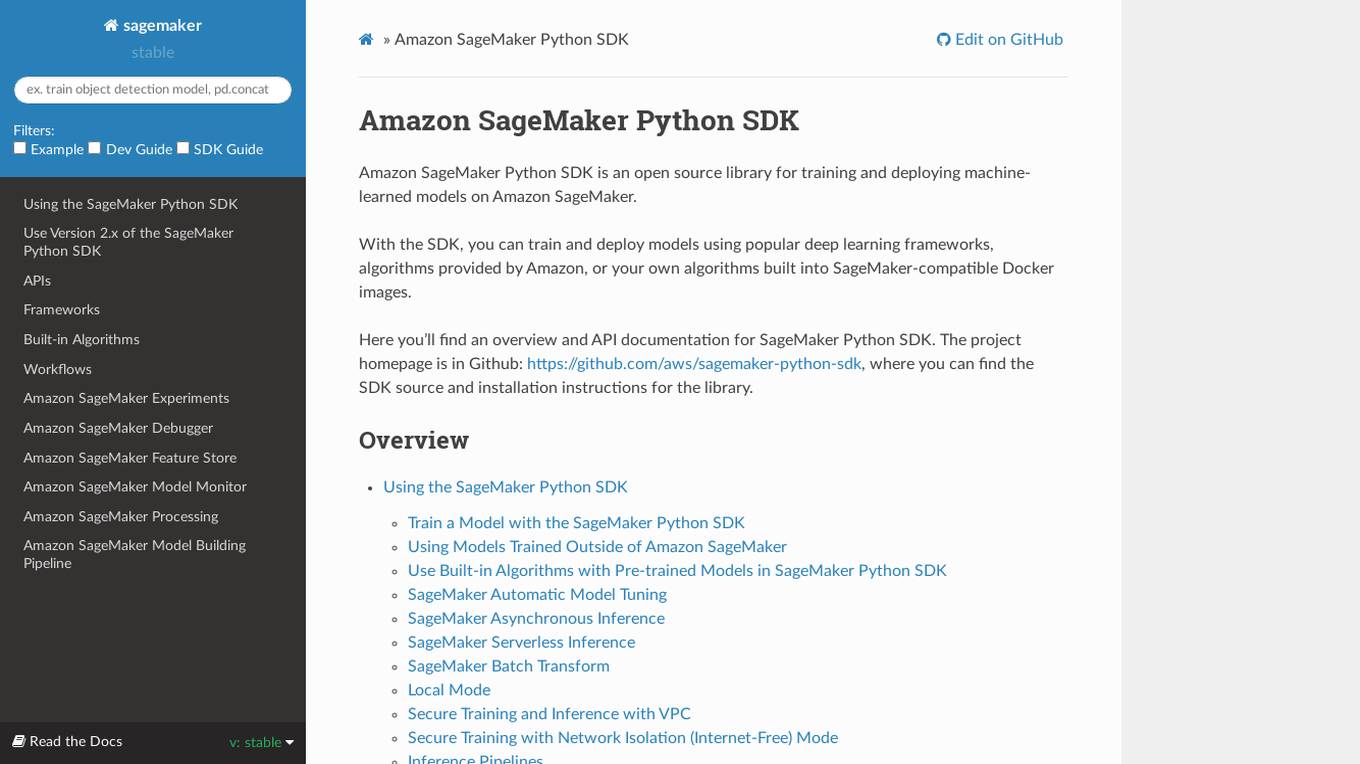

Amazon SageMaker Python SDK

Amazon SageMaker Python SDK is an open source library for training and deploying machine-learned models on Amazon SageMaker. With the SDK, you can train and deploy models using popular deep learning frameworks, algorithms provided by Amazon, or your own algorithms built into SageMaker-compatible Docker images.

Mirage

Mirage is an AI platform that builds custom LLMs to accelerate productivity. It is backed by Sequoia and lets users create custom AI models, train them on their own data, and deploy them to the cloud or on-premises.

Roboflow

Roboflow is an AI tool designed for computer vision tasks, offering a platform that allows users to annotate, train, deploy, and perform inference on models. It provides integrations, ecosystem support, and features like notebooks, autodistillation, and supervision. Roboflow caters to various industries such as aerospace, agriculture, healthcare, finance, and more, with a focus on simplifying the development and deployment of computer vision models.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

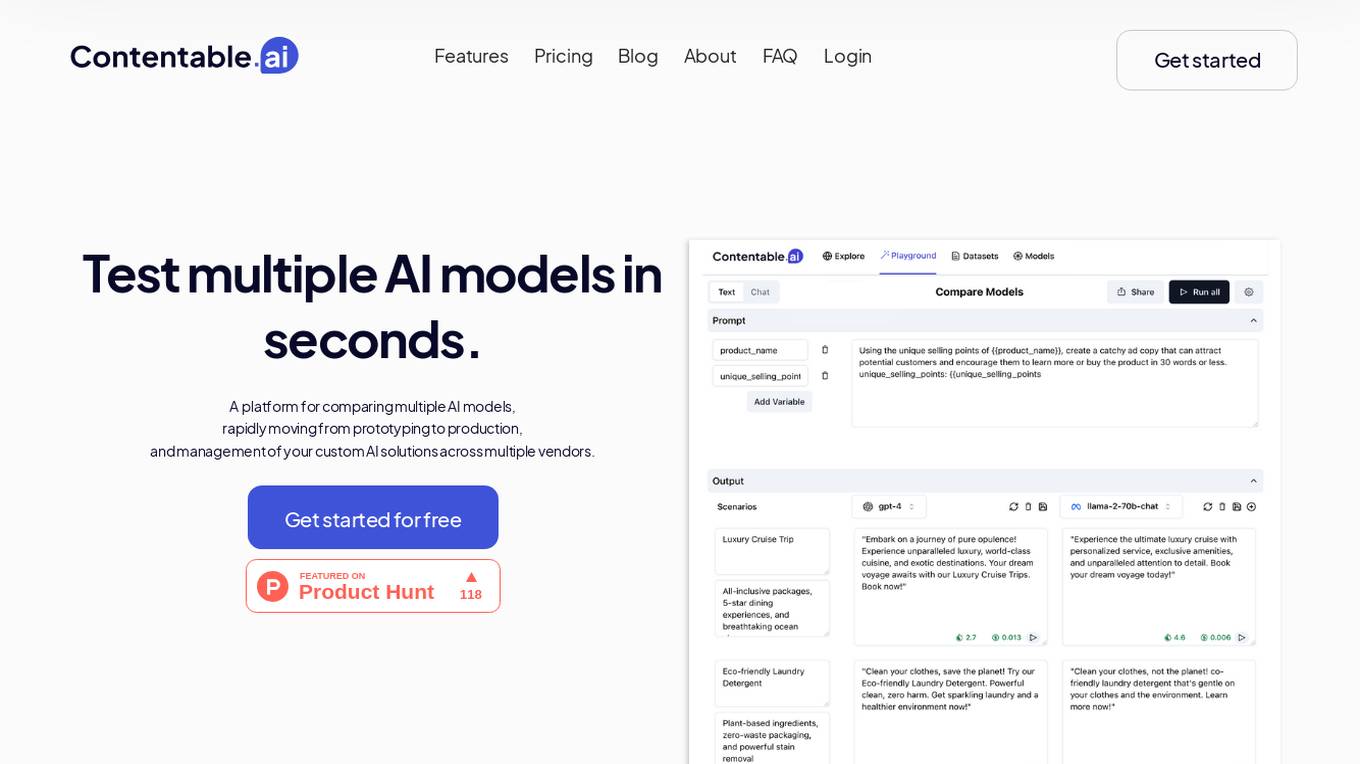

Contentable.ai

Contentable.ai is a platform for comparing multiple AI models, rapidly moving from prototyping to production, and management of your custom AI solutions across multiple vendors. It allows users to test multiple AI models in seconds, compare models side-by-side across top AI providers, collaborate on AI models with their team seamlessly, design complex AI workflows without coding, and pay as they go.

Groq

Groq is a fast AI inference provider that offers the GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It serves openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq has gained recognition in the AI chip industry and has received significant funding and a substantial valuation, positioning itself as a challenger to established players like Nvidia.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, AI chat app, code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With tools like Llama 3, Claude 3, Mistral Large, and AWS Titan, OmniAI excels in tasks such as natural language understanding, generation, safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

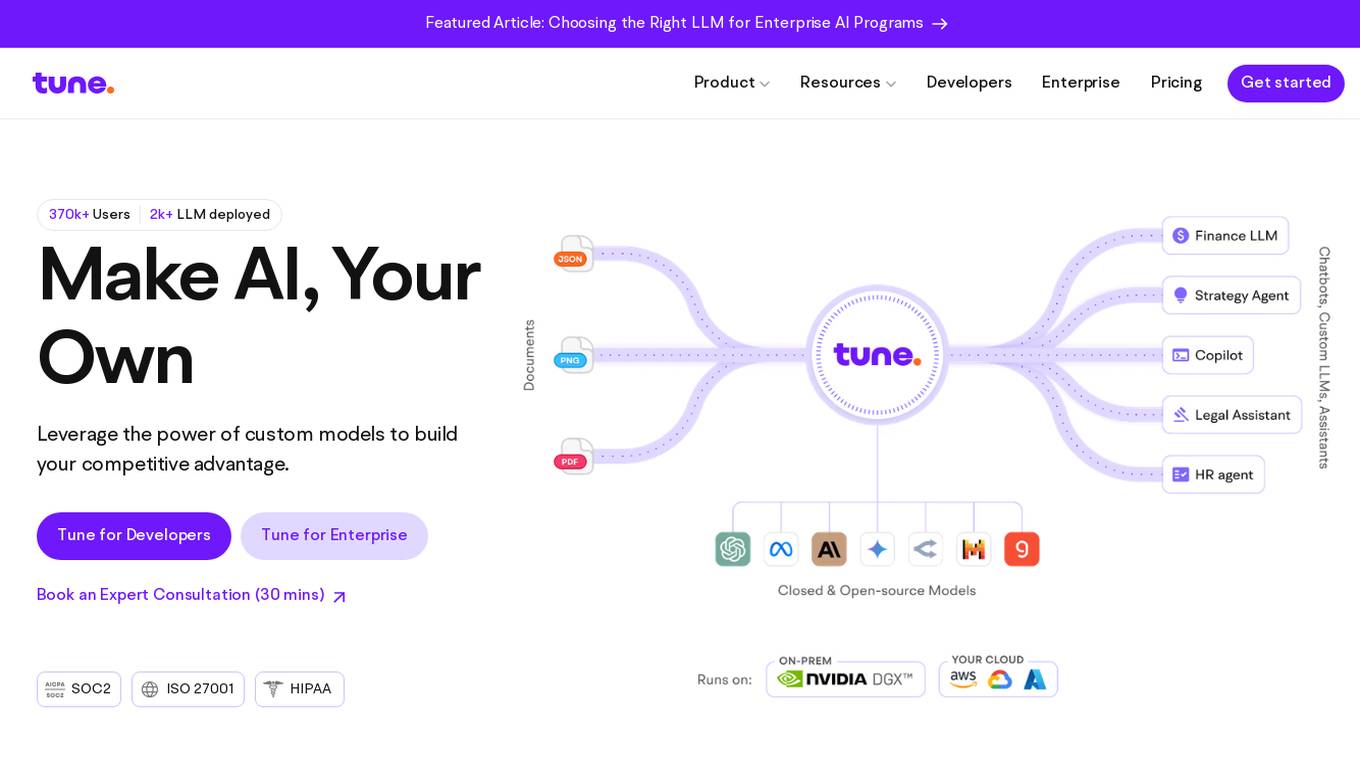

Tune AI

Tune AI is an enterprise Gen AI stack that offers custom models to build competitive advantage. It provides a range of features such as accelerating coding, content creation, indexing patent documents, data audit, automatic speech recognition, and more. The application leverages generative AI to help users solve real-world problems and create custom models on top of industry-leading open source models. With enterprise-grade security and flexible infrastructure, Tune AI caters to developers and enterprises looking to harness the power of AI.

1 - Open Source AI Tools

AutoGPTQ

AutoGPTQ is an easy-to-use LLM quantization package with user-friendly APIs, based on the GPTQ algorithm (weight-only quantization). It provides a simple and efficient way to quantize large language models (LLMs), reducing their size and computational cost while largely preserving their performance. AutoGPTQ supports a wide range of models, including GPT-2, GPT-J, OPT, and BLOOM. It also supports various evaluation tasks, such as language modeling, sequence classification, and text summarization. With AutoGPTQ, users can quantize their LLMs and deploy them on resource-constrained devices, such as mobile phones and embedded systems.
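To make "weight-only quantization" concrete, here is a minimal sketch of plain round-to-nearest 4-bit quantization of a group of weights. It is not the GPTQ algorithm (which additionally compensates for quantization error using second-order information) and it is not AutoGPTQ's API; the function names `quantize_group` and `dequantize_group` and the sample weights are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple


def quantize_group(weights: List[float], bits: int = 4) -> Tuple[List[int], float, int]:
    """Asymmetric round-to-nearest quantization of one group of weights.

    Illustrative only: real GPTQ also adjusts remaining weights to
    compensate for the error introduced at each step.
    Returns (codes, scale, zero_point) with codes in [0, 2**bits - 1].
    """
    qmax = (1 << bits) - 1
    # Extend the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Guard against an all-zero group, where the range would be zero.
    scale = (w_max - w_min) / qmax if w_max > w_min else 1.0
    zero_point = round(-w_min / scale)
    codes = [min(qmax, max(0, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def dequantize_group(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Map integer codes back to approximate floating-point weights."""
    return [(c - zero_point) * scale for c in codes]


# Hypothetical sample weights for one quantization group.
weights = [-0.8, -0.1, 0.0, 0.35, 0.7, 0.2, -0.45, 0.05]
codes, scale, zero_point = quantize_group(weights, bits=4)
restored = dequantize_group(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("codes:", codes)
print("scale:", scale, "zero point:", zero_point)
print("max reconstruction error:", max_error)
```

Each weight is stored as a 4-bit code instead of a 16- or 32-bit float, plus one scale and zero point per group, which is where the size reduction comes from. The reconstruction error per weight is bounded by about half the scale, so smaller groups (with tighter ranges) trade extra metadata for accuracy.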

20 - OpenAI GPTs

Apple CoreML Complete Code Expert

A detailed expert trained on all 3,018 pages of Apple CoreML, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

Tech Tutor

A tech guide for software engineers, focusing on the latest tools and foundational knowledge.

Data Engineer Consultant

Guides in data engineering tasks with a focus on practical solutions.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

TensorFlow Oracle

I'm an expert in TensorFlow, providing detailed, accurate guidance for all skill levels.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.