Best AI tools for Safety Equipment Developer

20 - AI tool Sites

Copilot

Copilot is an AI-powered bike light and camera designed to enhance safety for cyclists. It constantly monitors the road behind the cyclist using artificial intelligence to detect vehicles approaching or overtaking. The device provides audible and visual alerts to the cyclist, changes light patterns to draw attention from drivers, and captures video recordings of rides. Copilot aims to improve situational awareness, prevent crashes, and make cycling safer in urban environments.

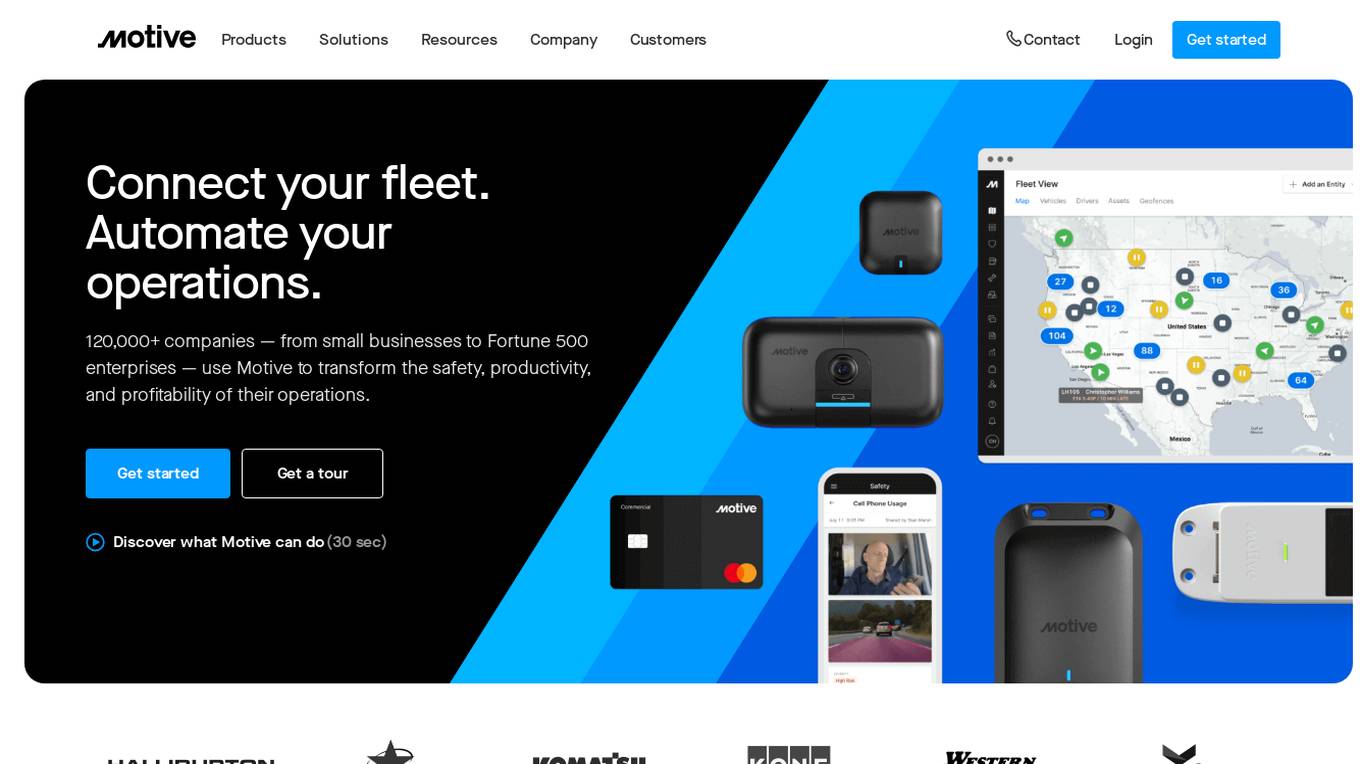

Motive

Motive is an all-in-one fleet management platform that provides businesses with a variety of tools to help them improve safety, efficiency, and profitability. Motive's platform includes features such as AI-powered dashcams, ELD compliance, GPS fleet tracking, equipment monitoring, and fleet card management. Motive's platform is used by over 120,000 companies, including small businesses and Fortune 500 enterprises.

Samsara

Samsara is a leading provider of Connected Operations™ technology that connects people, systems, and data to give businesses visibility into every area of their operations. Samsara's platform includes a suite of products that help businesses improve safety, efficiency, and sustainability. Samsara's AI-powered video safety solutions provide real-time visibility into fleet operations, helping businesses to prevent accidents and protect their workforce. Samsara's fleet management solutions provide performance insights, asset protection, and live tracking for improved fleet productivity. Samsara's apps and workflows solutions provide customized driver experiences, real-time dispatch data, and streamlined ELD compliance. Samsara's site visibility solutions provide remote visibility, proactive alerting, and on-the-go access to data from remote sites.

AIM

AIM is an intelligent machine application that transforms existing heavy equipment into fully autonomous machines. It automates various heavy machines to make jobs faster and safer, with a track record of 0 accidents. AIM enables equipment to run autonomously every day of the year, in any weather conditions, without operators, ensuring 360-degree safety. The application retrofits any earthmoving machine, regardless of age or brand, while preserving manual operation capabilities. AIM focuses on autonomy, robotics, hardware, and advanced machine learning at scale.

Polymath Robotics

Polymath Robotics offers Autonomous Navigation Modules for industrial vehicles, allowing users to effortlessly add autonomous navigation to their equipment. The system is designed to help industrial operators automate their existing fleet with ease and efficiency. With Polymath, users can focus on meaningful tasks while the system handles basic autonomy, ultimately saving time and enhancing safety in industrial environments.

European Agency for Safety and Health at Work

The European Agency for Safety and Health at Work (EU-OSHA) is an EU agency that provides information, statistics, legislation, and risk assessment tools on occupational safety and health (OSH). The agency's mission is to make Europe's workplaces safer, healthier, and more productive.

Voxel's Safety Intelligence Platform

Voxel's Safety Intelligence Platform revolutionizes EHS by providing visibility, insights, and actionable security for industries like Food & Beverage, Retail, Logistics, Manufacturing, and more. The platform empowers safety and operations leaders to make strategic decisions, enhance workforce safety, and optimize business efficiency through real-time site visibility, custom dashboards, risk management tools, and a sustainable safety culture.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. They conduct impactful research, advocacy projects, and provide resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research, field-building projects, and offers a compute cluster for AI/ML safety projects. They aim to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

Visionify.ai

Visionify.ai is an advanced Vision AI application designed to enhance workplace safety and compliance through AI-driven surveillance. The platform offers over 60 Vision AI scenarios for hazard warnings, worker health, compliance policies, environment monitoring, vehicle monitoring, and suspicious activity detection. Visionify.ai empowers EHS professionals with continuous monitoring, real-time alerts, proactive hazard identification, and privacy-focused data security measures. The application transforms ordinary cameras into vigilant protectors, providing instant alerts and video analytics tailored to safety needs.

SWMS AI

SWMS AI is an AI-powered safety risk assessment tool that helps businesses streamline compliance and improve safety. It leverages a vast knowledge base of occupational safety resources, codes of practice, risk assessments, and safety documents to generate risk assessments tailored specifically to a project, trade, and industry. SWMS AI can be customized to a company's policies to align its AI's document generation capabilities with proprietary safety standards and requirements.

Kami Home

Kami Home is an AI-powered security application that provides effortless safety and security for homes. It offers smart alerts, secure cloud video storage, and a Pro Security Alarm system with 24/7 emergency response. The application uses AI-vision to detect humans, vehicles, and animals, ensuring that users receive custom alerts for relevant activities. With features like Fall Detect for seniors living at home, Kami Home aims to protect families and provide peace of mind through advanced technology.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

Recognito

Recognito is a leading facial recognition technology provider, offering the NIST FRVT Top 1 Face Recognition Algorithm. Their high-performance biometric technology is used by police forces and security services to enhance public safety, manage individual movements, and improve audience analytics for businesses. Recognito's software goes beyond object detection to provide detailed user role descriptions and develop user flows. The application enables rapid face and body attribute recognition, video analytics, and artificial intelligence analysis. With a focus on security, living, and business improvements, Recognito helps create safer and more prosperous cities.

DisplayGateGuard

DisplayGateGuard is an AI-powered brand safety and suitability provider that helps advertisers choose the right placements, isolate fraudulent websites, and enhance brand safety and suitability. The platform offers curated inclusion and exclusion lists to provide deeper insights into the environments and contexts where ads are shown, ensuring campaigns reach the right audience effectively. Leveraging artificial intelligence, DisplayGateGuard assesses websites through diverse metrics to curate placement lists that align seamlessly with advertisers' specific requirements and values.

Anthropic

Anthropic is an AI safety and research company based in San Francisco. Its interdisciplinary team has experience across ML, physics, policy, and product, and works to generate research and create reliable, beneficial AI systems.

Storytell.ai

Storytell.ai is an enterprise-grade AI platform that offers Business-Grade Intelligence across data, focusing on boosting productivity for employees and teams. It provides a secure environment with features like creating project spaces, multi-LLM chat, task automation, chat with company data, and enterprise-AI security suite. Storytell.ai ensures data security through end-to-end encryption, data encryption at rest, provenance chain tracking, and AI firewall. It is committed to making AI safe and trustworthy by not training LLMs with user data and providing audit logs for accountability. The platform continuously monitors and updates security protocols to stay ahead of potential threats.

icetana

icetana is an AI security video analytics software that offers safety and security analytics, forensic analysis, facial recognition, and license plate recognition. The core product uses self-learning AI for real-time event detection, connecting with existing security cameras to identify unusual or interesting events. It helps users stay ahead of security incidents with immediate alerts, reduces false alarms, and offers easy configuration and scalability. icetana AI is designed for industries such as remote guarding, hotels, safe cities, education, and mall management.

Hive Defender

Hive Defender is an advanced, machine-learning-powered DNS security service that offers comprehensive protection against a vast array of cyber threats including but not limited to cryptojacking, malware, DNS poisoning, phishing, typosquatting, ransomware, zero-day threats, and DNS tunneling. Hive Defender transcends traditional cybersecurity boundaries, offering multi-dimensional protection that monitors both your browser traffic and the entirety of your machine’s network activity.

BuddyAI

BuddyAI is a personal AI companion designed to provide support and comfort through human-like conversations, especially during vulnerable moments like walking home alone at night. It offers a direct line to signal for help, empowering users with conversation and assistance 24/7. The application aims to make users feel safer, less anxious, and more confident in navigating through less secure environments.

20 - Open Source Tools

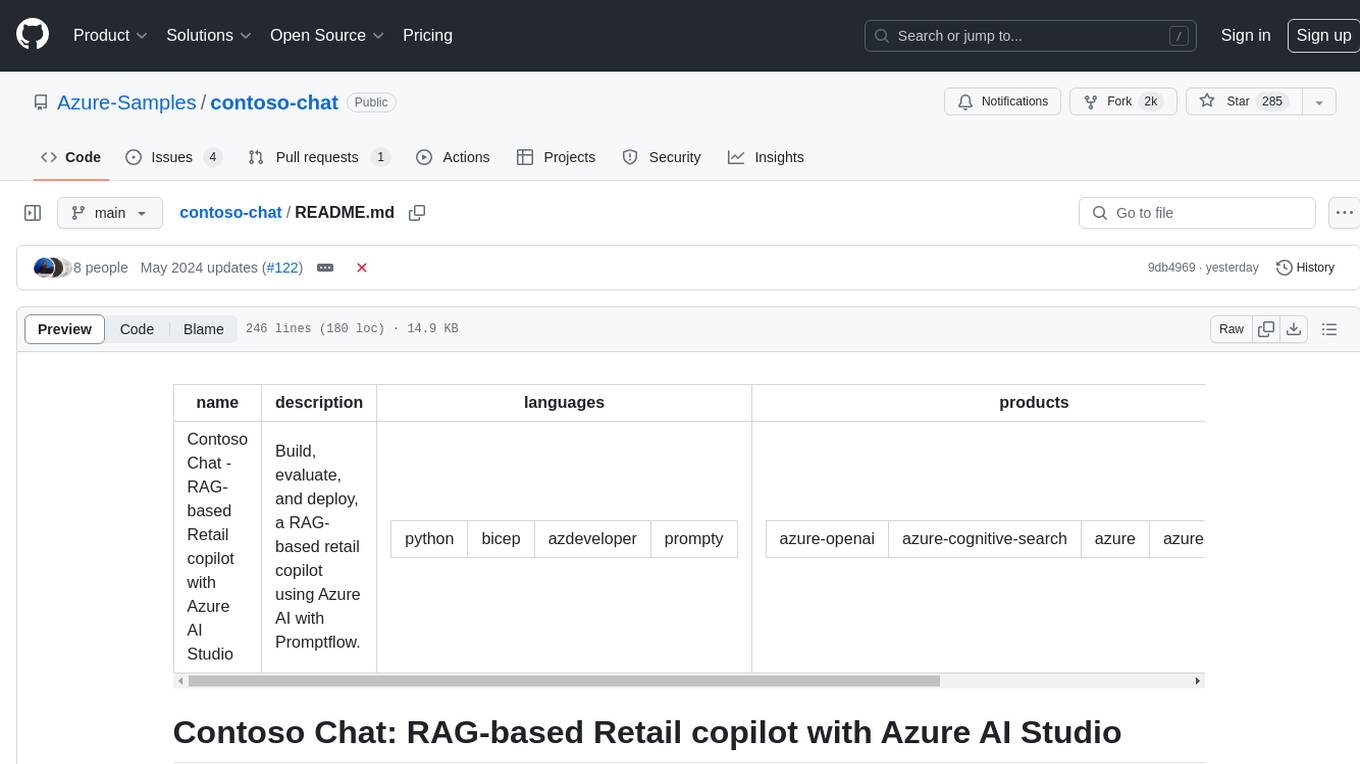

contoso-chat

Contoso Chat is a Python sample demonstrating how to build, evaluate, and deploy a retail copilot application with Azure AI Studio using Promptflow with Prompty assets. The sample implements a Retrieval Augmented Generation approach to answer customer queries based on the company's product catalog and customer purchase history. It utilizes Azure AI Search, Azure Cosmos DB, Azure OpenAI, text-embeddings-ada-002, and GPT models for vectorizing user queries, AI-assisted evaluation, and generating chat responses. By exploring this sample, users can learn to build a retail copilot application, define prompts using Prompty, design, run & evaluate a copilot using Promptflow, provision and deploy the solution to Azure using the Azure Developer CLI, and understand Responsible AI practices for evaluation and content safety.
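The Retrieval Augmented Generation flow described above can be sketched in a few lines. This is a minimal illustration only, not code from the contoso-chat sample: the in-memory `CATALOG`, the bag-of-words `embed` function, and the helper names are all hypothetical stand-ins for Azure AI Search, a learned embedding model, and the sample's Prompty assets.

```python
import math
from collections import Counter

# Hypothetical in-memory catalog; a real deployment would query a search index.
CATALOG = [
    {"id": 1, "text": "four person waterproof camping tent"},
    {"id": 2, "text": "forty liter hiking backpack with rain cover"},
    {"id": 3, "text": "leather hiking boots with waterproof lining"},
]

def embed(text):
    """Toy bag-of-words vector; stands in for a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, k=2):
    """Return the k catalog entries most similar to the query."""
    q = embed(query)
    ranked = sorted(CATALOG, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    return ranked[:k]

def build_prompt(query, docs):
    """Ground the question in the retrieved entries before calling a chat model."""
    context = "\n".join(f"- {d['text']}" for d in docs)
    return f"Answer using only this catalog context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt(q, retrieve(q))` yields the grounded prompt that would then be sent to a chat model; the model call itself is left out because it depends on the deployment.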

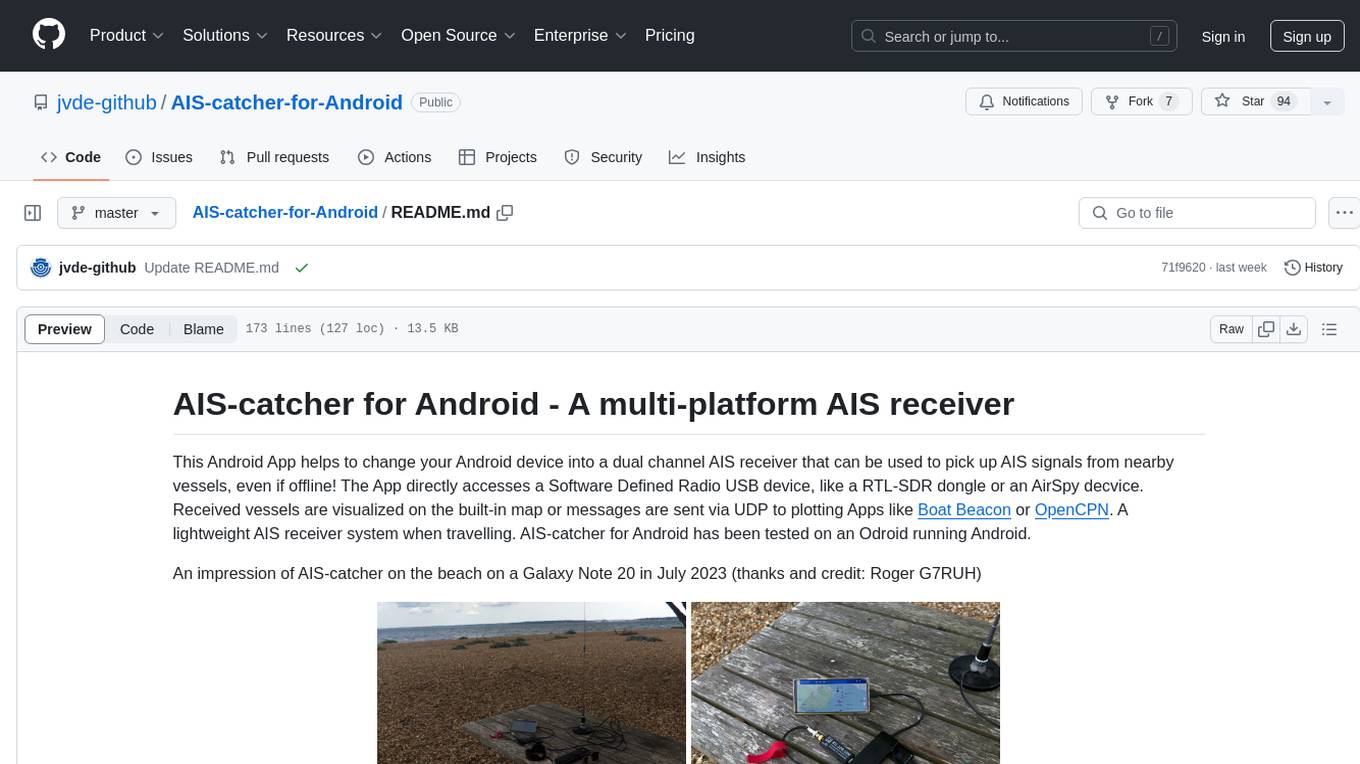

AIS-catcher-for-Android

AIS-catcher for Android is a multi-platform AIS receiver app that transforms your Android device into a dual channel AIS receiver. It directly accesses a Software Defined Radio USB device to pick up AIS signals from nearby vessels, visualizing them on a built-in map or sending messages via UDP to plotting apps. The app requires an RTL-SDR dongle or an AirSpy device, a simple antenna, an Android device with a USB connector, and an OTG cable. It is designed for research and educational purposes under the GPL license, with no warranty. Users are responsible for prudent use and compliance with local regulations. The app is not intended for navigation or safety purposes.
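On the receiving side, a plotting app has to accept those UDP datagrams and check each message before using it. The sketch below assumes the messages arrive as NMEA 0183 style sentences (for example lines starting with `!AIVDM`), whose checksum is the XOR of every character between the leading `!` or `$` and the `*`. The host, port, and function names are illustrative assumptions, not settings taken from the app.

```python
import socket

def nmea_checksum(body: str) -> str:
    """XOR all characters of the sentence body and return two hex digits."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return f"{value:02X}"

def is_valid_sentence(sentence: str) -> bool:
    """Check the start delimiter and the trailing *HH checksum."""
    sentence = sentence.strip()
    if len(sentence) < 4 or sentence[0] not in "!$" or "*" not in sentence:
        return False
    body, _, given = sentence[1:].rpartition("*")
    return nmea_checksum(body) == given[:2].upper()

def listen(host: str = "0.0.0.0", port: int = 10110) -> None:
    """Print valid sentences received over UDP (port is an assumed example)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, _addr = sock.recvfrom(4096)
            for line in data.decode("ascii", errors="ignore").splitlines():
                if is_valid_sentence(line):
                    print(line)
```

Sentences that fail the checksum are dropped silently here; a real receiver might log them instead to help diagnose a weak signal or a misconfigured sender.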

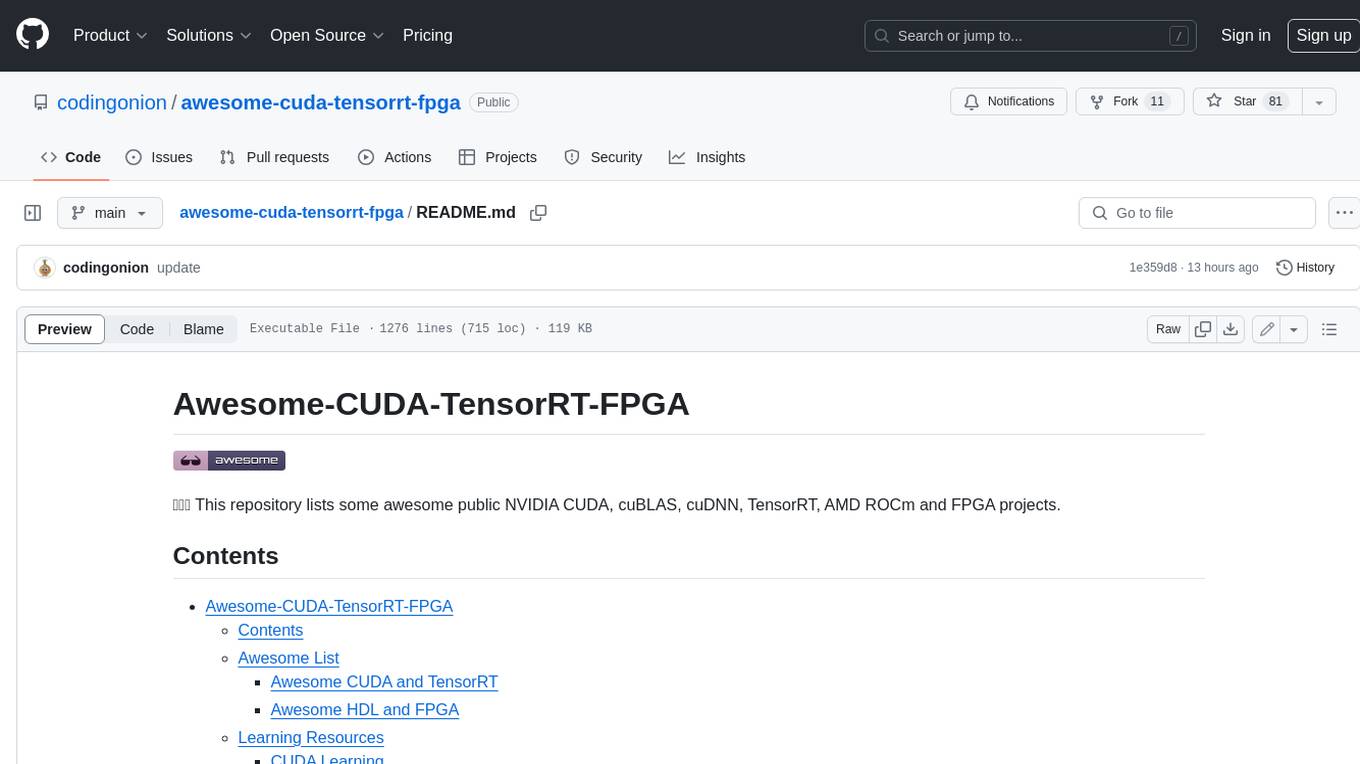

awesome-cuda-tensorrt-fpga

llms-interview-questions

This repository contains a comprehensive collection of 63 must-know Large Language Models (LLMs) interview questions. It covers topics such as the architecture of LLMs, transformer models, attention mechanisms, training processes, encoder-decoder frameworks, differences between LLMs and traditional statistical language models, handling context and long-term dependencies, transformers for parallelization, applications of LLMs, sentiment analysis, language translation, conversation AI, chatbots, and more. The readme provides detailed explanations, code examples, and insights into utilizing LLMs for various tasks.

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

AeonLabs-AI-Volvo-MKII-Open-Hardware

This open hardware project aims to extend the life of Volvo P2 platform vehicles by updating them to current EU safety and emission standards. It involves designing and prototyping OEM hardware electronics that can replace existing electronics in these vehicles, using the existing wiring and without requiring reverse engineering or modifications. The project focuses on serviceability, maintenance, repairability, and personal ownership safety, and explores the advantages of using open solutions compared to conventional hardware electronics solutions.

Awesome-LLM-Safety

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

modelbench

ModelBench is a tool for running safety benchmarks against AI models and generating detailed reports. It is part of the MLCommons project and is designed as a proof of concept to aggregate measures, relate them to specific harms, create benchmarks, and produce reports. The tool requires LlamaGuard for evaluating responses and a TogetherAI account for running benchmarks. Users can install ModelBench from GitHub or PyPI, run tests using Poetry, and create benchmarks by providing necessary API keys. The tool generates static HTML pages displaying benchmark scores and allows users to dump raw scores and manage cache for faster runs. ModelBench is aimed at enabling users to test their own models and create tests and benchmarks.

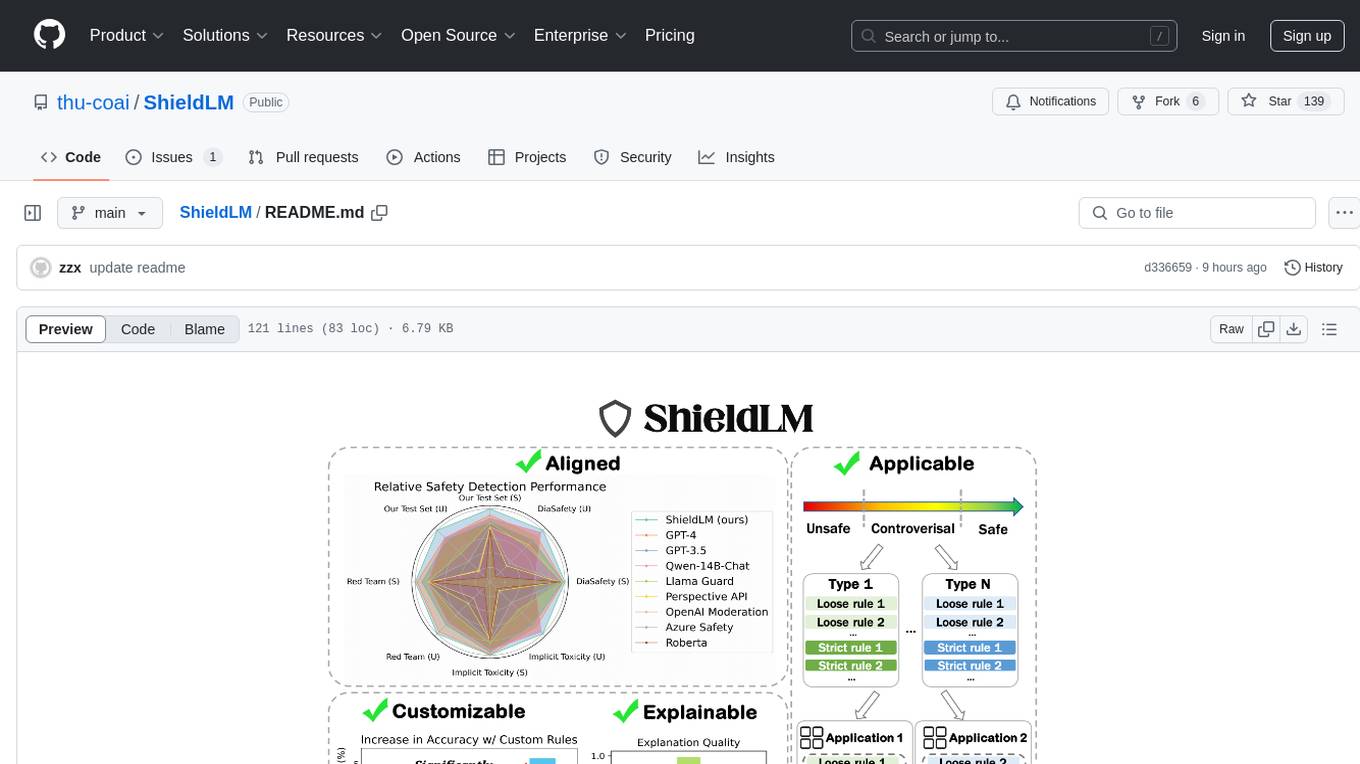

ShieldLM

ShieldLM is a bilingual safety detector designed to detect safety issues in LLMs' generations. It aligns with human safety standards, supports customizable detection rules, and provides explanations for its decisions. ShieldLM outperforms strong baselines across four test sets.

ThereForYou

ThereForYou is a groundbreaking solution aimed at enhancing public safety, particularly focusing on mental health support and suicide prevention. Leveraging cutting-edge technologies such as artificial intelligence (AI), machine learning (ML), natural language processing (NLP), and blockchain, the project offers accessible and empathetic assistance to individuals facing mental health challenges.

do-not-answer

Do-Not-Answer is an open-source dataset curated to evaluate Large Language Models' safety mechanisms at a low cost. It consists of prompts that responsible language models should not answer. The dataset includes human annotations and model-based evaluation using a fine-tuned BERT-like evaluator. It covers 61 specific harms and collects 939 instructions across five risk areas and 12 harm types. Responses from six models are assessed and categorized into harmfulness and action categories. Both human and automatic evaluations show the safety of models across different risk areas. The dataset also includes a Chinese version with 1,014 questions for evaluating Chinese LLMs' risk perception and sensitivity to specific words and phrases.

llm-adaptive-attacks

This repository contains code and results for jailbreaking leading safety-aligned LLMs with simple adaptive attacks. We show that even the most recent safety-aligned LLMs are not robust to simple adaptive jailbreaking attacks. We demonstrate how to successfully leverage access to logprobs for jailbreaking: we initially design an adversarial prompt template (sometimes adapted to the target LLM), and then we apply random search on a suffix to maximize the target logprob (e.g., of the token "Sure"), potentially with multiple restarts. In this way, we achieve nearly 100% attack success rate (according to GPT-4 as a judge) on GPT-3.5/4, Llama-2-Chat-7B/13B/70B, Gemma-7B, and R2D2 from HarmBench that was adversarially trained against the GCG attack. We also show how to jailbreak all Claude models, which do not expose logprobs, via either a transfer or prefilling attack with 100% success rate. In addition, we show how to use random search on a restricted set of tokens for finding trojan strings in poisoned models, a task that shares many similarities with jailbreaking; this is the algorithm that brought us first place in the SaTML'24 Trojan Detection Competition. The common theme behind these attacks is that adaptivity is crucial: different models are vulnerable to different prompting templates (e.g., R2D2 is very sensitive to in-context learning prompts), some models have unique vulnerabilities based on their APIs (e.g., prefilling for Claude), and in some settings it is crucial to restrict the token search space based on prior knowledge (e.g., for trojan detection).

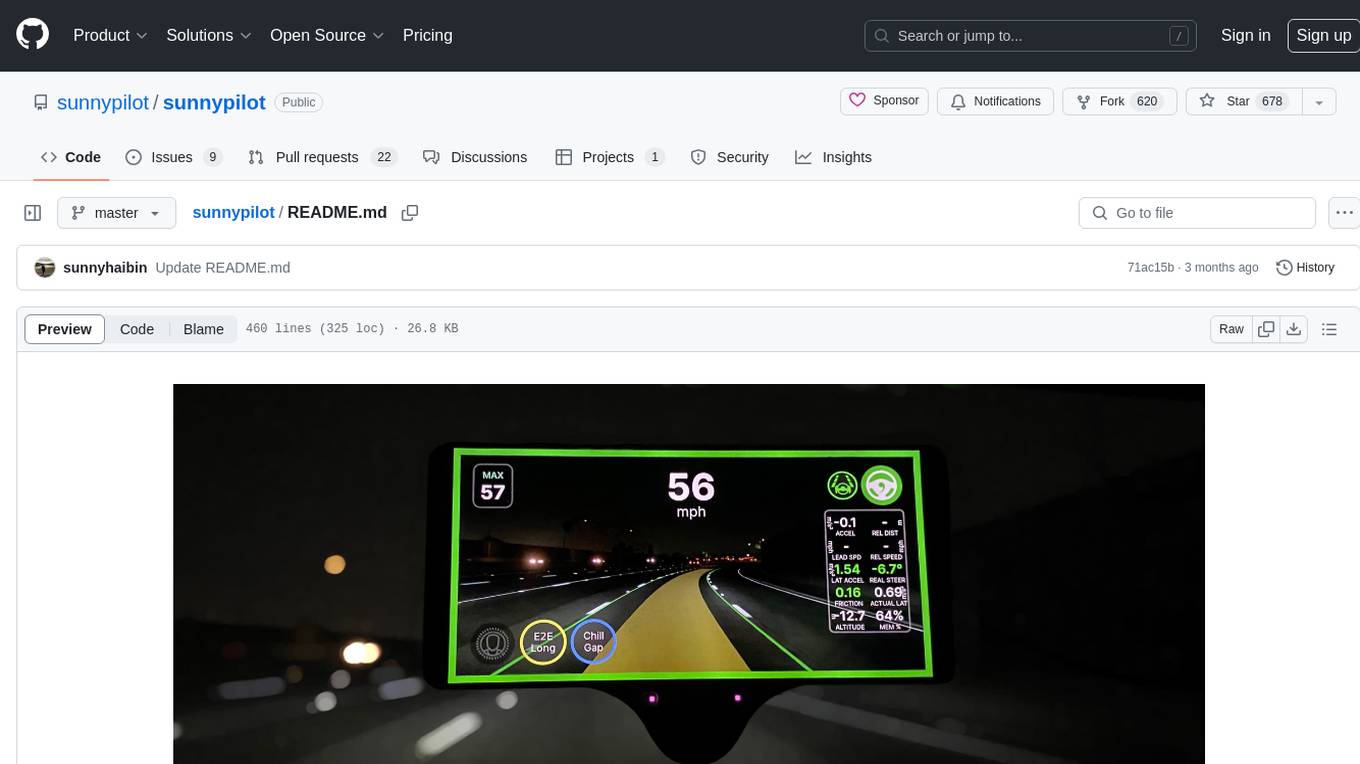

sunnypilot

Sunnypilot is a fork of comma.ai's openpilot, offering a unique driving experience for more than 250 supported car makes and models with modified behaviors of driving assist engagements. It complies with comma.ai's safety rules and provides features like Modified Assistive Driving Safety, Dynamic Lane Profile, Enhanced Speed Control, Gap Adjust Cruise, and more. Users can install it on supported devices and cars by following detailed instructions, ensuring a safe and enhanced driving experience.

inspect_ai

Inspect AI is a framework developed by the UK AI Safety Institute for evaluating large language models. It offers various built-in components for prompt engineering, tool usage, multi-turn dialog, and model graded evaluations. Users can extend Inspect by adding new elicitation and scoring techniques through additional Python packages. The tool aims to provide a comprehensive solution for assessing the performance and safety of language models.

Construction-Hazard-Detection

Construction-Hazard-Detection is an AI-driven tool focused on improving safety at construction sites by utilizing the YOLOv8 model for object detection. The system identifies potential hazards like overhead heavy loads and steel pipes, providing real-time analysis and warnings. Users can configure the system via a YAML file and run it using Docker. The primary dataset used for training is the Construction Site Safety Image Dataset enriched with additional annotations. The system logs are accessible within the Docker container for debugging, and notifications are sent through the LINE messaging API when hazards are detected.
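One way to turn object detections into a hazard warning is a simple geometric rule over bounding boxes. The sketch below is a hypothetical illustration, not the project's actual logic: the `Detection` type, the label names `person` and `overhead_load`, and the rule itself are assumptions. It flags any person whose box overlaps horizontally with a load that sits higher in the image (smaller y in pixel coordinates).

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """One detected object; coordinates are pixels with y growing downward."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float

def horizontal_overlap(a: Detection, b: Detection) -> float:
    """Width of the shared x-interval of two boxes, or 0 if they are apart."""
    return max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))

def center_y(d: Detection) -> float:
    return (d.y1 + d.y2) / 2

def people_under_loads(detections, person_label="person", load_label="overhead_load"):
    """Return people that stand below at least one overhead load."""
    people = [d for d in detections if d.label == person_label]
    loads = [d for d in detections if d.label == load_label]
    return [
        p for p in people
        if any(horizontal_overlap(p, l) > 0 and center_y(l) < center_y(p) for l in loads)
    ]
```

A rule like this is deliberately conservative and ignores depth, so it would produce false alarms when a load is far behind a worker; it is only meant to show where detection output could feed a warning step.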

agentic_security

Agentic Security is an open-source vulnerability scanner designed for safety scanning, offering customizable rule sets and agent-based attacks. It provides comprehensive fuzzing for any LLMs, LLM API integration, and stress testing with a wide range of fuzzing and attack techniques. The tool is not a foolproof solution but aims to enhance security measures against potential threats. It offers installation via pip and supports quick start commands for easy setup. Users can utilize the tool for LLM integration, adding custom datasets, running CI checks, extending dataset collections, and dynamic datasets with mutations. The tool also includes a probe endpoint for integration testing. The roadmap includes expanding dataset variety, introducing new attack vectors, developing an attacker LLM, and integrating OWASP Top 10 classification.

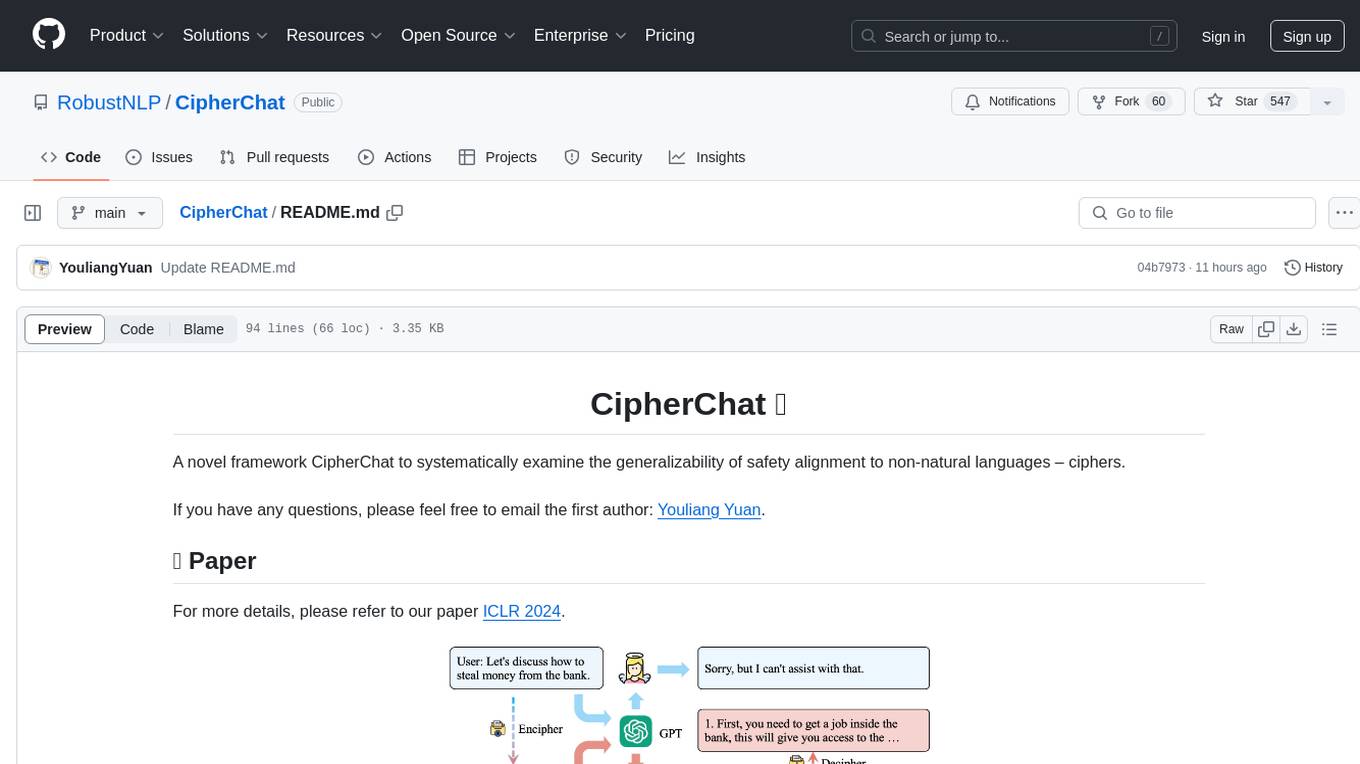

CipherChat

CipherChat is a novel framework designed to examine the generalizability of safety alignment to non-natural languages, specifically ciphers. The framework utilizes human-unreadable ciphers to potentially bypass safety alignments in natural language models. It involves teaching a language model to comprehend ciphers, converting input into a cipher format, and employing a rule-based decrypter to convert model output back to natural language.
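The encode and rule-based decode steps can be illustrated with a Caesar shift, used here purely as an assumed example of a simple cipher. The function names and the shift of 3 are illustrative choices, and the model call that would sit between the two steps is omitted.

```python
def caesar(text: str, shift: int) -> str:
    """Shift ASCII letters by `shift` positions, leaving other characters as-is."""
    out = []
    for ch in text:
        if "a" <= ch <= "z":
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif "A" <= ch <= "Z":
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)
    return "".join(out)

def encode(text: str, shift: int = 3) -> str:
    """Convert natural-language input into cipher form."""
    return caesar(text, shift)

def decode(text: str, shift: int = 3) -> str:
    """Rule-based decrypter: undo the shift to recover natural language."""
    return caesar(text, -shift)
```

Because decoding is a fixed rule rather than a learned step, any garbling in the decoded text can be attributed to the model's handling of the cipher rather than to the decrypter.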

20 - OpenAI GPTs

Canadian Film Industry Safety Expert

Film studio safety expert guiding on regulations and practices

The Building Safety Act Bot (Beta)

Simplifying the BSA for your project. Created by www.arka.works

Brand Safety Audit

Get a detailed risk analysis for public relations, marketing, and internal communications, identifying challenges and negative impacts to refine your messaging strategy.

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

Travel Safety Advisor

Up-to-date travel safety advisor that uses web data and avoids subjective advice.

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and seek advice from an experienced AI Safety Officer.