Center for AI Safety (CAIS)

Reducing Societal-scale Risks from AI

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Alternative AI tools for Center for AI Safety (CAIS)

Similar sites

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. It conducts research and advocacy projects and provides resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and it offers a compute cluster for AI/ML safety projects. It aims to ensure that AI is developed and used safely for the benefit of society, addressing the technology's inherent risks and advocating for safety standards.

The Institute for the Advancement of Legal and Ethical AI (ALEA)

The Institute for the Advancement of Legal and Ethical AI (ALEA) is a platform dedicated to supporting socially, economically, and environmentally sustainable futures through open research and education. They focus on developing legal and ethical frameworks to ensure that AI systems benefit society while minimizing harm to the economy and the environment. ALEA engages in activities such as open data collection, model training, technical and policy research, education, and community building to promote the responsible use of AI.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

Center for Human-Compatible Artificial Intelligence

The Center for Human-Compatible Artificial Intelligence (CHAI) is dedicated to building AI systems that benefit humanity. Its mission is to steer AI research toward developing systems that are provably beneficial. CHAI brings together researchers, faculty, staff, and students to advance AI alignment, including work on care-like relationships in machine caregiving. Its research topics include political neutrality in AI, offline reinforcement learning, and coordination with experts.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

JMIR AI

JMIR AI is a new peer-reviewed journal focused on research and applications for the health artificial intelligence (AI) community. It includes contemporary developments as well as historical examples, with an emphasis on sound methodological evaluations of AI techniques and authoritative analyses. It is intended to be the main source of reliable information for health informatics professionals to learn about how AI techniques can be applied and evaluated.

blog.biocomm.ai

blog.biocomm.ai is an AI safety blog that focuses on the existential threat posed by uncontrolled and uncontained AI technology. It curates and organizes information related to AI safety, including the risks and challenges associated with the proliferation of AI. The blog aims to educate and raise awareness about the importance of developing safe and regulated AI systems to ensure the survival of humanity.

Coalition for Health AI (CHAI)

The Coalition for Health AI (CHAI) is an organization that provides guidelines for the responsible use of AI in health. It develops best practices and frameworks for safe and equitable AI in healthcare. CHAI aims to address algorithmic bias and works with diverse stakeholders to guide the development, evaluation, and appropriate use of AI in healthcare.

SWMS AI

SWMS AI is an AI-powered safety risk assessment tool that helps businesses streamline compliance and improve safety. It leverages a vast knowledge base of occupational safety resources, codes of practice, risk assessments, and safety documents to generate risk assessments tailored specifically to a project, trade, and industry. SWMS AI can be customized to a company's policies to align its AI's document generation capabilities with proprietary safety standards and requirements.

Responsible AI Institute

The Responsible AI Institute is a global non-profit organization dedicated to equipping organizations and AI professionals with tools and knowledge to create, procure, and deploy AI systems that are safe and trustworthy. They offer independent assessments, conformity assessments, and certification programs to ensure that AI systems align with internal policies, regulations, laws, and best practices for responsible technology use. The institute also provides resources, news, and a community platform for members to collaborate and stay informed about responsible AI practices and regulations.

Transparency Coalition

The Transparency Coalition is a platform dedicated to advocating for legislation and transparency in the field of artificial intelligence. It aims to create AI safeguards for the greater good by focusing on training data, accountability, and ethical practices in AI development and deployment. The platform emphasizes the importance of regulating training data to prevent misuse and harm caused by AI systems. Through advocacy and education, the Transparency Coalition seeks to promote responsible AI innovation and protect personal privacy.

Health AI Partnership

Health AI Partnership (HAIP) is an initiative that helps healthcare professionals use AI effectively, safely, and equitably through community-informed, up-to-date standards. It offers resources, publications, events, and a practice network to advance the use of AI in healthcare and to support professionals in implementing AI solutions.

Montreal AI Ethics Institute

The Montreal AI Ethics Institute (MAIEI) is an international non-profit organization founded in 2018 and dedicated to democratizing AI ethics literacy. It equips citizens concerned about artificial intelligence and its impact on society to take action, publishing research summaries, columns, and material on AI applications in various fields.

Petrie-Flom Center at Harvard Law School

The Petrie-Flom Center at Harvard Law School is a leading center for the study of health law and policy. The Center's mission is to improve the health of the public through research, teaching, and advocacy. The Center's work focuses on a wide range of health law and policy issues, including access to care, the regulation of health care providers, and the ethical and legal implications of new health technologies.

Fordi

Fordi is an AI management tool that helps businesses avoid AI-related risks in real time. It provides a comprehensive view of all of a business's AI systems, allowing risks to be identified and mitigated before they cause damage. Fordi also provides continuous monitoring and alerting, so businesses are notified promptly when an AI system stops operating safely.

For similar jobs

Rationale

Rationale is a decision-making AI tool built on recent GPT models and in-context learning. It is designed to help users make informed decisions by generating insights and recommendations from the data they provide, with the aim of streamlining the decision-making process and improving efficiency.

CHAI AI

CHAI AI is a leading conversational AI platform that focuses on building AI solutions for quant traders. The platform has secured significant funding rounds to expand its computational capabilities and talent acquisition. CHAI AI offers a range of models and techniques, such as reinforcement learning with human feedback, model blending, and direct preference optimization, to enhance user engagement and retention. The platform aims to provide users with the ability to create their own ChatAIs and offers custom GPU orchestration for efficient inference. With a strong focus on user feedback and recognition, CHAI AI continues to innovate and improve its AI models to meet the demands of a growing user base.

Cambrian Copilot

Cambrian Copilot is an AI tool designed for researchers and engineers to stay up-to-date with the latest machine learning research. With the ability to search over 240,000 ML papers, the tool helps users discover new research, understand complex details, and automate literature reviews. It simplifies the process of keeping track of the rapid developments in the field of machine learning.

Nexus

Nexus is a business-led enterprise AI platform that lets business teams turn their workflows into autonomous agents within days. It is positioned as a secure, reliable, and flexible solution that, according to Nexus, lets enterprises deploy 100x faster without involving engineering teams. Nexus provides adaptive intelligence, dynamic planning, continuous learning, and technology-agnostic intelligent automation. It can be deployed anywhere, works with existing tools, and scales with a business without increasing headcount.

Mnemonic AI

Mnemonic AI is an end-to-end marketing intelligence platform that helps businesses unify their data, understand their customers, and automate their growth. It connects disparate data sources to build AI-powered customer insights and streamline marketing processes. The platform offers features such as connecting marketing and sales apps, generating AI insights, and automating growth through BI-grade reports. Mnemonic AI is trusted by forward-thinking marketing teams to transform marketing intelligence and drive confident decisions.

Functime

Functime is an AI tool specializing in time-series machine learning at scale. It offers a comprehensive set of features and functions to assist users in forecasting and analyzing time-series data efficiently. With its user-friendly interface and detailed documentation, Functime is designed to cater to both beginners and experienced users in the field of machine learning. The tool provides scoring, ranking, and plotting functions to evaluate forecasts, along with an AI copilot feature for in-depth analysis of trends, seasonality, and causal factors. Functime also offers an API reference for seamless integration with other applications.
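
The scoring-and-ranking step mentioned above can be illustrated with a small, library-free sketch. This is not functime's API: `mae`, `rmse`, and `rank_forecasts` are hypothetical helpers, and the sketch assumes each forecast is a plain list aligned point-by-point with the actual values.

```python
import math


def _check_lengths(actual: list[float], forecast: list[float]) -> None:
    if len(actual) != len(forecast) or not actual:
        raise ValueError("actual and forecast must be non-empty and the same length")


def mae(actual: list[float], forecast: list[float]) -> float:
    """Mean absolute error: the average size of each forecast miss."""
    _check_lengths(actual, forecast)
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual: list[float], forecast: list[float]) -> float:
    """Root mean squared error: like MAE, but penalizes large misses more."""
    _check_lengths(actual, forecast)
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def rank_forecasts(actual, forecasts, metric=mae):
    """Score each named forecast; return (name, score) pairs, lowest error first."""
    scores = {name: metric(actual, fc) for name, fc in forecasts.items()}
    return sorted(scores.items(), key=lambda item: item[1])


# Toy data, made up for illustration.
actual = [10.0, 12.0, 11.0, 13.0]
forecasts = {
    "naive": [10.0, 10.0, 12.0, 11.0],
    "trend": [10.5, 11.5, 11.5, 12.5],
}
print(rank_forecasts(actual, forecasts))  # [('trend', 0.5), ('naive', 1.25)]
```

Passing `metric=rmse` ranks by RMSE instead, which can reorder models when one of them makes occasional large errors.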

Promptmakr

Promptmakr is an AI-powered platform that facilitates the buying and selling of AI prompts. It serves as a marketplace where users can discover, purchase, and sell prompts to enhance their AI projects. With a user-friendly interface and robust features, Promptmakr streamlines the process of accessing high-quality prompts for various applications, from chatbots to image recognition systems. The platform ensures secure transactions and fosters a community of AI enthusiasts and professionals.

StockGPT

StockGPT is an AI-powered financial research assistant that provides knowledge of earnings releases, financial reports, and fundamental information for S&P 500 and Nasdaq companies. It offers features like AI search, customizable filters, up-to-date data, and industry research to help users analyze companies and markets more efficiently.

DiscuroAI

DiscuroAI is an all-in-one platform for developers to build, test, and consume complex AI workflows. Users define workflows in a user-friendly interface and execute them with a single API call. The platform integrates with GPT-3, DALL-E 2, and other OpenAI models, letting users chain prompts together and extract output as JSON via the API. DiscuroAI also supports building and testing self-transforming AI workflows and datasets and monitoring AI usage across workflows.
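
Prompt chaining, as described above, means feeding each step's output into the next prompt and parsing the final step's output as JSON. The sketch below shows only that idea; it is not DiscuroAI's API. `run_chain` and `fake_model` are hypothetical, and `fake_model` is a deterministic stub standing in for a real LLM call.

```python
import json
from typing import Callable

# Any function that maps prompt text to response text can act as the model.
Model = Callable[[str], str]


def run_chain(templates: list[str], model: Model, initial_input: str) -> dict:
    """Run prompt templates in order, feeding each response into the next.

    Each template contains the placeholder "{input}". str.replace is used
    instead of str.format so templates may contain literal JSON braces.
    The final response is expected to be a JSON object.
    """
    text = initial_input
    for template in templates:
        text = model(template.replace("{input}", text))
    return json.loads(text)


def fake_model(prompt: str) -> str:
    """Deterministic stand-in for an LLM (hypothetical, for illustration)."""
    if prompt.startswith("Summarize:"):
        return prompt.removeprefix("Summarize:").strip().upper()
    if prompt.startswith("To JSON:"):
        return json.dumps({"summary": prompt.removeprefix("To JSON:").strip()})
    return prompt


result = run_chain(["Summarize: {input}", "To JSON: {input}"], fake_model, "hello world")
print(result)  # {'summary': 'HELLO WORLD'}
```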

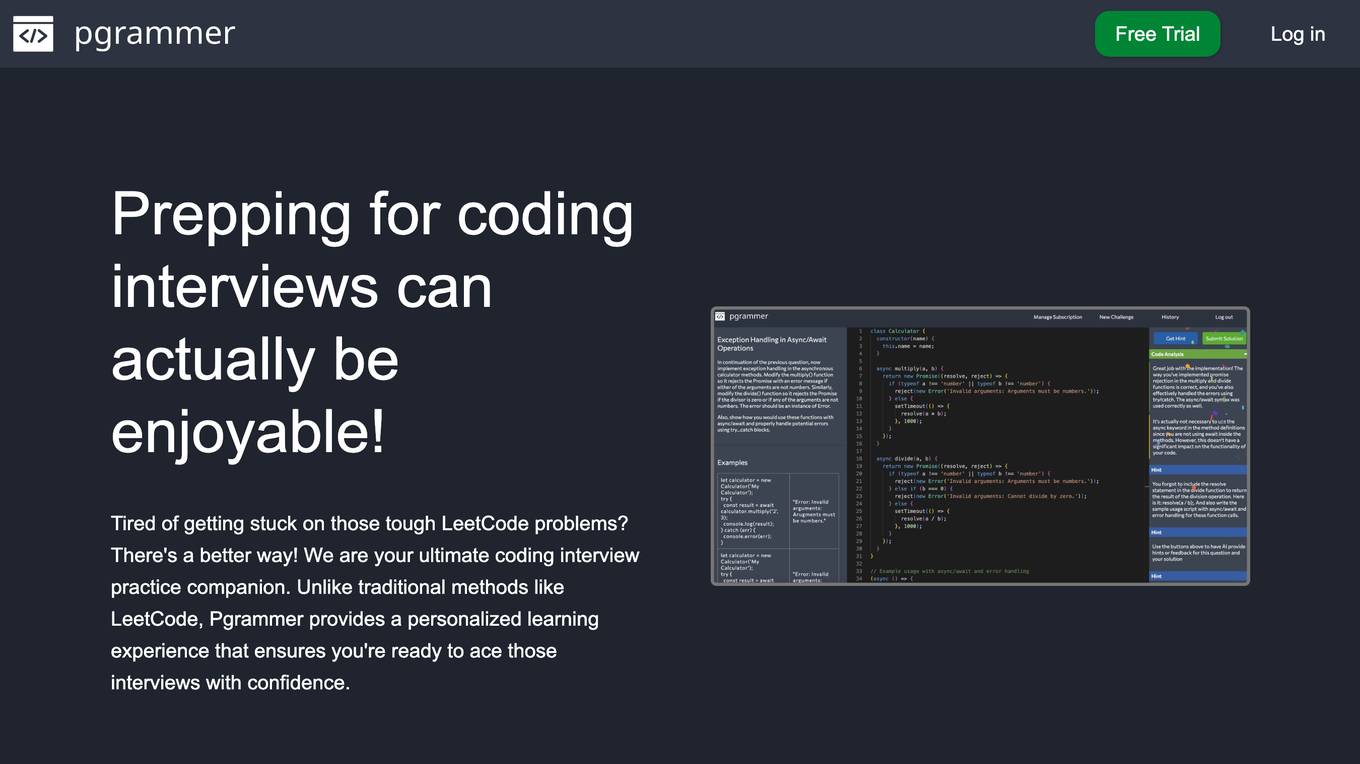

Pgrammer

Pgrammer is an AI-powered platform designed to help users practice coding interview questions with hints and personalized learning experiences. Unlike traditional methods like LeetCode, Pgrammer offers a diverse set of questions for over 20 programming languages, real-time hints, and solution analysis to improve coding skills and knowledge. The platform uses AI, specifically GPT-4, to determine question difficulty levels and provide feedback on code submissions. Pgrammer aims to make coding interview preparation enjoyable and effective for both beginners and seasoned professionals.

Google Colab Copilot

Google Colab Copilot is an AI tool that integrates the GitHub Copilot functionality into Google Colab, allowing users to easily generate code suggestions and improve their coding workflow. By following a simple setup guide, users can start using the tool to enhance their coding experience and boost productivity. With features like code generation, auto-completion, and real-time suggestions, Google Colab Copilot is a valuable tool for developers looking to streamline their coding process.

Lobe

Lobe is a free, easy-to-use tool for Mac and PC that helps you train machine learning models and ship them to any platform you choose. Its interface lets you train models without extensive coding knowledge. Lobe supports machine learning tasks such as creating image-based datasets, working with Python toolsets, and deploying models to different platforms.

GitHub Buoyant

GitHub Buoyant is an AI-powered marine data aggregator tool that fetches marine conditions from NOAA via various free APIs. It provides wave conditions, wind data, tide levels, and water temperature for coastal areas in the US. The tool offers a comprehensive marine report with detailed information on waves, wind, tides, water temperature, and weather forecasts. It includes a library for developers to integrate marine data into their own code and covers US mainland coastal waters, Great Lakes, Hawaii, Alaska, Puerto Rico, USVI, and Guam. The tool handles NOAA's data quirks and provides insights into the technical aspects of data processing and validation.
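
One example of the NOAA data quirks mentioned above is missing readings. The sketch assumes the whitespace-separated layout of NOAA's National Data Buoy Center (NDBC) realtime text files, where `#`-prefixed header lines carry column names and units and `MM` marks a missing value. It is not Buoyant's library; `parse_ndbc_table` is a hypothetical helper and the sample rows are made up.

```python
from typing import Dict, List, Optional


def parse_ndbc_table(text: str) -> List[Dict[str, Optional[float]]]:
    """Parse an NDBC-style whitespace table into one dict per row.

    The first '#' line supplies column names; later '#' lines (units)
    are skipped. 'MM' becomes None so missing data is not mistaken
    for a real reading.
    """
    columns: Optional[List[str]] = None
    rows: List[Dict[str, Optional[float]]] = []
    for line in text.splitlines():
        if not line.strip():
            continue
        if line.startswith("#"):
            if columns is None:
                columns = line.lstrip("#").split()
            continue
        values = line.split()
        if columns is None or len(values) != len(columns):
            continue  # skip malformed rows instead of misaligning fields
        rows.append(
            {name: None if raw == "MM" else float(raw) for name, raw in zip(columns, values)}
        )
    return rows


# Made-up sample in the assumed layout (note the 'MM' water temperature).
SAMPLE = """\
#YY  MM DD hh mm WDIR WSPD WVHT WTMP
#yr  mo dy hr mn degT m/s  m    degC
2024 01 15 12 00 270  5.0  1.2  MM
"""

rows = parse_ndbc_table(SAMPLE)
print(rows[0]["WVHT"], rows[0]["WTMP"])  # 1.2 None
```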

Digicurator Agency

Digicurator Agency is an AI automation partner that specializes in building custom automation solutions and training teams for long-term success. They provide end-to-end services, from discovery and strategy to solution design and development, deployment, and empowerment. Their mission is to transform businesses through custom solutions and expert education, empowering clients to master automation for lasting success. The agency offers full-service solutions that employ AI-driven strategies to propel businesses to new heights.

Weaviate

Weaviate is an open-source vector database designed to help AI builders design, build, and ship complete AI experiences. It provides a foundation for search, retrieval-augmented generation (RAG), and agentic AI, with production-ready features, faster deployment, and seamless model integration. With a billion-scale architecture and enterprise-ready deployment options, Weaviate is built to scale and meet enterprise requirements. Because these AI-first features live under one roof, users can write less custom code and build AI apps efficiently.
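
Vector search, the retrieval step behind the search and RAG features described above, ranks stored items by how similar their embedding vectors are to a query vector. The brute-force sketch below uses cosine similarity on made-up 2-D vectors. It is not Weaviate's client API, and production vector databases, including Weaviate with its default HNSW index, use approximate nearest-neighbor indexes rather than scanning every item.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for the same direction, 0.0 for orthogonal vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k stored vectors most similar to the query."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query, docs[doc_id]), reverse=True)
    return ranked[:k]


# Toy embeddings, made up for illustration.
docs = {"tides": [1.0, 0.0], "waves": [0.7, 0.7], "stocks": [0.0, 1.0]}
print(top_k([1.0, 0.1], docs))  # ['tides', 'waves']
```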

Microsoft Azure

Microsoft Azure is a cloud computing service that offers a wide range of products and solutions for businesses and developers. It provides services such as databases, analytics, compute, containers, hybrid cloud, AI, application development, and more. Azure aims to help organizations innovate, modernize, and scale their operations by leveraging the power of the cloud. With a focus on flexibility, performance, and security, Azure is designed to support a variety of workloads and use cases across different industries.

Qualifyed

Qualifyed is an AI predictive audiences and lead scoring platform designed to help businesses optimize their advertising efforts by targeting people with the highest probability to become customers. The platform uses machine learning to continuously inspect and score leads, providing a model of targetable individuals with a high probability of conversion. Qualifyed aims to decrease customer acquisition costs, increase online conversions, and enhance the efficiency of offline sales teams. By leveraging data and AI technology, Qualifyed offers a solution to reach ideal customers effectively and automatically.
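
Qualifyed does not say which model it uses, so the sketch below assumes a logistic-regression-style score purely to illustrate lead scoring: each lead's features are combined into a conversion probability, and leads are ranked by it. The feature names, weights, and bias are hypothetical; a real system would learn them from historical conversion data.

```python
import math

# Hypothetical weights; in practice these would be fitted to past conversions.
WEIGHTS = {"visited_pricing": 1.5, "opened_email": 0.8, "days_since_visit": -0.1}
BIAS = -2.0


def conversion_probability(features: dict[str, float]) -> float:
    """Logistic score: squash a weighted sum of features into (0, 1)."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def rank_leads(leads: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (lead_id, probability) pairs, most likely to convert first."""
    scored = [(lead_id, conversion_probability(f)) for lead_id, f in leads.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


leads = {
    "lead_a": {"visited_pricing": 1, "opened_email": 1, "days_since_visit": 2},
    "lead_b": {"opened_email": 1, "days_since_visit": 10},
}
for lead_id, p in rank_leads(leads):
    print(lead_id, round(p, 3))
```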

Vectara

Vectara is a conversational search API platform designed for developers, offering best-in-class retrieval and summarization capabilities. It showcases conversational search capabilities and allows users to ask questions about the news, filter by source, and explore various topics. Vectara also supports personalized data queries and offers a free trial for easy access. The platform is built with grounded generation to minimize hallucinations, providing reliable and accurate results for users.

ThirdAI

ThirdAI is an AI platform that offers a production-ready solution for building and deploying AI applications quickly and efficiently. It provides advanced AI/GenAI technology that can run on any infrastructure, reducing barriers to delivering production-grade AI solutions. With features like enterprise SSO, built-in models, no-code interface, and more, ThirdAI empowers users to create AI applications without the need for specialized GPU servers or AI skills. The platform covers the entire workflow of building AI applications end-to-end, allowing for easy customization and deployment in various environments.

Kupiks

Kupiks is a platform that provides access to alternative data for prediction markets. Users can join the waitlist to get early access to real-time alternative data signals from various sources. The platform offers powerful analytics tools for data-driven decision-making and instant alerts for tracking market shifts. Kupiks aims to help users make better predictions by leveraging alternative data.

Susterra

Susterra is an advanced analytics platform for public finance stakeholders that aims to catalyze urban development with data-driven insights. The platform combines leading practices from academia, growing public datasets, and technology such as ML and AI. Its solutions, including TerraScore, TerraVision, TerraView, and Impact IQ, enable sophisticated evaluation of public benefit programs across sectors such as utilities, education, and healthcare. The platform also specializes in data visualization tools and is powered by Google Cloud.

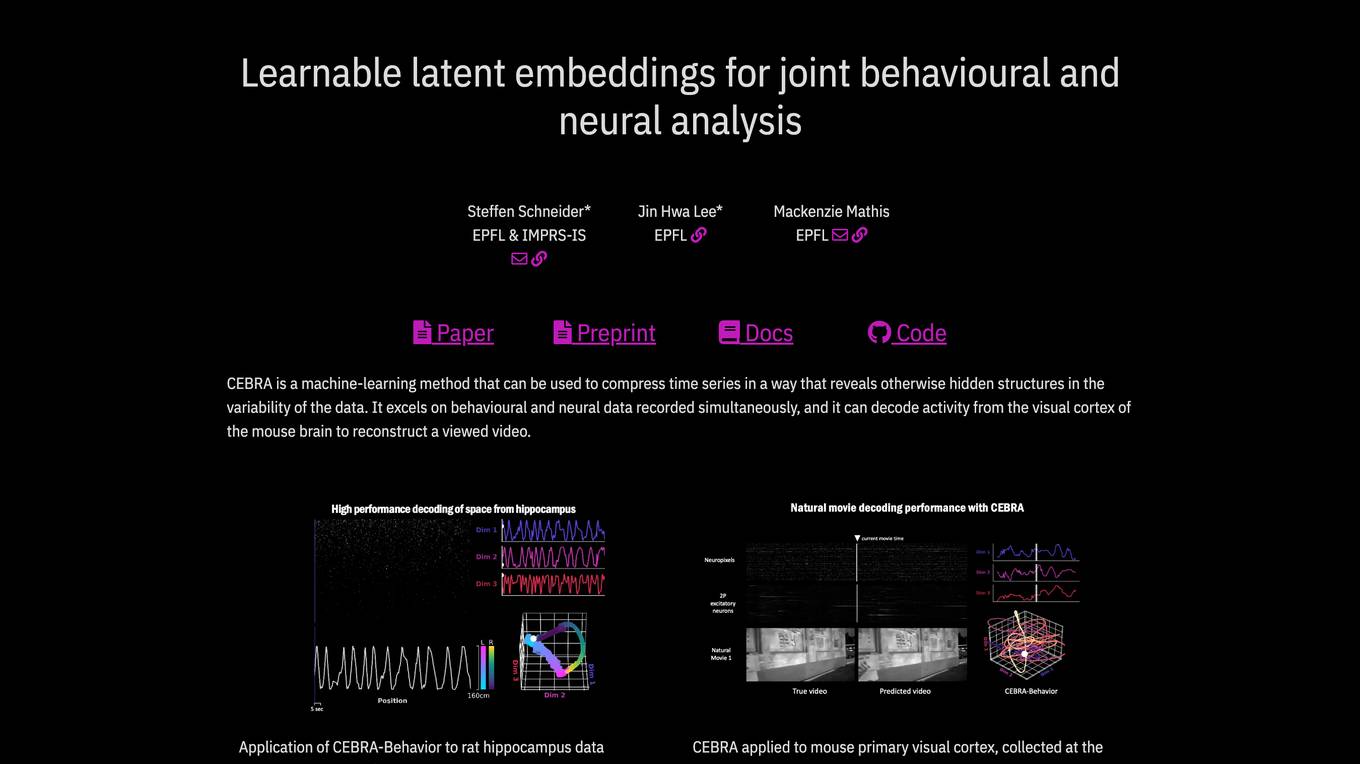

CEBRA

CEBRA is a self-supervised learning algorithm that provides interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode activity from the visual cortex, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, offering consistent and high-performance latent spaces for hypothesis testing and label-free usage across various datasets and species.
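
CEBRA (Contrastive Embedding for Behavioral and Neural Analysis) learns its embeddings contrastively: samples whose auxiliary variables (such as behavior or time) are close are pulled together relative to other samples. The sketch below shows a generic single-anchor InfoNCE loss with a positive chosen by the nearest auxiliary label. It is a simplified illustration, not the `cebra` package's implementation; `pick_positive` and `info_nce` are hypothetical helpers and the data are made up.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_positive(anchor_label: float, labels: list[float]) -> int:
    """Index of the candidate whose auxiliary variable is closest to the anchor's."""
    return min(range(len(labels)), key=lambda i: abs(labels[i] - anchor_label))


def info_nce(anchor, positive, negatives, temperature: float = 1.0) -> float:
    """InfoNCE loss for one anchor.

    Equals -log(exp(s_pos) / sum_j exp(s_j)), where each s is a cosine
    similarity divided by the temperature. It is small when the anchor is
    much more similar to its positive than to the negatives.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max before exp for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in logits))
    return log_sum_exp - logits[0]


# Toy 2-D embeddings and auxiliary labels (e.g. position), made up.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
labels = [0.0, 0.2, 5.0, 9.0]
anchor, candidates = embeddings[0], embeddings[1:]
pos = pick_positive(labels[0], labels[1:])  # index into candidates
negatives = [c for i, c in enumerate(candidates) if i != pos]
print(pos, round(info_nce(anchor, candidates[pos], negatives), 3))
```

Lowering `temperature` sharpens the contrast between the positive and the negatives. For real analyses, CEBRA's authors distribute a `cebra` Python package.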