Best AI Tools for AI Safety Researchers


20 - AI Tool Sites

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Its mission is to reduce societal-scale risks from artificial intelligence (AI) by conducting technical and conceptual safety research, building the field of AI safety researchers, and advocating for safety standards, so that AI can be developed and used safely for the benefit of society. Its resources include a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter covering AI safety developments.

blog.biocomm.ai

blog.biocomm.ai is an AI safety blog that focuses on the existential threat posed by uncontrolled and uncontained AI technology. It curates and organizes information related to AI safety, including the risks and challenges associated with the proliferation of AI. The blog aims to educate and raise awareness about the importance of developing safe and regulated AI systems to ensure the survival of humanity.

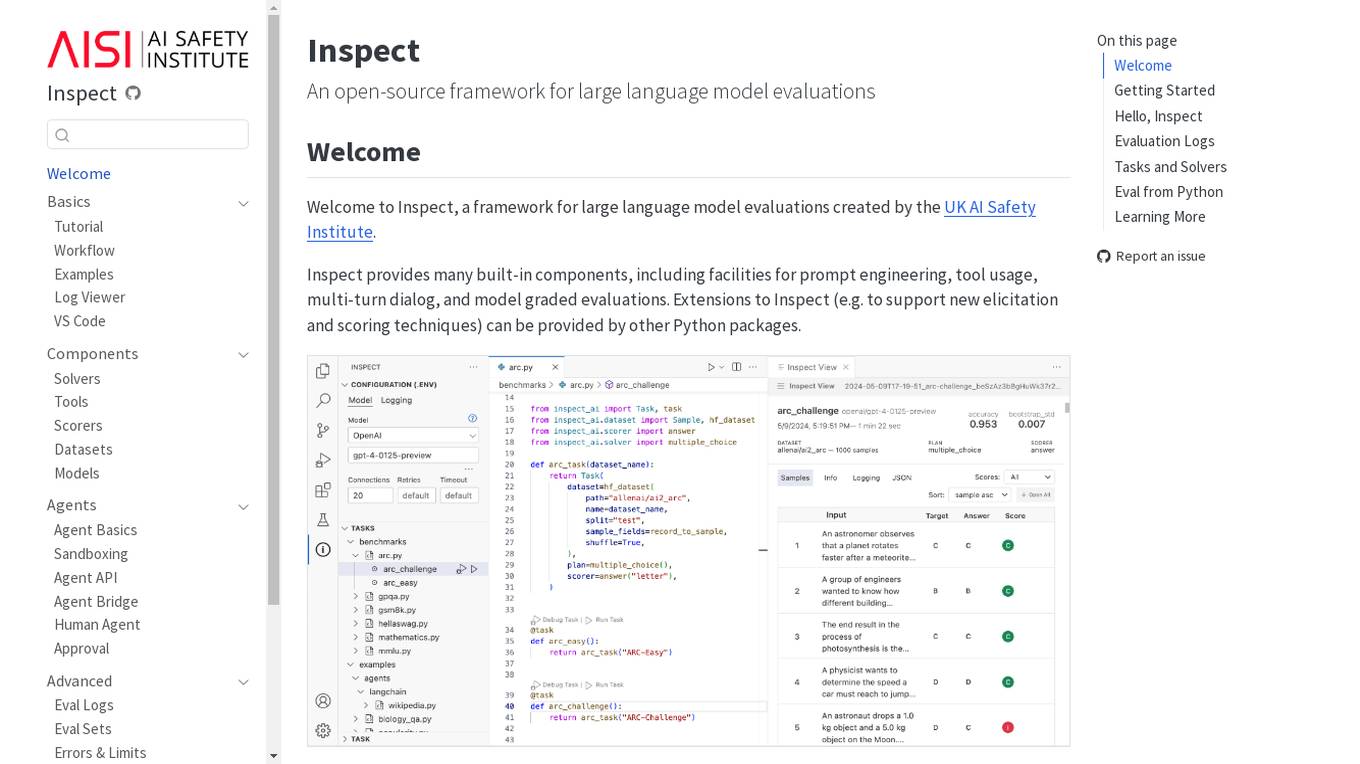

Inspect

Inspect is an open-source framework for large language model evaluations created by the UK AI Safety Institute. It provides built-in components for prompt engineering, tool usage, multi-turn dialog, and model-graded evaluations. Users can combine its solvers, tools, scorers, datasets, and models to build advanced evaluations, and can extend it with new elicitation and scoring techniques distributed as Python packages.
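To make the dataset, solver, and scorer flow concrete, here is a minimal, dependency-free sketch of that evaluation pipeline in plain Python. It does not use Inspect's actual API: `Sample`, `run_eval`, `stub_model`, and `exact_match` are hypothetical names chosen for illustration, and `stub_model` is an offline stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Sample:
    """One evaluation item: the prompt sent to the model and the expected answer."""
    input: str
    target: str


# A "solver" turns a sample's input into a model completion.
Solver = Callable[[str], str]
# A "scorer" compares a completion with the target and returns a score in [0, 1].
Scorer = Callable[[str, str], float]


def stub_model(prompt: str) -> str:
    """Hypothetical offline stand-in for a real LLM call."""
    answers = {"What is 2 + 2?": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


def exact_match(completion: str, target: str) -> float:
    """Case- and whitespace-insensitive exact match."""
    return 1.0 if completion.strip().lower() == target.strip().lower() else 0.0


def run_eval(dataset: list[Sample], solver: Solver, scorer: Scorer) -> float:
    """Run every sample through the solver, score it, and return mean accuracy."""
    scores = [scorer(solver(s.input), s.target) for s in dataset]
    return sum(scores) / len(scores) if scores else 0.0


dataset = [
    Sample("What is 2 + 2?", "4"),
    Sample("Capital of France?", "paris"),
    Sample("Largest planet?", "Jupiter"),
]
accuracy = run_eval(dataset, stub_model, exact_match)
print(f"accuracy = {accuracy:.2f}")  # accuracy = 0.67
```

In Inspect itself these pieces are configured through the framework's own components, and a model-graded scorer replaces string matching with a judgment produced by another model; the Inspect documentation describes the real interfaces.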

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaboration among leading AI companies to advance AI safety and responsibility. It pools technical and operational expertise to advance AI safety research, identify best practices, collaborate across sectors, and support the development of AI applications that help meet society's greatest challenges.

Anthropic

Anthropic is an AI safety and research company based in San Francisco. Its interdisciplinary team has experience across ML, physics, policy, and product, and works together to generate research and create reliable, beneficial AI systems.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy so that everyone can benefit. Its focus areas are skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. In practice, it supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds tools for AI model builders and developers; fosters an ecosystem of AI hardware accelerators; enables open foundation models and datasets; and advocates for regulatory policies that support a healthy AI ecosystem.

Athina AI

Athina AI is a platform that provides research and guides for building safe and reliable AI products. It says it helps thousands of AI engineers build safer products by offering tutorials, research papers, and evaluation techniques for large language models. The platform covers safety, prompt engineering, hallucinations, and model evaluation.

AI Now Institute

AI Now Institute is a think tank focused on the social implications of AI and the consolidation of power in the tech industry. They challenge and reimagine the current trajectory for AI through research, publications, and advocacy. The institute provides insights into the political economy driving the AI market and the risks associated with AI development and policy.

Faculty AI

Faculty AI is an applied AI consultancy and technology provider that helps customers transform their businesses through bespoke AI consultancy and Frontier, which it describes as the world's first AI operating system. Its services include generative AI solutions and AI services tailored to various industries. Faculty AI is known for its expertise in AI governance and safety and for its partnerships with major AI platforms such as OpenAI, AWS, and Microsoft.

WinBuzzer

WinBuzzer is a technology website with a strong focus on AI, covering AI companies, divisions, projects, and labs. Its AI coverage includes chatbots, AI assistants, AI solutions, AI models, and AI agents, as well as AI ethics, AI safety, AI chips, artificial general intelligence (AGI), synthetic data, AI benchmarks, AI regulation, and AI research. The site also covers big tech companies, hardware, software, and cybersecurity.

OpenAI Strawberry Model

OpenAI Strawberry Model is an OpenAI project reported to represent a significant leap in AI capabilities, with a focus on reasoning, problem-solving, and complex task execution. It aims to improve AI's handling of mathematical problems, programming tasks, and deep research, including long-term planning and action. The project is also described as advancing AI safety and reducing errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is intended to bring AI closer to human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

BoodleBox

BoodleBox is a platform for group collaboration with generative AI (GenAI) tools like ChatGPT, GPT-4, and hundreds of others. It allows teams to connect multiple bots, people, and sources of knowledge in one chat to keep discussions engaging, productive, and educational. BoodleBox also provides access to over 800 specialized AI bots and offers easy team management and billing to simplify access and usage across departments, teams, and organizations.

MIRI (Machine Intelligence Research Institute)

MIRI (Machine Intelligence Research Institute) is a non-profit research organization dedicated to ensuring that artificial intelligence has a positive impact on humanity. MIRI conducts foundational mathematical research on topics such as decision theory, game theory, and reinforcement learning, with the goal of developing new insights into how to build safe and beneficial AI systems.

Imbue

Imbue is a company building AI systems that can reason and code. Its goal is to rekindle the dream of the personal computer by creating practical AI agents that can accomplish larger goals and work safely in the real world, and to push the boundaries of what AI can achieve across fields.

O.XYZ

The O.XYZ website is a platform for decentralized superintelligence, governance, research, and innovation in the O Ecosystem. It focuses on AI research, community-driven allocation, and treasury management, and aims to strengthen the O Ecosystem through decentralization and the responsible, safe, and ethical development of Super AI.

Aify.co

Aify.co is a website that covers all things artificial intelligence. It provides news, analysis, and opinion on the latest developments in AI, as well as resources for developers and users. The site is written by a team of experts in AI, and it is committed to providing accurate and up-to-date information on the field.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a UK state-backed organization dedicated to advancing AI governance and safety. It conducts rigorous research to understand the impacts of advanced AI, develops risk mitigations, and works with AI developers and governments to shape global policymaking. The institute aims to give governments a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a strong technical team and partnerships with leading research organizations, AISI positions itself at the forefront of AI governance.

Lamini

Lamini is an enterprise LLM platform that offers precise recall through Memory Tuning, which it says lets teams reach over 95% accuracy even on large amounts of specific data. A reengineered decoder guarantees JSON output that matches the requested schema (advertised as 100% schema accuracy), and the platform delivers high inference throughput. Lamini can be deployed anywhere, including air-gapped environments, supports training and inference on Nvidia or AMD GPUs, and is known for its factual LLMs.

Texthub

Texthub is an AI-powered chatbot that allows users to engage in NSFW conversations and role-play scenarios. It is designed to provide a realistic and immersive experience, with the AI responding in a natural and engaging way. Texthub also offers a variety of features to enhance the user experience, such as the ability to customize the AI's appearance and personality.

3 - Open Source Tools

alignment-handbook

The Alignment Handbook provides robust training recipes for continued pretraining and for aligning language models with human and AI preferences. Its techniques include continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on how to train these models, collect data, and measure metrics for optimal downstream performance.
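DPO trains directly on preference pairs without fitting a separate reward model. The sketch below computes the standard per-pair DPO loss, -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), in plain Python. It is not code from the Alignment Handbook; the function names and the default `beta=0.1` are illustrative assumptions, and each input is assumed to be a summed log-probability of a full response under the policy or the frozen reference model.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-pair DPO loss for one (chosen, rejected) preference pair."""
    # Implicit rewards: how far the policy has moved from the reference on each response.
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    # The loss falls as the policy favors the chosen response more than the reference does.
    margin = beta * (chosen_ratio - rejected_ratio)
    return -log_sigmoid(margin)


# Policy identical to the reference: the margin is 0, so the loss is log 2.
print(round(dpo_loss(-5.0, -7.0, -5.0, -7.0), 4))  # 0.6931
# Policy raises the chosen log-prob and lowers the rejected one relative to the reference.
print(round(dpo_loss(-4.0, -8.0, -5.0, -7.0), 4))  # 0.5981
```

In a real training loop these values would be batched tensors and the mean loss would be backpropagated through the policy only, with the reference model kept frozen.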

safety-tooling

The safety-tooling repository is designed to be shared across AI safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. Its aim is to make collaboration easier for AI safety researchers, especially those with limited software engineering backgrounds, by giving them a larger shared codebase to contribute to. The repo can be added to a project as a git submodule for easy collaboration and updates, or installed with pip. It includes support for secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.
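The idea of one calling convention over several vendors can be sketched as a small adapter layer with response caching. This is a hypothetical illustration of the design pattern, not safety-tooling's actual API: `Prompt`, `ChatProvider`, `EchoProvider`, `UnifiedAPI`, the model-name prefixes, and the in-memory dict cache (standing in for something like a Redis cache) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Prompt:
    """Vendor-neutral prompt; frozen so it can be used as a cache key."""
    system: str
    user: str


class ChatProvider(Protocol):
    """Anything with this method can be plugged in as a provider."""
    def complete(self, prompt: Prompt, model: str) -> str: ...


class EchoProvider:
    """Hypothetical offline provider used in place of a real vendor SDK."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.calls = 0

    def complete(self, prompt: Prompt, model: str) -> str:
        self.calls += 1
        return f"[{self.name}/{model}] {prompt.user}"


class UnifiedAPI:
    """Route each request to a provider by model-name prefix and cache responses."""
    def __init__(self, routes: dict[str, ChatProvider]) -> None:
        self.routes = routes
        self.cache: dict[tuple[str, Prompt], str] = {}

    def __call__(self, model: str, prompt: Prompt) -> str:
        key = (model, prompt)
        if key in self.cache:
            return self.cache[key]
        for prefix, provider in self.routes.items():
            if model.startswith(prefix):
                response = provider.complete(prompt, model)
                self.cache[key] = response
                return response
        raise ValueError(f"no provider registered for model {model!r}")


openai_like = EchoProvider("openai")
anthropic_like = EchoProvider("anthropic")
api = UnifiedAPI({"gpt-": openai_like, "claude-": anthropic_like})
prompt = Prompt(system="You are a helpful assistant.", user="Hello")
print(api("claude-example", prompt))  # [anthropic/claude-example] Hello
api("claude-example", prompt)  # second call is served from the cache
print(anthropic_like.calls)  # 1
```

Keeping experiment code against a single call signature means swapping vendors, or replaying cached results, does not require touching the experiment itself.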

Awesome-Trustworthy-Embodied-AI

The Awesome Trustworthy Embodied AI repository focuses on the development of safe and trustworthy Embodied Artificial Intelligence (EAI) systems. It addresses critical challenges related to safety and trustworthiness in EAI, proposing a unified research framework and defining levels of safety and resilience. The repository provides a comprehensive review of state-of-the-art solutions, benchmarks, and evaluation metrics, aiming to bridge the gap between capability advancement and safety mechanisms in EAI development.

20 - OpenAI GPTs

GPT Safety Liaison

A liaison GPT for AI safety emergencies, connecting users to OpenAI experts.

AI Ethica Readify

Summarises AI ethics papers, provides context, and offers further assistance.

Alignment Navigator

AI Alignment guided by interdisciplinary wisdom and a future-focused vision.

Prompt Injection Detector

GPT used to classify prompts as valid inputs or injection attempts, with JSON output.
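When a classifier like this returns JSON, the calling code still has to parse and validate the reply before acting on it. The sketch below assumes a hypothetical reply schema, `{"classification": "valid" | "injection", "reason": "<string>"}`; the GPT's real output fields are not documented here, and `parse_verdict` and `is_injection` are illustrative names.

```python
import json

# Labels allowed by the hypothetical reply schema.
ALLOWED_LABELS = {"valid", "injection"}


def parse_verdict(raw: str) -> dict:
    """Parse a classifier reply and check it against the assumed schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    label = data.get("classification")
    if label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected classification: {label!r}")
    if not isinstance(data.get("reason"), str):
        raise ValueError("'reason' must be a string")
    return data


def is_injection(raw: str) -> bool:
    """Fail closed: treat unparseable or off-schema replies as injection attempts."""
    try:
        return parse_verdict(raw)["classification"] == "injection"
    except ValueError:
        return True


print(is_injection('{"classification": "valid", "reason": "ordinary question"}'))  # False
print(is_injection("Sure! Here is my analysis..."))  # True (not JSON)
```

Failing closed is a deliberate choice: a malformed reply from the classifier is itself a sign that something may have interfered with it, so the input is not passed through.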

Individual Intelligence Oriented Alignment

Ask this AI anything about alignment and it will describe the best course of action a superintelligence should take according to its Alignment Principles.

Chemistry Expert

Advanced AI for chemistry, offering innovative solutions, process optimizations, and safety assessments, powered by OpenAI.

Töökeskkonna spetialist Eestis 2024 (Working Environment Specialist in Estonia 2024)

Knows everything about the working environment and the laws that apply to it.

香港地盤安全佬 HK Construction Site Safety Advisor

Upload a site photo to assess potential hazards and get advice from an experienced AI Safety Officer.

PharmaFinder AI

Identifies medications and active ingredients from photos for user safety.

Buildwell AI - UK Construction Regs Assistant

Provides construction support on planning permission, Building Regulations, the Party Wall Act, and fire safety in the UK. Get instant guidance for your construction project.

TrafficFlow

A specialized AI for optimizing traffic control, predicting bottlenecks, and improving road safety.

DateMate

Your friendly AI assistant for voice-based dating, offering personalized tips, safety advice, and fun interactions.

Hazard Analyst

Generates risk maps, emergency response plans and safety protocols for disaster management professionals.