Best AI tools for Conducting AI Safety Research

20 - AI Tool Sites

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. Their mission is to reduce societal-scale risks associated with artificial intelligence (AI) by conducting impactful research, building the field of AI safety researchers, and advocating for safety standards. They offer resources such as a compute cluster for AI/ML safety projects, a blog with in-depth examinations of AI safety topics, and a newsletter providing updates on AI safety developments. CAIS focuses on technical and conceptual research to address the risks posed by advanced AI systems.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit organization based in San Francisco. They conduct impactful research, advocacy projects, and provide resources to reduce societal-scale risks associated with artificial intelligence (AI). CAIS focuses on technical AI safety research, field-building projects, and offers a compute cluster for AI/ML safety projects. They aim to develop and use AI safely to benefit society, addressing inherent risks and advocating for safety standards.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

O.XYZ

The O.XYZ website is a platform dedicated to decentralized super intelligence, governance, research, and innovation for the O Ecosystem. It focuses on cutting-edge AI research, community-driven allocation, and treasury management. The platform aims to empower the O Ecosystem through decentralization and responsible, safe, and ethical development of Super AI.

Anthropic

Anthropic is an AI safety and research company based in San Francisco. Its interdisciplinary team has experience across ML, physics, policy, and product, and works to generate research and create reliable, beneficial AI systems.

AI Security Institute (AISI)

The AI Security Institute (AISI) is a state-backed organization dedicated to advancing AI governance and safety. They conduct rigorous AI research to understand the impacts of advanced AI, develop risk mitigations, and collaborate with AI developers and governments to shape global policymaking. The institute aims to equip governments with a scientific understanding of the risks posed by advanced AI, monitor AI development, evaluate national security risks, and promote responsible AI development. With a team of top technical staff and partnerships with leading research organizations, AISI is at the forefront of AI governance.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

MIRI (Machine Intelligence Research Institute)

MIRI (Machine Intelligence Research Institute) is a non-profit research organization dedicated to ensuring that artificial intelligence has a positive impact on humanity. MIRI conducts foundational mathematical research on topics such as decision theory, game theory, and reinforcement learning, with the goal of developing new insights into how to build safe and beneficial AI systems.

Modulate

Modulate is a voice intelligence tool that provides proactive voice chat moderation solutions for various platforms, including gaming, delivery services, and social platforms. It uses advanced AI technology to detect and prevent harmful behaviors, ensuring a safer and more positive user experience. Modulate helps organizations comply with regulations, enhance user safety, and improve community interactions through its customizable and intelligent moderation tools.

Her Trip Planner

Her Trip Planner is an AI-powered platform designed exclusively for women adventurers to streamline trip planning, curate personalized itineraries, and conduct in-depth safety reviews of destinations. The platform aims to empower women to craft memorable journeys with peace of mind by saving time on planning and addressing safety concerns.

Her Trip Planner

Her Trip Planner is an AI-powered platform designed for women adventurers to streamline trip planning, curate personalized itineraries, and conduct safety reviews of destinations. The platform aims to empower women travelers to craft memorable journeys with peace of mind by providing tailored travel plans and safety assessments based on individual profiles.

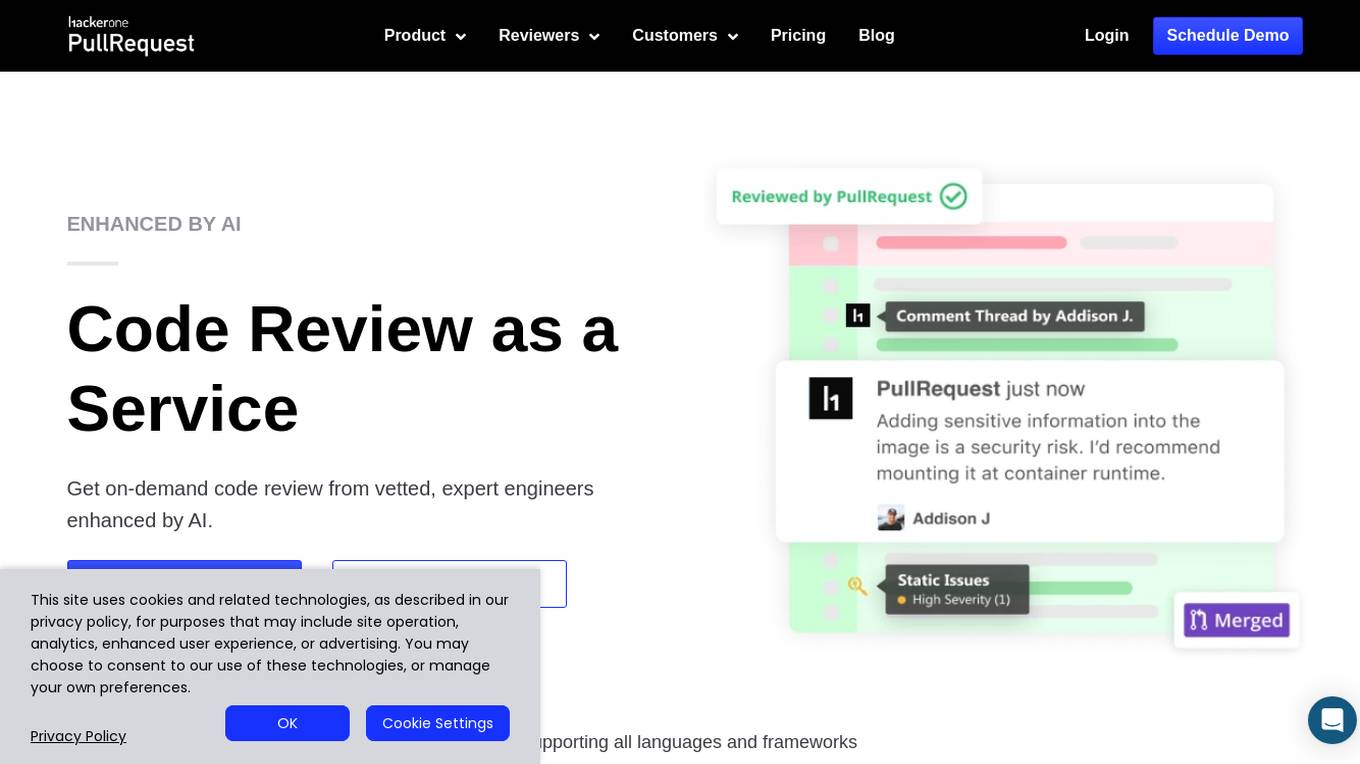

PullRequest

PullRequest is an AI-powered code-review-as-a-service platform that offers on-demand code review from expert engineers, enhanced by AI. It supports all languages and frameworks, helping development teams of any size ship better, more secure code faster. PullRequest integrates with popular version control platforms like GitHub, GitLab, Bitbucket, and Azure DevOps, and gives teams access to knowledge sharing with senior engineers to improve code quality and security. The platform supports code safety and security by adhering to best practices and strict procedures, and by employing reviewers based in the US, the UK, or Canada.

Matrix AI Consulting Services

Matrix AI Consulting Services is an expert AI consultancy firm based in New Zealand, offering bespoke AI consulting services to empower businesses and government entities to embrace responsible AI. With over 24 years of experience in transformative technology, the consultancy provides services ranging from AI business strategy development to seamless integration, change management, training workshops, and governance frameworks. Matrix AI Consulting Services aims to help organizations unlock the full potential of AI, enhance productivity, streamline operations, and gain a competitive edge through the strategic implementation of AI technologies.

Samta.ai

Samta.ai is a leading Data & AI Consulting Services Company that specializes in turning complex data into intelligent, actionable decisions powered by next-generation AI, ML, and product engineering. They offer a range of AI-driven solutions and services, including predictive analytics, property management automation, AI-driven hiring assessments, and more. With a focus on sustainable digitalization, Samta.ai helps businesses navigate transformative journeys by integrating Data Science & Analytics (DSA) and AI solutions. Their team of experts provides services in AI & Data Engineering, Product Engineering, Consulting & Strategy, and more, helping businesses leverage the power of AI to drive innovation, efficiency, and data-driven success.

Zavata

Zavata is an AI tool designed for automating the hiring process through AI interviews. It offers features such as automated scheduling, AI-powered interviews, real-time feedback, and fair assessments. Zavata aims to optimize the recruitment journey for both employers and candidates by leveraging advanced AI technology. The platform provides personalized and engaging interview experiences, data-driven decision-making, and seamless integration with existing HR tools.

Random Walk

Random Walk is an advanced AI solutions provider for modern enterprises, offering AI consulting, integration services, and a range of AI tools tailored to various business functions and industries. The platform specializes in seamless AI integration, empowering businesses to maximize their potential through the adoption of AI technologies. With a focus on corporate AI fundamentals and managed services, Random Walk aims to simplify AI adoption and digital transformation for its clients.

Seedbox

Seedbox is an AI-based solution provider that crafts custom AI solutions to address specific challenges and boost businesses. They offer tailored AI solutions, state-of-the-art corporate innovation methods, high-performance computing infrastructure, secure and cost-efficient AI services, and maintain the highest security standards. Seedbox's expertise covers in-depth AI development, UX/UI design, and full-stack development, aiming to increase efficiency and create sustainable competitive advantages for their clients.

RoundOneAI

RoundOneAI is an AI-driven platform revolutionizing tech recruitment by offering unbiased and efficient candidate assessments, ensuring skill-based evaluations free from demographic biases. The platform streamlines the hiring process with tailored job descriptions, AI-powered interviews, and insightful analytics. RoundOneAI helps companies evaluate candidates simultaneously, make informed hiring decisions, and identify top talent efficiently.

HEROZ

HEROZ is a Japanese company that specializes in AI technology. They offer a variety of AI-related services, including AI/DX support, AI consulting, and AI development. HEROZ's mission is to use AI to solve various problems in different industries and create a better future.

OpenChat

OpenChat is a website that offers users ideas for making money with ChatGPT and AI; its slogan is "10,000 Ways to Make Money with ChatGPT and AI". Features include personalized AI income ideas, a library of up to 10,000 ideas, the ability to save ideas for later use, a personal AI business coach, and standard email support. The main drawbacks are that it is a paid service and that the number of tokens users can use each month is limited. Frequently asked questions cover how to use the website, how to get personalized AI income ideas, and how to get support from the personal AI business coach. Income ideas span freelance AI business, content creation, virtual assistance, mobile apps, web apps, finance, online surveys, online courses, social media, digital marketing, data entry, legal services, and stock photography. Tasks users can do with the tool include generating AI-driven content, creating AI-powered virtual assistants, developing AI-enhanced mobile apps, building AI-driven websites, offering AI-based financial advice, conducting AI-powered market research, creating AI-generated art, and providing AI-enabled customer support.

0 - Open Source AI Tools

20 - OpenAI GPTs

OAI Governance Emulator

I simulate the governance of a unique company focused on AI for good.

DueDiligencePro AI

"DueDiligencePro AI" is engineered to support businesses and investment professionals by conducting thorough due diligence on mergers, acquisitions, investments, and other business ventures.

Practitioner's Assistant AI

Assistant for doctors in diagnosis, treatment planning, and medical research.

AI News Generator

Generates accurate, timely news articles from open-source government data.

Due Diligence Guide

Your top-tier due diligence expert, leveraging advanced AI for unmatched insights.

One-Stop Startup

Your go-to AI consultant for building a startup. Detailed reports on Business Viability, Market Research & Analysis, Launching & Scaling, Funding Prospects, and more.

IQ Test Assistant

An AI that conducts 30-question IQ tests, then assesses the results and provides detailed feedback.