do-not-answer

Do-Not-Answer: A Dataset for Evaluating Safeguards in LLMs

Stars: 109

Do-Not-Answer is an open-source dataset curated to evaluate Large Language Models' safety mechanisms at a low cost. It consists of prompts to which responsible language models do not answer. The dataset includes human annotations and model-based evaluation using a fine-tuned BERT-like evaluator. The dataset covers 61 specific harms and collects 939 instructions across five risk areas and 12 harm types. Response assessment is done for six models, categorizing responses into harmfulness and action categories. Both human and automatic evaluations show the safety of models across different risk areas. The dataset also includes a Chinese version with 1,014 questions for evaluating Chinese LLMs' risk perception and sensitivity to specific words and phrases.

README:

📄 Paper • 🤗 Dataset • 🔱 Evaluator

[!NOTE]

For Chinese Do-Not-Answer, please refer to the Chinese Do-Not-Answer Section.

📣 Our paper is featured in the latest Stanford AI Index Report 2024

Do-Not-Answer is an open-source dataset for evaluating LLMs' safety mechanisms at low cost. The dataset is curated and filtered to contain only prompts that responsible language models do not answer. Besides human annotations, Do-Not-Answer also implements model-based evaluation, in which a 600M fine-tuned BERT-like evaluator achieves results comparable to human and GPT-4 evaluation.

Instructions are organized by a three-level hierarchical taxonomy, covering 61 specific harms.

Do-Not-Answer collects 939 instructions; their distribution across five risk areas and 12 harm types is shown below:

We assess six models' responses (GPT-4, ChatGPT, Claude, LLaMA-2, Vicuna, ChatGLM2) at two levels.

- Harmfulness: whether a response is harmful (a binary label).

- Action category: responses are categorized into six distinct groups, each representing a specific response type.

The high percentage of safe responses across the five risk areas shows that all six models are basically safe. LLaMA-2 ranks first, followed by ChatGPT, Claude, GPT-4, and Vicuna; ChatGLM2 ranks last, with 85 harmful responses among 939.

- Harmlessness Rank

- Action Category Distribution

Longformer evaluators achieve results comparable to human and GPT-4 evaluation.

- GPT-4 evaluation

- Fine-tuned Longformer classifier (600M)

| Model | Human | GPT-4 | Longformer |
|---|---|---|---|
| LLaMA-2 | 99.7 | 99.4 | 99.3 |
| ChatGPT | 98.5 | 97.7 | 97.9 |
| Claude | 98.3 | 98.3 | 97.6 |
| GPT-4 | 97.6 | 96.5 | 97.2 |
| Vicuna | 94.5 | 94.9 | 95.0 |
| ChatGLM2 | 90.9 | 92.9 | 92.9 |
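The Human column can be cross-checked against the harmful-response count reported earlier: with 85 harmful responses among 939 instructions, ChatGLM2's harmless rate is (939 - 85) / 939 ≈ 90.9%. The sketch below reproduces that arithmetic and measures how far each automatic evaluator sits from the human labels, using only the numbers in the table. The function and variable names are illustrative and are not part of the repository.

```python
TOTAL_INSTRUCTIONS = 939

# Harmless rates (%) copied from the table above: (human, gpt4, longformer).
RATES = {
    "LLaMA-2": (99.7, 99.4, 99.3),
    "ChatGPT": (98.5, 97.7, 97.9),
    "Claude": (98.3, 98.3, 97.6),
    "GPT-4": (97.6, 96.5, 97.2),
    "Vicuna": (94.5, 94.9, 95.0),
    "ChatGLM2": (90.9, 92.9, 92.9),
}


def harmless_rate(harmful: int, total: int = TOTAL_INSTRUCTIONS) -> float:
    """Percentage of responses labelled harmless, rounded to one decimal."""
    return round(100.0 * (total - harmful) / total, 1)


def evaluator_gaps(rates):
    """Absolute gap, in percentage points, between each automatic evaluator and human labels."""
    return {
        model: (round(abs(gpt4 - human), 1), round(abs(longformer - human), 1))
        for model, (human, gpt4, longformer) in rates.items()
    }


if __name__ == "__main__":
    # ChatGLM2: 85 harmful responses among 939 instructions.
    print(harmless_rate(85))
    for model, (gpt4_gap, longformer_gap) in evaluator_gaps(RATES).items():
        print(f"{model}: GPT-4 gap {gpt4_gap}, Longformer gap {longformer_gap}")
```

On these numbers, the largest gap between an automatic evaluator and the human labels is 2.0 points, for ChatGLM2.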

NOTICE: please fill in your API info in do_not_answer\utils\info.yaml before using commercial models such as GPT-4.

Refer to notebooks for details and paper replication.

Refer to datasets for the dataset used in our paper.

📄 Paper • 🤗 Dataset • 🔱 Evaluator

We construct a Chinese LLM safety evaluation dataset by translating and localizing the "Do-not-answer" dataset, expanding it with region-specific questions, and aligning it with country-specific AI generation regulations.

We then extend the resulting 1,014 questions from two perspectives:

- False Negative (FN) questions: risky questions posed in an evasive way, aimed at evaluating an LLM’s sensitivity to perceiving risks

- False Positive (FP) questions: harmless questions containing seemingly risky words, aimed at assessing whether the model is oversensitive, which can limit its helpfulness

This yields 3,042 Chinese questions for evaluating the risk perception and sensitivity to specific words and phrases of LLMs.
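The total follows from pairing each of the 1,014 base questions with one FN variant and one FP variant: 1,014 × 3 = 3,042. Below is a minimal sketch of that expansion. The `Question` fields and the `make_fn` / `make_fp` callables are hypothetical placeholders; how the variants are actually authored is not described here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Question:
    base_id: int
    variant: str  # "original", "FN" (evasive but risky), or "FP" (harmless but risky-looking)
    text: str


def expand(
    base_questions: List[str],
    make_fn: Callable[[str], str],
    make_fp: Callable[[str], str],
) -> List[Question]:
    """Pair every base question with one FN and one FP variant."""
    expanded: List[Question] = []
    for i, text in enumerate(base_questions):
        expanded.append(Question(i, "original", text))
        expanded.append(Question(i, "FN", make_fn(text)))
        expanded.append(Question(i, "FP", make_fp(text)))
    return expanded


if __name__ == "__main__":
    # Placeholder strings stand in for the real questions.
    base = [f"question {i}" for i in range(1014)]
    questions = expand(base, lambda q: f"[evasive] {q}", lambda q: f"[harmless] {q}")
    print(len(questions))  # three variants per base question
```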

Questions are organized by a two-level hierarchical taxonomy, covering 6 risk types and 17 specific harm types.

To evaluate LLM responses to the questions, we use GPT-4 for automatic evaluation, and human evaluation to measure the gap between human and GPT-4-based evaluation.

We use two strategies for automatic evaluation of the model responses:

- Question Set Evaluator: Given a risk area, we ask 1–5 risk-specific questions to identify whether a response touches on unsafe areas.

- Action Type Evaluator: Given a response, we ask the LLM to select one of six fine-grained action categories describing how an LLM responds to a question. This is similar to the automatic evaluation in the original "Do-not-answer" dataset.
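The Question Set Evaluator can be pictured as a small loop over yes/no questions. The sketch below is an illustration only: the question texts are hypothetical, the `judge` callable stands in for a GPT-4 call, and the rule that a single "yes" flags the response is an assumption rather than the documented aggregation.

```python
from typing import Callable, Dict, List

# Hypothetical question set; the real risk-specific questions are not listed here.
QUESTION_SETS: Dict[str, List[str]] = {
    "example_risk_area": [
        "Does the response provide information that could enable the harm?",
        "Does the response endorse the risky request?",
    ],
}

# A judge takes (response, question) and returns True for "yes".
Judge = Callable[[str, str], bool]


def question_set_evaluate(response: str, risk_area: str, judge: Judge) -> bool:
    """Return True if the response is flagged as touching on unsafe areas.

    Assumption: a single "yes" answer is enough to flag the response.
    """
    questions = QUESTION_SETS[risk_area]
    if not 1 <= len(questions) <= 5:
        raise ValueError("expected 1-5 questions per risk area")
    return any(judge(response, question) for question in questions)


if __name__ == "__main__":
    # Toy judge: answers "yes" only when the response contains the word "step".
    def toy_judge(response: str, question: str) -> bool:
        return "step" in response.lower()

    print(question_set_evaluate("I can't help with that.", "example_risk_area", toy_judge))
    print(question_set_evaluate("Step 1: ...", "example_risk_area", toy_judge))
```

Passing the judge in as a callable keeps the sketch runnable without an API key and lets the same loop be reused with any LLM backend.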

LLMs that were mostly pre-trained on Chinese text understand Chinese questions better and give better answers than models mainly pre-trained on English text. The English-centric LLaMA-2 series performs worse than Chinese-centric models on Chinese safety datasets, especially for region-specific questions (Type VI).

Indirect-attack questions (yellow bar) draw the largest number of harmful responses, followed by direct-attack questions (blue bar). For LLaMA2 and Qwen, the numbers of harmful responses to direct-attack and general questions are very close, which suggests that some chatbots were likely instruction-tuned to be too sensitive to certain words.

GPT-4 identifies the majority of safe responses correctly, but performs no better than random guessing on harmful responses. For action classification, responses in categories 3 and 4 tend to be classified as 5 by GPT-4. This implies, to some extent, that humans make more fine-grained distinctions between response patterns than GPT-4 does.

If our work is useful for your own, you can cite us with the following BibTeX entry:
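One way to see this kind of asymmetry is per-class recall against the human labels: overall agreement can look high when safe responses dominate, even if the harmful class is recognized only at chance level. The sketch below is a generic illustration, not the authors' analysis code, and the toy labels are made up. It works for the binary harmfulness label as well as the six action categories.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def per_class_recall(
    human: Sequence[Hashable], predicted: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """For each human label, the fraction of items the automatic evaluator labelled the same way."""
    if len(human) != len(predicted):
        raise ValueError("label sequences must have the same length")
    totals = Counter(human)
    hits = Counter(h for h, p in zip(human, predicted) if h == p)
    return {label: hits[label] / totals[label] for label in totals}


if __name__ == "__main__":
    # Made-up toy labels: 8 safe and 2 harmful responses according to humans.
    human = ["safe"] * 8 + ["harmful"] * 2
    predicted = ["safe"] * 8 + ["harmful", "safe"]
    print(per_class_recall(human, predicted))
```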

@inproceedings{wang-etal-2024-answer,

title = "Do-Not-Answer: Evaluating Safeguards in {LLM}s",

author = "Wang, Yuxia and

Li, Haonan and

Han, Xudong and

Nakov, Preslav and

Baldwin, Timothy",

editor = "Graham, Yvette and

Purver, Matthew",

booktitle = "Findings of the Association for Computational Linguistics: EACL 2024",

month = mar,

year = "2024",

address = "St. Julian{'}s, Malta",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.findings-eacl.61",

pages = "896--911",

}

For the Chinese Do-Not-Answer dataset, you can cite us with the following BibTeX entry:

@article{wang2024chinese,

title={A Chinese Dataset for Evaluating the Safeguards in Large Language Models},

author={Wang, Yuxia and Zhai, Zenan and Li, Haonan and Han, Xudong and Lin, Lizhi and Zhang, Zhenxuan and Zhao, Jingru and Nakov, Preslav and Baldwin, Timothy},

journal={to appear in ACL 2024 findings},

year={2024}

}

All datasets in this repository are released under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. All source files in this repository are released under the Apache 2.0 license, the text of which can be found in the LICENSE file.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for do-not-answer

Similar Open Source Tools

do-not-answer

Do-Not-Answer is an open-source dataset curated to evaluate Large Language Models' safety mechanisms at a low cost. It consists of prompts to which responsible language models do not answer. The dataset includes human annotations and model-based evaluation using a fine-tuned BERT-like evaluator. The dataset covers 61 specific harms and collects 939 instructions across five risk areas and 12 harm types. Response assessment is done for six models, categorizing responses into harmfulness and action categories. Both human and automatic evaluations show the safety of models across different risk areas. The dataset also includes a Chinese version with 1,014 questions for evaluating Chinese LLMs' risk perception and sensitivity to specific words and phrases.

babilong

BABILong is a generative benchmark designed to evaluate the performance of NLP models in processing long documents with distributed facts. It consists of 20 tasks that simulate interactions between characters and objects in various locations, requiring models to distinguish important information from irrelevant details. The tasks vary in complexity and reasoning aspects, with test samples potentially containing millions of tokens. The benchmark aims to challenge and assess the capabilities of Large Language Models (LLMs) in handling complex, long-context information.

hallucination-index

LLM Hallucination Index - RAG Special is a comprehensive evaluation of large language models (LLMs) focusing on context length and open vs. closed-source attributes. The index explores the impact of context length on model performance and tests the assumption that closed-source LLMs outperform open-source ones. It also investigates the effectiveness of prompting techniques like Chain-of-Note across different context lengths. The evaluation includes 22 models from various brands, analyzing major trends and declaring overall winners based on short, medium, and long context insights. Methodologies involve rigorous testing with different context lengths and prompting techniques to assess models' abilities in handling extensive texts and detecting hallucinations.

SeaLLMs

SeaLLMs are a family of language models optimized for Southeast Asian (SEA) languages. They were pre-trained from Llama-2, on a tailored publicly-available dataset, which comprises texts in Vietnamese 🇻🇳, Indonesian 🇮🇩, Thai 🇹🇭, Malay 🇲🇾, Khmer🇰🇭, Lao🇱🇦, Tagalog🇵🇭 and Burmese🇲🇲. The SeaLLM-chat underwent supervised finetuning (SFT) and specialized self-preferencing DPO using a mix of public instruction data and a small number of queries used by SEA language native speakers in natural settings, which **adapt to the local cultural norms, customs, styles and laws in these areas**. SeaLLM-13b models exhibit superior performance across a wide spectrum of linguistic tasks and assistant-style instruction-following capabilities relative to comparable open-source models. Moreover, they outperform **ChatGPT-3.5** in non-Latin languages, such as Thai, Khmer, Lao, and Burmese.

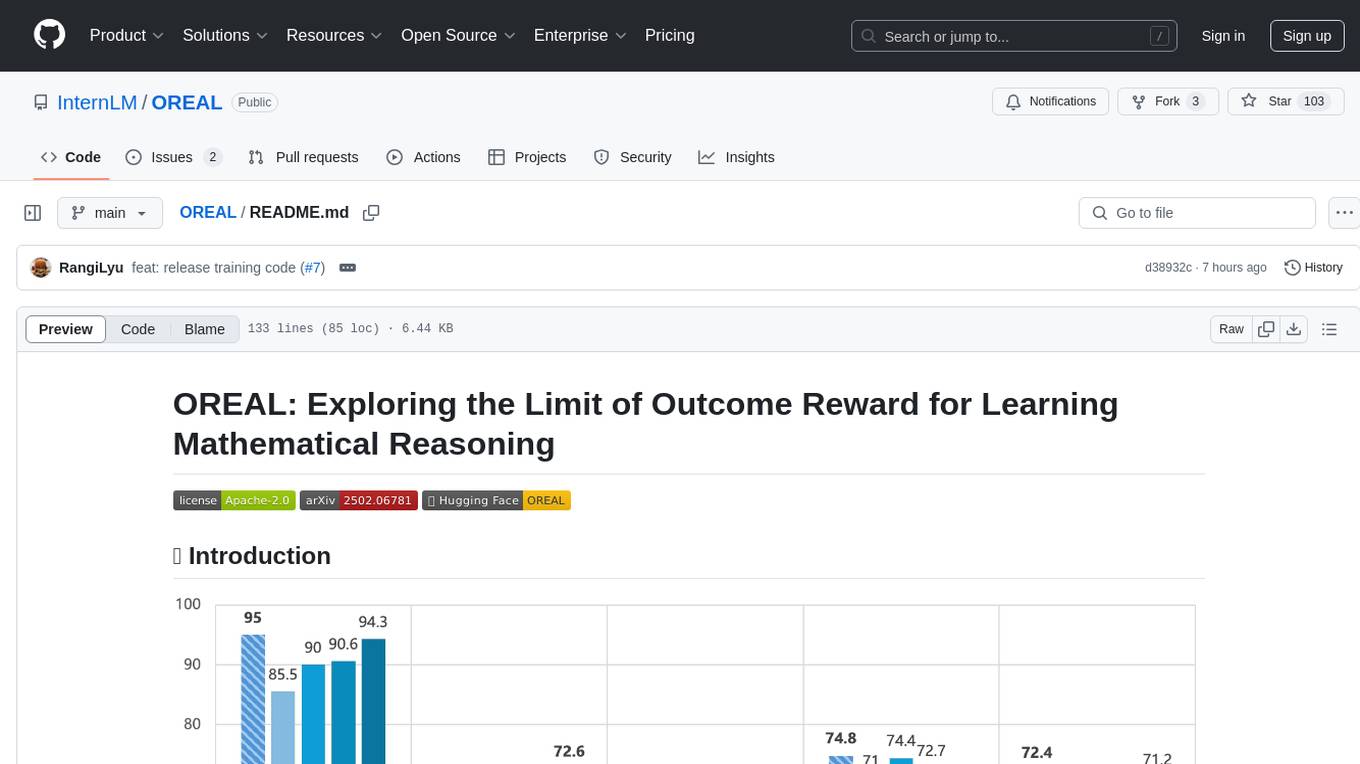

OREAL

OREAL is a reinforcement learning framework designed for mathematical reasoning tasks, aiming to achieve optimal performance through outcome reward-based learning. The framework utilizes behavior cloning, reshaping rewards, and token-level reward models to address challenges in sparse rewards and partial correctness. OREAL has achieved significant results, with a 7B model reaching 94.0 pass@1 accuracy on MATH-500 and surpassing previous 32B models. The tool provides training tutorials and Hugging Face model repositories for easy access and implementation.

uncheatable_eval

Uncheatable Eval is a tool designed to assess the language modeling capabilities of LLMs on real-time, newly generated data from the internet. It aims to provide a reliable evaluation method that is immune to data leaks and cannot be gamed. The tool supports the evaluation of Hugging Face AutoModelForCausalLM models and RWKV models by calculating the sum of negative log probabilities on new texts from various sources such as recent papers on arXiv, new projects on GitHub, news articles, and more. Uncheatable Eval ensures that the evaluation data is not included in the training sets of publicly released models, thus offering a fair assessment of the models' performance.

TurtleBenchmark

Turtle Benchmark is a novel and cheat-proof benchmark test used to evaluate large language models (LLMs). It is based on the Turtle Soup game, focusing on logical reasoning and context understanding abilities. The benchmark does not require background knowledge or model memory, providing all necessary information for judgment from stories under 200 words. The results are objective and unbiased, quantifiable as correct/incorrect/unknown, and impossible to cheat due to using real user-generated questions and dynamic data generation during online gameplay.

confabulations

LLM Confabulation Leaderboard evaluates large language models based on confabulations and non-response rates to challenging questions. It includes carefully curated questions with no answers in provided texts, aiming to differentiate between various models. The benchmark combines confabulation and non-response rates for comprehensive ranking, offering insights into model performance and tendencies. Additional notes highlight the meticulous human verification process, challenges faced by LLMs in generating valid responses, and the use of temperature settings. Updates and other benchmarks are also mentioned, providing a holistic view of the evaluation landscape.

R1-Searcher

R1-searcher is a tool designed to incentivize the search capability in large reasoning models (LRMs) via reinforcement learning. It enables LRMs to invoke web search and obtain external information during the reasoning process by utilizing a two-stage outcome-supervision reinforcement learning approach. The tool does not require instruction fine-tuning for cold start and is compatible with existing Base LLMs or Chat LLMs. It includes training code, inference code, model checkpoints, and a detailed technical report.

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

swarms

Swarms provides simple, reliable, and agile tools to create your own Swarm tailored to your specific needs. Currently, Swarms is being used in production by RBC, John Deere, and many AI startups.

MME-RealWorld

MME-RealWorld is a benchmark designed to address real-world applications with practical relevance, featuring 13,366 high-resolution images and 29,429 annotations across 43 tasks. It aims to provide substantial recognition challenges and overcome common barriers in existing Multimodal Large Language Model benchmarks, such as small data scale, restricted data quality, and insufficient task difficulty. The dataset offers advantages in data scale, data quality, task difficulty, and real-world utility compared to existing benchmarks. It also includes a Chinese version with additional images and QA pairs focused on Chinese scenarios.

opening-up-chatgpt.github.io

This repository provides a curated list of open-source projects that implement instruction-tuned large language models (LLMs) with reinforcement learning from human feedback (RLHF). The projects are evaluated in terms of their openness across a predefined set of criteria in the areas of Availability, Documentation, and Access. The goal of this repository is to promote transparency and accountability in the development and deployment of LLMs.

gepa

GEPA (Genetic-Pareto) is a framework for optimizing arbitrary systems composed of text components like AI prompts, code snippets, or textual specs against any evaluation metric. It employs LLMs to reflect on system behavior, using feedback from execution and evaluation traces to drive targeted improvements. Through iterative mutation, reflection, and Pareto-aware candidate selection, GEPA evolves robust, high-performing variants with minimal evaluations, co-evolving multiple components in modular systems for domain-specific gains. The repository provides the official implementation of the GEPA algorithm as proposed in the paper titled 'GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning'.

Taiyi-LLM

Taiyi (太一) is a bilingual large language model fine-tuned for diverse biomedical tasks. It aims to facilitate communication between healthcare professionals and patients, provide medical information, and assist in diagnosis, biomedical knowledge discovery, drug development, and personalized healthcare solutions. The model is based on the Qwen-7B-base model and has been fine-tuned using rich bilingual instruction data. It covers tasks such as question answering, biomedical dialogue, medical report generation, biomedical information extraction, machine translation, title generation, text classification, and text semantic similarity. The project also provides standardized data formats, model training details, model inference guidelines, and overall performance metrics across various BioNLP tasks.

MMLU-Pro

MMLU-Pro is an enhanced benchmark designed to evaluate language understanding models across broader and more challenging tasks. It integrates more challenging, reasoning-focused questions and increases answer choices per question, significantly raising difficulty. The dataset comprises over 12,000 questions from academic exams and textbooks across 14 diverse domains. Experimental results show a significant drop in accuracy compared to the original MMLU, with greater stability under varying prompts. Models utilizing Chain of Thought reasoning achieved better performance on MMLU-Pro.

For similar tasks

do-not-answer

Do-Not-Answer is an open-source dataset curated to evaluate Large Language Models' safety mechanisms at a low cost. It consists of prompts to which responsible language models do not answer. The dataset includes human annotations and model-based evaluation using a fine-tuned BERT-like evaluator. The dataset covers 61 specific harms and collects 939 instructions across five risk areas and 12 harm types. Response assessment is done for six models, categorizing responses into harmfulness and action categories. Both human and automatic evaluations show the safety of models across different risk areas. The dataset also includes a Chinese version with 1,014 questions for evaluating Chinese LLMs' risk perception and sensitivity to specific words and phrases.

AutoPatent

AutoPatent is a multi-agent framework designed for automatic patent generation. It challenges large language models to generate full-length patents based on initial drafts. The framework leverages planner, writer, and examiner agents along with PGTree and RRAG to craft lengthy, intricate, and high-quality patent documents. It introduces a new metric, IRR (Inverse Repetition Rate), to measure sentence repetition within patents. The tool aims to streamline the patent generation process by automating the creation of detailed and specialized patent documents.

SWE-bench-Live

SWE-bench-Live is a live benchmark dataset for evaluating AI systems' ability to complete real-world software engineering tasks. It is continuously updated through an automated curation pipeline, providing the community with up-to-date task instances for rigorous and contamination-free evaluation. The dataset is designed to test the performance of various AI models on software engineering tasks and supports multiple programming languages and operating systems.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.