AI tools for LLaMA

Related Tools:

Meta Llama

Meta Llama is an AI-powered chatbot that helps you write better. It can help you with a variety of writing tasks, including generating text, translating languages, and writing different kinds of creative content.

Llama Coder

Llama Coder is an AI code generator that leverages the power of Llama 3.1 and Together AI to transform your ideas into functional applications. It provides a user-friendly platform for developers to quickly generate code for their projects, reducing the time and effort required for coding. With its advanced algorithms and machine learning capabilities, Llama Coder streamlines the app development process, making it easier for users to bring their concepts to life.

Botsy

Botsy is an AI chatbot builder designed for WhatsApp, enabling users to create conversational AI chatbots without the need for coding. It allows for natural conversations, brand customization, audience management, user data analysis, and knowledge augmentation. Botsy offers usage-based pricing with discounts for larger message bundles, along with hands-on training and tech support for customers. The platform aims to leverage AI for social impact by providing personalized AI services to communities in need.

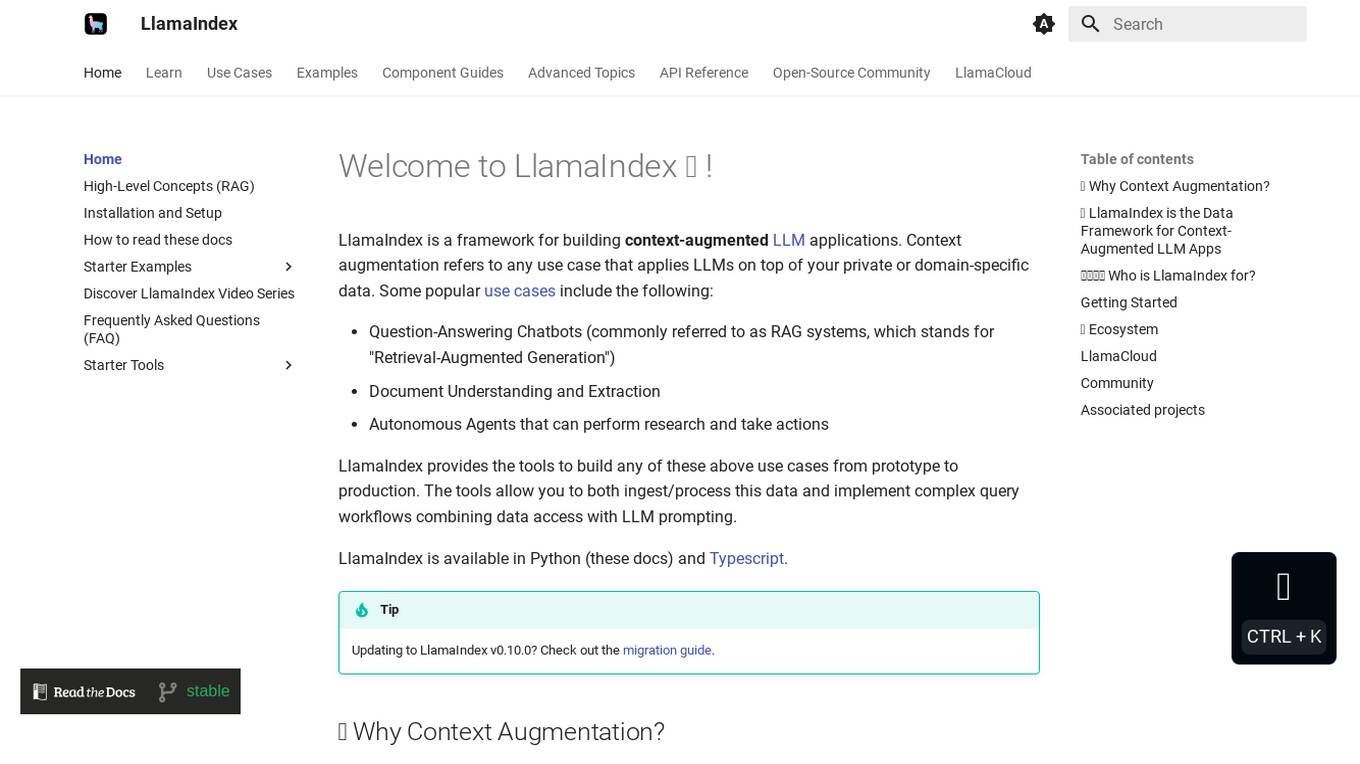

LlamaIndex

LlamaIndex is a leading data framework designed for building LLM (Large Language Model) applications. It allows enterprises to turn their data into production-ready applications by providing functionalities such as loading data from various sources, indexing data, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers in creating innovative LLM applications.

LlamaIndex

LlamaIndex is a framework for building context-augmented Large Language Model (LLM) applications. It provides tools to ingest and process data, implement complex query workflows, and build applications such as question-answering chatbots, document understanding systems, and autonomous agents. LlamaIndex enables context augmentation by combining LLMs with private or domain-specific data, offering data connectors, data indexes, query engines for natural language access, chat engines, agents, and observability/evaluation integrations. It caters to users of all levels, from beginners to advanced developers, and is available in Python and TypeScript.

LlamaGen.Ai

LlamaGen.Ai is an AI-powered ACG (anime, comics, and games) creation tool that lets users create comics, webtoons, manga, and manhwa online. It is aimed at storytelling and visual art, generating comic scenes and cartoons from user input, and is designed to be usable by both beginners and experienced artists.

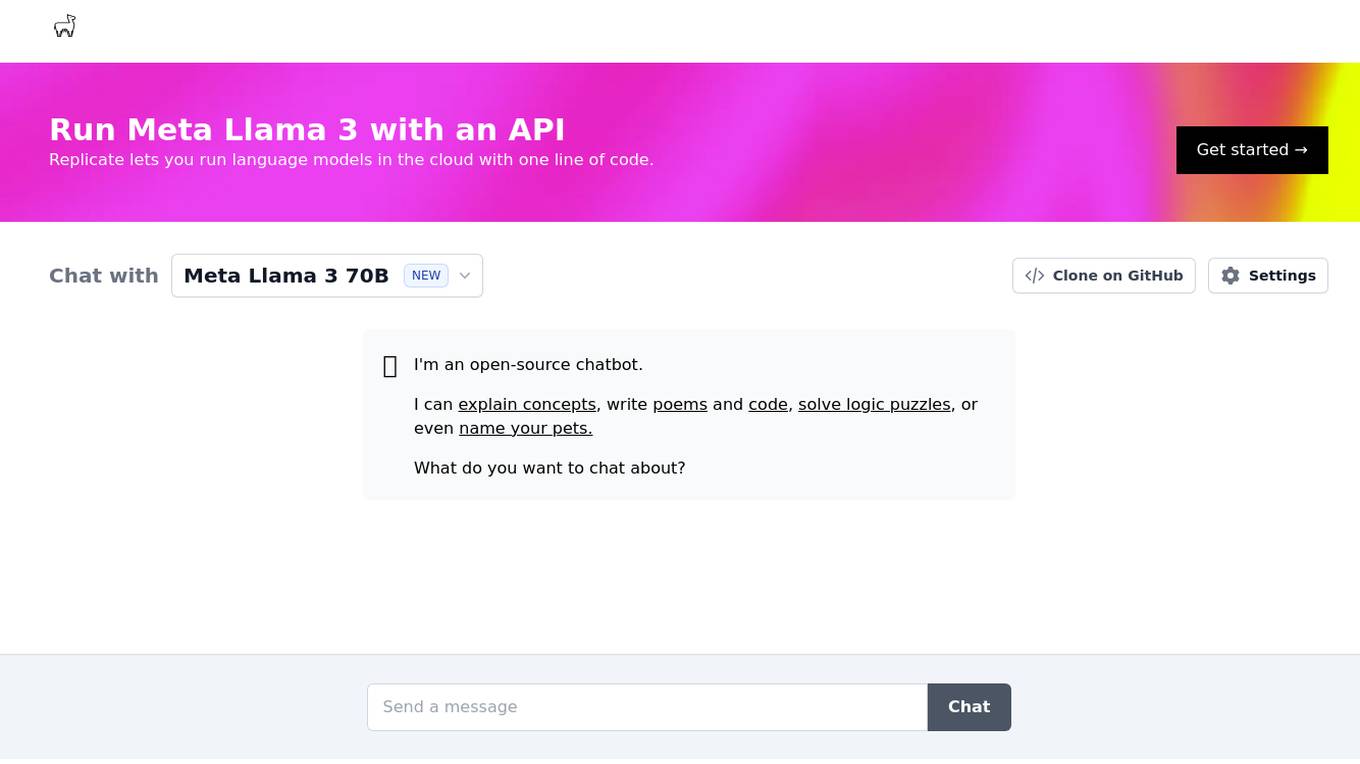

Chat With Llama

Chat with Llama is a free website for interacting with Meta's Llama 3, an AI chat model comparable to ChatGPT. Users can ask unlimited questions and receive prompt responses. Llama 3's weights are openly available under a license that permits commercial use, so developers can customize it and build commercial AI chatbots on top of it. The model has 70 billion parameters, and the site claims its output quality matches GPT-4.

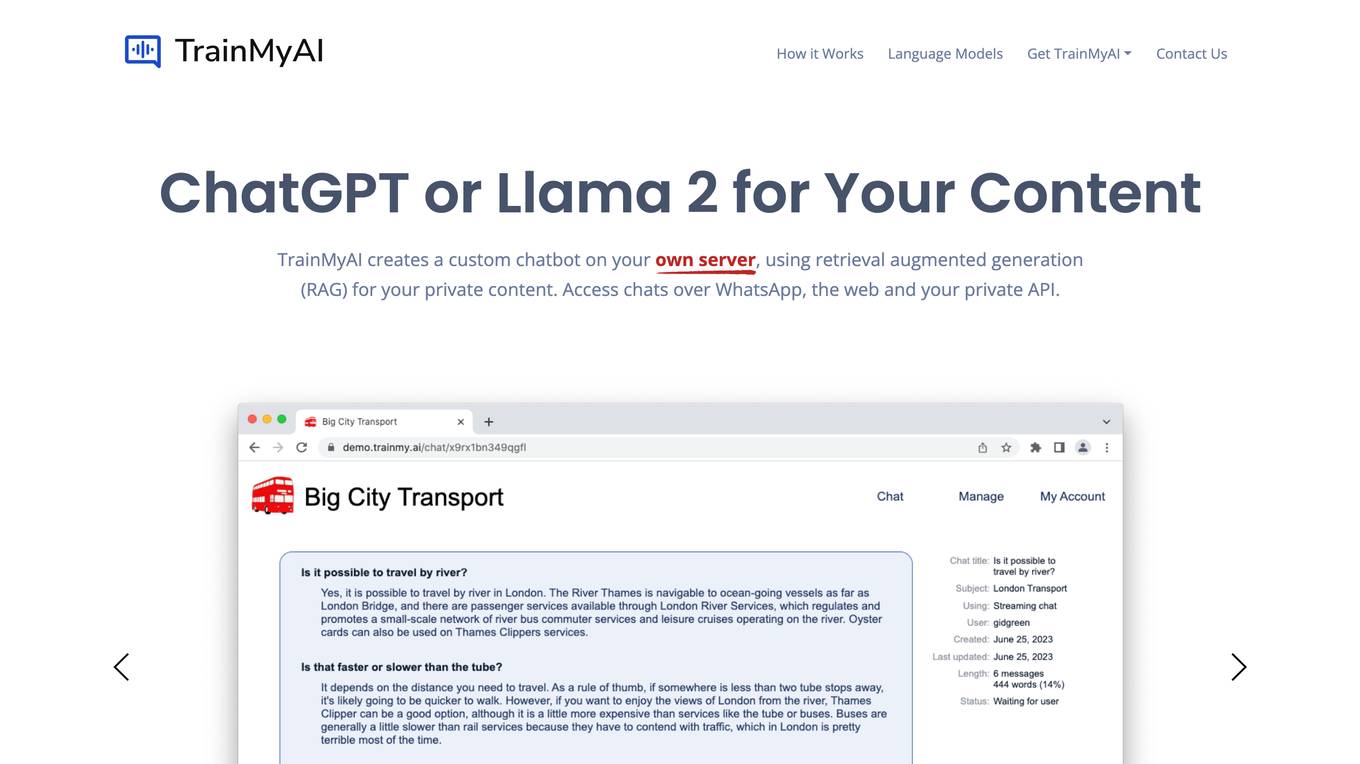

TrainMyAI

TrainMyAI is a comprehensive solution for creating AI chatbots using retrieval augmented generation (RAG) technology. It allows users to build custom AI chatbots on their servers, enabling interactions over WhatsApp, web, and private APIs. The platform offers deep customization options, fine-grained user management, usage history tracking, content optimization, and linked citations. With TrainMyAI, users can maintain full control over their AI models and data, either on-premise or in the cloud.
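
To make the retrieval augmented generation idea concrete, here is a minimal sketch of the retrieval and prompt-building steps. It is not TrainMyAI's implementation: the function names, the sample documents, and the bag-of-words similarity are all assumptions chosen for illustration, and a production system would typically use embedding vectors instead of word counts.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase the text and count its alphanumeric word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents, top_k=1):
    """Put the retrieved passages, numbered for citation, ahead of the question."""
    sources = retrieve(question, documents, top_k)
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(sources))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{context}\nQuestion: {question}"
    )

# Hypothetical knowledge base, for illustration only.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Support is available on weekdays from 9am to 5pm.",
    "Shipping to Europe takes five business days.",
]

if __name__ == "__main__":
    print(build_prompt("When is support available?", DOCS))
```

The prompt produced this way is what would be sent to the language model; numbering the sources is one simple way to support linked citations in the answer.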

Gista

Gista is an AI-powered conversion agent that helps businesses turn more website visitors into leads. It is equipped with knowledge about your products and services and can offer value props, build an email list, and more. Gista is easy to set up and use, and it integrates with your favorite platforms.

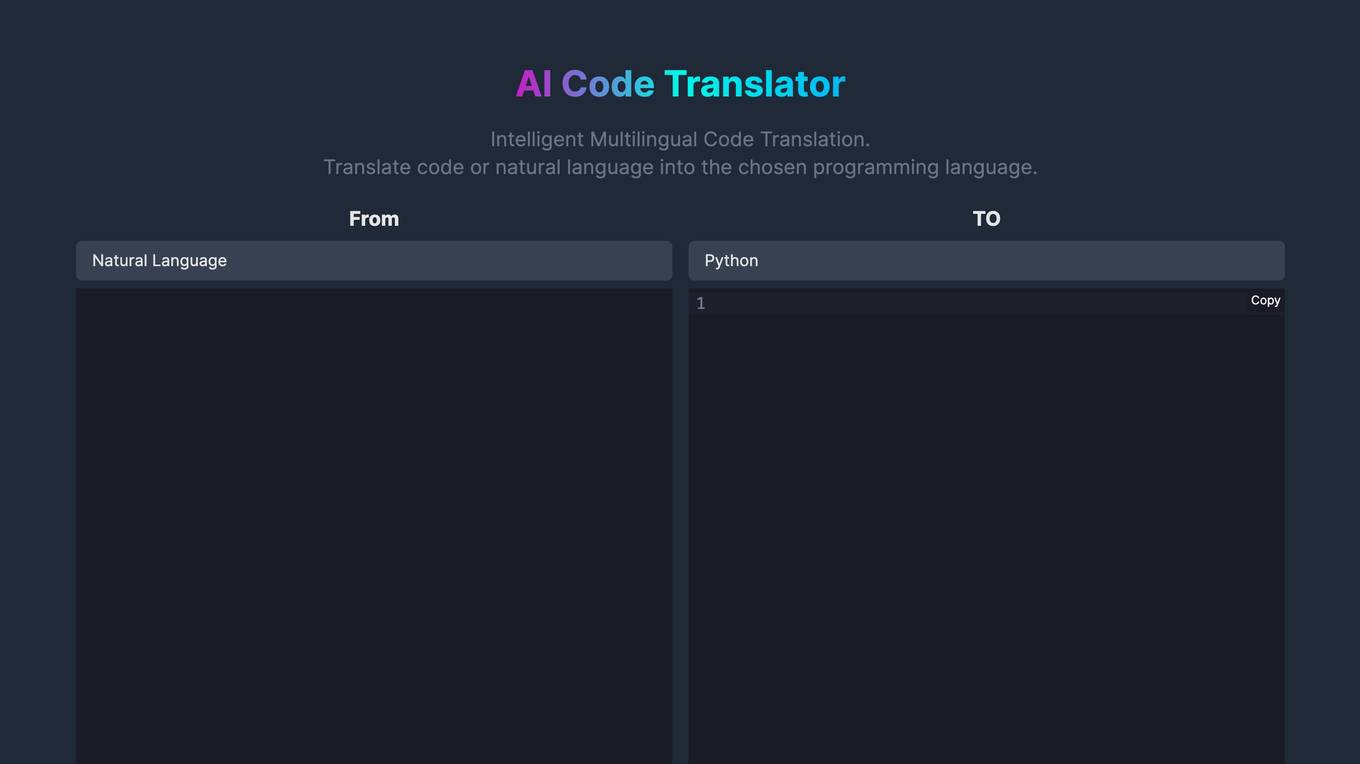

AI Code Translator

AI Code Translator is an online tool that allows users to translate code or natural language into multiple programming languages. It is powered by artificial intelligence (AI) and provides intelligent and efficient code translation. With AI Code Translator, developers can save time and effort by quickly converting code between different languages, optimizing their development process.

Reflection 70B

Reflection 70B is an open-source LLM built on a 70B Llama model. Its developers describe a self-correction mechanism that they claim lets it outperform GPT-4. It supports human-like conversation and assists with research, coding, creative writing, and problem-solving, with an emphasis on accuracy and reliability. Reflection 70B is designed to enhance productivity and decision-making across multiple domains.

MultiChat AI

MultiChat AI is a platform for interacting with a diverse range of large language models (LLMs) in one place. Users can chat with top-tier LLMs, access premium and open-source models, and use pre-built assistants for various tasks. The tool also offers AI image generation, image editing, and customizable AI experiences.

DeepUnit

DeepUnit is a software tool for automated unit testing. It helps developers check the quality and reliability of their code by automatically running tests to identify bugs and errors. The tool is user-friendly and integrates with common developer tooling such as npm and VS Code.

Aicado.ai

Aicado.ai is an AI tool that provides comprehensive comparisons and insights into various AI models such as GPT-4, Llama, Gemini, and more. Users can access detailed reviews and features to find the best AI model for their specific needs. The platform offers a premium dashboard for in-depth analysis and comparison of different AI models.

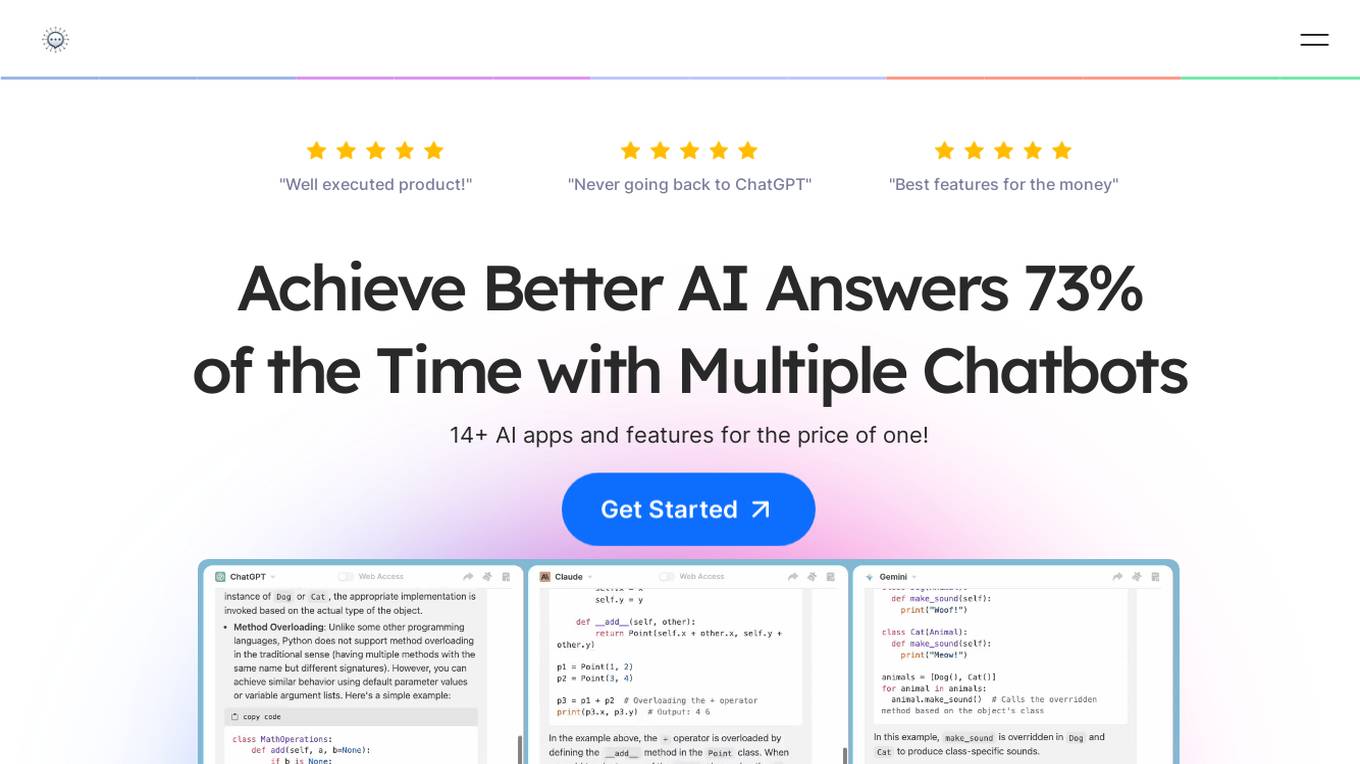

ChatPlayground AI

ChatPlayground AI is a platform for comparing the responses of multiple AI chatbots side by side. It offers 14+ AI apps and features, and the platform claims users get better AI answers 73% of the time. Features include a prompt library, real-time web search, image generation, history recall, document upload and analysis, and multilingual support. It caters to developers, data scientists, students, researchers, content creators, writers, and AI enthusiasts. User testimonials highlight gains in efficiency and creativity.

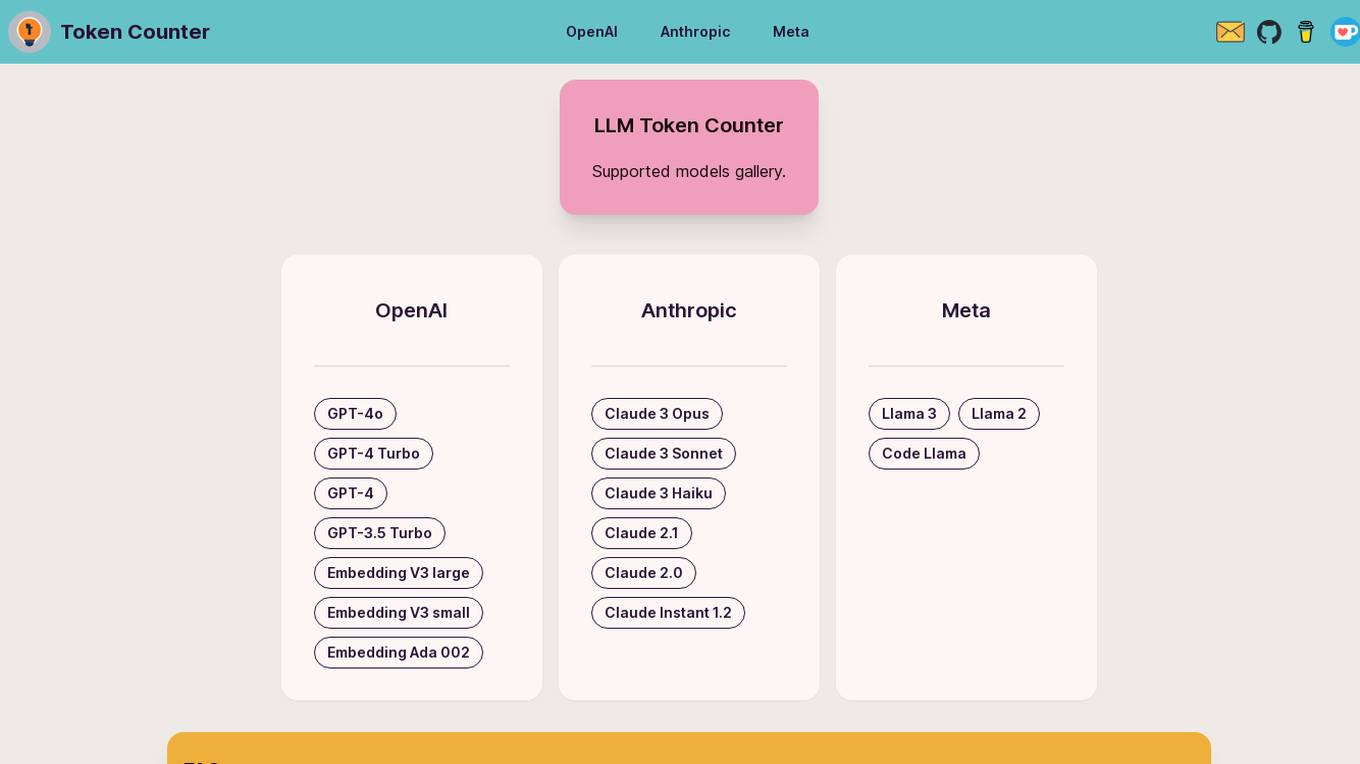

LLM Token Counter

The LLM Token Counter helps users manage token limits for various large language models (LLMs) such as GPT-3.5, GPT-4, Claude-3, Llama-3, and more. It uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library, to calculate token counts client-side. Because counting happens in the browser, prompts are not transmitted to external servers.

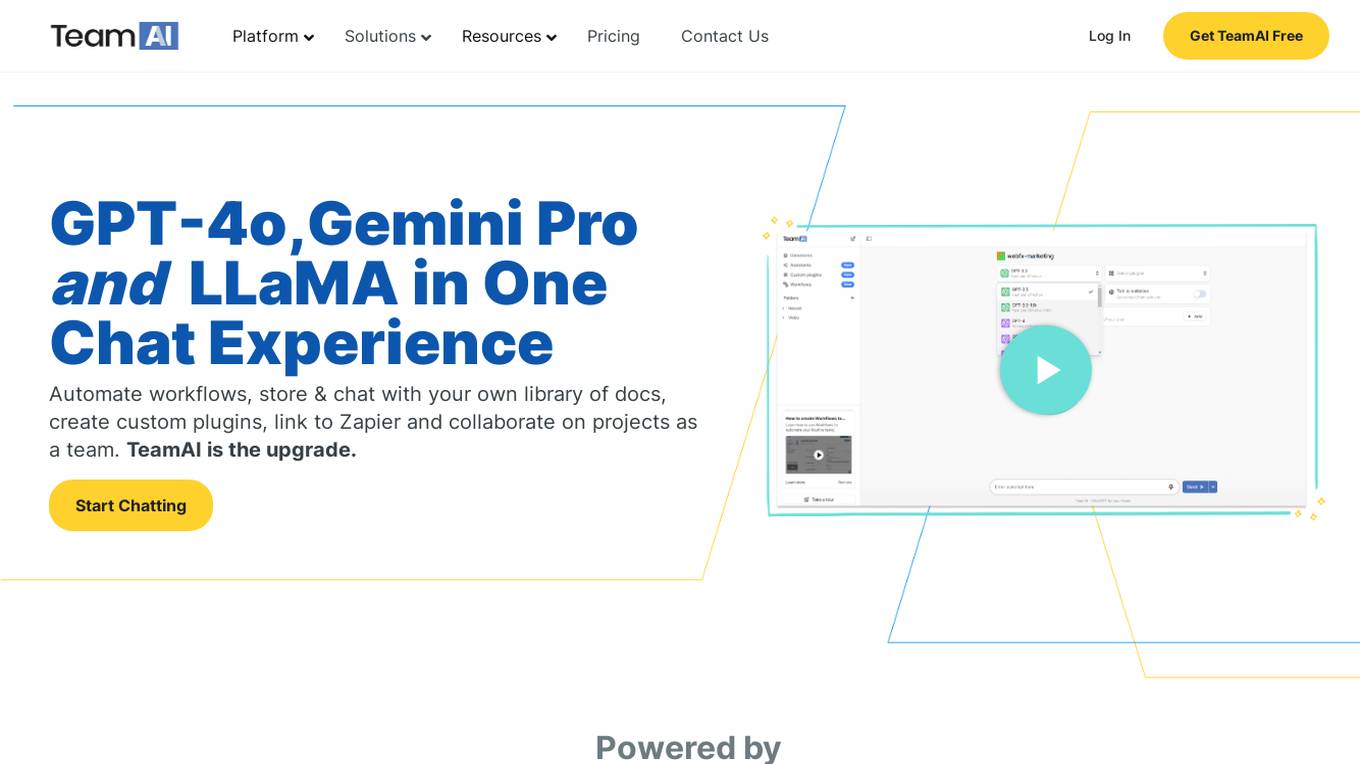

TeamAI

TeamAI is an AI platform that offers a shared workspace for businesses to leverage AI technology in various functions such as sales, marketing, design, HR, and more. It provides custom assistants, automated workflows, collaboration tools, and multiple models to enhance productivity and decision-making. With features like custom plugins, shared prompt libraries, and collaborative workspaces, TeamAI empowers teams to streamline processes and improve efficiency. The platform is designed to help organizations implement AI seamlessly and stay ahead of the curve in a rapidly evolving digital landscape.

dalAI Lama - Neuroscience Meditation

Learn about meditation and neuroscience from AI Lama, which explains both using vivid examples from daily life.

llama-recipes

The llama-recipes repository provides a scalable library for fine-tuning Llama 2, along with example scripts and notebooks to quickly get started with using the Llama 2 models in a variety of use-cases, including fine-tuning for domain adaptation and building LLM-based applications with Llama 2 and other tools in the LLM ecosystem. The examples here showcase how to run Llama 2 locally, in the cloud, and on-prem.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with large language models (LLMs). It allows users to chat with LLMs, execute structured function calls, and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server), as well as the llama.cpp backend server. It works by generating a formal GGML-BNF (GBNF) grammar from the user-defined structures and functions, which llama.cpp then uses to constrain generation to text that is valid under that grammar. In contrast to most GBNF grammar generators, it also supports nested objects, dictionaries, enums, and lists of them.
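
As an illustration of turning a user-defined structure into a grammar, the sketch below builds a small GBNF grammar for a flat JSON object. It is not llama-cpp-agent's API or its generator: `gbnf_for_object`, `gbnf_literal`, and the field-spec format are hypothetical names invented here, whitespace handling and nested types are omitted, and the output has not been validated against llama.cpp.

```python
import json

# Shared rules for primitive types (a simplified, assumed subset of JSON).
BUILTIN_RULES = {
    "string": r'string ::= "\"" [^"\\]* "\""',
    "integer": 'integer ::= "-"? [0-9]+',
}

def gbnf_literal(text):
    """Quote text as a GBNF string literal (JSON escaping used as an approximation)."""
    return json.dumps(text)

def gbnf_for_object(fields):
    """Build a grammar for a compact JSON object with the given fields.

    `fields` maps a field name to "string", "integer", or a list of allowed
    string values (an enum). Field order is fixed by the mapping order.
    """
    members, rules = [], []
    for index, (name, kind) in enumerate(fields.items()):
        key = gbnf_literal(json.dumps(name) + ":")
        if isinstance(kind, list):
            rule = f"enum{index}"
            options = " | ".join(gbnf_literal(json.dumps(v)) for v in kind)
            rules.append(f"{rule} ::= {options}")
        elif kind in BUILTIN_RULES:
            rule = kind
            if BUILTIN_RULES[kind] not in rules:
                rules.append(BUILTIN_RULES[kind])
        else:
            raise ValueError(f"unsupported field type: {kind!r}")
        members.append(f"{key} {rule}")
    root = 'root ::= "{" ' + ' "," '.join(members) + ' "}"'
    return "\n".join([root] + rules)

if __name__ == "__main__":
    print(gbnf_for_object({"name": "string", "color": ["red", "green"]}))
```

A grammar like this restricts the model to emitting an object with exactly the listed keys, which is the property that makes the generated text safe to parse into a typed object.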

llama-on-lambda

This project provides a proof of concept for deploying a scalable, serverless LLM Generative AI inference engine on AWS Lambda. It leverages the llama.cpp project to enable the usage of more accessible CPU and RAM configurations instead of limited and expensive GPU capabilities. By deploying a container with the llama.cpp converted models onto AWS Lambda, this project offers the advantages of scale, minimizing cost, and maximizing compute availability. The project includes AWS CDK code to create and deploy a Lambda function leveraging your model of choice, with a FastAPI frontend accessible from a Lambda URL. It is important to note that you will need ggml quantized versions of your model and model sizes under 6GB, as your inference RAM requirements cannot exceed 9GB or your Lambda function will fail.

llama.cpp

llama.cpp is a C/C++ implementation of inference for LLaMA, a large language model from Meta. It provides a command-line interface and can be used for a variety of tasks, including text generation, translation, and question answering. llama.cpp is highly optimized for performance and runs on a variety of hardware, including CPUs and GPUs.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.
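
The efficiency claims for LoRA and 4-bit quantization follow from simple arithmetic, sketched below. The layer dimensions, rank, and model size are illustrative assumptions rather than figures from LLaMA Factory, and the memory estimate covers weights only (it ignores quantization metadata, activations, and optimizer state).

```python
def full_params(d_out, d_in):
    """Trainable parameters when a d_out x d_in weight matrix is tuned directly."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update B @ A, with B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

def weight_memory_gb(n_params, bits_per_param):
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

if __name__ == "__main__":
    d, r = 4096, 8  # assumed projection size and LoRA rank
    print("full fine-tuning:", full_params(d, d))
    print("LoRA:", lora_params(d, d, r))
    print("reduction factor:", full_params(d, d) // lora_params(d, d, r))
    n = 7_000_000_000  # assumed 7B-parameter model
    print("16-bit weights (GB):", weight_memory_gb(n, 16))
    print("4-bit weights (GB):", weight_memory_gb(n, 4))
```

Under these assumptions a single 4096 x 4096 projection drops from about 16.8 million trainable parameters to 65,536, and storing the frozen base weights in 4 bits instead of 16 cuts their footprint to a quarter.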

llama_cpp.rb

llama_cpp.rb provides Ruby bindings for llama.cpp, a library that allows you to use the Llama language model in your Ruby applications. Llama is a large language model that can be used for a variety of natural language processing tasks, such as text generation, translation, and question answering. This gem is still under development and may change significantly in the future.

Llama-Chinese

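The Llama Chinese Community is an advanced technical community focused on optimizing Llama models for Chinese and building on top of them. **Starting from pre-training on large-scale Chinese data, it has continuously iterated on and upgraded the Chinese capabilities of the Llama2 model [Done].** **It is now continuously iterating on and upgrading the Chinese capabilities of the Llama3 model [Doing].** We warmly welcome developers and researchers who are passionate about large language models (LLMs) to join us.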

llama-gpt

LlamaGPT is a self-hosted, offline, ChatGPT-like chatbot, powered by Llama 2. It is 100% private, with no data leaving your device. It supports various models, including Nous Hermes Llama 2 7B/13B/70B Chat and Code Llama 7B/13B/34B Chat. You can install LlamaGPT on your umbrelOS home server, M1/M2 Mac, or anywhere else with Docker. It also provides an OpenAI-compatible API for easy integration. LlamaGPT is still under development, with plans to add more features such as custom model loading and model switching.

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Llama-3, Meta's new-generation open-source large model. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series (following Phase 1 and Phase 2). The project open-sources a Chinese Llama-3 base model and a Chinese Llama-3-Instruct instruction-tuned model. These models are incrementally pre-trained on a large amount of Chinese data on top of the original Llama-3 and then fine-tuned on selected instruction data, which improves their basic Chinese semantic understanding and instruction following. They achieve significant performance improvements over the corresponding second-generation models.

llama-coder

Llama Coder is a self-hosted GitHub Copilot replacement for VS Code that provides autocomplete using Ollama and Code Llama. It works best on a Mac with an M1/M2/M3 chip or on an RTX 4090, and offers fast performance, no telemetry or tracking, and compatibility with any programming language. Users can install Ollama locally or on a dedicated machine for remote use. The tool supports different models, such as stable-code and codellama, with varying RAM/VRAM requirements, so users can choose a model that fits their hardware. Troubleshooting tips and a changelog are also provided.

export_llama_to_onnx

This tool exports LLMs such as Llama to ONNX files without modifying the transformers modeling_xx_model.py files. Supported models include Llama (Hugging Face format), Baichuan, Alibaba Qwen 1.5/2, ChatGlm2/ChatGlm3, and Gemma. The repository provides usage examples for exporting each model and documents the arguments that configure the export. It notes that FlashAttention and xformers should be uninstalled or disabled before conversion, gives recommendations for handling the kv_cache format and simplifying large ONNX models, and includes a disclaimer about the correctness of exported models and the consequences of using them.

llama-github

Llama-github is a powerful tool that helps retrieve relevant code snippets, issues, and repository information from GitHub based on queries. It empowers AI agents and developers to solve coding tasks efficiently. With features like intelligent GitHub retrieval, repository pool caching, LLM-powered question analysis, and comprehensive context generation, llama-github excels at providing valuable knowledge context for development needs. It supports asynchronous processing, flexible LLM integration, robust authentication options, and logging/error handling for smooth operations and troubleshooting. The vision is to seamlessly integrate with GitHub for AI-driven development solutions, while the roadmap focuses on empowering LLMs to automatically resolve complex coding tasks.

llama-zip

llama-zip is a command-line utility for lossless text compression and decompression. It leverages a user-provided large language model (LLM) as the probabilistic model for an arithmetic coder, achieving high compression ratios for structured or natural language text. The tool is not limited by the LLM's maximum context length and can handle arbitrarily long input text. However, the speed of compression and decompression is limited by the LLM's inference speed.
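
The idea of using a predictive model to drive an arithmetic coder can be sketched in a few lines. This is not llama-zip's code: `toy_model` is a hypothetical stand-in for the LLM (adaptive character frequencies over a tiny assumed alphabet), exact fractions replace the fixed-precision arithmetic a practical coder would use, and the message length is passed to the decoder instead of an end-of-text symbol.

```python
from fractions import Fraction
from math import ceil

ALPHABET = "ab "  # assumed toy alphabet

def toy_model(prefix):
    """Stand-in for an LLM: next-symbol probabilities from add-one-smoothed counts."""
    total = len(prefix) + len(ALPHABET)
    return [(s, Fraction(prefix.count(s) + 1, total)) for s in ALPHABET]

def encode(text):
    """Narrow [low, low + width) by each symbol's probability, then emit bits inside it."""
    low, width = Fraction(0), Fraction(1)
    for i, symbol in enumerate(text):
        for candidate, p in toy_model(text[:i]):
            if candidate == symbol:
                width *= p
                break
            low += width * p  # skip past the sub-interval of an earlier candidate
        else:
            raise ValueError(f"symbol not in alphabet: {symbol!r}")
    n = 1
    while True:  # shortest binary fraction k / 2^n that lies in the final interval
        k = ceil(low * 2**n)
        if Fraction(k, 2**n) < low + width:
            return format(k, f"0{n}b")
        n += 1

def decode(bits, length):
    """Replay the same model and pick, at each step, the sub-interval containing the value."""
    value = Fraction(int(bits, 2), 2 ** len(bits))
    low, width = Fraction(0), Fraction(1)
    out = ""
    for _ in range(length):
        for candidate, p in toy_model(out):
            if value < low + width * p:
                out += candidate
                width *= p
                break
            low += width * p
    return out

if __name__ == "__main__":
    message = "ab ab ab ab"
    bits = encode(message)
    print(len(bits), "bits for", len(message), "characters")
    assert decode(bits, len(message)) == message
```

The better the model predicts the next symbol, the less the interval shrinks and the fewer bits are needed, which is why a strong language model yields high compression ratios and why throughput is bounded by how fast the model can produce predictions.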

llama3-tokenizer-js

llama3-tokenizer-js is a JavaScript tokenizer for LLaMA 3, designed for client-side use in the browser and in Node, with TypeScript support. It calculates token counts accurately, has 0 dependencies, and has optimized running time and a somewhat optimized bundle size. It can encode and decode text, but training is not supported. In the browser it pollutes the global namespace with `llama3Tokenizer`. It is compatible with most LLaMA 3 models released by Facebook in April 2024 and can be adapted to incompatible models by passing custom vocab and merge data. It handles special tokens and fine-tunes. It was developed by belladore.ai with contributions from xenova, blaze2004, imoneoi, and ConProgramming.
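
To show what "vocab and merge data" drive in a byte-pair-encoding tokenizer, here is a minimal sketch of the merge loop. It is written in Python for consistency with the other examples on this page, it is not the library's API, and the merge list is a made-up toy; real LLaMA 3 tokenization also involves byte-level handling, pre-tokenization, and special tokens that are omitted here.

```python
def bpe_encode(word, merges):
    """Split a word into characters, then repeatedly apply the highest-priority merge.

    `merges` is an ordered list of symbol pairs; earlier pairs have higher priority.
    """
    ranks = {pair: i for i, pair in enumerate(merges)}
    parts = list(word)
    while len(parts) > 1:
        # Rank every adjacent pair; unknown pairs get infinite rank.
        candidates = [
            (ranks.get((a, b), float("inf")), i)
            for i, (a, b) in enumerate(zip(parts, parts[1:]))
        ]
        rank, i = min(candidates)
        if rank == float("inf"):
            break  # no applicable merge remains
        parts[i:i + 2] = [parts[i] + parts[i + 1]]
    return parts

# Hypothetical merge table, for illustration only.
TOY_MERGES = [("l", "l"), ("a", "m"), ("ll", "am"), ("llam", "a")]

if __name__ == "__main__":
    print(bpe_encode("llama", TOY_MERGES))
    print(bpe_encode("lama", TOY_MERGES))
```

The number of tokens for a string is the length of the list returned after all merges, so swapping in a different merge table changes the token count for the same text.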

llama-index

This repository, llama-index, contains a collection of apps powered by LlamaIndex. LlamaIndex is an open-source project that provides a simple interface between LLMs and external data sources such as APIs, PDFs, and SQL databases. It provides indices over structured and unstructured data, abstracting away the differences between data sources. The repository includes apps such as chat-with-pdf and summarize-url, which show how LlamaIndex can be used to query PDFs and summarize web pages.