YaneuraOu

YaneuraOu is the world's strongest shogi engine (AI player), winner of WCSC29, and an educational, USI-compliant engine.

Stars: 518

YaneuraOu is the world's strongest shogi engine (AI player), winner of WCSC29 and other prestigious competitions. It is an educational, USI-compliant engine that supports features such as Ponder, MultiPV, and massively parallel search. It runs on many platforms, including Windows, Ubuntu, macOS, and ARM. YaneuraOu also uses its own standard opening book format, can read books on the fly, and provides various maintenance commands for opening books. With support for transposition tables of up to 33TB, it is a powerful and versatile tool for shogi enthusiasts and developers.

README:

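YaneuraOu (やねうら王) is the world's strongest shogi thinking engine. It has won WCSC29 (World Computer Shogi Championship, 2019), the main tournament of the 4th World Shogi AI Denryu-sen (2023), and other events. It is educational and compliant with the USI protocol.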

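- 2024: 34th World Computer Shogi Championship (WCSC34), "お前、CSA会員にならねーか?" won. (Search: YaneuraOu V8.20, GitHub version)

- 2024: 2nd Mynavi News Cup Denryu-sen Hardware-Unified Tournament, "Suisho" (水匠) runner-up. (Search: YaneuraOu V8.10, development version)

- 2023: 4th World Shogi AI Denryu-sen main tournament, "Suisho" won. (Search: YaneuraOu; yaneurao took part as a team member)

- 2023: 1st Mynavi News Cup Denryu-sen Hardware-Unified Tournament, "Suisho" won. (Search: YaneuraOu)

- 2023: 33rd World Computer Shogi Championship (WCSC33), "YaneuraOu" runner-up.

- 2023: 4th World Shogi AI Denryu-sen TSEC4 Final, "YaneuraOu" won the Ai-Ibisha (both sides Static Rook) division and placed 2nd overall.

- 2022: 3rd World Shogi AI Denryu-sen main tournament, "Suisho" won. (Search: YaneuraOu)

- 2021: 2nd World Shogi AI Denryu-sen TSEC, "Suisho" overall winner. (Search: YaneuraOu)

- 2020: 1st World Computer Shogi Online Tournament (WCSO1), "Suisho" won. (Search: YaneuraOu)

- 2019: World Computer Shogi Championship (WCSC29), "やねうら王 with お多福ラボ2019" (YaneuraOu with Otafuku Lab 2019) won.

  - All of the top 8 teams in the final used the YaneuraOu engine.

- 2018: World Computer Shogi Championship (WCSC28), "Hefeweizen" won.

- 2017: World Computer Shogi Championship (WCSC27), "elmo" won.

- 2017: 5th Shogi Denou Tournament (SDT5), "平成将棋合戦ぽんぽこ" (Heisei Shogi Gassen Ponpoko) won.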

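- A thinking engine compliant with the USI protocol.

- Supports declaration wins by entering king (入玉宣言勝ち), the try rule, and similar rules.

- Supports Ponder (thinking on the opponent's time) and StochasticPonder (probabilistic ponder).

- Supports MultiPV (outputting multiple candidate moves).

- Supports various time controls, including byoyomi and Fischer rules.

- Supports massively parallel search, e.g. with 256 threads.

- Uses the YaneuraOu standard book format for the opening book DB.

- Supports reading the opening book DB on the fly (without loading the whole file into memory).

- Supports various commands for maintaining the opening book DB.

- Supports transposition tables of up to 33TB (effectively unlimited).

- Supports many platforms, including Windows, Ubuntu, macOS, and ARM.

- Supports KPPT, KPP_KKPT, and various NNUE evaluation functions.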

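- FukauraOu (ふかうら王) is a dlshogi-compatible engine.

- Supports YaneuraOu's engine options.

- Uses the YaneuraOu standard book format for the opening book DB.

- Supports reading the opening book DB on the fly (without loading the whole file into memory).

- Provides DirectML and TensorRT builds that work even without a GPU.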
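USI engines such as YaneuraOu are driven by a GUI over standard input and output using plain-text commands. The Python sketch below shows the GUI side of a MultiPV request: it builds a typical `usi` / `setoption` / `isready` / `usinewgame` / `position` / `go` sequence and keeps the latest `info ... multipv N ... pv ...` line for each rank. It assumes the standard USI command and `info` field names; the helper names (`build_commands`, `parse_info`, `collect`) are hypothetical, and this is not code from YaneuraOu.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Candidate:
    """One MultiPV line as reported by a USI `info` message."""
    multipv: int = 1          # rank: 1 = best line (engines may omit it when MultiPV is 1)
    depth: int = 0
    score_kind: str = "cp"    # "cp" or "mate"
    score: int = 0
    pv: List[str] = field(default_factory=list)  # moves in USI notation, e.g. "7g7f"


def build_commands(moves: List[str], multipv: int, byoyomi_ms: int) -> List[str]:
    """Commands a GUI might send to ask a USI engine for several candidate moves."""
    position = "position startpos"
    if moves:
        position += " moves " + " ".join(moves)
    return [
        "usi",                                      # engine answers with id/option lines and usiok
        f"setoption name MultiPV value {multipv}",  # options are set before isready
        "isready",                                  # engine answers readyok
        "usinewgame",
        position,
        f"go btime 0 wtime 0 byoyomi {byoyomi_ms}",
    ]


def _to_int(text: str) -> int:
    # "score mate +" (mate of unknown length) carries no number; this sketch maps it to 0.
    try:
        return int(text)
    except ValueError:
        return 0


def parse_info(line: str) -> Optional[Candidate]:
    """Parse an `info ... pv ...` line; return None for any other line."""
    tokens = line.split()
    if len(tokens) < 2 or tokens[0] != "info" or tokens[1] == "string":
        return None
    if "pv" not in tokens:
        return None
    cand = Candidate()
    i = 1
    while i < len(tokens):
        tok = tokens[i]
        if tok == "multipv" and i + 1 < len(tokens):
            cand.multipv = _to_int(tokens[i + 1])
            i += 2
        elif tok == "depth" and i + 1 < len(tokens):
            cand.depth = _to_int(tokens[i + 1])
            i += 2
        elif tok == "score" and i + 2 < len(tokens):
            cand.score_kind = tokens[i + 1]
            cand.score = _to_int(tokens[i + 2])
            i += 3
        elif tok == "pv":
            cand.pv = tokens[i + 1:]  # everything after "pv" is the move sequence
            break
        else:
            i += 1  # skip fields this sketch does not use (seldepth, nodes, nps, ...)
    return cand


def collect(lines: List[str]) -> Dict[int, Candidate]:
    """Keep the latest reported line for each MultiPV rank."""
    best: Dict[int, Candidate] = {}
    for line in lines:
        cand = parse_info(line)
        if cand is not None:
            best[cand.multipv] = cand
    return best
```

Sending these commands to the engine process (for example via `subprocess.Popen` with text pipes) and passing its stdout lines to `collect` would yield one candidate per MultiPV rank. Real engines also emit fields such as `nodes`, `nps`, and `hashfull`, which this sketch simply skips.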
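The 33TB transposition-table limit concerns how large a hash table the engine can address. One common way to index such a table, used in Stockfish (on which much of YaneuraOu's search code is based), maps a 64-bit position key to a cluster by taking the high 64 bits of `key * cluster_count`, so the table size need not be a power of two. The Python sketch below assumes 32-byte clusters and sizes given in MiB. Both are illustrative assumptions, not YaneuraOu's confirmed layout, and the function names are hypothetical.

```python
MASK64 = (1 << 64) - 1
CLUSTER_BYTES = 32  # assumed cluster size (Stockfish uses 32-byte clusters); not confirmed for YaneuraOu


def cluster_count(hash_mb: int) -> int:
    """Number of clusters that fit in a table of `hash_mb` MiB."""
    return hash_mb * 1024 * 1024 // CLUSTER_BYTES


def cluster_index(key: int, clusters: int) -> int:
    """Map a 64-bit key into [0, clusters) using the high half of a 64x64 -> 128-bit product.

    Unlike `key % clusters` this needs no division, and unlike
    `key & (clusters - 1)` it works for any cluster count, not just powers of two.
    """
    return ((key & MASK64) * clusters) >> 64


# Example: a 33 TiB table (33 * 1024 * 1024 MiB) under the 32-byte assumption.
huge = cluster_count(33 * 1024 * 1024)
```

In C++ the same product is usually computed with a 128-bit multiply (for example `unsigned __int128` on GCC and Clang). Python's arbitrary-precision integers make it a one-liner.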
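Reading the opening book "on the fly" means looking positions up in the file instead of loading it into memory. If the `sfen` records are sorted, a lookup can binary-search over byte offsets: seek to the middle, skip to the next record, compare, and repeat. The Python sketch below assumes a simplified layout: a `#YANEURAOU-DB2016 1.00` header, then `sfen <position>` lines sorted as plain byte strings, each followed by move lines such as `7g7f 3c3d 60 34 20`. The layout details, the numbers in the sample book, and the function names are illustrative assumptions, not YaneuraOu's actual reader.

```python
import io
from typing import BinaryIO, List, Optional, Tuple


def _first_sfen_at_or_after(f: BinaryIO, offset: int) -> Optional[Tuple[int, bytes]]:
    """Return (line_start, key) of the first 'sfen ' line that starts at byte >= offset."""
    if offset > 0:
        f.seek(offset - 1)
        f.readline()  # finish the (possibly partial) line containing byte offset-1
    else:
        f.seek(0)
    while True:
        start = f.tell()
        line = f.readline()
        if not line:
            return None  # EOF: no record at or after offset
        if line.startswith(b"sfen "):
            return start, line[5:].rstrip(b"\r\n")


def lookup(f: BinaryIO, position: str) -> List[str]:
    """Binary-search a sorted book file for `position` and return its move lines."""
    key = position.encode("ascii")
    lo, hi = 0, f.seek(0, io.SEEK_END)
    # Lower bound: smallest offset whose next record has a key >= the target.
    while lo < hi:
        mid = (lo + hi) // 2
        rec = _first_sfen_at_or_after(f, mid)
        if rec is None or rec[1] >= key:
            hi = mid
        else:
            lo = mid + 1
    rec = _first_sfen_at_or_after(f, lo)
    if rec is None or rec[1] != key:
        return []  # position not in the book
    f.seek(rec[0])
    f.readline()  # skip the sfen line itself
    moves = []
    for raw in iter(f.readline, b""):
        if raw.startswith(b"sfen "):
            break  # the next position's record begins
        text = raw.decode("ascii").strip()
        if text and not text.startswith("#"):
            moves.append(text)
    return moves


# Toy book: records sorted by their sfen strings (after 7g7f, after 2g2f, start position).
BOOK = (
    b"#YANEURAOU-DB2016 1.00\n"
    b"sfen lnsgkgsnl/1r5b1/ppppppppp/9/9/2P6/PP1PPPPPP/1B5R1/LNSGKGSNL w - 2\n"
    b"3c3d 2g2f 50 32 10\n"
    b"8c8d 2g2f 30 30 4\n"
    b"sfen lnsgkgsnl/1r5b1/ppppppppp/9/9/7P1/PPPPPPP1P/1B5R1/LNSGKGSNL w - 2\n"
    b"8c8d 7g7f 40 32 6\n"
    b"sfen lnsgkgsnl/1r5b1/ppppppppp/9/9/9/PPPPPPPPP/1B5R1/LNSGKGSNL b - 1\n"
    b"7g7f 3c3d 60 34 20\n"
    b"2g2f 8c8d 55 34 15\n"
)
STARTPOS = "lnsgkgsnl/1r5b1/ppppppppp/9/9/9/PPPPPPPPP/1B5R1/LNSGKGSNL b - 1"
```

The number of probes grows only logarithmically with the file size, so lookups stay cheap even for very large books. A real file opened in binary mode (`open(path, "rb")`) can be passed in place of the `io.BytesIO` used for the toy book.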

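| Article | Link | Level |
|---|---|---|
| Installing YaneuraOu | YaneuraOu installation guide | Introductory |
| Installing FukauraOu | FukauraOu installation guide | Intermediate |
| Recommended engine settings for YaneuraOu | Recommended YaneuraOu engine settings | Introductory |
| Recommended engine settings for FukauraOu | Recommended FukauraOu engine settings | Introductory |
| YaneuraOu's engine options | Engine options | Introductory to intermediate |
| The YaneuraOu tsume-shogi engine | YaneuraOu tsume-shogi engine | Introductory to intermediate |
| Frequently asked questions about YaneuraOu | FAQ | Beginner to intermediate |
| YaneuraOu's hidden features | Hidden features | Intermediate to advanced |
| Creating opening books for YaneuraOu | Creating opening books | Intermediate to advanced |
| YaneuraOu's USI extension commands | USI extension commands | For developers |
| Building YaneuraOu | YaneuraOu build instructions | For developers |
| Building FukauraOu | FukauraOu build instructions | For developers |
| YaneuraOu source code walkthrough | YaneuraOu source code walkthrough | For developers |
| Running YaneuraOu on AWS | YaneuraOu on AWS | Intermediate to developer |
| Settings for entering tournaments | Settings for entering tournaments | Developer |
| YaneuraOu's learning commands | YaneuraOu learning commands | Developer |
| FukauraOu training procedure | FukauraOu training procedure | Developer |
| Self-play between USI engines | Self-play between USI engines | Intermediate to developer |
| Automatic parameter tuning framework | Automatic parameter tuning framework | Developer |
| Measurement data for the search component | Search measurement data | Developer |
| Deprecated commands, options, etc. | Past materials | Developer |
| YaneuraOu changelog | YaneuraOu changelog | Developer |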

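| Project | Status |
|---|---|
| YaneuraOu | Under active development. |
| FukauraOu | Under active development. |
| YaneuraOu Tsume-Shogi Engine V2 | A tsume-shogi (mating problem) engine that solves long mates with little memory. |
| Bloodgate | A game server intended to replace floodgate. |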

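Past subprojects, such as YaneuraOu nano, mini, and classic, Ote Shogi (王手将棋), Toru Itte Shogi (取る一手将棋), the cooperative-mate (協力詰め) solver, and the continuous self-play framework, are available here.

Below is a list of headings of related articles on the official YaneuraOu blog.

The blog has detailed material on each engine option, downloadable opening book files, book generation methods, and more. Everything you might want to know, from beginner to developer, is there.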

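Much of the YaneuraOu project's source code uses Stockfish code as is, and some parts are based on Apery/SilentMajority, so the YaneuraOu project follows those projects' license (GPLv3).

The "Re:Zero evaluation function file" (リゼロ評価関数ファイル) is original to the YaneuraOu project, but we claim no rights to it whatsoever, so feel free to use it.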

These are the main sites for the latest YaneuraOu news.

| Site | Link |
|---|---|
| Official YaneuraOu blog | https://yaneuraou.yaneu.com/ |
| YaneuraOu mini official site (explanatory articles, etc.) | http://yaneuraou.yaneu.com/YaneuraOu_Mini/ |
| YaneuraOu on Twitter | https://twitter.com/yaneuraou |
| Official YaneuraOu channel (YouTube) | https://www.youtube.com/c/yanechan |

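The official YaneuraOu blog above publishes a great deal of information about computer shogi, so even if you are not interested in YaneuraOu itself, it should be a very useful reference if you want to develop a computer shogi program.

Please post questions about YaneuraOu in the comments section of this blog post: https://yaneuraou.yaneu.com/2022/05/19/yaneuraou-question-box/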

Alternative AI tools for YaneuraOu

Similar Open Source Tools

PyTorch-Tutorial-2nd

The second edition of "PyTorch Practical Tutorial" was completed after 5 years, 4 years, and 2 years. Building on the essence of the first edition, it adds rich, detailed deep learning application cases and inference deployment frameworks, so that the book covers the knowledge deep learning engineers need more systematically. As new artificial intelligence technologies keep emerging, the second edition is not the end but a beginning, opening up new technologies, new fields, and new chapters. I hope to keep learning and making progress in artificial intelligence technology together with you.

poco-agent

Poco Agent is a cloud-based tool that provides a secure sandbox environment for running tasks without affecting the host machine. It offers a modern UI with mobile adaptability, easy configuration through Docker, and extensive capabilities with support for MCP protocol and custom skills. Users can run tasks asynchronously and schedule them, even when the web interface is closed. Additional features include a built-in browser for internet research and GitHub repository integration. Poco Agent aims to be a more secure, visually appealing, and user-friendly alternative to OpenClaw.

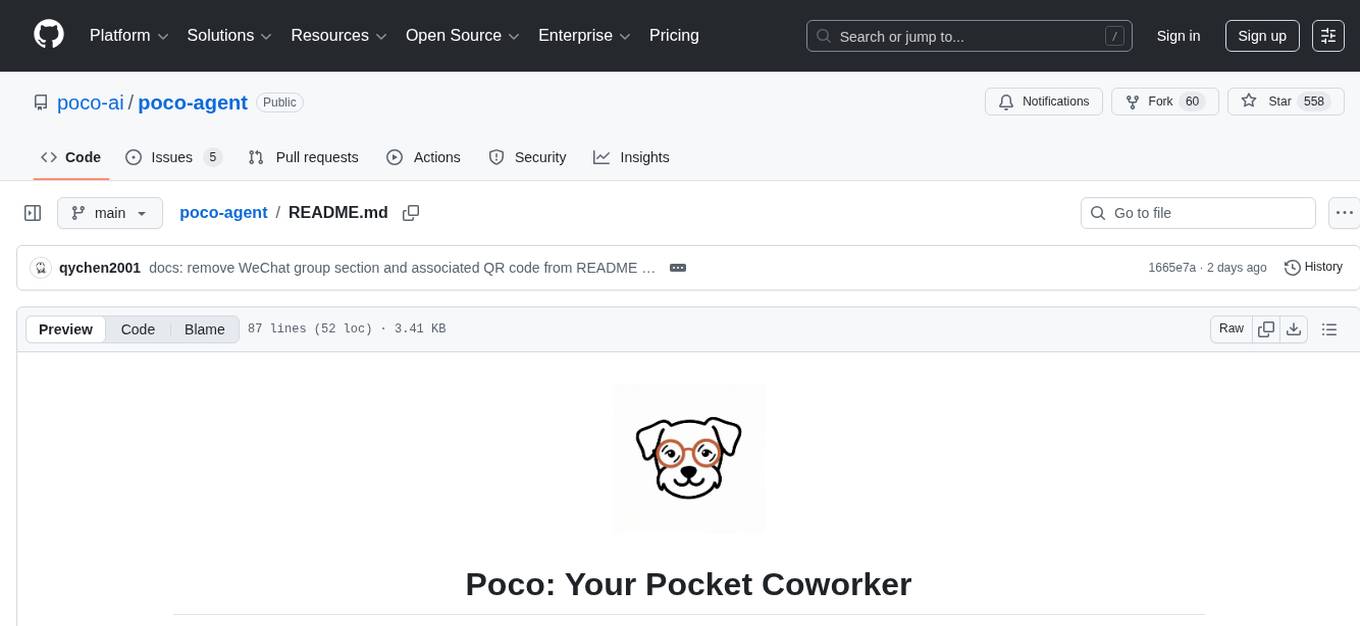

intlayer

Intlayer is an open-source, flexible i18n toolkit with AI-powered translation and CMS capabilities. It is a modern i18n solution for web and mobile apps, framework-agnostic, and includes features like per-locale content files, TypeScript autocompletion, tree-shakable dictionaries, and CI/CD integration. With Intlayer, internationalization becomes faster, cleaner, and smarter, offering benefits such as cross-framework support, JavaScript-powered content management, simplified setup, enhanced routing, AI-powered translation, and more.

bitcart

Bitcart is a platform designed for merchants, users, and developers, providing easy setup and usage. It includes various linked repositories for core daemons, admin panel, ready store, Docker packaging, Python library for coins connection, BitCCL scripting language, documentation, and official site. The platform aims to simplify the process for merchants and developers to interact and transact with cryptocurrencies, offering a comprehensive ecosystem for managing transactions and payments.

codemod

The Codemod platform helps developers create, distribute, and run codemods in codebases of any size. Its AI-powered, community-led codemods automate framework upgrades, large refactorings, and boilerplate programming quickly and with a good developer experience. It aims to make dream migrations a reality for developers by providing a platform for seamless codemod operations.

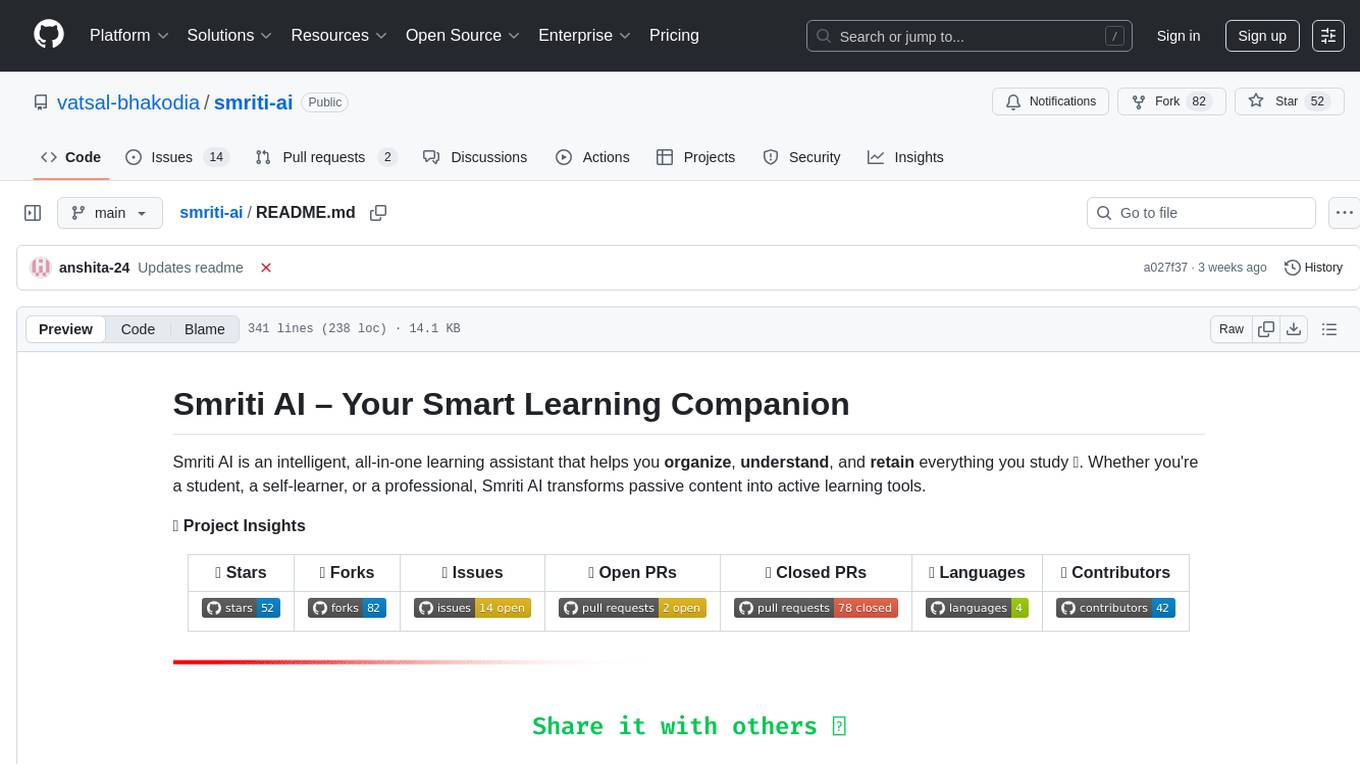

smriti-ai

Smriti AI is an intelligent learning assistant that helps users organize, understand, and retain study materials. It transforms passive content into active learning tools by capturing resources, converting them into summaries and quizzes, providing spaced revision with reminders, tracking progress, and offering a multimodal interface. Suitable for students, self-learners, professionals, educators, and coaching institutes.

matrixone

MatrixOne is the industry's first database to bring Git-style version control to data, combined with MySQL compatibility, AI-native capabilities, and cloud-native architecture. It is an HTAP (Hybrid Transactional/Analytical Processing) database with a hyper-converged HSTAP engine that handles transactional, analytical, full-text search, and vector search workloads in a single unified system: no data movement, no ETL, no compromises. You can manage your database like code with features such as instant snapshots, time travel, branch and merge, instant rollback, and a complete audit trail. Built for the AI era, MatrixOne is MySQL-compatible, AI-native, and cloud-native, offering storage-compute separation, elastic scaling, and Kubernetes-native deployment. It serves as one database for everything, replacing multiple databases and ETL jobs with native OLTP, OLAP, full-text search, and vector search capabilities.

anylabeling

AnyLabeling is a tool for effortless data labeling with AI support from YOLO and Segment Anything. It combines features from LabelImg and Labelme with an improved UI and auto-labeling capabilities. Users can annotate images with polygons, rectangles, circles, lines, and points, as well as perform auto-labeling using YOLOv5 and Segment Anything. The tool also supports text detection, recognition, and Key Information Extraction (KIE) labeling, with multiple language options available such as English, Vietnamese, and Chinese.

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

MaixPy

MaixPy is a Python SDK that enables users to easily create AI vision projects on edge devices. It provides a user-friendly API for accessing NPU, making it suitable for AI Algorithm Engineers, STEM teachers, Makers, Engineers, Students, Enterprises, and Contestants. The tool supports Python programming, MaixVision Workstation, AI vision, video streaming, voice recognition, and peripheral usage. It also offers an online AI training platform called MaixHub. MaixPy is designed for new hardware platforms like MaixCAM, offering improved performance and features compared to older versions. The ecosystem includes hardware, software, tools, documentation, and a cloud platform.

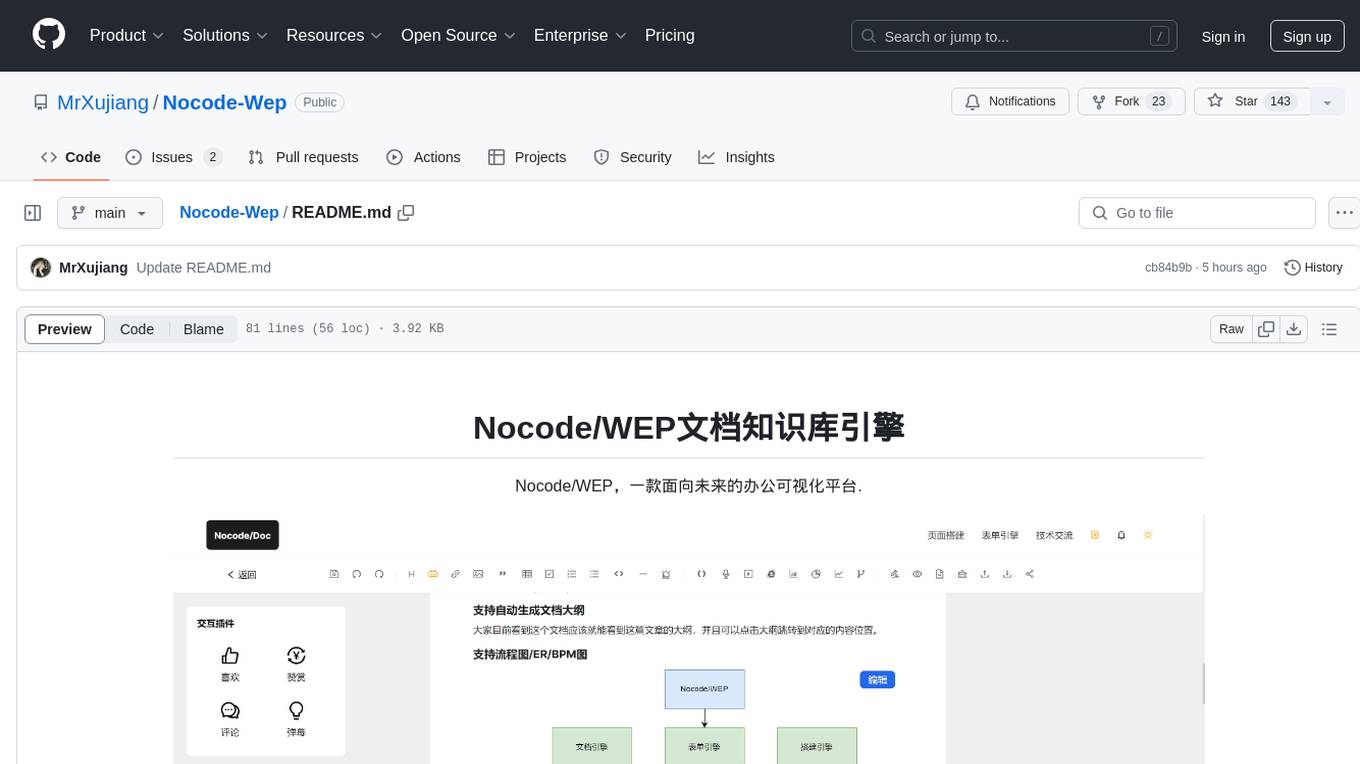

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

WFGY

WFGY is a lightweight and user-friendly tool for generating random data. It provides a simple interface to create custom datasets for testing, development, and other purposes. With WFGY, users can easily specify the data types, formats, and constraints for each field in the dataset. The tool supports various data types such as strings, numbers, dates, and more, allowing users to generate realistic and diverse datasets efficiently. WFGY is suitable for developers, testers, data scientists, and anyone who needs to create sample data for their projects quickly and effortlessly.

nacos

Nacos is an easy-to-use platform for dynamic service discovery, configuration, and service management. It makes it easy to build cloud-native applications and microservice platforms. Nacos provides service discovery, health checks, dynamic configuration management, dynamic DNS service, and service metadata management.

Ultimate-Data-Science-Toolkit---From-Python-Basics-to-GenerativeAI

Ultimate Data Science Toolkit is a comprehensive repository covering Python basics to Generative AI. It includes modules on Python programming, data analysis, statistics, machine learning, MLOps, case studies, and deep learning. The repository provides detailed tutorials on various topics such as Python data structures, control statements, functions, modules, object-oriented programming, exception handling, file handling, web API, databases, list comprehension, lambda functions, Pandas, Numpy, data visualization, statistical analysis, supervised and unsupervised machine learning algorithms, model serialization, ML pipeline orchestration, case studies, and deep learning concepts like neural networks and autoencoders.

For similar tasks

Bagatur

Bagatur is a powerful Java chess engine that runs on Android devices and desktop computers. It supports the UCI protocol and can be easily integrated into chess programs with user interfaces. The engine is available for download on various platforms and has advanced features like SMP (multicore) support and an NNUE evaluation function. Bagatur also includes Syzygy endgame tablebases and offers various UCI options for customization. The project started as a personal challenge to create a chess program that could defeat a friend, leading to years of development and improvements.

katrain

KaTrain is a tool designed for analyzing games and playing go with AI feedback from KataGo. Users can review their games to find costly moves, play against AI with immediate feedback, play against weakened AI versions, and generate focused SGF reviews. The tool provides various features such as previews, tutorials, installation instructions, and configuration options for KataGo. Users can play against AI, receive instant feedback on moves, explore variations, and request in-depth analysis. KaTrain also supports distributed training for contributing to KataGo's strength and training bigger models. The tool offers themes customization, FAQ section, and opportunities for support and contribution through GitHub issues and Discord community.

SyPB

SyPB is a Counter-Strike 1.6 bot based on YaPB 2.7.2. It provides an NPC system and an AMXX API for enhancing gameplay. The tool is designed for Windows users and can be downloaded from the provided link. SyPB aims to improve the gaming experience by offering advanced bot functionality and integration with the AMXX API.

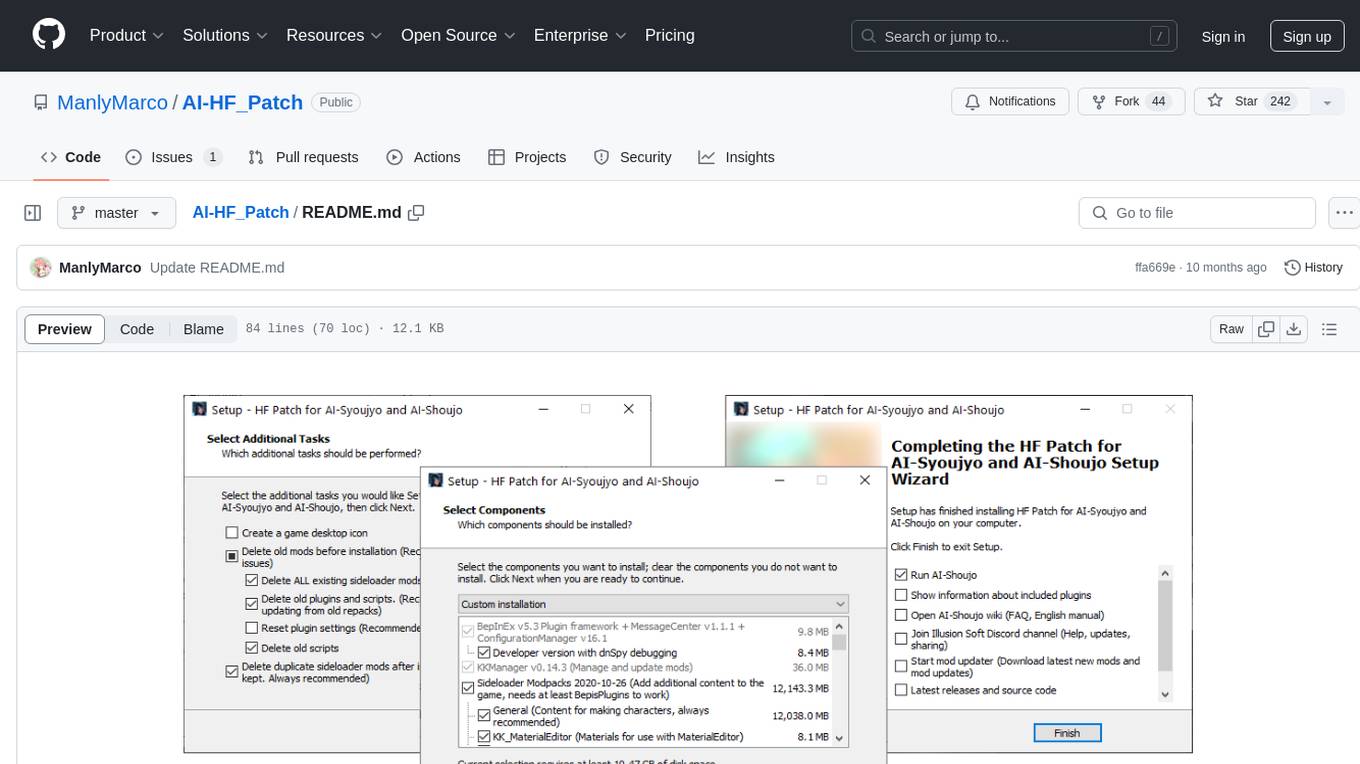

AI-HF_Patch

AI-HF_Patch is a comprehensive patch for AI-Shoujo that includes all free updates, fan-made English translations, essential mods, and gameplay improvements. It ensures compatibility with character cards and scenes while maintaining the original game's feel. The patch addresses common issues and provides uncensoring options. Users can support development through Patreon. The patch does not include the full game or pirated content, requiring a separate purchase from Steam. Installation is straightforward, with detailed guides available for users.

Mortal

Mortal (凡夫) is a free and open source AI for Japanese mahjong, powered by deep reinforcement learning. It provides a comprehensive solution for playing Japanese mahjong with AI assistance. The project focuses on utilizing deep reinforcement learning techniques to enhance gameplay and decision-making in Japanese mahjong. Mortal offers a user-friendly interface and detailed documentation to assist users in understanding and utilizing the AI effectively. The project is actively maintained and welcomes contributions from the community to further improve the AI's capabilities and performance.

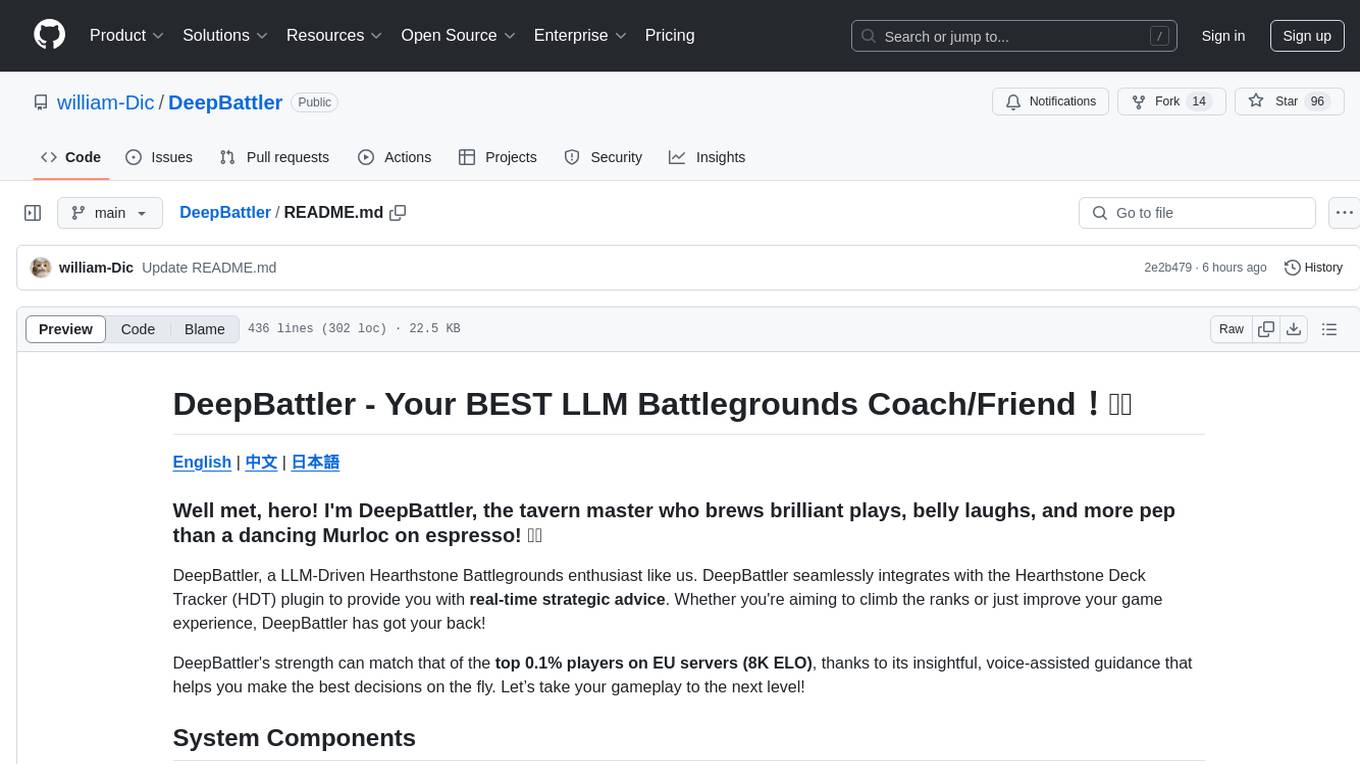

DeepBattler

DeepBattler is a tool designed for Hearthstone Battlegrounds players, providing real-time strategic advice and insights to improve gameplay experience. It integrates with the Hearthstone Deck Tracker plugin and offers voice-assisted guidance. The tool is powered by a large language model (LLM) and can match the strength of top players on EU servers. Users can set up the tool by adding dependencies, configuring the plugin path, and launching the LLM agent. DeepBattler is licensed for personal, educational, and non-commercial use, with guidelines on non-commercial distribution and acknowledgment of external contributions.

tt-metal

TT-NN is a Python and C++ neural network op library. It provides a low-level programming model, TT-Metalium, that enables kernel development for Tenstorrent hardware.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage per user and per organization
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries, and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.