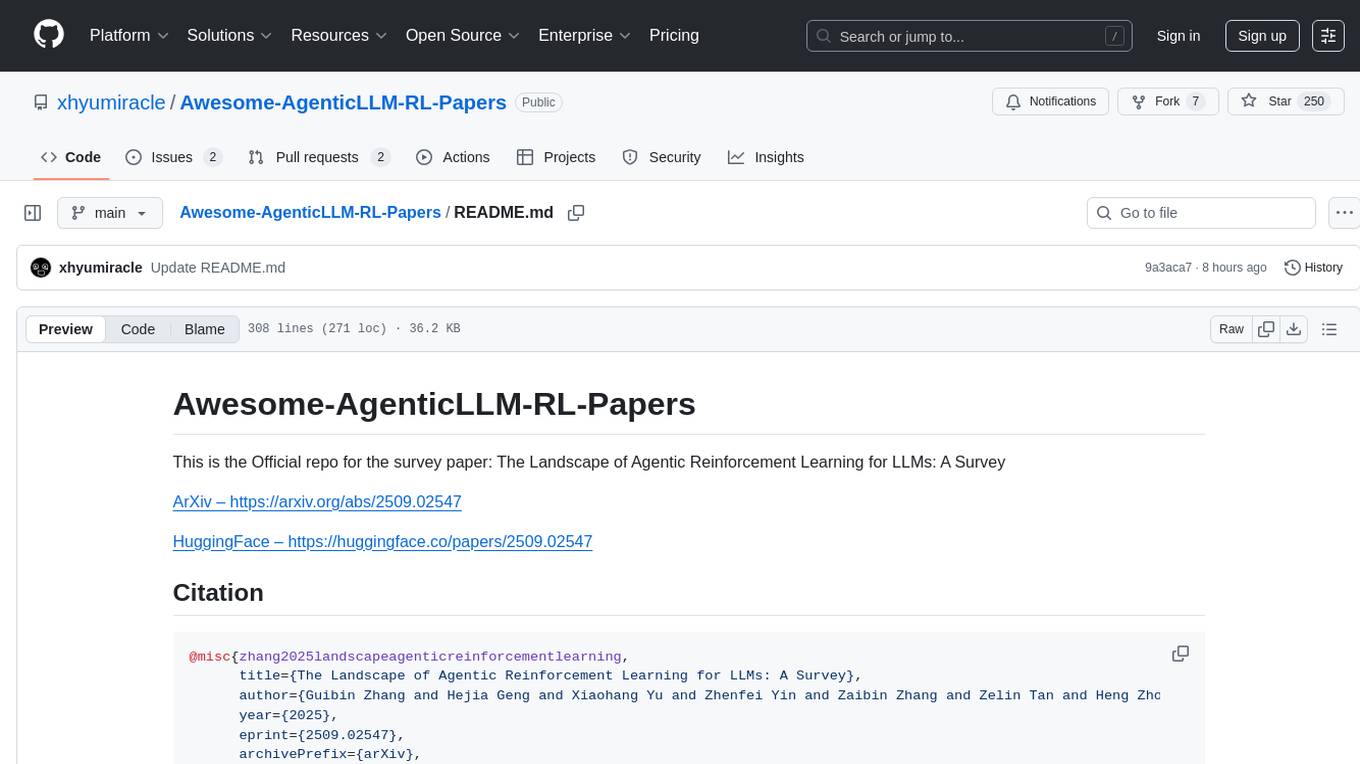

Awesome-AgenticLLM-RL-Papers

Stars: 245

This repository serves as the official source for the survey paper 'The Landscape of Agentic Reinforcement Learning for LLMs: A Survey'. It provides an extensive overview of various algorithms, methods, and frameworks related to Agentic RL, including detailed information on different families of algorithms, their key mechanisms, objectives, and links to relevant papers and resources. The repository covers a wide range of tasks such as Search & Research Agent, Code Agent, Mathematical Agent, GUI Agent, RL in Vision Agents, RL in Embodied Agents, and RL in Multi-Agent Systems. Additionally, it includes information on environments, frameworks, and methods suitable for different tasks related to Agentic RL and LLMs.

README:

This is the official repository for the survey paper: The Landscape of Agentic Reinforcement Learning for LLMs: A Survey

ArXiv – https://arxiv.org/abs/2509.02547

HuggingFace – https://huggingface.co/papers/2509.02547

@misc{zhang2025landscapeagenticreinforcementlearning,
  title={The Landscape of Agentic Reinforcement Learning for LLMs: A Survey},
  author={Guibin Zhang and Hejia Geng and Xiaohang Yu and Zhenfei Yin and Zaibin Zhang and Zelin Tan and Heng Zhou and Zhongzhi Li and Xiangyuan Xue and Yijiang Li and Yifan Zhou and Yang Chen and Chen Zhang and Yutao Fan and Zihu Wang and Songtao Huang and Yue Liao and Hongru Wang and Mengyue Yang and Heng Ji and Michael Littman and Jun Wang and Shuicheng Yan and Philip Torr and Lei Bai},
  year={2025},
  eprint={2509.02547},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2509.02547},
}

Clip means preventing the policy ratio from moving too far from 1, which keeps updates stable.

KL penalty means penalizing the KL divergence between the learned policy and the reference policy, which keeps the learned policy aligned with the reference.
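The two definitions above can be illustrated with a minimal sketch in plain Python. It is not taken from any of the listed papers: the function names, the default `clip_eps=0.2`, the coefficient `beta`, and the simple sample-based KL estimate are all assumptions chosen for illustration, and the inputs are per-token log-probabilities supplied by the caller.

```python
import math

def clipped_surrogate(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (to be maximized).

    clip_eps is an illustrative default, not a value from the survey.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # policy ratio pi_new / pi_old
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Taking the minimum removes any gain from pushing the ratio
        # outside [1 - clip_eps, 1 + clip_eps].
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)

def kl_penalty(logp_new, logp_ref):
    """Simple sample-based estimate of KL(pi_new || pi_ref).

    Assumes the tokens were sampled from the learned policy.
    """
    diffs = [lp_new - lp_ref for lp_new, lp_ref in zip(logp_new, logp_ref)]
    return sum(diffs) / len(diffs)

def penalized_objective(logp_new, logp_old, logp_ref, advantages,
                        clip_eps=0.2, beta=0.05):
    """Clipped surrogate minus a KL penalty weighted by beta (hypothetical value)."""
    surrogate = clipped_surrogate(logp_new, logp_old, advantages, clip_eps)
    return surrogate - beta * kl_penalty(logp_new, logp_ref)
```

In table terms, a method marked "Yes" under Clip uses something like `clipped_surrogate`, and a method marked "Yes" under KL Penalty subtracts a term like `beta * kl_penalty(...)`. Methods marked "Adaptive" would vary `beta` during training, which this sketch does not model.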

| Method | Year | Objective Type | Clip | KL Penalty | Key Mechanism | Signal | Link | Resource |

|---|---|---|---|---|---|---|---|---|

| PPO family | ||||||||

| PPO | 2017 | Policy gradient | Yes | No | Policy ratio clipping | Reward | Paper | - |

| VAPO | 2025 | Policy gradient | Yes | Adaptive | Adaptive KL penalty + variance control | Reward + variance signal | Paper | - |

| PF-PPO | 2024 | Policy gradient | Yes | Yes | Policy filtration | Noisy reward | Paper | Code |

| VinePPO | 2024 | Policy gradient | Yes | Yes | Unbiased value estimates | Reward | Paper | Code |

| PSGPO | 2024 | Policy gradient | Yes | Yes | Process supervision | Process Reward | Paper | - |

| DPO family | ||||||||

| DPO | 2024 | Preference optimization | No | Yes | Implicit reward related to the policy | Human preference | Paper | - |

| β-DPO | 2024 | Preference optimization | No | Adaptive | Dynamic KL coefficient | Human preference | Paper | Code |

| SimPO | 2024 | Preference optimization | No | Scaled | Use avg log-prob of a sequence as implicit reward | Human preference | Paper | Code |

| IPO | 2024 | Implicit preference | No | No | LLMs as preference classifiers | Preference rank | Paper | - |

| KTO | 2024 | Knowledge transfer optimization | No | Yes | Teacher stabilization | Teacher-student logit | Paper | Code Model |

| ORPO | 2024 | Online regularized preference optimization | No | Yes | Online stabilization | Online feedback reward | Paper | Code Model |

| Step-DPO | 2024 | Preference optimization | No | Yes | Step-wise supervision | Step-wise preference | Paper | Code Model |

| LCPO | 2025 | Preference optimization | No | Yes | Length preference with limited data/training | Reward | Paper | - |

| GRPO family | ||||||||

| GRPO | 2025 | Policy gradient under group-based reward | Yes | Yes | Group-based relative reward to eliminate value estimates | Group-based reward | Paper | - |

| DAPO | 2025 | Surrogate of GRPO's | Yes | Yes | Decoupled clip + dynamic sampling | Dynamic group-based reward | Paper | Code Model Website |

| GSPO | 2025 | Surrogate of GRPO's | Yes | Yes | Sequence-level clipping, rewarding, optimization | Smooth group-based reward | Paper | - |

| GMPO | 2025 | Surrogate of GRPO's | Yes | Yes | Geometric mean of token-level rewards | Margin-based reward | Paper | Code |

| ProRL | 2025 | Same as GRPO's | Yes | Yes | Reference policy reset | Group-based reward | Paper | Model |

| Posterior-GRPO | 2025 | Same as GRPO's | Yes | Yes | Reward only successful processes | Process-based reward | Paper | - |

| Dr.GRPO | 2025 | Unbiased GRPO objective | Yes | Yes | Eliminate bias in optimization | Group-based reward | Paper | Code Model |

| Step-GRPO | 2025 | Same as GRPO's | Yes | Yes | Rule-based reasoning rewards | Step-wise reward | Paper | Code Model |

| SRPO | 2025 | Same as GRPO's | Yes | Yes | Two-staged history-resampling | Reward | Paper | Model |

| GRESO | 2025 | Same as GRPO's | Yes | Yes | Pre-rollout filtering | Reward | Paper | Code Website |

| StarPO | 2025 | Same as GRPO's | Yes | Yes | Reasoning-guided actions for multi-turn interactions | Group-based reward | Paper | Code Website |

| GHPO | 2025 | Policy gradient | Yes | Yes | Adaptive prompt refinement | Reward | Paper | Code |

| Skywork R1V2 | 2025 | GRPO with hybrid reward signal | Yes | Yes | Selective sample buffer | Multimodal reward | Paper | Code Model |

| ASPO | 2025 | GRPO with shaped advantage | Yes | Yes | Clipped bias to advantage | Group-based reward | Paper | Code Model |

| TreePO | 2025 | Same as GRPO's | Yes | Yes | Self-guided rollout, reduced compute burden | Group-based reward | Paper | Code Model Website |

| EDGE-GRPO | 2025 | Same as GRPO's | Yes | Yes | Entropy-driven advantage + error correction | Group-based reward | Paper | Code Model |

| DARS | 2025 | Same as GRPO's | Yes | No | Multi-stage rollout for hardest problems | Group-based reward | Paper | Code Model |

| CHORD | 2025 | Weighted GRPO + SFT | Yes | Yes | Auxiliary supervised loss | Group-based reward | Paper | Code |

| PAPO | 2025 | Surrogate of GRPO's | Yes | Yes | Implicit Perception Loss | Group-based reward | Paper | Code Model Website |

| Pass@k Training | 2025 | Same as GRPO's | Yes | Yes | Pass@k metric as reward | Group-based reward | Paper | Code |
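The GRPO row in the table above lists its key mechanism as a group-based relative reward that eliminates value estimates. A minimal sketch of that idea follows, assuming the commonly described form in which each sampled response's reward is normalized by the mean and standard deviation of its own group. The function name, the use of the population standard deviation, and the `eps` stabilizer are assumptions for illustration, not details confirmed by this repository.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against the other responses to the same prompt.

    rewards: scalar rewards for a group of responses sampled for one prompt.
    eps: small constant (hypothetical value) that avoids division by zero
         when every response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]

if __name__ == "__main__":
    # Four responses to one prompt, scored 1.0 if correct and 0.0 otherwise.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Because the baseline is the group mean, no learned value network is needed. When all responses in a group score the same, every advantage is zero and the group contributes no gradient signal.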

| Method | Category | Base LLM | Link | Resource |

|---|---|---|---|---|

| Open Source Methods | ||||

| DeepRetrieval | External | Qwen2.5-3B-Instruct, Llama-3.2-3B-Instruct | Paper | Code |

| Search-R1 | External | Qwen2.5-3B/7B-Base/Instruct | Paper | Code |

| R1-Searcher | External | Qwen2.5-7B, Llama3.1-8B-Instruct | Paper | Code |

| R1-Searcher++ | External | Qwen2.5-7B-Instruct | Paper | Code |

| ReSearch | External | Qwen2.5-7B/32B-Instruct | Paper | Code |

| StepSearch | External | Qwen2.5-3B/7B-Base/Instruct | Paper | Code |

| WebDancer | External | Qwen2.5-7B/32B, QwQ-32B | Paper | Code |

| WebThinker | External | QwQ-32B, DeepSeek-R1-Distill-Qwen-7B/14B/32B, Qwen2.5-32B-Instruct | Paper | Code |

| WebSailor | External | Qwen2.5-3B/7B/32B/72B | Paper | Code |

| WebWatcher | External | Qwen2.5-VL-7B/32B | Paper | Code |

| ASearcher | External | Qwen2.5-7B/14B, QwQ-32B | Paper | Code |

| ZeroSearch | Internal | Qwen2.5-3B/7B-Base/Instruct | Paper | Code |

| SSRL | Internal | Qwen2.5-1.5B/3B/7B/14B/32B/72B-Instruct, Llama-3.2-1B/8B-Instruct, Llama-3.1-8B/70B-Instruct, Qwen3-0.6B/1.7B/4B/8B/14B/32B | Paper | Code |

| Closed Source Methods | ||||

| OpenAI Deep Research | External | OpenAI Models | Blog | Website |

| Perplexity’s DeepResearch | External | - | Blog | Website |

| Google Gemini’s DeepResearch | External | Gemini | Blog | Website |

| Kimi-Researcher | External | Kimi K2 | Blog | Website |

| Grok AI DeepSearch | External | Grok3 | Blog | Website |

| Doubao with Deep Think | External | Doubao | Blog | Website |

| Method | RL Reward Type | Base LLM | Link | Resource |

|---|---|---|---|---|

| RL for Code Generation | ||||

| AceCoder | Outcome | Qwen2.5-Coder-7B-Base/Instruct, Qwen2.5-7B-Instruct | Paper | Code |

| DeepCoder-14B | Outcome | DeepSeek-R1-Distill-Qwen-14B | Blog | Code |

| RLTF | Outcome | CodeGen-NL 2.7B, CodeT5 | Paper | Code |

| CURE | Outcome | Qwen2.5-7B/14B-Instruct, Qwen3-4B | Paper | Code |

| Absolute Zero | Outcome | Qwen2.5-7B/14B, Qwen2.5-Coder-3B/7B/14B, Llama-3.1-8B | Paper | Code |

| StepCoder | Process | DeepSeek-Coder-Instruct-6.7B | Paper | Code |

| Process Supervision-Guided PO | Process | - | Paper | - |

| CodeBoost | Process | Qwen2.5-Coder-7B-Instruct, Llama-3.1-8B-Instruct, Seed-Coder-8B-Instruct, Yi-Coder-9B-Chat | Paper | Code |

| PRLCoder | Process | CodeT5+, Unixcoder, T5-base | Paper | - |

| o1-Coder | Process | DeepSeek-1.3B-Instruct | Paper | Code |

| CodeFavor | Process | Mistral-NeMo-12B-Instruct, Gemma-2-9B-Instruct, Llama-3-8B-Instruct, Mistral-7B-Instruct-v0.3 | Paper | Code |

| Focused-DPO | Process | DeepSeek-Coder-6.7B-Base/Instruct, Magicoder-S-DS-6.7B, Qwen2.5-Coder-7B-Instruct | Paper | - |

| RL for Iterative Code Refinement | ||||

| RLEF | Outcome | Llama-3.0-8B-Instruct, Llama-3.1-8B/70B-Instruct | Paper | - |

| μCode | Outcome | Llama-3.2-1B/8B-Instruct | Paper | Code |

| R1-Code-Interpreter | Outcome | Qwen2.5-7B/14B-Instruct-1M, Qwen2.5-3B-Instruct | Paper | Code |

| IterPref | Process | DeepSeek-Coder-7B-Instruct, Qwen2.5-Coder-7B, StarCoder2-15B | Paper | - |

| LeDex | Process | StarCoder-15B, CodeLlama-7B/13B | Paper | - |

| CTRL | Process | Qwen2.5-Coder-7B/14B/32B-Instruct | Paper | Code |

| ReVeal | Process | DAPO-Qwen-32B, Qwen2.5-32B-Instruct (not-working) | Paper | - |

| Posterior-GRPO | Process | Qwen2.5-Coder-3B/7B-Base, Qwen2.5-Math-7B | Paper | - |

| Policy Filtration for RLHF | Process | DeepSeek-Coder-6.7B, Qwen1.5-7B | Paper | Code |

| RL for Automated Software Engineering (SWE) | ||||

| DeepSWE | Outcome | Qwen3-32B | Blog | Code |

| SWE-RL | Outcome | Llama-3.3-70B-Instruct | Paper | Code |

| Satori-SWE | Outcome | Qwen-2.5-Math-7B | Paper | Code |

| RLCoder | Outcome | CodeLlama-7B, StarCoder-7B, StarCoder2-7B, DeepSeekCoder-1B/7B | Paper | Code |

| Qwen3-Coder | Outcome | - | Paper | Code |

| ML-Agent | Outcome | Qwen2.5-7B-Base/Instruct, DeepSeek-R1-Distill-Qwen-7B | Paper | Code |

| Golubev et al. | Process | Qwen2.5-72B-Instruct | Paper | - |

| SWEET-RL | Process | Llama-3.1-8B/70B-Instruct | Paper | Code |

| Method | Reward | Link | Resource |

|---|---|---|---|

| RL for Informal Mathematical Reasoning | |||

| ARTIST | Outcome | Paper | - |

| ToRL | Outcome | Paper | Code Model |

| ZeroTIR | Outcome | Paper | Code Model |

| TTRL | Outcome | Paper | Code |

| RENT | Outcome | Paper | Code Website |

| Satori | Outcome | Paper | Code Model Website |

| 1-shot RLVR | Outcome | Paper | Code Model |

| Prover-Verifier Games (legibility) | Outcome | Paper | - |

| rStar2-Agent | Outcome | Paper | Code |

| START | Process | Paper | - |

| LADDER | Process | Paper | - |

| SWiRL | Process | Paper | - |

| RLoT | Process | Paper | Code |

| RL for Formal Mathematical Reasoning | |||

| DeepSeek-Prover-v1.5 | Outcome | Paper | Code Model |

| Leanabell-Prover | Outcome | Paper | Code Model |

| Kimina-Prover (Preview) | Outcome | Paper | Code Model |

| Seed-Prover | Outcome | Paper | Code |

| DeepSeek-Prover-v2 | Process | Paper | Code Model |

| ProofNet++ | Process | Paper | - |

| Leanabell-Prover-v2 | Process | Paper | Code |

| Hybrid | |||

| InternLM2.5-StepProver | Hybrid | Paper | Code |

| Lean-STaR | Hybrid | Paper | Code Model Website |

| STP | Hybrid | Paper | Code Model |

| Method | Paradigm | Environment | Link | Resource |

|---|---|---|---|---|

| Non-RL GUI Agents | ||||

| MM-Navigator | Vanilla VLM | - | Paper | Code |

| SeeAct | Vanilla VLM | - | Paper | Code |

| TRISHUL | Vanilla VLM | - | Paper | - |

| InfiGUIAgent | SFT | - | Paper | Code Model Website |

| UI-AGILE | SFT | - | Paper | Code Model |

| TongUI | SFT | - | Paper | Code Model Website |

| RL-based GUI Agents | ||||

| GUI-R1 | RL | Static | Paper | Code Model |

| UI-R1 | RL | Static | Paper | Code Model |

| InFiGUI-R1 | RL | Static | Paper | Code Model |

| AgentCPM | RL | Static | Paper | Code Model |

| WebAgent-R1 | RL | Interactive | Paper | - |

| Vattikonda et al. | RL | Interactive | Paper | - |

| UI-TARS | RL | Interactive | Paper | Code Model Website |

| DiGiRL | RL | Interactive | Paper | Code Model Website |

| ZeroGUI | RL | Interactive | Paper | Code |

| MobileGUI-RL | RL | Interactive | Paper | - |

TO BE ADDED

TO BE ADDED

“Dynamic” denotes whether the multi-agent system is task-dynamic, i.e., whether it processes different task queries with different configurations (agent count, topologies, reasoning depth, prompts, etc.).

“Train” denotes whether the method involves training the LLM backbone of agents.

| Method | Dynamic | Train | RL Algorithm | Link | Resource |

|---|---|---|---|---|---|

| RL-Free Multi-Agent Systems (not exhaustive) | |||||

| CAMEL | ✗ | ✗ | - | Paper | Code Model |

| MetaGPT | ✗ | ✗ | - | Paper | Code |

| MAD | ✗ | ✗ | - | Paper | Code |

| MoA | ✗ | ✗ | - | Paper | Code |

| AFlow | ✗ | ✗ | - | Paper | Code |

| RL-Based Multi-Agent Training | |||||

| GPTSwarm | ✗ | ✗ | policy gradient | Paper | Code Website |

| MaAS | ✓ | ✗ | policy gradient | Paper | Code |

| G-Designer | ✓ | ✗ | policy gradient | Paper | Code |

| MALT | ✗ | ✓ | DPO | Paper | - |

| MARFT | ✗ | ✓ | MARFT | Paper | Code |

| MAPoRL | ✓ | ✓ | PPO | Paper | Code |

| MLPO | ✓ | ✓ | MLPO | Paper | - |

| ReMA | ✓ | ✓ | MAMRP | Paper | Code |

| FlowReasoner | ✓ | ✓ | GRPO | Paper | Code |

| LERO | ✓ | ✓ | MLPO | Paper | - |

| CURE | ✗ | ✓ | rule-based RL | Paper | Code Model |

| MMedAgent-RL | ✗ | ✓ | GRPO | Paper | - |

TO BE ADDED

The agent capabilities are denoted by:

① Reasoning, ② Planning, ③ Tool Use, ④ Memory, ⑤ Collaboration, ⑥ Self-Improve.

| Environment / Benchmark | Agent Capability | Task Domain | Modality | Link | Resource |

|---|---|---|---|---|---|

| LMRL-Gym | ①, ④ | Interaction | Text | Paper | Code |

| ALFWorld | ②, ① | Embodied, Text Games | Text | Paper | Code Website |

| TextWorld | ②, ① | Text Games | Text | Paper | Code |

| ScienceWorld | ①, ② | Embodied, Science | Text | Paper | Code Website |

| AgentGym | ①, ④ | Text Games | Text | Paper | Code Website |

| AgentBench | ① | General | Text, Visual | Paper | Code |

| InternBootcamp | ① | General, Coding, Logic | Text | Paper | Code |

| LoCoMo | ④ | Interaction | Text | Paper | Code Website |

| MemoryAgentBench | ④ | Interaction | Text | Paper | Code |

| WebShop | ②, ③ | Web | Text | Paper | Code Website |

| Mind2Web | ②, ③ | Web | Text, Visual | Paper | Code Website |

| WebArena | ②, ③ | Web | Text | Paper | Code Website |

| VisualWebArena | ①, ②, ③ | Web | Text, Visual | Paper | Code Website |

| AppWorld | ②, ③ | App | Text | Paper | Code Website |

| AndroidWorld | ②, ③ | GUI, App | Text, Visual | Paper | Code |

| OSWorld | ②, ③ | GUI, OS | Text, Visual | Paper | Code Website |

| Debug-Gym | ①, ③ | SWE | Text | Paper | Code Website |

| MLE-Dojo | ②, ① | MLE | Text | Paper | Code Website |

| τ-bench | ①, ③ | SWE | Text | Paper | Code |

| TheAgentCompany | ②, ③, ⑤ | SWE | Text | Paper | Code Website |

| MedAgentGym | ① | Science | Text | Paper | Code |

| SecRepoBench | ①, ③ | Coding, Security | Text | Paper | - |

| R2E-Gym | ①, ② | SWE | Text | Paper | Code Website |

| HumanEval | ① | Coding | Text | Paper | Code |

| MBPP | ① | Coding | Text | Paper | Code |

| BigCodeBench | ① | Coding | Text | Paper | Code Website |

| LiveCodeBench | ① | Coding | Text | Paper | Code Website |

| SWE-bench | ①, ③ | SWE | Text | Paper | Code Website |

| SWE-rebench | ①, ③ | SWE | Text | Paper | Website |

| DevBench | ②, ① | SWE | Text | Paper | Code |

| ProjectEval | ②, ① | SWE | Text | Paper | Code Website |

| DA-Code | ①, ③ | Data Science, SWE | Text | Paper | Code Website |

| ColBench | ②, ①, ③ | SWE, Web Dev | Text | Paper | Code Website |

| NoCode-bench | ②, ① | SWE | Text | Paper | Code Website |

| MLE-Bench | ②, ①, ③ | MLE | Text | Paper | Code Website |

| PaperBench | ②, ①, ③ | MLE | Text | Paper | Code Website |

| Crafter | ②, ④ | Game | Visual | Paper | Code Website |

| Craftax | ②, ④ | Game | Visual | Paper | Code |

| ELLM (Crafter variant) | ②, ① | Game | Visual | Paper | Code Website |

| SMAC / SMAC-Exp | ⑤, ② | Game | Visual | Paper | Code |

| Factorio | ②, ① | Game | Visual | Paper | Code Website |

| Framework | Type | Key Features | Link | Resource |

|---|---|---|---|---|

| Agentic RL Frameworks | ||||

| Verifiers | Agent RL / LLM RL | Verifiable environment setup | - | Code |

| SkyRL-v0/v0.1 | Agent RL | Long-horizon real-world training | Blog (v0) Blog (v0.1) | Code |

| AReaL | Agent RL / LLM RL | Asynchronous training | Paper | Code |

| MARTI | Multi-agent RL / LLM RL | Integrated multi-agent training | - | Code |

| EasyR1 | Agent RL / LLM RL | Multimodal support | - | Code |

| AgentFly | Agent RL | Scalable asynchronous execution | Paper | Code |

| Agent Lightning | Agent RL | Decoupled hierarchical RL | Paper | Code |

| RLHF and LLM Fine-tuning Frameworks | ||||

| OpenRLHF | RLHF / LLM RL | High-performance scalable RLHF | Paper | Code |

| TRL | RLHF / LLM RL | Hugging Face RLHF | - | Code |

| trlX | RLHF / LLM RL | Distributed large-model RLHF | Paper | Code |

| HybridFlow | RLHF / LLM RL | Streamlined experiment management | Paper | Code |

| SLiMe | RLHF / LLM RL | High-performance async RL | - | Code |

| General-purpose RL Frameworks | ||||

| RLlib | General RL / Multi-agent RL | Production-grade scalable library | Paper | Code |

| Acme | General RL | Modular distributed components | Paper | Code |

| Tianshou | General RL | High-performance PyTorch platform | Paper | Code |

| Stable Baselines3 | General RL | Reliable PyTorch algorithms | Paper | Code |

| PFRL | General RL | Benchmarked prototyping algorithms | Paper | Code |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-AgenticLLM-RL-Papers

Similar Open Source Tools

Awesome-AgenticLLM-RL-Papers

This repository serves as the official source for the survey paper 'The Landscape of Agentic Reinforcement Learning for LLMs: A Survey'. It provides an extensive overview of various algorithms, methods, and frameworks related to Agentic RL, including detailed information on different families of algorithms, their key mechanisms, objectives, and links to relevant papers and resources. The repository covers a wide range of tasks such as Search & Research Agent, Code Agent, Mathematical Agent, GUI Agent, RL in Vision Agents, RL in Embodied Agents, and RL in Multi-Agent Systems. Additionally, it includes information on environments, frameworks, and methods suitable for different tasks related to Agentic RL and LLMs.

kumo-search

Kumo search is an end-to-end search engine framework that supports full-text search, inverted index, forward index, sorting, caching, hierarchical indexing, intervention system, feature collection, offline computation, storage system, and more. It runs on the EA (Elastic automic infrastructure architecture) platform, enabling engineering automation, service governance, real-time data, service degradation, and disaster recovery across multiple data centers and clusters. The framework aims to provide a ready-to-use search engine framework to help users quickly build their own search engines. Users can write business logic in Python using the AOT compiler in the project, which generates C++ code and binary dynamic libraries for rapid iteration of the search engine.

LLM-for-Healthcare

The repository 'LLM-for-Healthcare' provides a comprehensive survey of large language models (LLMs) for healthcare, covering data, technology, applications, and accountability and ethics. It includes information on various LLM models, training data, evaluation methods, and computation costs. The repository also discusses tasks such as NER, text classification, question answering, dialogue systems, and generation of medical reports from images in the healthcare domain.

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

ML-AI-2-LT

ML-AI-2-LT is a repository that serves as a glossary for machine learning and deep learning concepts. It contains translations and explanations of various terms related to artificial intelligence, including definitions and notes. Users can contribute by filling issues for unclear concepts or by submitting pull requests with suggestions or additions. The repository aims to provide a comprehensive resource for understanding key terminology in the field of AI and machine learning.

PaddleScience

PaddleScience is a scientific computing suite developed based on the deep learning framework PaddlePaddle. It utilizes the learning ability of deep neural networks and the automatic (higher-order) differentiation mechanism of PaddlePaddle to solve problems in physics, chemistry, meteorology, and other fields. It supports three solving methods: physics mechanism-driven, data-driven, and mathematical fusion, and provides basic APIs and detailed documentation for users to use and further develop.

Cool-GenAI-Fashion-Papers

Cool-GenAI-Fashion-Papers is a curated list of resources related to GenAI-Fashion, including papers, workshops, companies, and products. It covers a wide range of topics such as fashion design synthesis, outfit recommendation, fashion knowledge extraction, trend analysis, and more. The repository provides valuable insights and resources for researchers, industry professionals, and enthusiasts interested in the intersection of AI and fashion.

so-vits-models

This repository collects various LLM, AI-related models, applications, and datasets, including LLM-Chat for dialogue models, LLMs for large models, so-vits-svc for sound-related models, stable-diffusion for image-related models, and virtual-digital-person for generating videos. It also provides resources for deep learning courses and overviews, AI competitions, and specific AI tasks such as text, image, voice, and video processing.

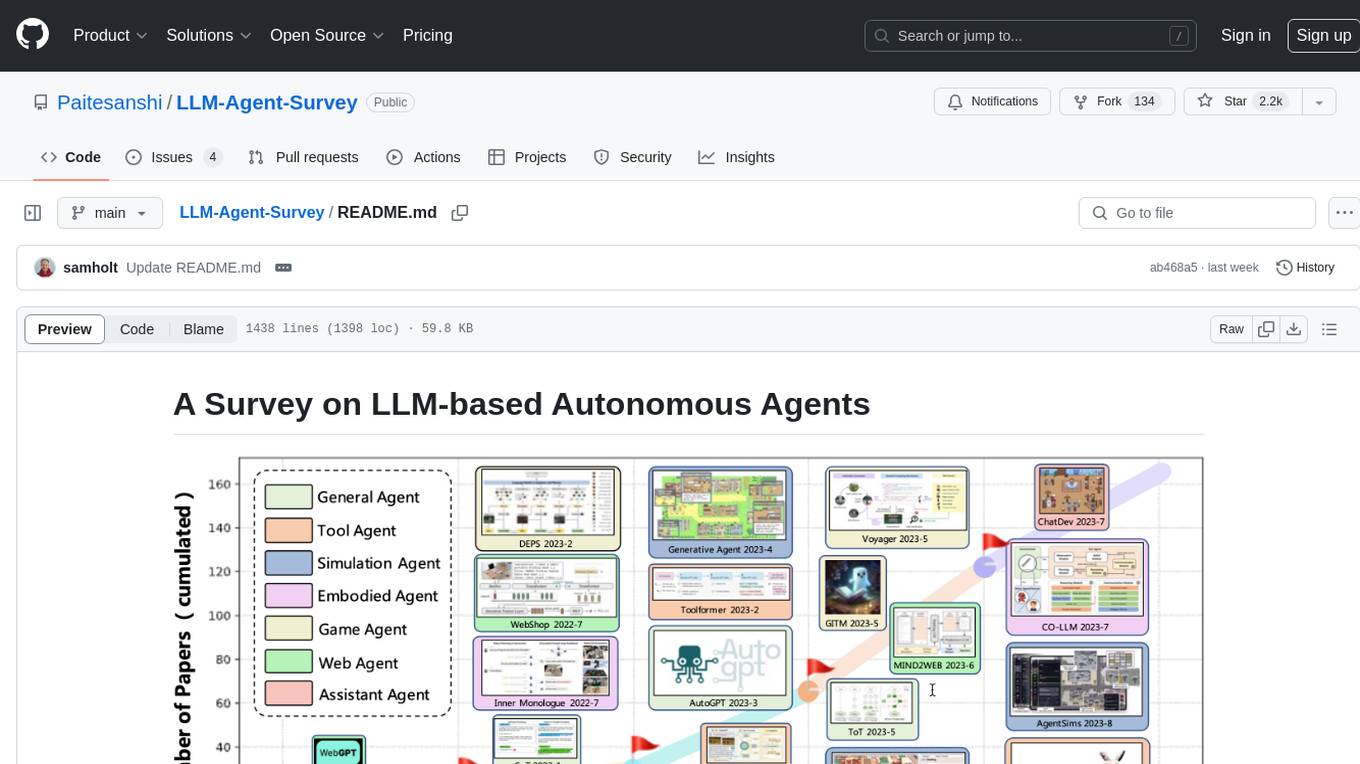

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

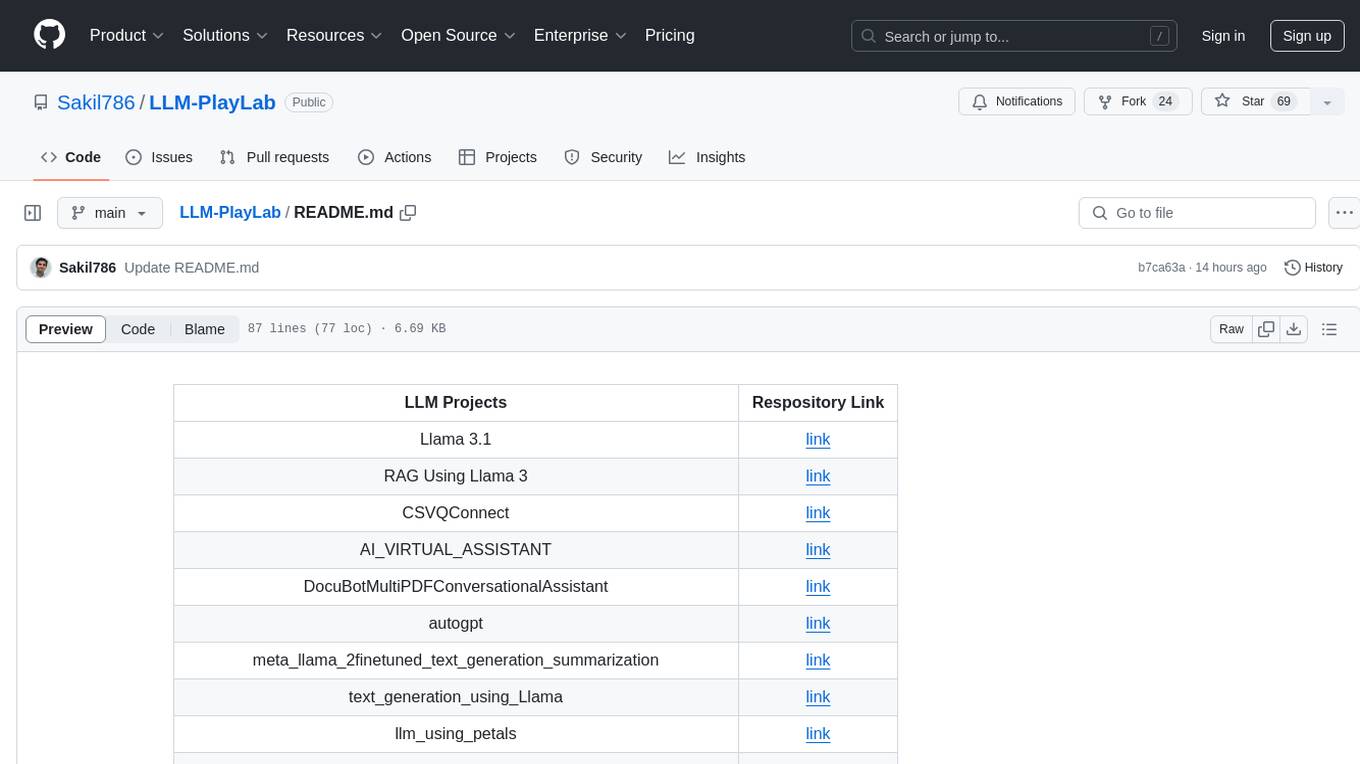

LLM-PlayLab

LLM-PlayLab is a repository containing various projects related to LLM (Large Language Models) fine-tuning, generative AI, time-series forecasting, and crash courses. It includes projects for text generation, sentiment analysis, data analysis, chat assistants, image captioning, and more. The repository offers a wide range of tools and resources for exploring and implementing advanced AI techniques.

awesome-hosting

awesome-hosting is a curated list of hosting services sorted by minimal plan price. It includes various categories such as Web Services Platform, Backend-as-a-Service, Lambda, Node.js, Static site hosting, WordPress hosting, VPS providers, managed databases, GPU cloud services, and LLM/Inference API providers. Each category lists multiple service providers along with details on their minimal plan, trial options, free tier availability, open-source support, and specific features. The repository aims to help users find suitable hosting solutions based on their budget and requirements.

AIInfra

AIInfra is an open-source project focused on AI infrastructure, specifically targeting large models in distributed clusters, distributed architecture, distributed training, and algorithms related to large models. The project aims to explore and study system design in artificial intelligence and deep learning, with a focus on the hardware and software stack for building AI large model systems. It provides a comprehensive curriculum covering topics such as AI chip principles, communication and storage, AI clusters, large model training, and inference, as well as algorithms for large models. The course is designed for undergraduate and graduate students, as well as professionals working with AI large model systems, to gain a deep understanding of AI computer system architecture and design.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

step_into_llm

The 'step_into_llm' repository is dedicated to the 昇思MindSpore technology open class, which focuses on exploring cutting-edge technologies, combining theory with practical applications, expert interpretations, open sharing, and empowering competitions. The repository contains course materials, including slides and code, for the ongoing second phase of the course. It covers various topics related to large language models (LLMs) such as Transformer, BERT, GPT, GPT2, and more. The course aims to guide developers interested in LLMs from theory to practical implementation, with a special emphasis on the development and application of large models.

For similar tasks

Awesome-AgenticLLM-RL-Papers

This repository serves as the official source for the survey paper 'The Landscape of Agentic Reinforcement Learning for LLMs: A Survey'. It provides an extensive overview of various algorithms, methods, and frameworks related to Agentic RL, including detailed information on different families of algorithms, their key mechanisms, objectives, and links to relevant papers and resources. The repository covers a wide range of tasks such as Search & Research Agent, Code Agent, Mathematical Agent, GUI Agent, RL in Vision Agents, RL in Embodied Agents, and RL in Multi-Agent Systems. Additionally, it includes information on environments, frameworks, and methods suitable for different tasks related to Agentic RL and LLMs.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.