MyLLM

"LLM from Zero to Hero: An End-to-End Large Language Model Journey from Data to Application!"

Stars: 128

MyLLM is an educational project for learning and building large language models (LLMs) from scratch in pure PyTorch. It combines step-by-step notebooks, self-contained mini-projects, and a lightweight core framework that together cover tokenization, attention, training, fine-tuning (LoRA, QLoRA, SFT), RLHF (PPO, DPO), and inference (KV caching, quantization).

README:

<p align="center"> <img src="myllm.png" alt="MyLLM Overview"> </p>

MyLLM isn’t just another library; it's a playground for learning and building LLMs from scratch. This project was born out of a desire to fully understand every line of a transformer stack, from tokenization to RLHF.

Here's what's inside right now:

| Area | Status | Description |
|---|---|---|
| Interactive Notebooks | ✅ Stable | Step-by-step guided learning path |
| Modular Mini-Projects | ✅ Stable | Self-contained, targeted experiments |
| MyLLM Core Framework | ⚙️ Active Development | Pure PyTorch, lightweight, transparent |
| MetaBot | 🛠 Coming Soon | A chatbot that explains itself |

Warning: Some parts are stable, while others are actively evolving.

Use this repo to explore, experiment, and break things safely — that's how you learn deeply.

There are plenty of libraries out there (Hugging Face, Lightning, etc.), but they hide too much of the magic. I wanted something different:

- Minimal – No unnecessary abstractions, no magic.

- Hackable – Every part of the stack is visible and editable.

- Research-Friendly – A place to experiment with cutting-edge techniques like LoRA, QLoRA, PPO, and DPO.

- From Scratch – So you truly understand the internals.

This is a framework for engineers who want to think like researchers and researchers who want to ship real systems.

MyLLM is structured into three progressive layers, designed to guide you from fundamental understanding to building a complete system.

The notebooks/ directory is where your journey begins. Each notebook is a step-by-step guide with theory and code, building components from first principles.

    MyLLM/
    └── notebooks/
        ├── 1.DATA.ipynb          # Text preprocessing & tokenization
        ├── 2.ATTENTION.ipynb     # Building the core attention mechanism
        ├── 3.TRAINING.ipynb      # Basic training loop
        ├── 4.FINETUNING.ipynb    # LoRA, QLoRA, and SFT
        ├── 5.RLHF.ipynb          # PPO and DPO algorithms
        └── 6.INFERENCE.ipynb     # KV caching and quantization

💡 Modify the attention mask in a notebook and see how the output changes — that's hands-on learning at its best.
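To make the mask experiment concrete, here is a minimal sketch of attention weights with and without a causal mask. It is written in plain Python so it runs without any dependencies; it is not the notebook's PyTorch code, and the function names `softmax` and `attention_weights` are assumptions chosen for illustration.

```python
import math


def softmax(row):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q, k, causal=True):
    """Return the T x T matrix of scaled dot-product attention weights.

    q, k: lists of T vectors, each of dimension d (hypothetical toy inputs).
    causal: if True, position i may only attend to positions j <= i.
    """
    d = len(q[0])
    weights = []
    for i, qi in enumerate(q):
        scores = []
        for j, kj in enumerate(k):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future tokens
            else:
                dot = sum(a * b for a, b in zip(qi, kj))
                scores.append(dot / math.sqrt(d))
        weights.append(softmax(scores))
    return weights


# Three toy token vectors, used as both queries and keys.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
masked = attention_weights(x, x, causal=True)
unmasked = attention_weights(x, x, causal=False)

for name, matrix in (("causal", masked), ("no mask", unmasked)):
    print(name)
    for row in matrix:
        print("  ", [round(w, 3) for w in row])
```

With the causal mask, the first token can only attend to itself, so the upper triangle of the weight matrix is zero. Removing the mask spreads weight onto later tokens, which is the kind of change the tip above invites you to observe.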

The Modules/ folder is a collection of self-contained experiments, each focusing on a specific part of the LLM pipeline. This lets you experiment on one piece of the puzzle without touching the whole framework.

    MyLLM/
    └── Modules/
        ├── 1.data/          # Dataset loading and preprocessing utilities
        ├── 2.models/        # Core model architectures (GPT, Llama)
        ├── 3.training/      # Training scripts and utilities
        ├── 4.finetuning/    # Experiments with SFT, DPO, PPO
        └── 5.inference/     # Inference with quantization and KV caching
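As an illustration of how small a self-contained experiment can be, the sketch below computes the standard DPO loss for a single preference pair from sequence log-probabilities. It is a dependency-free approximation for study purposes, not the code in `4.finetuning/`; the function name `dpo_loss` and its parameter names are assumptions.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model.
    beta scales how strongly the policy is pushed away from the reference.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)

    # loss = -log(sigmoid(margin)) = log(1 + exp(-margin)),
    # computed in a form that avoids overflow for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical log-probabilities for one preference pair.
neutral = dpo_loss(-12.0, -12.0, -12.0, -12.0)   # policy equals reference
improved = dpo_loss(-10.0, -14.0, -12.0, -12.0)  # policy prefers the chosen answer
print(f"neutral={neutral:.4f} improved={improved:.4f}")
```

When the policy matches the reference, the margin is zero and the loss is log 2. The loss falls as the policy assigns relatively more probability to the chosen response than the reference does.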

Example: Train a small GPT from scratch

    python Modules/3.training/train.py --config configs/basic.yml

The myllm/ folder is where all the components from the notebooks and mini-projects converge into a production-grade framework. This is the final layer, designed for scaling, research, and deployment.

    myllm/
    ├── CLI/           # Command-Line Interface
    ├── Configs/       # Centralized configuration objects
    ├── Train/         # Advanced training engine (SFT, DPO, PPO)
    ├── Tokenizers/    # Production-ready tokenizer implementations
    ├── utils/         # Shared utility functions
    ├── api.py         # RESTful API for model serving
    └── model.py       # The core LLM model definition

Example usage:

    from myllm.model import LLMModel
    from myllm.Train.sft_trainer import SFTTrainer

    # Instantiate a model from the core framework
    model = LLMModel()

    # Fine-tune the model; my_dataset is a placeholder for a dataset you have already prepared
    trainer = SFTTrainer(model=model, dataset=my_dataset)
    trainer.train()

    # Every line here maps to real, visible code: no magic.

The final vision is MetaBot, an interactive chatbot built entirely with MyLLM.

A chatbot that not only answers your questions but also shows you exactly how it works under the hood.

Built with:

- MyLLM core framework

- Gradio for UI

- Fully open source, located in the Meta_Bot/ directory.

| Status | Milestone | Details |
|---|---|---|
| ✅ | Interactive Notebooks | Learn LLM fundamentals hands-on |
| ✅ | Modular Mini-Projects | Build reusable, composable components |
| ⚙️ | MyLLM Core Framework | Fine-tuning, DPO, PPO, quantization, CLI, API |
| 🛠 | MetaBot + Gradio UI | Interactive chatbot & deployment |

- Run a notebook → tweak hyperparameters → watch how the model changes.

- Build a mini GPT that writes haiku poems.

- Add a new trainer to the framework (e.g., a TRL variant).

- Quantize a model and measure the speedup in inference.

- Fork the repo and contribute a new attention mechanism.
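For the quantization idea in the list above, a useful first step before timing anything is to see what quantization does to the weights themselves. The sketch below applies symmetric per-tensor int8 quantization to a toy weight list and measures the round-trip error. It is plain Python rather than the framework's own code, and `quantize_int8` and `dequantize` are hypothetical helper names.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to the int8 range [-127, 127].

    Returns the integer codes and the scale needed to map them back to floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float weights."""
    return [c * scale for c in codes]


# Toy weights standing in for one layer of a model.
weights = [0.5, -1.27, 0.0, 0.013, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:   ", codes)
print("scale:   ", scale)
print("max error:", max_err)
```

The round-trip error of each weight is bounded by half the scale, so a single large outlier weight coarsens the grid for every other value. This sketch only measures accuracy loss; measuring inference speedup requires running real int8 kernels on the target hardware.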

This project wouldn’t exist without the incredible work of others:

- Andrej Karpathy — NanoGPT minimalism

- Umar Jamil — Practical LLM tutorials

- Sebastian Raschka — Deep transformer insights

The end goal: A transparent, educational, and production-ready LLM stack built entirely from scratch, by and for engineers who want to own every line of their AI system.

Let's strip away the black boxes and build the future of LLMs — together.

Similar Open Source Tools

MyLLM

MyLLM is a web application designed to help law students and legal professionals manage their LLM (Master of Laws) program. It provides features such as course tracking, assignment management, exam schedules, and collaboration tools. Users can easily organize their academic tasks, stay on top of deadlines, and communicate with peers and professors. MyLLM aims to streamline the LLM experience and enhance productivity for students pursuing advanced legal studies.

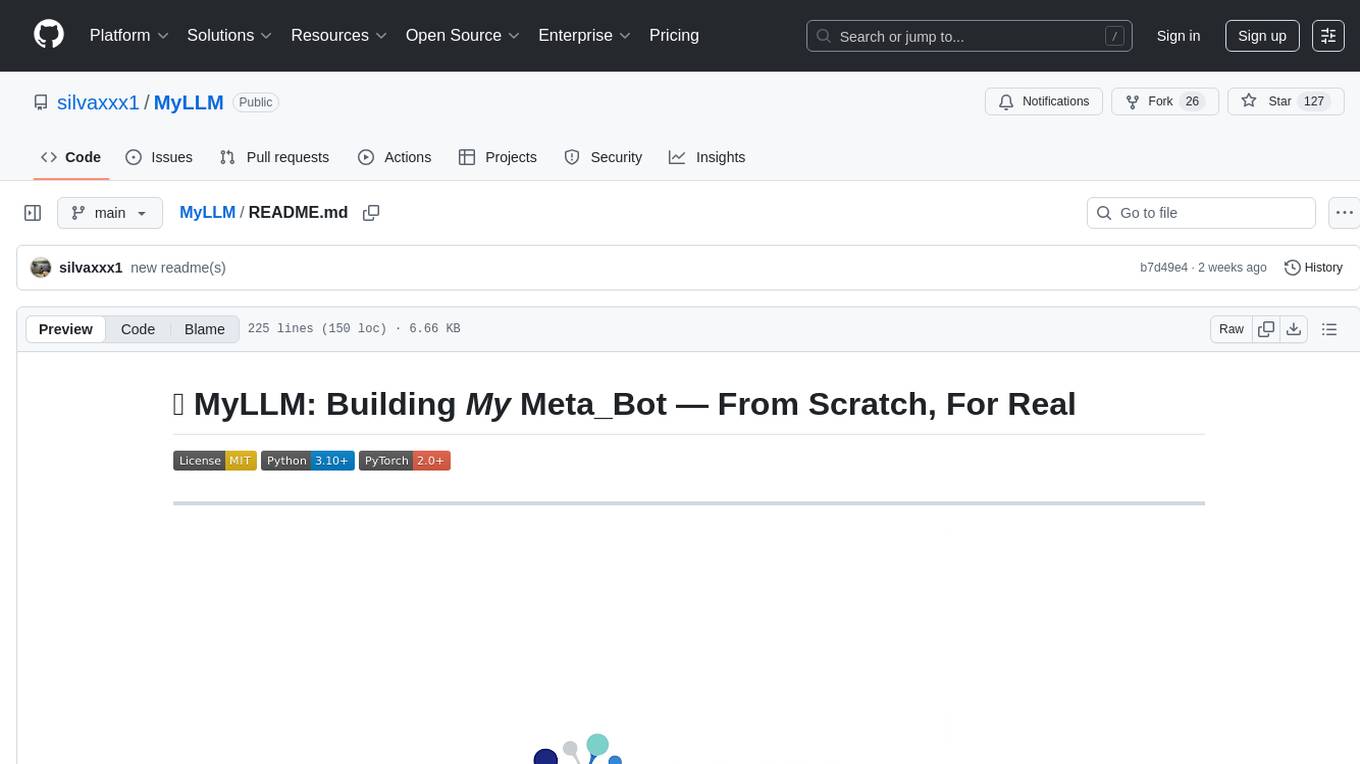

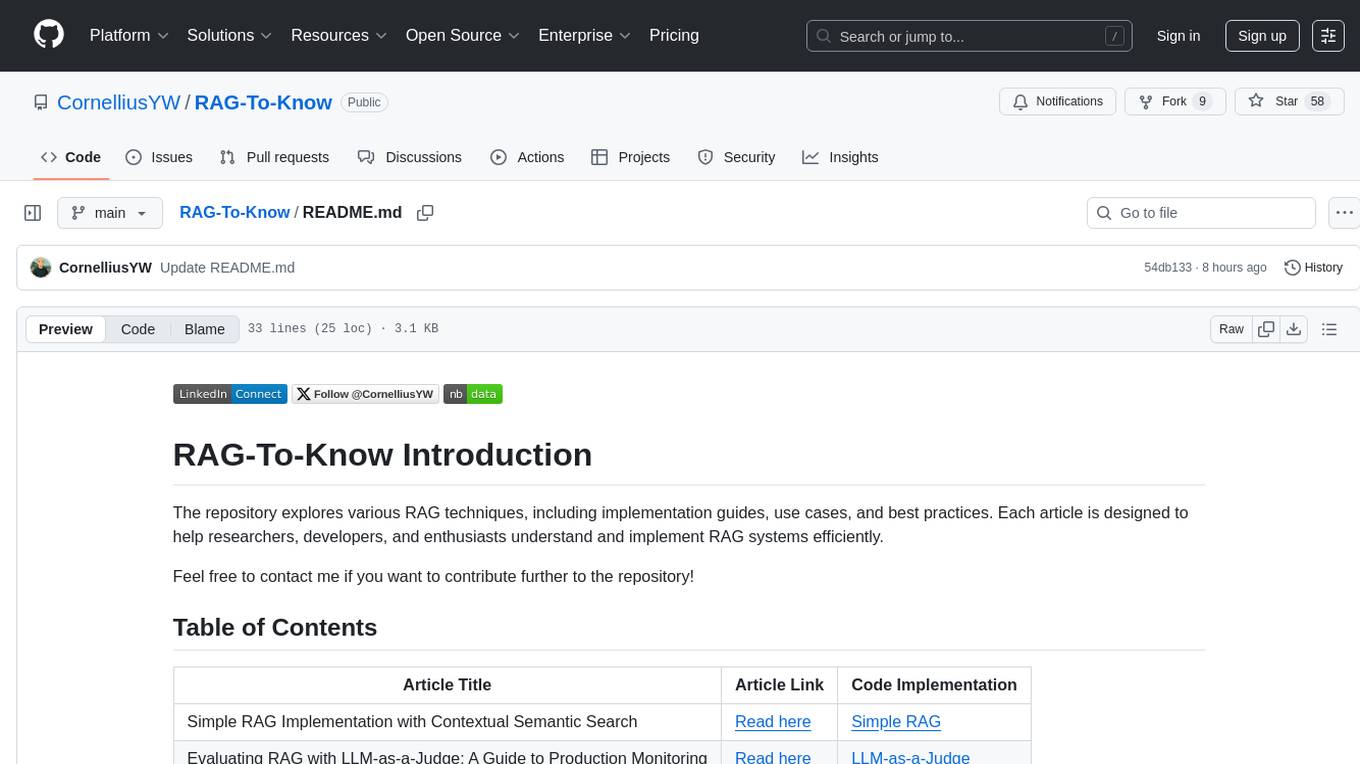

RAG-To-Know

RAG-To-Know is a versatile tool for knowledge extraction and summarization. It leverages the RAG (Retrieval-Augmented Generation) framework to provide a seamless way to retrieve and summarize information from various sources. With RAG-To-Know, users can easily extract key insights and generate concise summaries from large volumes of text data. The tool is designed to streamline the process of information retrieval and summarization, making it ideal for researchers, students, journalists, and anyone looking to quickly grasp the essence of complex information.

ome

Ome is a versatile tool designed for managing and organizing tasks and projects efficiently. It provides a user-friendly interface for creating, tracking, and prioritizing tasks, as well as collaborating with team members. With Ome, users can easily set deadlines, assign tasks, and monitor progress to ensure timely completion of projects. The tool offers customizable features such as tags, labels, and filters to streamline task management and improve productivity. Ome is suitable for individuals, teams, and organizations looking to enhance their task management process and achieve better results.

J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

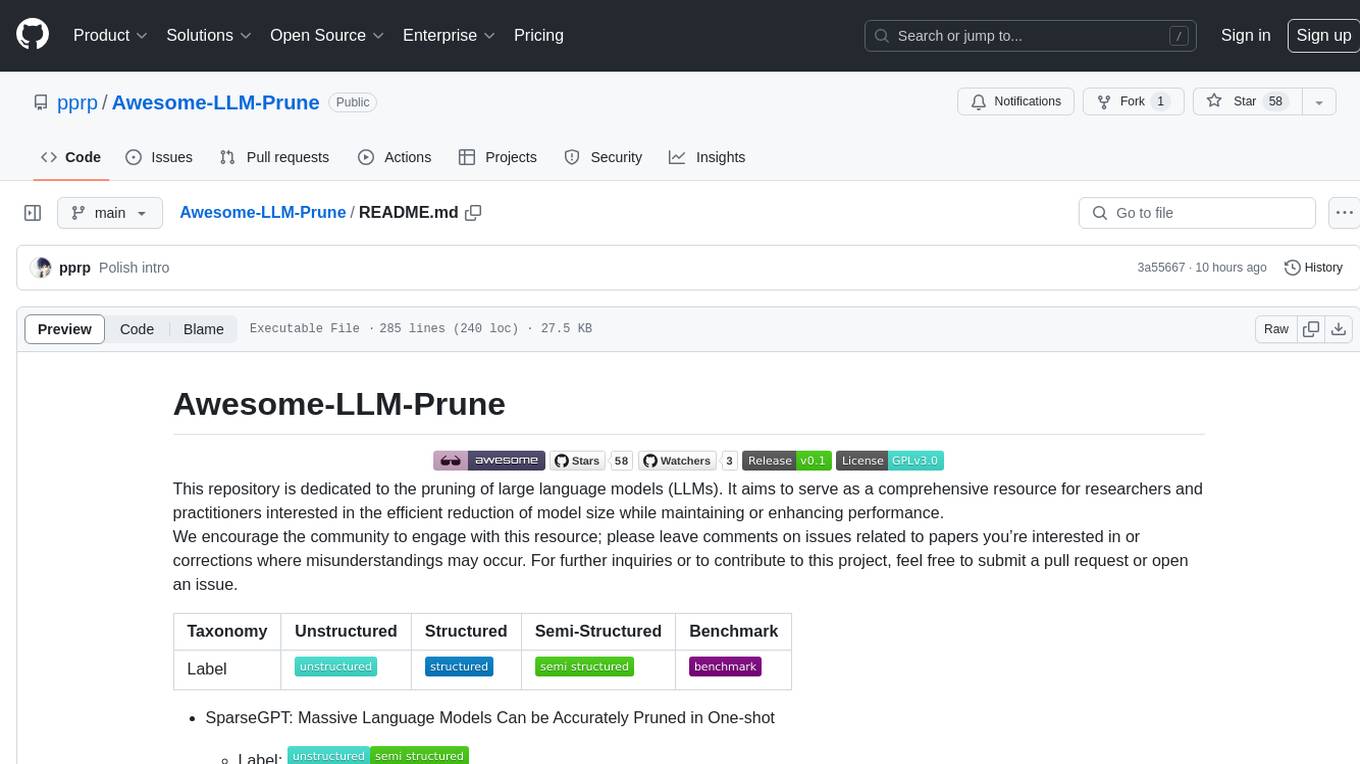

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

Introduction_to_Machine_Learning

This repository contains course materials for the 'Introduction to Machine Learning' course at Sharif University of Technology. It includes slides, Jupyter notebooks, and exercises for the Fall 2024 semester. The content is continuously updated throughout the semester. Previous semester materials are also accessible. Visit www.SharifML.ir for class videos and additional information.

HuggingArxivLLM

HuggingArxiv is a tool designed to push research papers related to large language models from Arxiv. It helps users stay updated with the latest developments in the field of large language models by providing notifications and access to relevant papers.

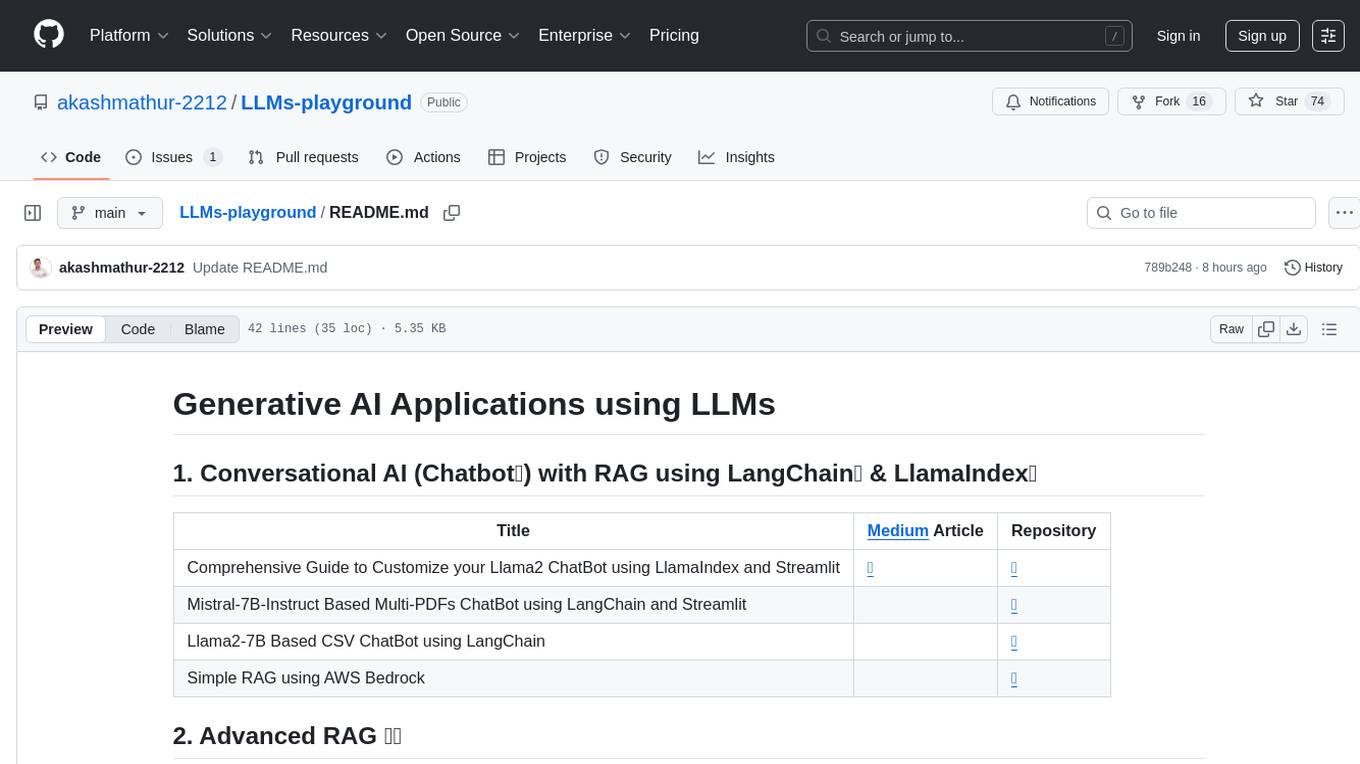

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

mcp-fundamentals

The mcp-fundamentals repository is a collection of fundamental concepts and examples related to microservices, cloud computing, and DevOps. It covers topics such as containerization, orchestration, CI/CD pipelines, and infrastructure as code. The repository provides hands-on exercises and code samples to help users understand and apply these concepts in real-world scenarios. Whether you are a beginner looking to learn the basics or an experienced professional seeking to refresh your knowledge, mcp-fundamentals has something for everyone.

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

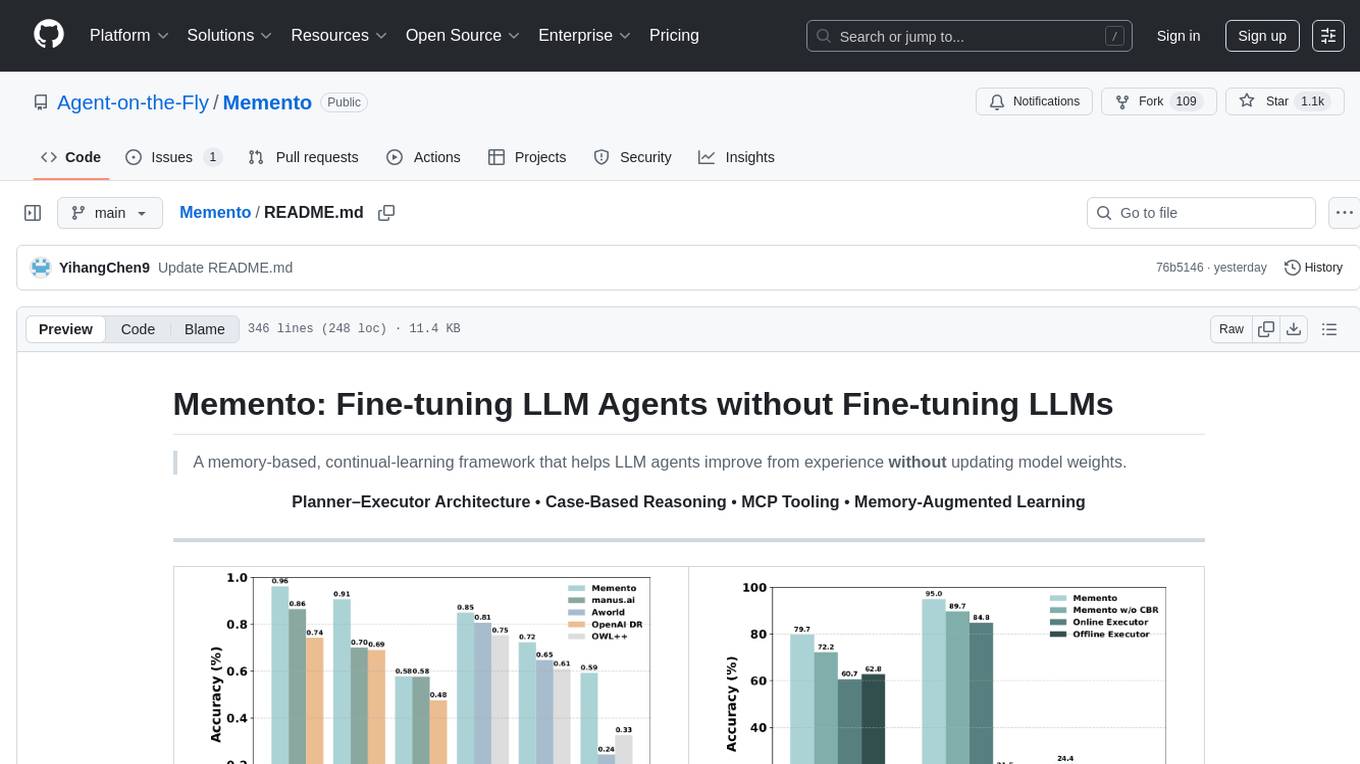

Memento

Memento is a lightweight and user-friendly version control tool designed for small to medium-sized projects. It provides a simple and intuitive interface for managing project versions and collaborating with team members. With Memento, users can easily track changes, revert to previous versions, and merge different branches. The tool is suitable for developers, designers, content creators, and other professionals who need a streamlined version control solution. Memento simplifies the process of managing project history and ensures that team members are always working on the latest version of the project.

Awesome-LLM-Safety

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

ISEK

ISEK is a decentralized agent network framework that enables building intelligent, collaborative agent-to-agent systems. It integrates the Google A2A protocol and ERC-8004 contracts for identity registration, reputation building, and cooperative task-solving, creating a self-organizing, decentralized society of agents. The platform addresses challenges in the agent ecosystem by providing an incentive system for users to pay for agent services, motivating developers to build high-quality agents and fostering innovation and quality in the ecosystem. ISEK focuses on decentralized agent collaboration and coordination, allowing agents to find each other, reason together, and act as a decentralized system without central control. The platform utilizes ERC-8004 for decentralized identity, reputation, and validation registries, establishing trustless verification and reputation management.

For similar jobs

llm-course

The llm-course repository is a collection of resources and materials for a course on Legal and Legislative Drafting. It includes lecture notes, assignments, readings, and other educational materials to help students understand the principles and practices of drafting legal documents. The course covers topics such as statutory interpretation, legal drafting techniques, and the role of legislation in the legal system. Whether you are a law student, legal professional, or someone interested in understanding the intricacies of legal language, this repository provides valuable insights and resources to enhance your knowledge and skills in legal drafting.

lawglance

LawGlance is an AI-powered legal assistant that aims to bridge the gap between people and legal access. It is a free, open-source initiative designed to provide quick and accurate legal support tailored to individual needs. The project covers various laws, with plans for international expansion in the future. LawGlance utilizes AI-powered Retriever-Augmented Generation (RAG) to deliver legal guidance accessible to both laypersons and professionals. The tool is developed with support from mentors and experts at Data Science Academy and Curvelogics.

DISC-LawLLM

DISC-LawLLM is a legal domain large model that aims to provide professional, intelligent, and comprehensive **legal services** to users. It is developed and open-sourced by the Data Intelligence and Social Computing Lab (Fudan-DISC) at Fudan University.

ChatLaw

ChatLaw is an open-source legal large language model tailored for Chinese legal scenarios. It aims to combine LLM and knowledge bases to provide solutions for legal scenarios. The models include ChatLaw-13B and ChatLaw-33B, trained on various legal texts to construct dialogue data. The project focuses on improving logical reasoning abilities and plans to train models with parameters exceeding 30B for better performance. The dataset consists of forum posts, news, legal texts, judicial interpretations, legal consultations, exam questions, and court judgments, cleaned and enhanced to create dialogue data. The tool is designed to assist in legal tasks requiring complex logical reasoning, with a focus on accuracy and reliability.

marly

Marly is a tool that allows users to search for and extract context-specific data from various types of documents such as PDFs, Word files, Powerpoints, and websites. It provides the ability to extract data in structured formats like JSON or Markdown, making it easy to integrate into workflows. Marly supports multi-schema and multi-document extraction, offers built-in caching for rapid repeat extractions, and ensures no vendor lock-in by allowing flexibility in choosing model providers.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

lawyer-llama

Lawyer LLaMA is a large language model that has been specifically trained on legal data, including Chinese laws, regulations, and case documents. It has been fine-tuned on a large dataset of legal questions and answers, enabling it to understand and respond to legal inquiries in a comprehensive and informative manner. Lawyer LLaMA is designed to assist legal professionals and individuals with a variety of law-related tasks, including:

- **Legal research:** Quickly and efficiently search through vast amounts of legal information to find relevant laws, regulations, and case precedents.

- **Legal analysis:** Analyze legal issues, identify potential legal risks, and provide insights on how to proceed.

- **Document drafting:** Draft legal documents, such as contracts, pleadings, and legal opinions, with accuracy and precision.

- **Legal advice:** Provide general legal advice and guidance on a wide range of legal matters, helping users understand their rights and options.

Lawyer LLaMA is a powerful tool that can significantly enhance the efficiency and effectiveness of legal research, analysis, and decision-making. It is an invaluable resource for lawyers, paralegals, law students, and anyone else who needs to navigate the complexities of the legal system.