oneclick-subtitles-generator

🎬 Auto-subtitle videos with AI transcription, translation, voice cloning & professional rendering

Stars: 136

A comprehensive web application for auto-subtitling videos and audio, translating SRT files, generating AI narration with voice cloning, creating background images, and rendering professional subtitled videos. Designed for content creators, educators, and general users who need high-quality subtitle generation and video production capabilities.

README:

View the Vietnamese version here.


Choose the right version for your needs:

| Feature | OSG Lite | OSG Full | OSG Vercel |
|---|---|---|---|
| AI Subtitle Generation | ✅ Gemini AI transcription | ✅ Gemini AI transcription | ✅ Gemini AI transcription |
| Video Sources | ✅ YouTube, Douyin/TikTok, 1000+ platforms + Upload | ✅ YouTube, Douyin/TikTok, 1000+ platforms + Upload | Upload only |
| Subtitle Editor | ✅ Visual timeline, waveform, real-time preview | ✅ Visual timeline, waveform, real-time preview | ✅ Visual timeline, waveform, real-time preview |
| Translation | ✅ Multi-language with context awareness | ✅ Multi-language with context awareness | ✅ Multi-language with context awareness |
| Video Rendering | ✅ GPU-accelerated with Remotion | ✅ GPU-accelerated with Remotion | ❌ Not available |
| Background Generation | ✅ Gemini Native/Nano Banana | ✅ Gemini Native/Nano Banana | ✅ Gemini Native/Nano Banana |
| Basic TTS | ✅ Gemini Live API, Edge TTS, Google TTS | ✅ Gemini Live API, Edge TTS, Google TTS | ❌ Not available |
| Voice Cloning | ❌ Not included | ✅ F5-TTS, Chatterbox | ❌ Not available |
| Project Folder Size | ~2-3 GB | ~8-12 GB | N/A (hosted) |
| GPU Requirements | Any GPU for video rendering | GPU-accelerated voice cloning (CPU fallback available) | None (no rendering) |

- Choose OSG Lite if you need fast subtitle generation and video rendering without voice cloning

- Choose OSG (Full) if you need advanced voice cloning and narration capabilities

- Go to Releases and download the latest OSG_installer_Windows.bat.

- Open the downloaded .bat file and follow the instructions (app size will be large if installing with voice cloning feature)

- Clone this repo and run the OSG_installer.sh file:

      git clone https://github.com/nganlinh4/oneclick-subtitles-generator.git
      cd oneclick-subtitles-generator
      chmod +x OSG_installer.sh
      ./OSG_installer.sh

- Follow the on-screen instructions (app size will be large if installing with voice cloning feature)

- Open OSG_installer_Windows.bat and follow the instructions.

- Open Terminal and run the OSG_installer.sh file again:

      ./OSG_installer.sh

- Browser will automatically open at http://localhost:3030

- Multi-source support: Upload video/audio files, YouTube URLs, Douyin/TikTok links, or search YouTube by title

- Format compatibility: Supports MP4, AVI, MOV, WebM, WMV, MP3, WAV, AAC, FLAC, and more

- Quality scanning: Intelligent video quality detection with cookie-based authentication for premium content

- Video compatibility checking: Automatic format conversion for Remotion compatibility

- Google Gemini AI: Uses latest Gemini 2.5 models (Flash, Pro) for accurate transcription

- Multi-language support: Generate subtitles in multiple languages with high accuracy

- Parallel processing: Handles long videos (15+ minutes) with intelligent segmentation

- Custom prompts: Configurable transcription prompts for specialized content

- Retry mechanisms: Smart retry with different models for failed segments
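The segmentation and retry behaviour described in the bullets above can be pictured with a small sketch. This is not the project's actual code: the `transcribe` callback, the model labels, and the segment length are hypothetical placeholders, and the real application calls the Gemini API rather than a local function.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

Segment = Tuple[float, float]  # (start_seconds, end_seconds)


def split_into_segments(duration: float, segment_length: float) -> List[Segment]:
    """Cut [0, duration) into consecutive windows of at most segment_length seconds."""
    if duration <= 0 or segment_length <= 0:
        return []
    segments: List[Segment] = []
    start = 0.0
    while start < duration:
        end = min(start + segment_length, duration)
        segments.append((start, end))
        start = end
    return segments


def transcribe_with_fallback(
    segment: Segment,
    models: Sequence[str],
    transcribe: Callable[[Segment, str], str],
) -> str:
    """Try each model in order and return the first successful result."""
    last_error = None
    for model in models:
        try:
            return transcribe(segment, model)
        except Exception as error:  # hypothetical: any failure triggers the next model
            last_error = error
    raise RuntimeError(f"all models failed for segment {segment}") from last_error


def transcribe_long_video(
    duration: float,
    segment_length: float,
    models: Sequence[str],
    transcribe: Callable[[Segment, str], str],
    max_workers: int = 4,
) -> List[str]:
    """Transcribe all segments in parallel, keeping results in timeline order."""
    segments = split_into_segments(duration, segment_length)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with segments.
        return list(
            pool.map(lambda s: transcribe_with_fallback(s, models, transcribe), segments)
        )
```

A thread pool suits this kind of workload because each segment spends most of its time waiting on a network call, not on local computation.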

- Visual timeline editor: Drag-and-drop timing adjustments with waveform visualization

- Real-time preview: Live subtitle synchronization with video playback

- Sticky timing: Batch adjust multiple subtitles simultaneously

- Text editing: Direct text modification with undo/redo functionality

- Merge & split: Combine adjacent subtitles or split long ones

- Format support: Export to SRT, JSON, or custom formats
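As an illustration of the SRT export mentioned above, here is a minimal sketch of how timed subtitles map onto the SRT text format. The `Subtitle` class and function names are assumptions made for this example, not identifiers from the project.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Subtitle:
    start: float  # seconds from the beginning of the media
    end: float
    text: str


def format_timestamp(seconds: float) -> str:
    """Render seconds as the SRT timestamp HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(subtitles: Iterable[Subtitle]) -> str:
    """Build SRT text: a 1-based index, a timing line, the text, then a blank line."""
    blocks = []
    for index, sub in enumerate(subtitles, start=1):
        timing = f"{format_timestamp(sub.start)} --> {format_timestamp(sub.end)}"
        blocks.append(f"{index}\n{timing}\n{sub.text}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    print(to_srt([Subtitle(0, 1.25, "Hello"), Subtitle(1.25, 3, "World")]))
```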

- F5-TTS integration: State-of-the-art voice cloning technology

- Chatterbox TTS: High-quality text-to-speech with voice conversion

- Edge TTS & Google TTS: Multiple TTS engine options

- Reference audio: Upload, record, or extract voice samples from videos

- Multi-audio tracks: Combine original audio with AI-generated narration

- Volume controls: Independent audio level management
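Combining the original audio with a narration track at independent volumes can be done with FFmpeg's `volume` and `amix` filters. The sketch below only builds the command line; the file names and default volume levels are hypothetical, and it is not known whether the application uses this exact filter graph.

```python
from typing import List


def build_mix_command(
    video_path: str,
    narration_path: str,
    output_path: str,
    original_volume: float = 0.3,
    narration_volume: float = 1.0,
) -> List[str]:
    """Return an ffmpeg argument list that mixes two audio tracks under one video."""
    filter_graph = (
        f"[0:a]volume={original_volume}[orig];"      # scale the video's own audio
        f"[1:a]volume={narration_volume}[narr];"     # scale the narration track
        "[orig][narr]amix=inputs=2:duration=first[mixed]"  # mix, ending with the video
    )
    return [
        "ffmpeg",
        "-i", video_path,
        "-i", narration_path,
        "-filter_complex", filter_graph,
        "-map", "0:v",        # keep the video stream from the first input
        "-map", "[mixed]",    # use the mixed audio as the only audio stream
        "-c:v", "copy",       # do not re-encode the video
        output_path,
    ]


if __name__ == "__main__":
    # Pass the list to subprocess.run(...) to execute it; requires ffmpeg on PATH.
    print(" ".join(build_mix_command("input.mp4", "narration.wav", "output.mp4")))
```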

- Multi-language translation: Translate subtitles to any language while preserving timing

- Custom formatting: Configurable output formats with brackets, delimiters, and chains

- Batch processing: Translate multiple subtitle sets simultaneously

- Context awareness: AI-powered translation with video context understanding
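To make "preserving timing" and bracket-style formatting concrete, here is a small sketch under stated assumptions: `translate` stands in for whatever translation backend is used (the application uses Gemini), and the `keep_original`, `brackets`, and `delimiter` parameters are hypothetical names for the formatting options listed above.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Subtitle:
    start: float
    end: float
    text: str


def translate_subtitles(
    subtitles: Sequence[Subtitle],
    translate: Callable[[str], str],
    keep_original: bool = False,
    brackets: Tuple[str, str] = ("(", ")"),
    delimiter: str = " ",
) -> List[Subtitle]:
    """Translate only the text of each subtitle; start and end stay untouched."""
    result = []
    for sub in subtitles:
        translated = translate(sub.text)
        if keep_original:
            opening, closing = brackets
            # e.g. "Hello (Xin chào)": original, delimiter, bracketed translation
            translated = f"{sub.text}{delimiter}{opening}{translated}{closing}"
        result.append(replace(sub, text=translated))
    return result


if __name__ == "__main__":
    glossary = {"Hello": "Xin chào", "Goodbye": "Tạm biệt"}  # stand-in translator
    subs = [Subtitle(0.0, 1.5, "Hello"), Subtitle(1.5, 3.0, "Goodbye")]
    for sub in translate_subtitles(subs, glossary.__getitem__, keep_original=True):
        print(sub)
```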

- AI-powered creation: Generate custom backgrounds using Gemini's image generation

- Album art integration: Use existing artwork as reference for style consistency

- Batch generation: Create multiple variations with unique prompts

- Smart prompting: Automatic prompt generation based on lyrics and content

- Remotion integration: GPU-accelerated video rendering with hardware optimization

- Multi-resolution support: 360p to 8K output with automatic aspect ratio detection

- Subtitle customization: Extensive styling options including fonts, colors, effects, and animations

- Multi-audio support: Combine original video audio with AI narration tracks

- Background integration: Use generated images or video backgrounds

- Render queue: Batch processing with progress tracking
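One way to think about multi-resolution output with aspect ratio detection is to scale the source so that its shorter side matches the target (360, 720, 1080, and so on). The following sketch assumes that convention; the renderer's real rules may differ.

```python
from math import gcd
from typing import Tuple


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1920x1080 becomes 16:9."""
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"


def output_dimensions(source_width: int, source_height: int, target_short_side: int) -> Tuple[int, int]:
    """Scale the source so its shorter side equals target_short_side."""
    if source_width <= 0 or source_height <= 0 or target_short_side <= 0:
        raise ValueError("dimensions must be positive")
    if source_width >= source_height:  # landscape or square
        height = target_short_side
        width = round(height * source_width / source_height)
    else:  # portrait, e.g. Douyin/TikTok clips
        width = target_short_side
        height = round(width * source_height / source_width)
    # Round odd values up to even, since common encoder settings expect even sizes.
    return width + width % 2, height + height % 2


if __name__ == "__main__":
    print(aspect_ratio(1920, 1080), output_dimensions(1920, 1080, 720))
    print(aspect_ratio(1080, 1920), output_dimensions(1080, 1920, 360))
```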

- File Upload: Drag & drop or browse for video/audio files

- YouTube: Paste URL or search by title with thumbnail preview

- Douyin/TikTok: Paste URL for automatic extraction

- Other platforms: Use any supported video URL
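The input options above imply some routing step that decides how a pasted value should be handled. A rough sketch of such routing, using only the standard library, is shown here; the category names and host lists are assumptions for illustration, and the application's own detection logic is not documented in this README.

```python
from urllib.parse import urlparse

YOUTUBE_HOSTS = {"youtube.com", "youtu.be"}
DOUYIN_TIKTOK_HOSTS = {"douyin.com", "tiktok.com"}


def classify_source(value: str) -> str:
    """Return 'youtube', 'douyin_tiktok', 'other_url', or 'local_or_search'."""
    parsed = urlparse(value.strip())
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        # Not a web URL: treat it as a local file name or a search query.
        return "local_or_search"
    host = parsed.hostname.lower()

    def matches(domains: set) -> bool:
        # Accept the bare domain and any subdomain such as www. or v.
        return any(host == d or host.endswith("." + d) for d in domains)

    if matches(YOUTUBE_HOSTS):
        return "youtube"
    if matches(DOUYIN_TIKTOK_HOSTS):
        return "douyin_tiktok"
    return "other_url"


if __name__ == "__main__":
    for sample in ("https://youtu.be/abc", "https://v.douyin.com/xyz/", "clip.mp4"):
        print(sample, "->", classify_source(sample))
```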

- Choose your preferred Gemini model (2.5 Flash/Pro recommended)

- Configure custom prompts for specialized content

- Click "Generate timed subtitles" and monitor progress

- Long videos are automatically processed in parallel segments

- Visual timeline: Drag timing handles with waveform visualization

- Real-time preview: See changes instantly synchronized with video

- Text editing: Click to edit subtitle content directly

- Batch operations: Use sticky timing for multiple subtitle adjustments

- Advanced tools: Merge, split, insert, or delete subtitle segments
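The editing operations above can be modelled as simple transformations on a list of timed subtitles. In this sketch, "sticky timing" is interpreted as shifting one subtitle together with all that follow it; that reading, and every name below, is an assumption, since the README does not define the exact behaviour.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Subtitle:
    start: float
    end: float
    text: str


def shift_from(subs: List[Subtitle], index: int, delta: float) -> List[Subtitle]:
    """Shift the subtitle at index and every later one by delta seconds."""
    return [
        replace(s, start=max(0.0, s.start + delta), end=max(0.0, s.end + delta))
        if i >= index else s
        for i, s in enumerate(subs)
    ]


def merge_adjacent(subs: List[Subtitle], index: int) -> List[Subtitle]:
    """Merge subtitle index with index + 1, spanning both time ranges."""
    first, second = subs[index], subs[index + 1]
    merged = Subtitle(first.start, second.end, f"{first.text} {second.text}")
    return subs[:index] + [merged] + subs[index + 2:]


def split_at(subs: List[Subtitle], index: int, time: float, char_pos: int) -> List[Subtitle]:
    """Split one subtitle into two at a time point and a character position."""
    sub = subs[index]
    if not sub.start < time < sub.end:
        raise ValueError("split time must fall inside the subtitle")
    left = Subtitle(sub.start, time, sub.text[:char_pos].strip())
    right = Subtitle(time, sub.end, sub.text[char_pos:].strip())
    return subs[:index] + [left, right] + subs[index + 1:]
```

Returning new lists instead of mutating in place keeps each step easy to undo, which fits an editor that offers undo/redo.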

- Select target languages for translation

- Configure output formatting (brackets, delimiters, chains)

- Use context-aware AI translation with video understanding

- Preserve original timing while adapting text

- Set up reference audio: Upload, record, or extract from video

- Choose TTS engine: F5-TTS (voice cloning), Chatterbox, Edge TTS, or Google TTS

- Configure voice settings: Adjust speed, pitch, and style parameters

- Generate narration: Create AI voice for original or translated subtitles

- Upload album art or reference images

- Generate AI-powered backgrounds based on content

- Create multiple variations with unique prompts

- Use generated images in video rendering

- Open video renderer: Access the integrated Remotion-based renderer

- Customize subtitles: Extensive styling options (fonts, colors, effects, animations)

- Configure audio: Balance original video audio with AI narration

- Set output quality: Choose resolution from 360p to 8K

- Render with GPU acceleration: Hardware-optimized processing for fast output

- Subtitle files: SRT, JSON, or custom formats

- Audio files: Generated narration in various formats

- Background images: AI-generated artwork

- Rendered videos: Professional subtitled videos with custom styling

Access settings via the gear icon in the top-right corner:

- API Keys: Gemini (required), YouTube (optional for search)

- AI Models: Choose between Gemini 2.5 Flash, Pro, or experimental models

- Languages: English, Vietnamese, Korean interface support

- Video Processing: Segment duration, quality preferences, cookie management

- TTS Engines: F5-TTS, Chatterbox, Edge TTS, or Google TTS selection

- Interface: Dark/light themes, time format, waveform visualization

- Cache Management: Clear caches and monitor storage usage

- Frontend: React 18, Material-UI, Styled Components, i18next

- Video Rendering: Remotion 4 with GPU acceleration (Vulkan/OpenGL)

- Backend: Node.js/Express, Python Flask, FastAPI

- AI Integration: Google Gemini API, F5-TTS, Chatterbox TTS

- Audio/Video: FFmpeg, Web Audio API, yt-dlp, Playwright

- Performance: React Window virtualization, multi-level caching, hardware acceleration

- GPU Acceleration: Hardware-accelerated video rendering with Vulkan/OpenGL

- Virtualized UI: Only renders visible elements for optimal performance with long videos

- Parallel Processing: Multi-core subtitle generation and video processing

- Smart Caching: Multi-layer cache system for subtitles, videos, and generated content

- Optimized Timeline: Hardware-accelerated canvas visualization with adaptive rendering

- Efficient Memory: Automatic cleanup and smart resource management
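The idea behind the virtualized UI can be shown with a short calculation: given the scroll position, only the rows that intersect the viewport (plus a small buffer) need to be rendered. This is a conceptual sketch, not React Window's implementation, and the parameter names are hypothetical.

```python
import math


def visible_range(
    scroll_top: float,
    viewport_height: float,
    row_height: float,
    total_rows: int,
    overscan: int = 2,
) -> range:
    """Return the indices of rows worth rendering for the current scroll position."""
    if row_height <= 0:
        raise ValueError("row_height must be positive")
    # First row touching the top of the viewport, minus a buffer of extra rows.
    first = max(0, int(scroll_top // row_height) - overscan)
    # One past the last row touching the bottom of the viewport, plus the buffer.
    last = min(total_rows, math.ceil((scroll_top + viewport_height) / row_height) + overscan)
    return range(first, last)


if __name__ == "__main__":
    rows = visible_range(scroll_top=1000, viewport_height=400, row_height=40, total_rows=5000)
    print(f"render rows {rows.start}..{rows.stop - 1} of 5000")
```

With 5000 subtitle rows, the example renders only a handful of them at a time, which is why long videos stay responsive.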

- React - Modern UI framework with hooks and context

- Material-UI - Professional design system and components

- Remotion - Programmatic video creation and rendering

- Node.js - JavaScript runtime for backend services

- Express - Web application framework for Node.js

- Google Gemini AI - Advanced language models for transcription and image generation

- F5-TTS - State-of-the-art voice cloning technology

- Chatterbox - High-quality TTS and voice conversion

- Microsoft Edge TTS - Neural text-to-speech service

- Google Text-to-Speech - Cloud-based speech synthesis

- FFmpeg - Comprehensive multimedia framework

- yt-dlp - Universal video downloader for 1000+ platforms

- Playwright - Browser automation for complex site interactions

- Puppeteer - Headless Chrome control for web scraping

- Styled Components - CSS-in-JS styling solution

- React Router - Declarative routing for React

- React Window - Efficient virtualization for large lists

- React Icons - Popular icon libraries for React

- HTML5 Canvas - Hardware-accelerated timeline visualization

- i18next - Internationalization framework

- React i18next - React integration for i18next

- Material Design 3 - Modern design principles and accessibility standards

- TypeScript - Type-safe JavaScript development

- Create React App - React application scaffolding

- Concurrently - Multi-service development environment

- Cross-env - Cross-platform environment variables

- npm - Package manager for JavaScript

- uv - Fast Python package installer and resolver

- Python - Backend services for AI processing

- Open source community for maintaining these incredible tools

- Google DeepMind for advancing AI accessibility

- Remotion team for revolutionizing programmatic video creation

- F5-TTS contributors for open-source voice cloning technology

- All beta testers and contributors who helped improve this application

MIT License

Copyright (c) 2024 Subtitles Generator

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.


Alternative AI tools for oneclick-subtitles-generator

Similar Open Source Tools


J.A.R.V.I.S.2.0

J.A.R.V.I.S. 2.0 is an AI-powered assistant designed for voice commands, capable of tasks like providing weather reports, summarizing news, sending emails, and more. It features voice activation, speech recognition, AI responses, and handles multiple tasks including email sending, weather reports, news reading, image generation, database functions, phone call automation, AI-based task execution, website & application automation, and knowledge-based interactions. The assistant also includes timeout handling, automatic input processing, and the ability to call multiple functions simultaneously. It requires Python 3.9 or later and specific API keys for weather, news, email, and AI access. The tool integrates Gemini AI for function execution and Ollama as a fallback mechanism. It utilizes a RAG-based knowledge system and ADB integration for phone automation. Future enhancements include deeper mobile integration, advanced AI-driven automation, improved NLP-based command execution, and multi-modal interactions.

retrace

Retrace is a local-first screen recording and search application for macOS, inspired by Rewind AI. It captures screen activity, extracts text via OCR, and makes everything searchable locally on-device. The project is in very early development, offering features like continuous screen capture, OCR text extraction, full-text search, timeline viewer, dashboard analytics, Rewind AI import, settings panel, global hotkeys, HEVC video encoding, search highlighting, privacy controls, and more. Built with a modular architecture, Retrace uses Swift 5.9+, SwiftUI, Vision framework, SQLite with FTS5, HEVC video encoding, CryptoKit for encryption, and more. Future releases will include features like audio transcription and semantic search. Retrace requires macOS 13.0+ (Apple Silicon required) and Xcode 15.0+ for building from source, with permissions for screen recording and accessibility. Contributions are welcome, and the project is licensed under the MIT License.

RSTGameTranslation

RSTGameTranslation is a tool designed for translating game text into multiple languages efficiently. It provides a user-friendly interface for game developers to easily manage and localize their game content. With RSTGameTranslation, developers can streamline the translation process, ensuring consistency and accuracy across different language versions of their games. The tool supports various file formats commonly used in game development, making it versatile and adaptable to different project requirements. Whether you are working on a small indie game or a large-scale production, RSTGameTranslation can help you reach a global audience by making localization a seamless and hassle-free experience.

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

Wegent

Wegent is an open-source AI-native operating system designed to define, organize, and run intelligent agent teams. It offers various core features such as a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Additionally, Wegent includes a code agent for cloud-based code execution, AI feed for task triggers, AI knowledge for document management, and AI device for running tasks locally. The platform is highly extensible, allowing for custom agents, agent creation wizard, organization management, collaboration modes, skill support, MCP tools, execution engines, YAML config, and an API for easy integration with other systems.

ComfyUI_Yvann-Nodes

ComfyUI_Yvann-Nodes is a pack of custom nodes that enable audio reactivity within ComfyUI, allowing users to create AI-driven animations that sync with music. Users can generate audio reactive AI videos, control AI generation styles, content, and composition with any audio input. The tool is simple to use by dropping workflows in ComfyUI and specifying audio and visual inputs. It is flexible and works with existing ComfyUI AI tech and nodes like IPAdapter, AnimateDiff, and ControlNet. Users can pick workflows for Images → Video or Video → Video, download the corresponding .json file, drop it into ComfyUI, install missing custom nodes, set inputs, and generate audio-reactive animations.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

ALwrity

ALwrity is a lightweight and user-friendly text analysis tool designed for developers and data scientists. It provides various functionalities for analyzing and processing text data, including sentiment analysis, keyword extraction, and text summarization. With ALwrity, users can easily gain insights from their text data and make informed decisions based on the analysis results. The tool is highly customizable and can be integrated into existing workflows seamlessly, making it a valuable asset for anyone working with text data in their projects.

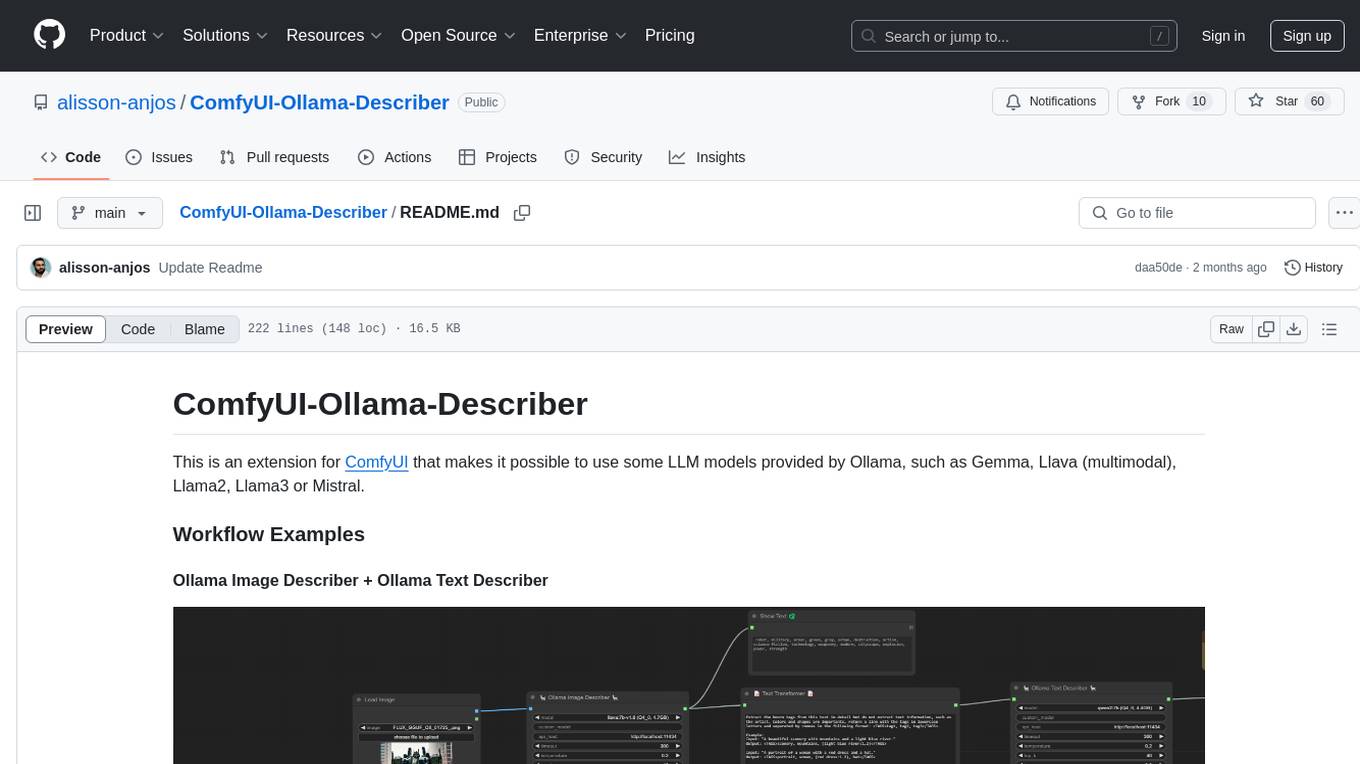

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

gen-ai-experiments

Gen-AI-Experiments is a structured collection of Jupyter notebooks and AI experiments designed to guide users through various AI tools, frameworks, and models. It offers valuable resources for both beginners and experienced practitioners, covering topics such as AI agents, model testing, RAG systems, real-world applications, and open-source tools. The repository includes folders with curated libraries, AI agents, experiments, LLM testing, open-source libraries, RAG experiments, and educhain experiments, each focusing on different aspects of AI development and application.

pluely

Pluely is a versatile and user-friendly tool for managing tasks and projects. It provides a simple interface for creating, organizing, and tracking tasks, making it easy to stay on top of your work. With features like task prioritization, due date reminders, and collaboration options, Pluely helps individuals and teams streamline their workflow and boost productivity. Whether you're a student juggling assignments, a professional managing multiple projects, or a team coordinating tasks, Pluely is the perfect solution to keep you organized and efficient.

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

ToolNeuron

ToolNeuron is a secure, offline AI ecosystem for Android devices that allows users to run private AI models and dynamic plugins fully offline, with hardware-grade encryption ensuring maximum privacy. It enables users to have an offline-first experience, add capabilities without app updates through pluggable tools, and ensures security by design with strict plugin validation and sandboxing.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

For similar tasks


TeroSubtitler

Tero Subtitler is an open source, cross-platform, and free subtitle editing software with a user-friendly interface. It offers fully fledged editing with SMPTE and MEDIA modes, support for various subtitle formats, multi-level undo/redo, search and replace, auto-backup, source and transcription modes, translation memory, audiovisual preview, timeline with waveform visualizer, manipulation tools, formatting options, quality control features, translation and transcription capabilities, validation tools, automation for correcting errors, and more. It also includes features like exporting subtitles to MP3, importing/exporting Blu-ray SUP format, generating blank video, generating video with hardcoded subtitles, video dubbing, and more. The tool utilizes powerful multimedia playback engines like mpv, advanced audio/video manipulation tools like FFmpeg, tools for automatic transcription like whisper.cpp/Faster-Whisper, auto-translation API like Google Translate, and ElevenLabs TTS for video dubbing.

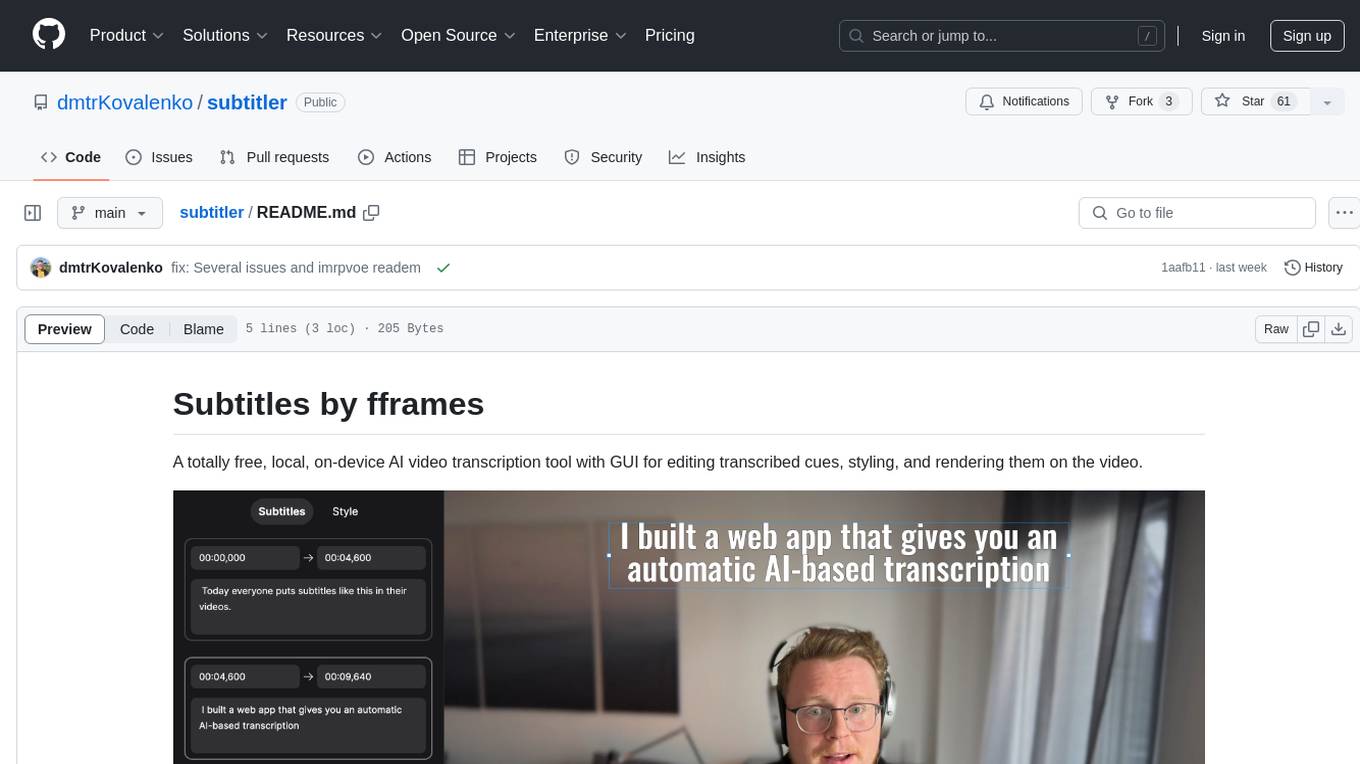

subtitler

Subtitles by fframes is a free, local, on-device AI video transcription tool with a user-friendly GUI. It allows users to transcribe video content, edit transcribed cues, style the subtitles, and render them directly onto the video. The tool provides a convenient way to create accurate subtitles for videos without the need for an internet connection.

VideoCaptioner

VideoCaptioner is a video subtitle processing assistant based on a large language model (LLM), supporting speech recognition, subtitle segmentation, optimization, translation, and full-process handling. It is user-friendly and does not require high configuration, supporting both network calls and local offline (GPU-enabled) speech recognition. It utilizes a large language model for intelligent subtitle segmentation, correction, and translation, providing stunning subtitles for videos. The tool offers features such as accurate subtitle generation without GPU, intelligent segmentation and sentence splitting based on LLM, AI subtitle optimization and translation, batch video subtitle synthesis, intuitive subtitle editing interface with real-time preview and quick editing, and low model token consumption with built-in basic LLM model for easy use.

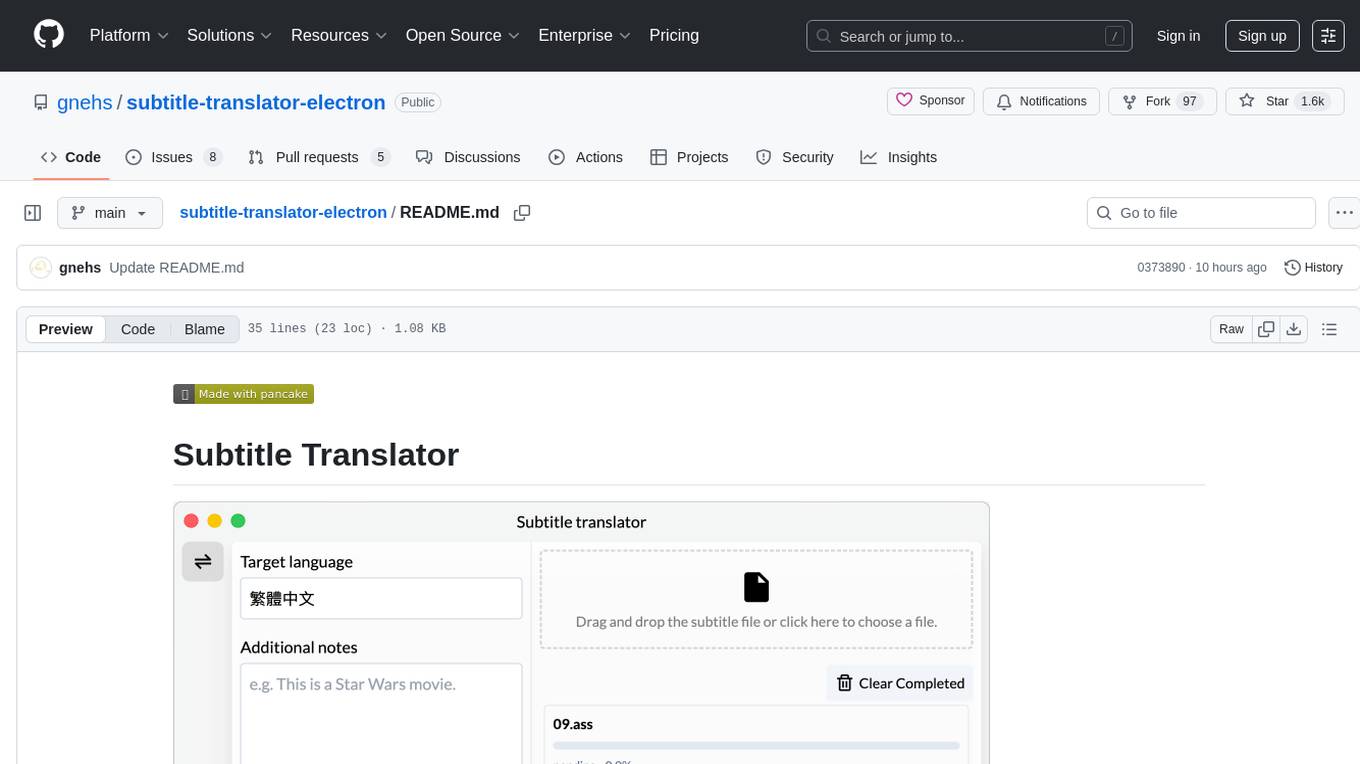

subtitle-translator-electron

Subtitle Translator is a tool that utilizes ChatGPT to translate subtitles in various formats such as .ass, .srt, .ssa, and .vtt. It supports multiple languages and provides translations based on context from preceding and following sentences. Users can download the stable version from the Releases page and contribute through pull requests. The tool aims to simplify the process of translating subtitles for different media content.

Chenyme-AAVT

Chenyme-AAVT is a user-friendly tool that provides automatic video and audio recognition and translation. It leverages the capabilities of Whisper, a powerful speech recognition model, to accurately identify speech in videos and audios. The recognized speech is then translated using ChatGPT or KIMI, ensuring high-quality translations. With Chenyme-AAVT, you can quickly generate字幕 files and merge them with the original video, making video translation a breeze. The tool supports various languages, allowing you to translate videos and audios into your desired language. Additionally, Chenyme-AAVT offers features such as VAD (Voice Activity Detection) to enhance recognition accuracy, GPU acceleration for faster processing, and support for multiple字幕 formats. Whether you're a content creator, translator, or anyone looking to make video translation more efficient, Chenyme-AAVT is an invaluable tool.

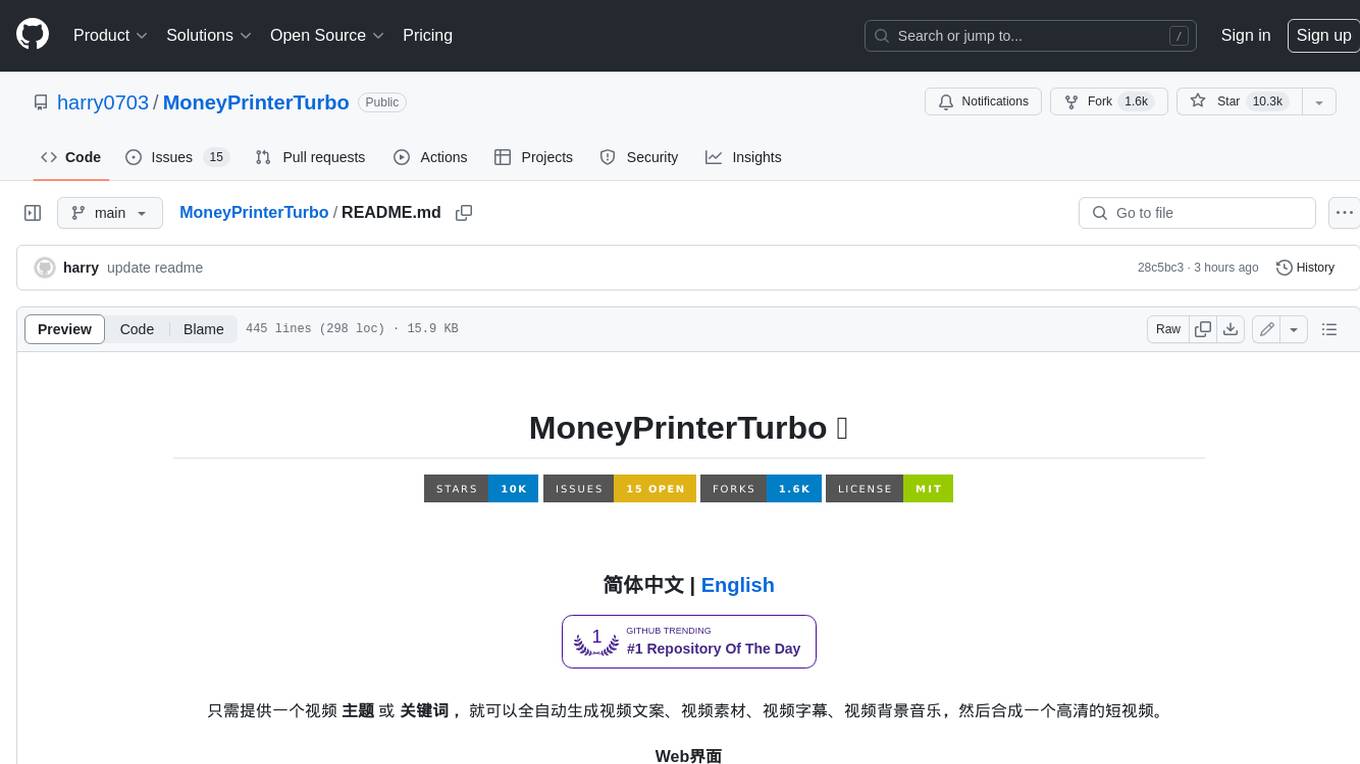

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

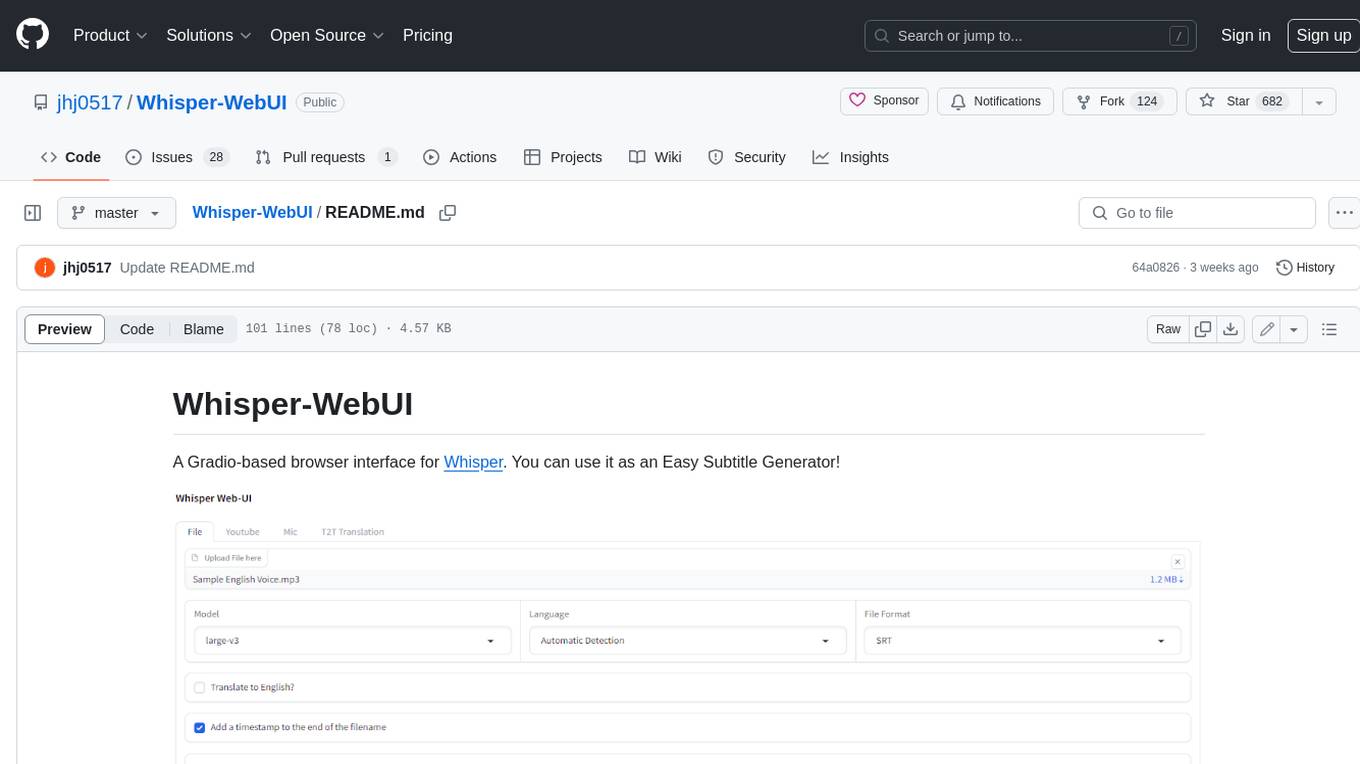

Whisper-WebUI

Whisper-WebUI is a Gradio-based browser interface for Whisper, serving as an Easy Subtitle Generator. It supports generating subtitles from various sources such as files, YouTube, and microphone. The tool also offers speech-to-text and text-to-text translation features, utilizing Facebook NLLB models and DeepL API. Users can translate subtitle files from other languages to English and vice versa. The project integrates faster-whisper for improved VRAM usage and transcription speed, providing efficiency metrics for optimized whisper models. Additionally, users can choose from different Whisper models based on size and language requirements.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version built for NVIDIA GPUs. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili live-stream comments and greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It supports expression control via Vtuber Studio, image generation with stable-diffusion-webui output to an OBS live room, and NSFW detection for generated images (public-NSFW-y-distinguish). Image search works through duckduckgo (requires a proxy) or Baidu image search (no proxy required). Other features include an AI reply chat box [html plug-in], AI singing (Auto-Convert-Music), a playlist [html plug-in], dancing, expression video playback, head-patting and gift-throwing actions, automatic dancing when singing starts, automatic idle motion during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting requests where the AI judges the content automatically.