aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

None

Stars: 241

Microsoft AI Tour 2026 WRK541 is a workshop focused on real-world code migration using GitHub Copilot Agent Mode. The session is designed for technologists interested in applying AI pair-programming techniques to challenging tasks like migrating or translating code between different programming languages. Participants will learn advanced GitHub Copilot techniques, differences between Python and C#, JSON serialization and deserialization in C#, developing and validating endpoints, integrating Swagger/OpenAPI documentation, and writing unit tests with MSTest. The workshop aims to help users gain hands-on experience in using GitHub Copilot for real-world code migration scenarios.

README:

If you will be delivering this session, check the session-delivery-sources folder for slides, scripts, and other resources.

- Who is this for: Any technologist that is looking to apply AI pair-programming techniques with GitHub Copilot to perform challenging work like migrating or translating from one programming language to another.

- What you'll learn: You'll use advanced GitHub Copilot techniques that are specifically useful when translating projects in different programming languages, as well as the different modes GitHub Copilot has to offer.

- What you'll build: An HTTP API migrated from Python to C# (with .NET Minimal APIs), demonstrating how to use GitHub Copilot for real-world code migration scenarios.

In this workshop, you will:

- Learn the differences about each of GitHub Copilot Modes, when to use each one, best practices and tools to help you get the most out of your interactions.

- Understand the Differences Between Python and C# for Web Development Learn the key differences in syntax, libraries, and frameworks when transitioning from Python's FastAPI to C#'s ASP.NET Core Minimal APIs.

- Implement JSON Serialization and Deserialization in C# Gain hands-on experience using System.Text.Json to handle JSON data, ensuring compatibility with the original Python API.

- Develop and Validate Incremental Endpoints in C# Practice creating and testing individual endpoints iteratively, ensuring correctness and alignment with the original Python API.

- Integrate Swagger/OpenAPI Documentation Learn to add comprehensive API documentation using Swashbuckle and ASP.NET Core's built-in OpenAPI support.

- Write Unit Tests with MSTest Practice creating integration tests using MSTest and WebApplicationFactory to validate API functionality.

Before joining the workshop, there is only one prerequisite: you must have a public GitHub account. All resources, dependencies, and data are part of the repository itself. Make sure you have your GitHub Copilot license, trial, or the free version.

- Local machine: If you already have Git, VS Code with GitHub Copilot, Python 3.12, and the .NET 10 SDK installed, you can clone the repository and follow the exercises locally. A full checklist lives in PREREQUISITES.md, and the Resources page includes a quick reminder of the required tools.

-

GitHub Codespaces: Prefer a zero-install setup? Launch a Codespace from the repository page and continue the tutorial in the cloud. The step-by-step guide is in

docs/en/opening-repository-in-GH-codespaces.md.

- GitHub Copilot Chat

- VS Code

- Python 3.12

- C# with .NET 10 (Minimal APIs)

- MSTest for unit testing

- Swagger/OpenAPI for API documentation

| Resources | Links | Description |

|---|---|---|

| AI Tour 2026 Resource Center | https://aka.ms/AITour26-Resource-center | Links to all repos for AI Tour 26 Sessions |

| Microsoft Foundry Community Discord | Connect with the Microsoft Foundry Community! | |

| Learn at AI Tour | https://aka.ms/LearnAtAITour | Continue learning on Microsoft Learn |

| Code with GitHub Codespaces | https://learn.microsoft.com/training/modules/code-with-github-codespaces/ | Try out GitHub Codespaces! |

| Using advanced GitHub Copilot features | https://learn.microsoft.com/training/modules/advanced-github-copilot/ | Some advanced features and tooling |

Additional languages coming soon!

Bruno Capuano 📢 |

Gustavo Cordido 📢 |

Microsoft is committed to helping our customers use our AI products responsibly, sharing our learnings, and building trust-based partnerships through tools like Transparency Notes and Impact Assessments. Many of these resources can be found at https://aka.ms/RAI. Microsoft’s approach to responsible AI is grounded in our AI principles of fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

Large-scale natural language, image, and speech models - like the ones used in this sample - can potentially behave in ways that are unfair, unreliable, or offensive, in turn causing harms. Please consult the Azure OpenAI service Transparency note to be informed about risks and limitations.

The recommended approach to mitigating these risks is to include a safety system in your architecture that can detect and prevent harmful behavior. Azure AI Content Safety provides an independent layer of protection, able to detect harmful user-generated and AI-generated content in applications and services. Azure AI Content Safety includes text and image APIs that allow you to detect material that is harmful. Within Azure AI Foundry portal, the Content Safety service allows you to view, explore and try out sample code for detecting harmful content across different modalities. The following quickstart documentation guides you through making requests to the service.

Another aspect to take into account is the overall application performance. With multi-modal and multi-models applications, we consider performance to mean that the system performs as you and your users expect, including not generating harmful outputs. It's important to assess the performance of your overall application using Performance and Quality and Risk and Safety evaluators. You also have the ability to create and evaluate with custom evaluators.

You can evaluate your AI application in your development environment using the Azure AI Evaluation SDK. Given either a test dataset or a target, your generative AI application generations are quantitatively measured with built-in evaluators or custom evaluators of your choice. To get started with the azure ai evaluation sdk to evaluate your system, you can follow the quickstart guide. Once you execute an evaluation run, you can visualize the results in Azure AI Foundry portal.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

Similar Open Source Tools

aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

Microsoft AI Tour 2026 WRK541 is a workshop focused on real-world code migration using GitHub Copilot Agent Mode. The session is designed for technologists interested in applying AI pair-programming techniques to challenging tasks like migrating or translating code between different programming languages. Participants will learn advanced GitHub Copilot techniques, differences between Python and C#, JSON serialization and deserialization in C#, developing and validating endpoints, integrating Swagger/OpenAPI documentation, and writing unit tests with MSTest. The workshop aims to help users gain hands-on experience in using GitHub Copilot for real-world code migration scenarios.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

coze-studio

Coze Studio is an all-in-one AI agent development tool that offers the most convenient AI agent development environment, from development to deployment. It provides core technologies for AI agent development, complete app templates, and build frameworks. Coze Studio aims to simplify creating, debugging, and deploying AI agents through visual design and build tools, enabling powerful AI app development and customized business logic. The tool is developed using Golang for the backend, React + TypeScript for the frontend, and follows microservices architecture based on domain-driven design principles.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

llama-github

Llama-github is a powerful tool that helps retrieve relevant code snippets, issues, and repository information from GitHub based on queries. It empowers AI agents and developers to solve coding tasks efficiently. With features like intelligent GitHub retrieval, repository pool caching, LLM-powered question analysis, and comprehensive context generation, llama-github excels at providing valuable knowledge context for development needs. It supports asynchronous processing, flexible LLM integration, robust authentication options, and logging/error handling for smooth operations and troubleshooting. The vision is to seamlessly integrate with GitHub for AI-driven development solutions, while the roadmap focuses on empowering LLMs to automatically resolve complex coding tasks.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

cody-vs

Sourcegraph’s AI code assistant, Cody for Visual Studio, enhances developer productivity by providing a natural and intuitive way to work. It offers features like chat, auto-edit, prompts, and works with various IDEs. Cody focuses on team productivity, offering whole codebase context and shared prompts for consistency. Users can choose from different LLM models like Claude, Gemini Pro, and OpenAI's GPT. Engineered for enterprise use, Cody supports flexible deployment and enterprise security. Suitable for any programming language, Cody excels with Python, Go, JavaScript, and TypeScript code.

TagUI

TagUI is an open-source RPA tool that allows users to automate repetitive tasks on their computer, including tasks on websites, desktop apps, and the command line. It supports multiple languages and offers features like interacting with identifiers, automating data collection, moving data between TagUI and Excel, and sending Telegram notifications. Users can create RPA robots using MS Office Plug-ins or text editors, run TagUI on the cloud, and integrate with other RPA tools. TagUI prioritizes enterprise security by running on users' computers and not storing data. It offers detailed logs, enterprise installation guides, and support for centralised reporting.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

posthog

PostHog is an all-in-one, open source platform for building successful products. It provides tools for product analytics, web analytics, session replays, feature flags, experiments, error tracking, surveys, data warehouse, data pipelines, LLM analytics, and workflows. Users can get started with a generous free tier, self-host the platform, or use PostHog Cloud. The platform supports various SDKs and libraries for popular languages and frameworks, making it versatile and easy to integrate. PostHog is suitable for teams looking to understand user behavior, improve product performance, and automate actions or messages to users.

generative_ai_with_langchain

Generative AI with LangChain is a code repository for building large language model (LLM) apps with Python, ChatGPT, and other LLMs. The repository provides code examples, instructions, and configurations for creating generative AI applications using the LangChain framework. It covers topics such as setting up the development environment, installing dependencies with Conda or Pip, using Docker for environment setup, and setting API keys securely. The repository also emphasizes stability, code updates, and user engagement through issue reporting and feedback. It aims to empower users to leverage generative AI technologies for tasks like building chatbots, question-answering systems, software development aids, and data analysis applications.

semantic-kernel-java

Semantic Kernel for Java is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. It allows defining plugins that can be chained together in just a few lines of code. The tool automatically orchestrates plugins with AI, enabling users to generate plans to achieve unique goals and execute them. The project welcomes contributions, bug reports, and suggestions from the community.

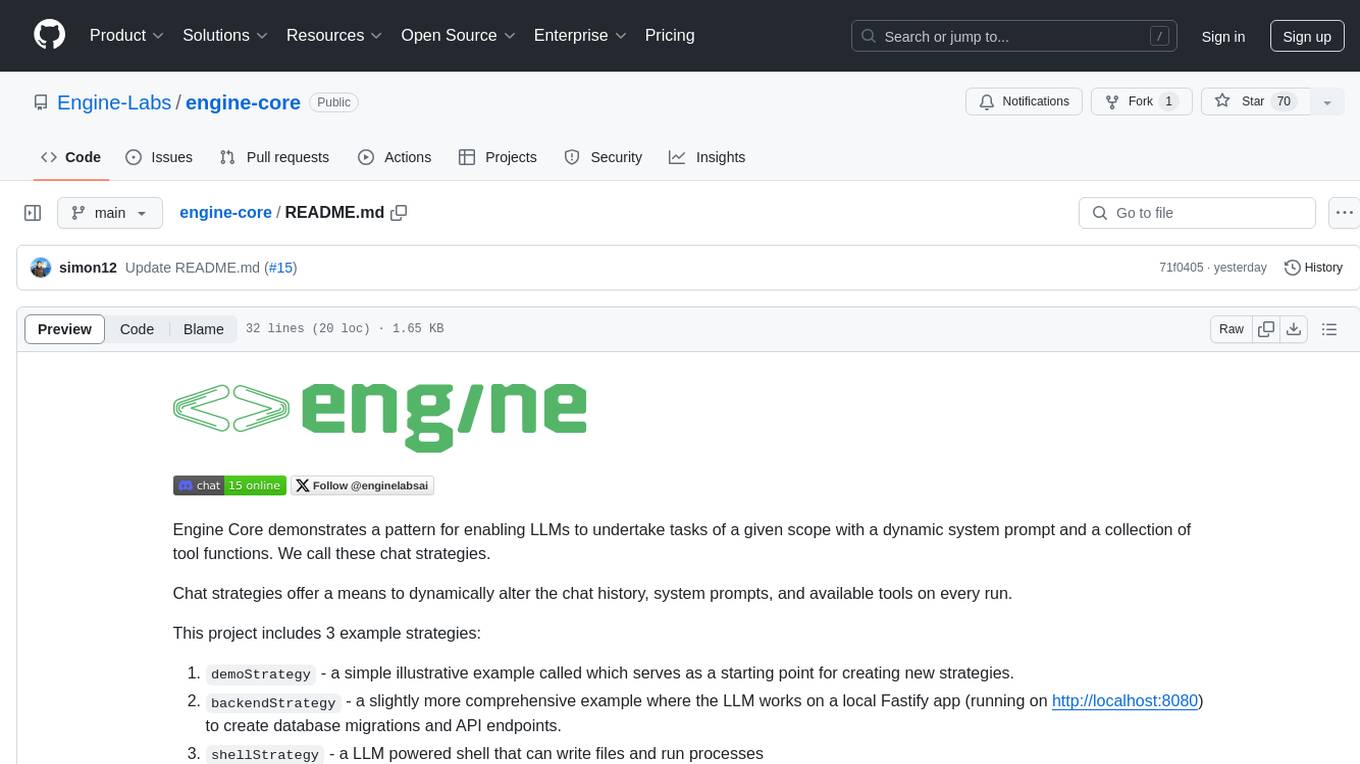

ai-accelerators

DataRobot AI Accelerators are code-first workflows to speed up model development, deployment, and time to value using the DataRobot API. The accelerators include approaches for specific business challenges, generative AI, ecosystem integration templates, and advanced ML and API usage. Users can clone the repo, import desired accelerators into notebooks, execute them, learn and modify content to solve their own problems.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

AgentUp

AgentUp is an active development tool that provides a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types like reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

For similar tasks

engine-core

Engine Core is a project that demonstrates a pattern for enabling Large Language Models (LLMs) to undertake tasks with a dynamic system prompt and a collection of tool functions known as chat strategies. These strategies allow for the dynamic alteration of chat history, system prompts, and available tools on every run. The project includes example strategies such as demoStrategy, backendStrategy, and shellStrategy. Additionally, LLM integrations like Anthropic or OpenAI have been extracted into adapters to enable running the same app code and strategies while switching foundation models.

aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

Microsoft AI Tour 2026 WRK541 is a workshop focused on real-world code migration using GitHub Copilot Agent Mode. The session is designed for technologists interested in applying AI pair-programming techniques to challenging tasks like migrating or translating code between different programming languages. Participants will learn advanced GitHub Copilot techniques, differences between Python and C#, JSON serialization and deserialization in C#, developing and validating endpoints, integrating Swagger/OpenAPI documentation, and writing unit tests with MSTest. The workshop aims to help users gain hands-on experience in using GitHub Copilot for real-world code migration scenarios.

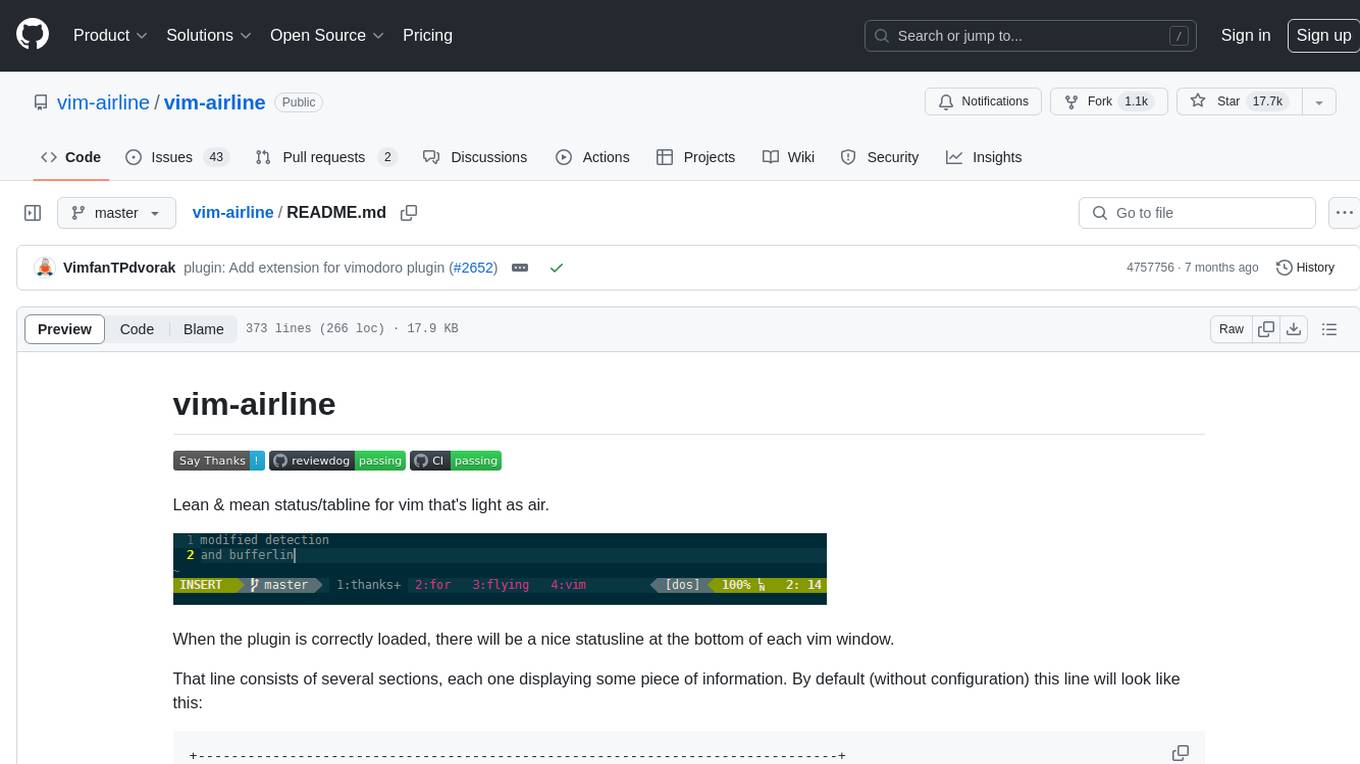

vim-airline

Vim-airline is a lean and mean status/tabline plugin for Vim that provides a nice statusline at the bottom of each Vim window. It consists of several sections displaying information such as mode, environment status, filename, filetype, file encoding, and current position in the file. The plugin is highly customizable and integrates with various plugins, providing a tiny core with extensibility in mind. It is optimized for speed, supports multiple themes, and integrates seamlessly with other plugins. Vim-airline is written in 100% Vimscript, eliminating the need for Python. The plugin aims to be stable and includes a unit testing suite for reliability.

langevals

LangEvals is an all-in-one Python library for testing and evaluating LLM models. It can be used in notebooks for exploration, in pytest for writing unit tests, or as a server API for live evaluations and guardrails. The library is modular, with 20+ evaluators including Ragas for RAG quality, OpenAI Moderation, and Azure Jailbreak detection. LangEvals powers LangWatch evaluations and provides tools for batch evaluations on notebooks and unit test evaluations with PyTest. It also offers LangEvals evaluators for LLM-as-a-Judge scenarios and out-of-the-box evaluators for language detection and answer relevancy checks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.