web-bench

Web-Bench is a benchmark designed to evaluate the performance of LLMs in actual Web development.

Stars: 205

Web-bench is a simple tool for benchmarking web servers. It is designed to generate a large number of requests to a web server and measure the performance of the server under load. The tool allows users to specify the number of requests, concurrency level, and other parameters to simulate different traffic scenarios. Web-bench provides detailed statistics on response times, throughput, and errors encountered during the benchmarking process. It is a useful tool for web developers, system administrators, and anyone interested in evaluating the performance of web servers.

README:

中文 • Install • Paper • Datasets • LeaderBoard • Citation

Web-Bench is a benchmark designed to evaluate the performance of LLMs in actual Web development. Web-Bench contains 50 projects, each consisting of 20 tasks with sequential dependencies. The tasks implement project features in sequence, simulating real-world human development workflows. When designing Web-Bench, we aim to cover the foundational elements of Web development: Web Standards and Web Frameworks. Given the scale and complexity of these projects, which were designed by engineers with 5-10 years of experience, each presents a significant challenge. On average, a single project takes 4–8 hours for a senior engineer to complete. On our given benchmark agent (Web-Agent), SOTA (Claude 3.7 Sonnet) achieves only 25.1% Pass@1.

The distribution of the experimental data aligns well with the current code generation capabilities of mainstream LLMs.

HumanEval and MBPP have approached saturation. APPS and EvalPlus are approaching saturation. The SOTA for Web-Bench is 25.1%, which is lower (better) than that of the SWE-bench Full and Verified sets.

Refer to the Docker setup guide for instructions on installing Docker on your machine

- Create a new empty folder, add two files in this folder:

./config.json5

./docker-compose.yml

- For

config.json5, copy the json below and edit by Config Parameters:

{

models: [

'openai/gpt-4o',

// You can add more models here

// "claude-sonnet-4-20250514"

],

// Eval one project only

// "projects": ["@web-bench/react"]

}- For

docker-compose.yml, copy the yaml below and set environment

services:

web-bench:

image: maoyiweiebay777/web-bench:latest

volumes:

- ./config.json5:/app/apps/eval/src/config.json5

- ./report:/app/apps/eval/report

environment:

# Add enviorment variables according to apps/src/model.json

- OPENROUTER_API_KEY=your_api_key

# Add more model's key

# - ANTHROPIC_API_KEY=your_api_key- Run docker-compose:

docker compose up- Evaluation Report will be generated under

./report/

If you wish to evaluate from source code, refer to Install from source.

@article{xu2025webbench,

title={Web-Bench: A LLM Code Benchmark Based on Web Standards and Frameworks},

author={Xu, Kai and Mao, YiWei and Guan, XinYi and Feng, ZiLong},

journal={arXiv preprint arXiv:2505.07473},

year={2025}

}- Lark: Scan the QR code below with Register Feishu to join our Web Bench user group.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for web-bench

Similar Open Source Tools

web-bench

Web-bench is a simple tool for benchmarking web servers. It is designed to generate a large number of requests to a web server and measure the performance of the server under load. The tool allows users to specify the number of requests, concurrency level, and other parameters to simulate different traffic scenarios. Web-bench provides detailed statistics on response times, throughput, and errors encountered during the benchmarking process. It is a useful tool for web developers, system administrators, and anyone interested in evaluating the performance of web servers.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

airflow-provider-great-expectations

The 'airflow-provider-great-expectations' repository contains a set of Airflow operators for Great Expectations, a Python library used for testing and validating data. The operators enable users to run Great Expectations validations and checks within Apache Airflow workflows. The package requires Airflow 2.1.0+ and Great Expectations >=v0.13.9. It provides functionalities to work with Great Expectations V3 Batch Request API, Checkpoints, and allows passing kwargs to Checkpoints at runtime. The repository includes modules for a base operator and examples of DAGs with sample tasks demonstrating the operator's functionality.

PentestGPT

PentestGPT provides advanced AI and integrated tools to help security teams conduct comprehensive penetration tests effortlessly. Scan, exploit, and analyze web applications, networks, and cloud environments with ease and precision, without needing expert skills. The tool utilizes Supabase for data storage and management, and Vercel for hosting the frontend. It offers a local quickstart guide for running the tool locally and a hosted quickstart guide for deploying it in the cloud. PentestGPT aims to simplify the penetration testing process for security professionals and enthusiasts alike.

knavigator

Knavigator is a project designed to analyze, optimize, and compare scheduling systems, with a focus on AI/ML workloads. It addresses various needs, including testing, troubleshooting, benchmarking, chaos engineering, performance analysis, and optimization. Knavigator interfaces with Kubernetes clusters to manage tasks such as manipulating with Kubernetes objects, evaluating PromQL queries, as well as executing specific operations. It can operate both outside and inside a Kubernetes cluster, leveraging the Kubernetes API for task management. To facilitate large-scale experiments without the overhead of running actual user workloads, Knavigator utilizes KWOK for creating virtual nodes in extensive clusters.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

agent-o-rama

Agent-O-Rama is a powerful open-source tool designed for automating repetitive tasks in the field of software development. It provides a user-friendly interface to create and manage automated agents that can perform various tasks such as code deployment, testing, and monitoring. With Agent-O-Rama, developers can save time and effort by automating routine processes and focusing on more critical aspects of their projects. The tool is highly customizable and extensible, allowing users to tailor it to their specific needs and integrate it with other tools and services. Agent-O-Rama is suitable for both individual developers and teams working on projects of any size, providing a scalable solution for improving productivity and efficiency in software development.

contextgem

Contextgem is a Ruby gem that provides a simple way to manage context-specific configurations in your Ruby applications. It allows you to define different configurations based on the context in which your application is running, such as development, testing, or production. This helps you keep your configuration settings organized and easily accessible, making it easier to maintain and update your application. With Contextgem, you can easily switch between different configurations without having to modify your code, making it a valuable tool for managing complex applications with multiple environments.

Acontext

Acontext is a context data platform designed for production AI agents, offering unified storage, built-in context management, and observability features. It helps agents scale from local demos to production without the need to rebuild context infrastructure. The platform provides solutions for challenges like scattered context data, long-running agents requiring context management, and tracking states from multi-modal agents. Acontext offers core features such as context storage, session management, disk storage, agent skills management, and sandbox for code execution and analysis. Users can connect to Acontext, install SDKs, initialize clients, store and retrieve messages, perform context engineering, and utilize agent storage tools. The platform also supports building agents using end-to-end scripts in Python and Typescript, with various templates available. Acontext's architecture includes client layer, backend with API and core components, infrastructure with PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

yao

YAO is an open-source application engine written in Golang, suitable for developing business systems, website/APP API, admin panel, and self-built low-code platforms. It adopts a flow-based programming model to implement functions by writing YAO DSL or using JavaScript. Yao allows developers to create web services by processes, creating a database model, writing API services, and describing dashboard interfaces just by JSON for web & hardware, and 10x productivity. It is based on the flow-based programming idea, developed in Go language, and supports multiple ways to expand the data stream processor. Yao has a built-in data management system, making it suitable for quickly making various management backgrounds, CRM, ERP, and other internal enterprise systems. It is highly versatile, efficient, and performs better than PHP, JAVA, and other languages.

n8n-docs

n8n is an extendable workflow automation tool that enables you to connect anything to everything. It is open-source and can be self-hosted or used as a service. n8n provides a visual interface for creating workflows, which can be used to automate tasks such as data integration, data transformation, and data analysis. n8n also includes a library of pre-built nodes that can be used to connect to a variety of applications and services. This makes it easy to create complex workflows without having to write any code.

kilo

Kilo CLI is an open source AI coding agent that provides a command-line interface for developers. It includes built-in agents for different tasks like development work and code analysis. Users can switch between agents using the Tab key. The tool also offers a general subagent for complex searches and multi-step tasks. Kilo CLI supports autonomous mode for CI/CD pipelines, allowing fully automated operation without user interaction. It provides migration support for users transitioning from the Kilo Code VS Code extension. The tool is designed to enhance the agentic engineering platform and offers detailed documentation for configuration. Contributors are welcome to join the community and contribute to the project.

ais-k8s

AIStore on Kubernetes is a toolkit for deploying a lightweight, scalable object storage solution designed for AI applications in a Kubernetes environment. It includes documentation, Ansible playbooks, Kubernetes operator, Helm charts, and Terraform definitions for deployment on public cloud platforms. The system overview shows deployment across nodes with proxy and target pods utilizing Persistent Volumes. The AIStore Operator automates cluster management tasks. The repository focuses on production deployments but offers different deployment options. Thorough planning and configuration decisions are essential for successful multi-node deployment. The AIStore Operator simplifies tasks like starting, deploying, adjusting size, and updating AIStore resources within Kubernetes.

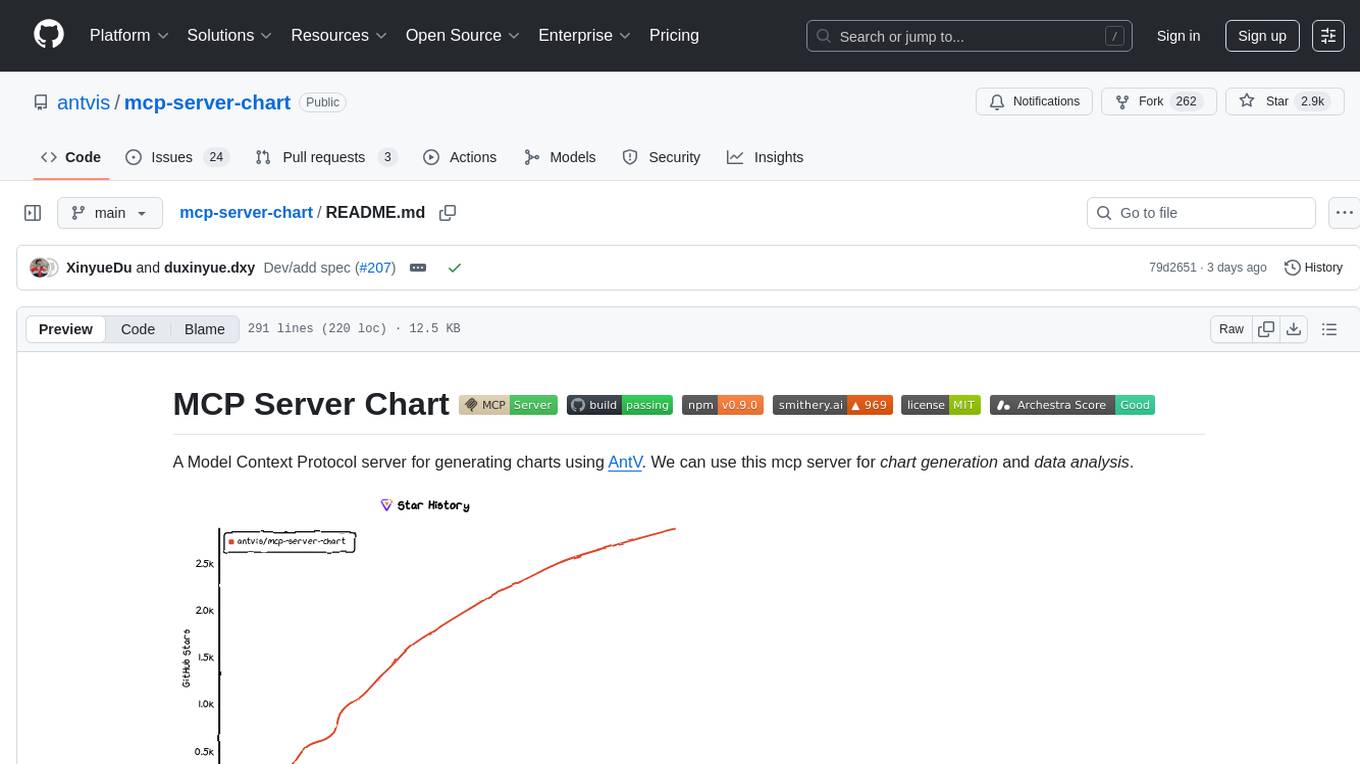

mcp-server-chart

mcp-server-chart is a Helm chart for deploying a Minecraft server on Kubernetes. It simplifies the process of setting up and managing a Minecraft server in a Kubernetes environment. The chart includes configurations for specifying server settings, resource limits, and persistent storage options. With mcp-server-chart, users can easily deploy and scale Minecraft servers on Kubernetes clusters, ensuring high availability and performance for multiplayer gaming experiences.

onlook

Onlook is a web scraping tool that allows users to extract data from websites easily and efficiently. It provides a user-friendly interface for creating web scraping scripts and supports various data formats for exporting the extracted data. With Onlook, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and customizable, making it suitable for a wide range of web scraping tasks.

For similar tasks

web-bench

Web-bench is a simple tool for benchmarking web servers. It is designed to generate a large number of requests to a web server and measure the performance of the server under load. The tool allows users to specify the number of requests, concurrency level, and other parameters to simulate different traffic scenarios. Web-bench provides detailed statistics on response times, throughput, and errors encountered during the benchmarking process. It is a useful tool for web developers, system administrators, and anyone interested in evaluating the performance of web servers.

digma

Digma is a Continuous Feedback platform that provides code-level insights related to performance, errors, and usage during development. It empowers developers to own their code all the way to production, improving code quality and preventing critical issues. Digma integrates with OpenTelemetry traces and metrics to generate insights in the IDE, helping developers analyze code scalability, bottlenecks, errors, and usage patterns.

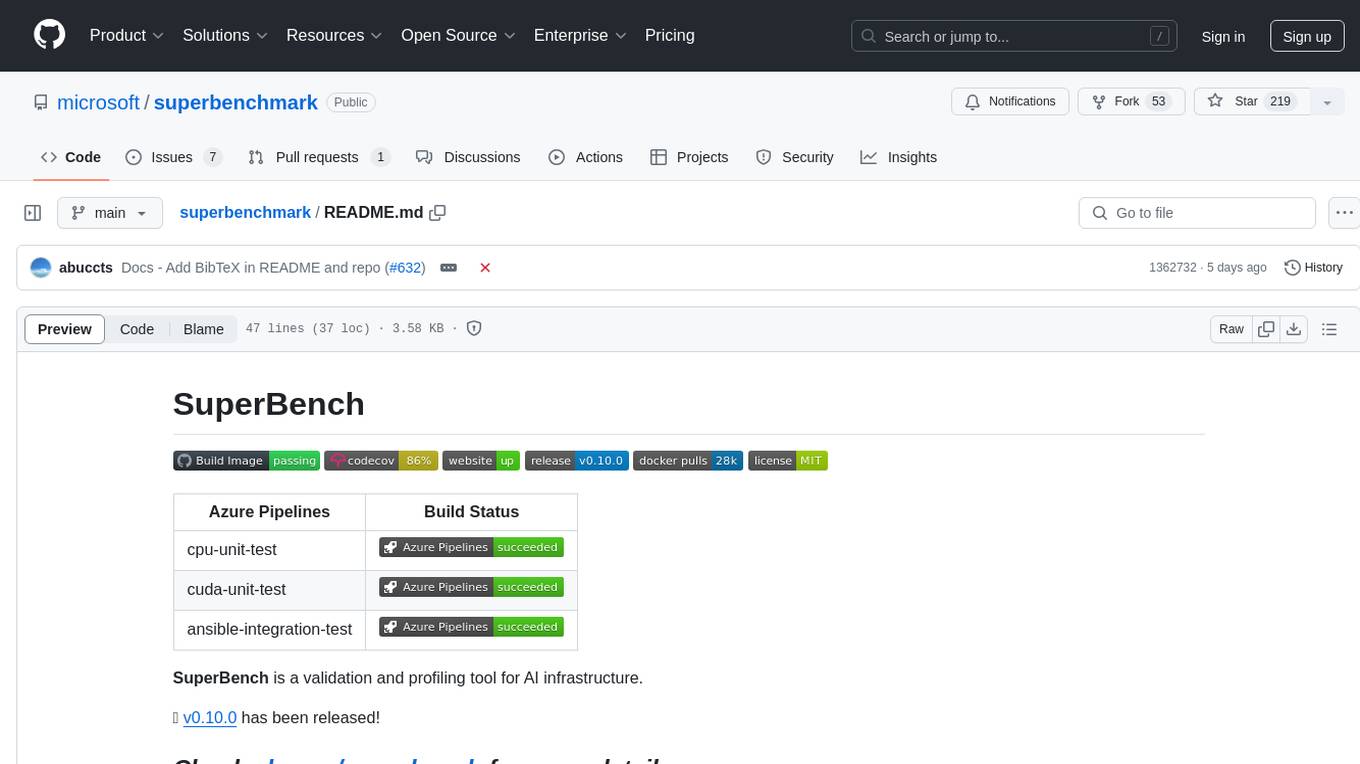

superbenchmark

SuperBench is a validation and profiling tool for AI infrastructure. It provides a comprehensive set of tests and benchmarks to evaluate the performance and reliability of AI systems. The tool helps users identify bottlenecks, optimize configurations, and ensure the stability of their AI infrastructure. SuperBench is designed to streamline the validation process and improve the overall efficiency of AI deployments.

For similar jobs

aio-proxy

This script automates setting up TUIC, hysteria and other proxy-related tools in Linux. It features setting domains, getting SSL certification, setting up a simple web page, SmartSNI by Bepass, Chisel Tunnel, Hysteria V2, Tuic, Hiddify Reality Scanner, SSH, Telegram Proxy, Reverse TLS Tunnel, different panels, installing, disabling, and enabling Warp, Sing Box 4-in-1 script, showing ports in use and their corresponding processes, and an Android script to use Chisel tunnel.

aiohttp

aiohttp is an async http client/server framework that supports both client and server side of HTTP protocol. It also supports both client and server Web-Sockets out-of-the-box and avoids Callback Hell. aiohttp provides a Web-server with middleware and pluggable routing.

OpsPilot

OpsPilot is an AI-powered operations navigator developed by the WeOps team. It leverages deep learning and LLM technologies to make operations plans interactive and generalize and reason about local operations knowledge. OpsPilot can be integrated with web applications in the form of a chatbot and primarily provides the following capabilities: 1. Operations capability precipitation: By depositing operations knowledge, operations skills, and troubleshooting actions, when solving problems, it acts as a navigator and guides users to solve operations problems through dialogue. 2. Local knowledge Q&A: By indexing local knowledge and Internet knowledge and combining the capabilities of LLM, it answers users' various operations questions. 3. LLM chat: When the problem is beyond the scope of OpsPilot's ability to handle, it uses LLM's capabilities to solve various long-tail problems.

aiocoap

aiocoap is a Python library that implements the Constrained Application Protocol (CoAP) using native asyncio methods in Python 3. It supports various CoAP standards such as RFC7252, RFC7641, RFC7959, RFC8323, RFC7967, RFC8132, RFC9176, RFC8613, and draft-ietf-core-oscore-groupcomm-17. The library provides features for clients and servers, including multicast support, blockwise transfer, CoAP over TCP, TLS, and WebSockets, No-Response, PATCH/FETCH, OSCORE, and Group OSCORE. It offers an easy-to-use interface for concurrent operations and is suitable for IoT applications.

aiounifi

Aiounifi is a Python library that provides a simple interface for interacting with the Unifi Controller API. It allows users to easily manage their Unifi network devices, such as access points, switches, and gateways, through automated scripts or applications. With Aiounifi, users can retrieve device information, perform configuration changes, monitor network performance, and more, all through a convenient and efficient API wrapper. This library simplifies the process of integrating Unifi network management into custom solutions, making it ideal for network administrators, developers, and enthusiasts looking to automate and streamline their network operations.

AirConnect-Synology

AirConnect-Synology is a minimal Synology package that allows users to use AirPlay to stream to UPnP/Sonos & Chromecast devices that do not natively support AirPlay. It is compatible with DSM 7.0 and DSM 7.1, and provides detailed information on installation, configuration, supported devices, troubleshooting, and more. The package automates the installation and usage of AirConnect on Synology devices, ensuring compatibility with various architectures and firmware versions. Users can customize the configuration using the airconnect.conf file and adjust settings for specific speakers like Sonos, Bose SoundTouch, and Pioneer/Phorus/Play-Fi.

axoned

Axone is a public dPoS layer 1 designed for connecting, sharing, and monetizing resources in the AI stack. It is an open network for collaborative AI workflow management compatible with any data, model, or infrastructure, allowing sharing of data, algorithms, storage, compute, APIs, both on-chain and off-chain. The 'axoned' node of the AXONE network is built on Cosmos SDK & Tendermint consensus, enabling companies & individuals to define on-chain rules, share off-chain resources, and create new applications. Validators secure the network by maintaining uptime and staking $AXONE for rewards. The blockchain supports various platforms and follows Semantic Versioning 2.0.0. A docker image is available for quick start, with documentation on querying networks, creating wallets, starting nodes, and joining networks. Development involves Go and Cosmos SDK, with smart contracts deployed on the AXONE blockchain. The project provides a Makefile for building, installing, linting, and testing. Community involvement is encouraged through Discord, open issues, and pull requests.

paddler

Paddler is an open-source load balancer and reverse proxy designed specifically for optimizing servers running llama.cpp. It overcomes typical load balancing challenges by maintaining a stateful load balancer that is aware of each server's available slots, ensuring efficient request distribution. Paddler also supports dynamic addition or removal of servers, enabling integration with autoscaling tools.