Best AI tools for Performance Engineer

20 - AI tool Sites

Neural Concept

Neural Concept is an end-to-end platform for high-performance engineering teams, powered by a proprietary 3D AI core. It accelerates product development with 3D deep-learning and simulation capabilities. The platform works with various CAE and CAD software, offering 3D visual feedback, a collaborative environment, and LLM guidance to boost engineers' impact. Engineering companies use Neural Concept to design and deliver better products faster, bringing AI-designed products to market up to 75% faster.

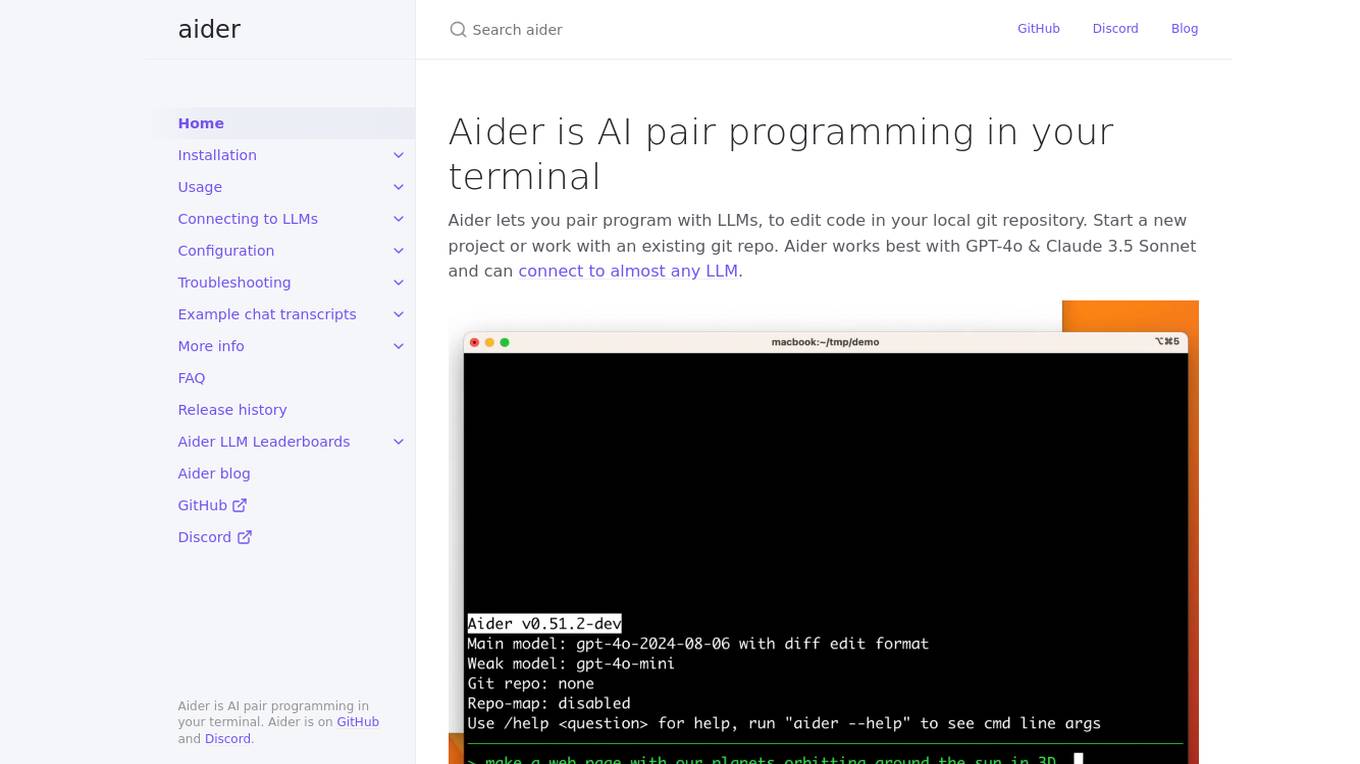

Aider

Aider is an AI pair programming tool that lets users collaborate with large language models (LLMs) to edit code in their local git repository. It supports popular languages like Python, JavaScript, TypeScript, PHP, HTML, and CSS. Aider can handle complex requests, automatically commit changes, and work well in larger codebases by using a map of the entire git repository. Users can edit files while chatting with Aider, add images and URLs to the chat, and even code using their voice. Aider has received positive feedback from users for its productivity-enhancing features and performance on software engineering benchmarks.

Medium.engineering

Medium.engineering is a website that provides security verification services to protect against malicious bots. Users may encounter a brief waiting period while the website verifies that they are not bots. The service ensures performance and security through the use of Cloudflare technology.

Prompt Engineering

Prompt Engineering is a discipline focused on developing and optimizing prompts to efficiently utilize language models (LMs) for various applications and research topics. It involves skills to understand the capabilities and limitations of large language models, improving their performance on tasks like question answering and arithmetic reasoning. Prompt engineering is essential for designing robust prompting techniques that interact with LLMs and other tools, enhancing safety and building new capabilities by augmenting LLMs with domain knowledge and external tools.

Hivel

Hivel is a Software Engineering Analytics Platform designed to provide actionable insights and metrics for engineering teams. It offers features such as AI Code Review Agent, DORA Metrics, Investment Profile, SPACE Metrics, and more. Hivel helps users measure the impact of AI adoption, analyze engineering data, and improve software quality with velocity. The platform is trusted by users and awarded by G2 Platforms for its excellence in analytics and code review. With a focus on AI-driven engineering, Hivel aims to empower teams to achieve balance, efficiency, and excellence in their software development lifecycle.

Weavel

Weavel is an AI tool designed to revolutionize prompt engineering for large language models (LLMs). It offers features such as tracing, dataset curation, batch testing, and evaluations to enhance the performance of LLM applications. Weavel enables users to continuously optimize prompts using real-world data, prevent performance regression with CI/CD integration, and engage in human-in-the-loop interactions for scoring and feedback. Ape, the AI prompt engineer, outperforms competitors on benchmark tests and ensures seamless integration and continuous improvement specific to each user's use case. With Weavel, users can effortlessly evaluate LLM applications without the need for pre-existing datasets, streamlining the assessment process and enhancing overall performance.

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

Axify

Axify is a Software Engineering Intelligence Platform that provides AI-driven insights and tools to enhance software development processes. It offers features such as AI adoption impact analysis, real-time tracking of DORA metrics, value stream mapping, developer productivity monitoring, engineering metrics tracking, goal setting, and reporting. The platform also includes integrations with popular tools like Slack, Microsoft Teams, Jira, and GitHub, ensuring seamless workflow. Axify aims to optimize resource allocation, improve software delivery management, and enhance developer experience for efficient project delivery.
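As a rough illustration of what tracking DORA metrics involves, the sketch below computes the four commonly cited metrics (deployment frequency, lead time for changes, change failure rate, and time to restore service) from a list of deployment records. The `Deployment` record, its field names, and the `dora_metrics` function are hypothetical and chosen for this example; they are not Axify's data model or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape, assumed for illustration only.
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # whether it caused a production failure
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments, window_days):
    """Compute the four DORA metrics over a reporting window of `window_days`."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    failures = [d for d in deployments if d.failed]
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": len(deployments) / window_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up sample data: four deployments over a four-day window, one of which failed.
base = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(base, base + timedelta(hours=2)),
    Deployment(base + timedelta(days=1), base + timedelta(days=1, hours=4),
               failed=True, restored_at=base + timedelta(days=1, hours=5)),
    Deployment(base + timedelta(days=2), base + timedelta(days=2, hours=6)),
    Deployment(base + timedelta(days=3), base + timedelta(days=3, hours=8)),
]
metrics = dora_metrics(sample, window_days=4)
```

Medians are used here rather than means so that a single slow change does not dominate the result; a real platform may aggregate differently.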

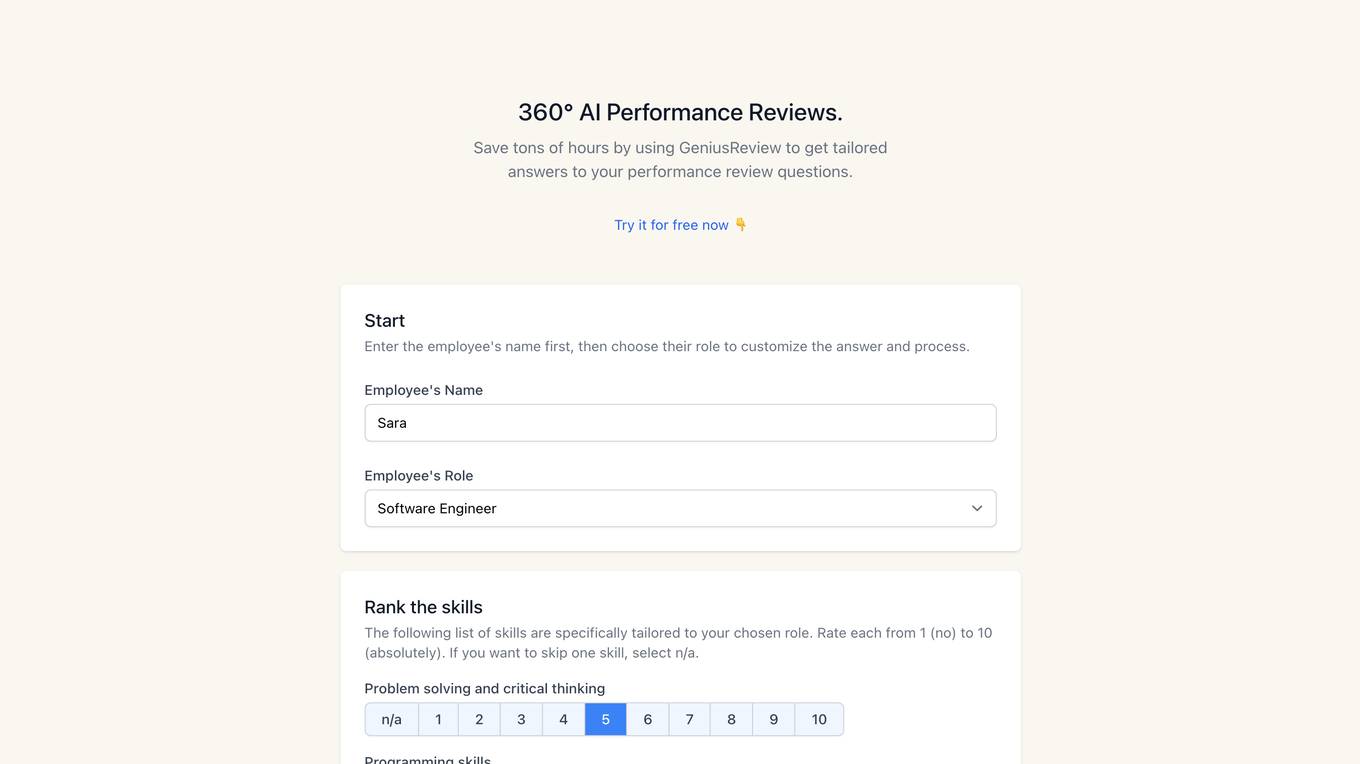

GeniusReview

GeniusReview is a 360° AI-powered performance review tool that helps users save time by providing tailored answers to performance review questions. Users can input employee details, rate skills, add custom questions, and generate personalized reviews. The tool aims to streamline the performance review process and enhance feedback quality for various roles like Software Engineer, Product Designer, and Product Manager.

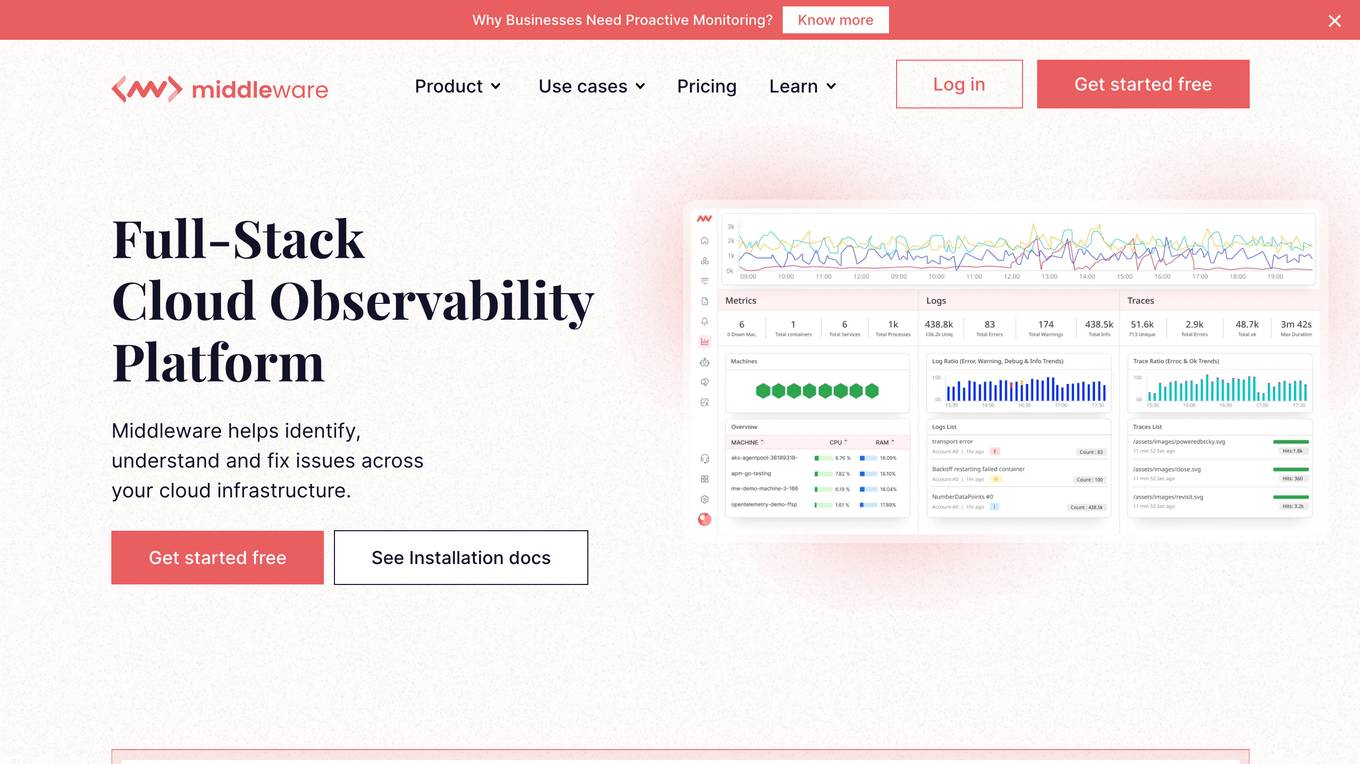

Cloud Observability Middleware

The website provides Full-Stack Cloud Observability services with a focus on Middleware. It offers comprehensive monitoring and analysis tools to help businesses optimize their cloud infrastructure performance. The platform enables users to gain insights into their middleware applications, identify bottlenecks, and improve overall system efficiency.

Milio

Milio is an AI-powered voice-operated Interview Companion that helps users ace their interviews by handling a wide range of questions, from Leetcode to behavioral questions. It adapts quickly to new question types and trends, providing real-time performance feedback. Milio also offers features like answering complex questions, resolving conflicts, and keeping technical skills up-to-date. With a focus on data-driven insights and engagement levels, Milio prioritizes leads and opportunities effectively. The application is designed to elevate interview performance and reduce anxiety, offering all features for a single subscription of $35 per month.

Parrot

Parrot is a leading AI interview practice platform that helps users master their interview skills through AI-powered practice. It offers personalized feedback and in-depth analysis to elevate interview performance. With a focus on behavioral interviews, the platform provides a variety of questions and realistic scenarios to build confidence. Users can receive custom guidance tailored to their industry, fast-track their career growth with AI-driven feedback, and benefit from actionable strategies for success. Parrot aims to help users boost their confidence, stand out with relevant insights, and accelerate their career development.

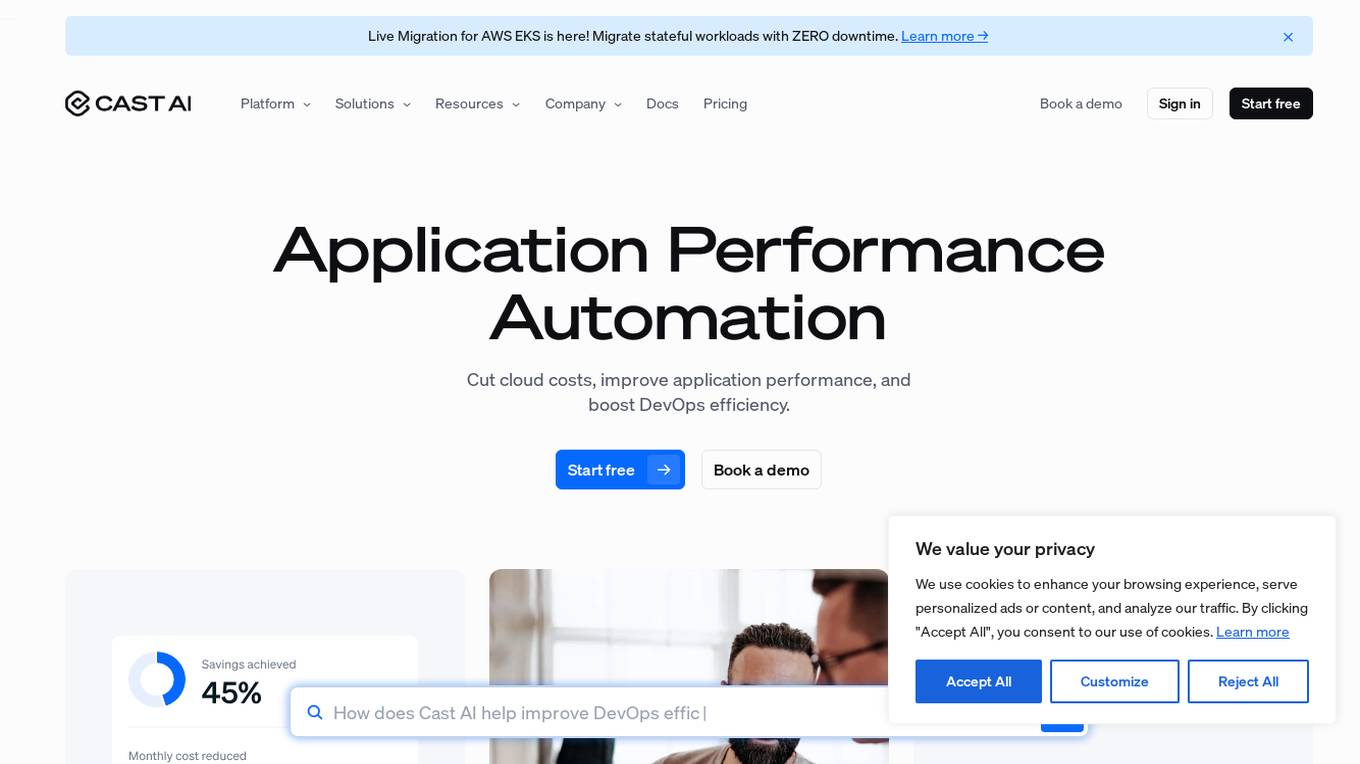

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

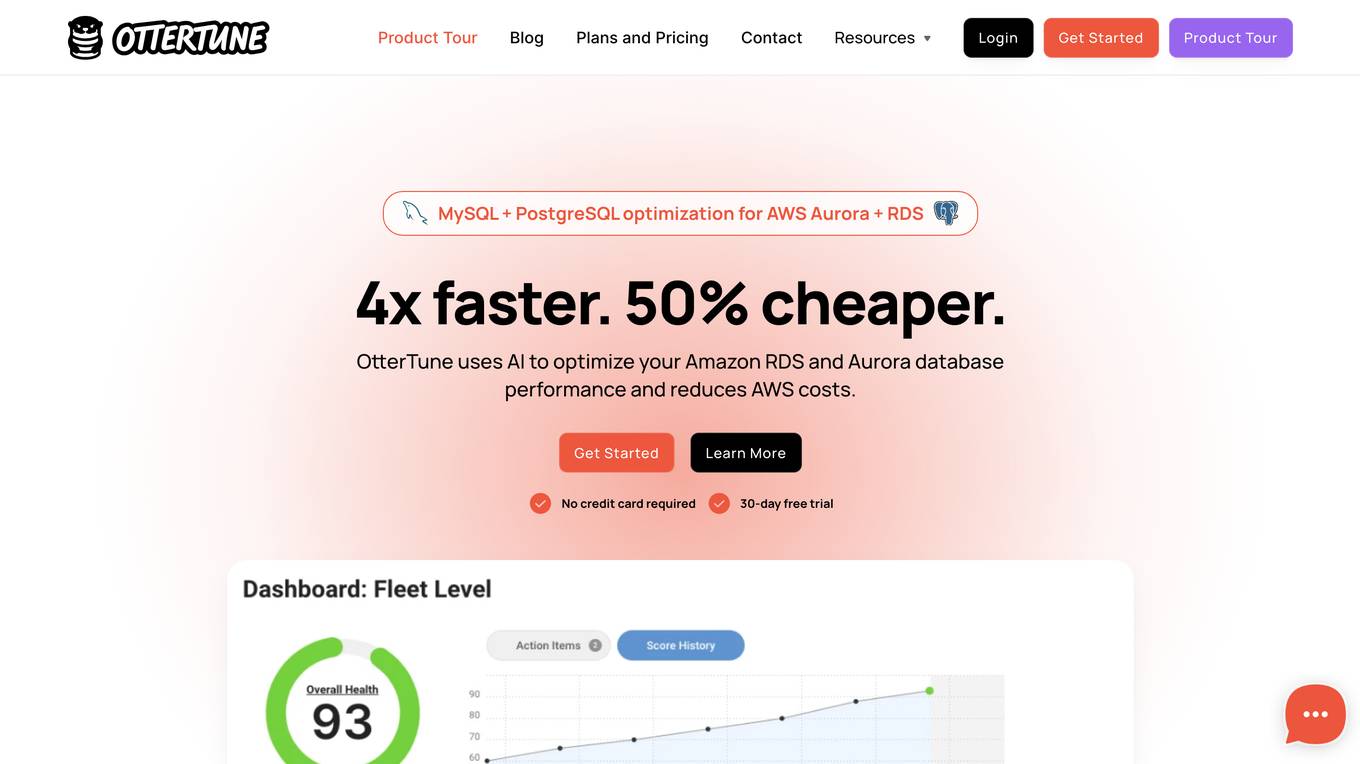

OtterTune

OtterTune was a database tuning service start-up founded at Carnegie Mellon University. Unfortunately, the company is no longer operational. The website mentions DJ OT being in prison for a parole violation, indicating the company's closure. Despite this, the team and investors are acknowledged for their support and belief in the project. The website also references a research music archive on GitHub.

DINGR

DINGR is an AI-powered solution designed to help gamers analyze their performance in League of Legends. The tool uses AI algorithms to provide accurate insights into gameplay, comparing performance metrics with friends and offering suggestions for improvement. DINGR is currently in development, with a focus on enhancing the gaming experience through data-driven analysis and personalized feedback.

AI Tech Debt Analysis Tool

This website is an AI tool that helps senior developers analyze AI tech debt, the technical debt that accumulates when AI systems are developed and deployed. AI tech debt can be difficult to identify and quantify, yet it can have a significant impact on the performance and reliability of AI systems. The tool combines static analysis, dynamic analysis, and machine learning to identify and quantify this debt and to help developers plan strategies to reduce it.

Jungle AI

Jungle AI is an AI application that offers solutions to improve machine performance and uptime across various industries such as wind, solar, manufacturing, and maritime. By leveraging AI technology, Jungle AI provides real-time insights into asset performance, increases production efficiency, and prevents unplanned downtime. The application is trusted by global teams and has a proven track record of delivering results through advanced AI algorithms and predictive analytics.

Tability.io

Tability.io is a website that provides performance and security services through Cloudflare. It offers security verification to protect against malicious bots and ensures a safe browsing experience for users. The platform verifies users to prevent bot activities and maintains privacy by enabling JavaScript and cookies. Tability.io focuses on enhancing website performance and security by leveraging Cloudflare's advanced technologies.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server is refusing to respond to the request. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide a scalable and efficient web server solution.

3 - Open Source Tools

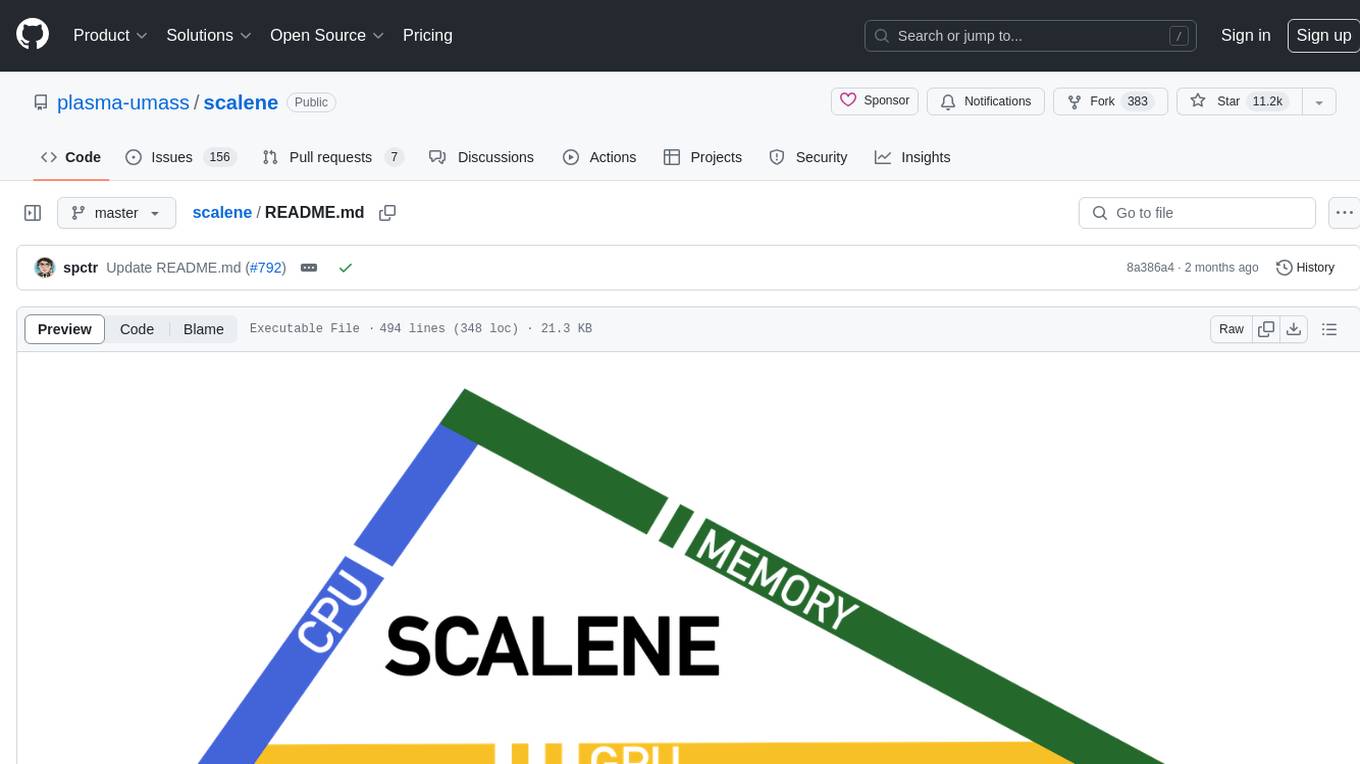

scalene

Scalene is a high-performance CPU, GPU, and memory profiler for Python that provides detailed information and runs faster than many other profilers. It incorporates AI-powered proposed optimizations, allowing users to generate optimization suggestions by clicking on specific lines or regions of code. Scalene separates time spent in Python from native code, highlights hotspots, and identifies memory usage per line. It supports GPU profiling on NVIDIA-based systems and detects memory leaks. Users can generate reduced profiles, profile specific functions using decorators, and suspend/resume profiling for background processes. Scalene is available as a pip or conda package and works on various platforms. It offers features like profiling at the line level, memory trends, copy volume reporting, and leak detection.
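To illustrate the decorator-based profiling mentioned above, here is a minimal sketch. It assumes that Scalene makes a `profile` decorator available as a builtin when it launches the script, so the fallback below only matters when the file runs under plain Python. The function names are made up for the example, and the exact command line used to start Scalene depends on the installed version.

```python
import builtins

# Assumption: Scalene injects `profile` into builtins when it runs this script.
# The no-op fallback keeps the file runnable without Scalene.
if not hasattr(builtins, "profile"):
    def profile(func):
        return func


@profile
def sum_of_squares(n):
    """Hot function we want Scalene to restrict its report to."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def untracked_setup(n):
    """Not decorated, so it is outside the restricted profile."""
    return list(range(n))


if __name__ == "__main__":
    data = untracked_setup(1000)
    print(sum_of_squares(len(data)))
```

Decorating only the suspected hotspot keeps the report short, which helps when the surrounding program has a lot of setup code that is not of interest.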

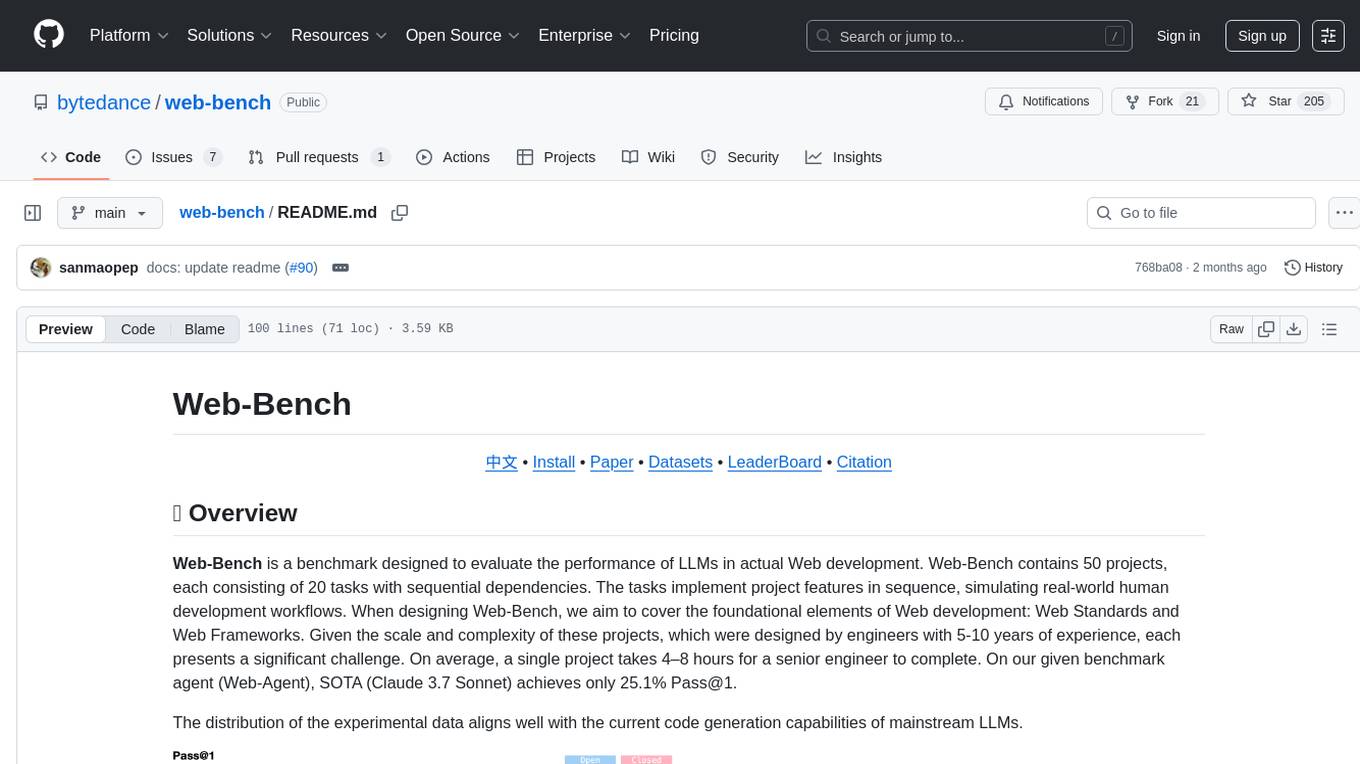

web-bench

Web-bench is a simple tool for benchmarking web servers. It is designed to generate a large number of requests to a web server and measure the performance of the server under load. The tool allows users to specify the number of requests, concurrency level, and other parameters to simulate different traffic scenarios. Web-bench provides detailed statistics on response times, throughput, and errors encountered during the benchmarking process. It is a useful tool for web developers, system administrators, and anyone interested in evaluating the performance of web servers.
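The kind of measurement described above can be sketched with the Python standard library alone. This is not web-bench's implementation or command-line interface; `run_benchmark`, `timed_get`, and `demo` are hypothetical names for this example, and the throwaway local server exists only so the sketch has something to measure.

```python
import http.client
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from statistics import mean

# Ignore proxy environment variables so requests go straight to the server.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def timed_get(url, timeout=5.0):
    """Issue one GET and return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        with _opener.open(url, timeout=timeout) as resp:
            resp.read()
            ok = 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        ok = False  # connection errors, timeouts, and HTTP error statuses
    return time.perf_counter() - start, ok


def run_benchmark(url, requests=100, concurrency=10):
    """Send `requests` GETs using `concurrency` worker threads."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_get, [url] * requests))
    elapsed = time.perf_counter() - started
    latencies = sorted(lat for lat, _ in results)
    return {
        "requests": requests,
        "errors": sum(1 for _, ok in results if not ok),
        "throughput_rps": requests / elapsed,
        "mean_latency_s": mean(latencies),
        "p95_latency_s": latencies[int(0.95 * (len(latencies) - 1))],
    }


class _OkHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def demo():
    """Benchmark a local test server bound to an ephemeral port."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), _OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_address[1]}/"
        return run_benchmark(url, requests=20, concurrency=4)
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns a dictionary with the request count, error count, throughput, and latency figures. A thread pool is a simple way to model a fixed concurrency level, though dedicated load generators typically use more efficient I/O models.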

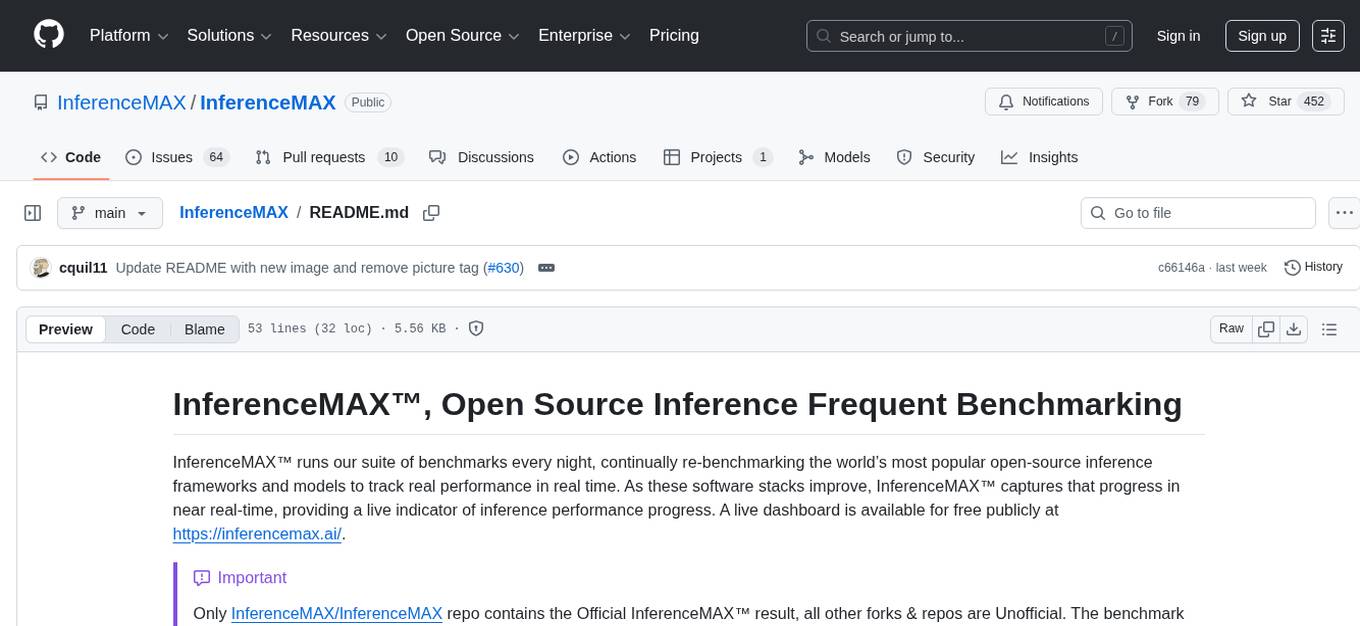

InferenceMAX

InferenceMAX™ is an open-source benchmarking tool designed to track real-time performance improvements in popular open-source inference frameworks and models. It runs a suite of benchmarks every night to capture progress in near real-time, providing a live indicator of inference performance. The tool addresses the challenge of rapidly evolving software ecosystems by benchmarking the latest software packages, ensuring that benchmarks do not go stale. InferenceMAX™ is supported by industry leaders and contributors, providing transparent and reproducible benchmarks that help the ML community make informed decisions about hardware and software performance.

20 - OpenAI GPTs

Java Performance Specialist

Enthusiastic Java code optimizer with a focus on clarity and encouragement.

Optimisateur de Performance GPT

Expert in performance optimization and data processing.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

Vue.js Optimizer for a truly faster application

Expert in Vue.js performance optimization, offering tailored advice.

Thermal Engineering Advisor

Guides thermal management solutions for efficient system performance.

Network Operations Advisor

Ensures efficient and effective network performance and security.

Power Systems Advisor

Ensures optimal performance of power systems through strategic advisory.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.